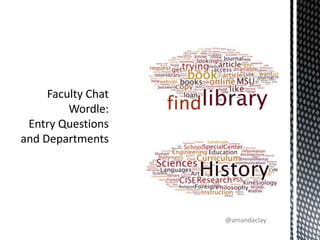

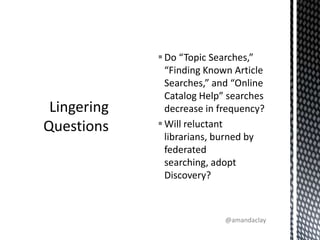

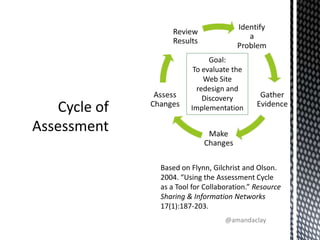

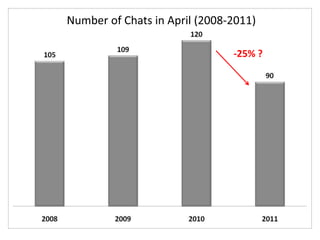

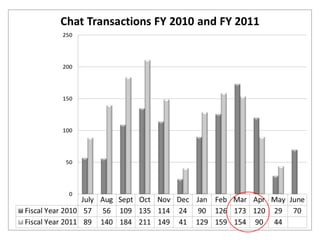

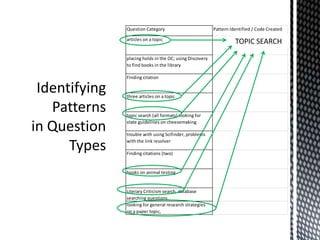

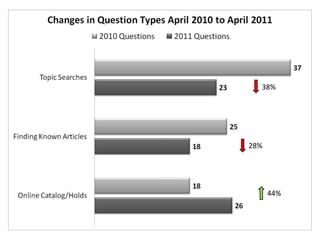

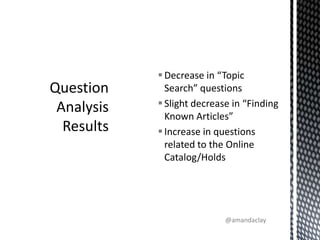

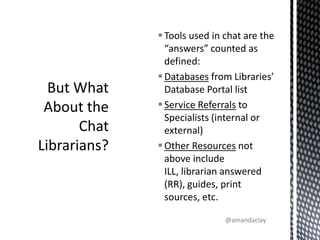

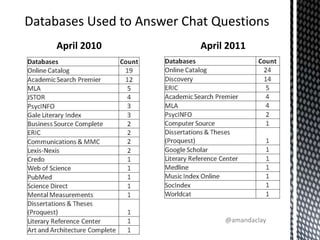

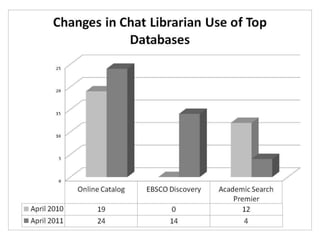

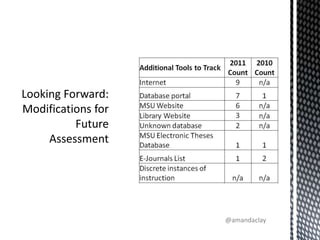

This document summarizes Amanda Clay Powers' presentation on iteratively assessing virtual reference services at Mississippi State University Libraries. The libraries analyzed 1,800 chat transcripts from 2010 to evaluate their new website and discovery tool. Questions about topic searches decreased, while questions about the catalog and holds increased. The discovery tool replaced the main database as the resource used to answer questions. Because the methodology can be repeated, it supports ongoing evaluation of the libraries' effectiveness.