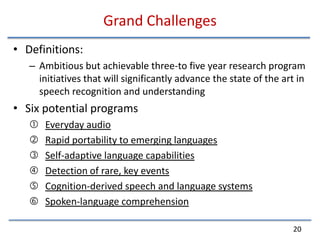

The document surveys research developments and future directions in speech recognition and understanding. It outlines significant developments in infrastructure, such as hardware, corpora, and evaluation benchmarks. It also covers advances in knowledge representation, such as acoustic features and graph representations, and in models and algorithms, such as hidden Markov models and discriminative training. Finally, it proposes six potential "grand challenges" for future research, including building systems that are robust to everyday audio variability, rapidly developing speech technologies for emerging languages with limited resources, and enabling spoken language comprehension.