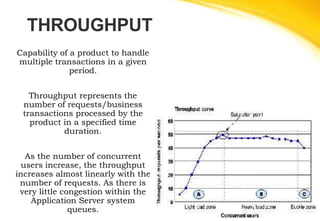

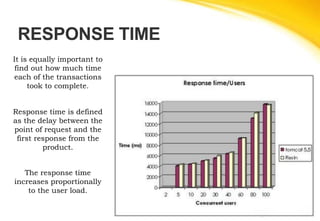

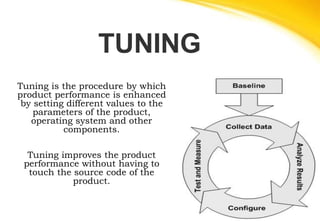

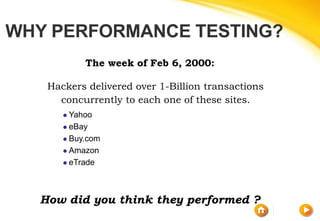

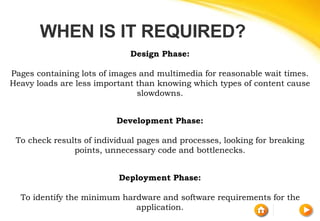

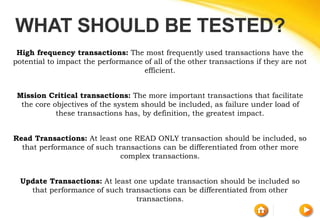

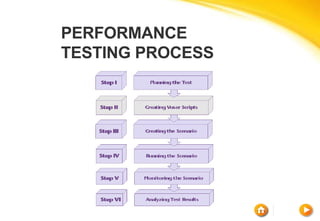

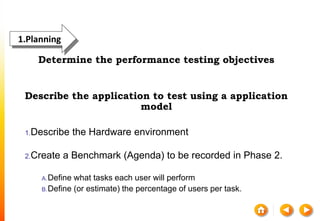

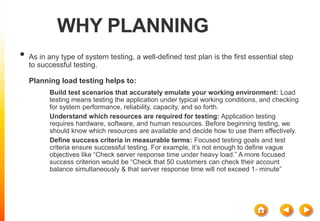

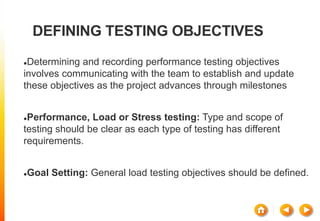

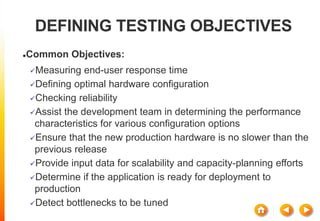

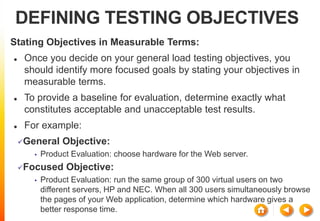

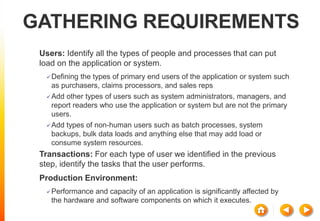

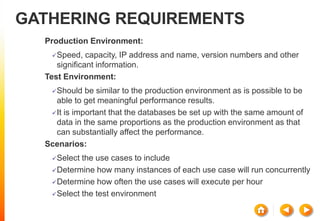

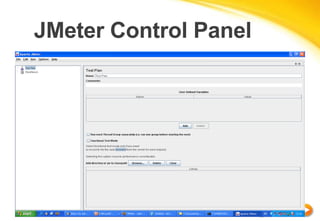

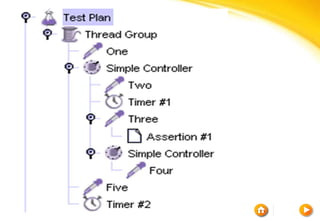

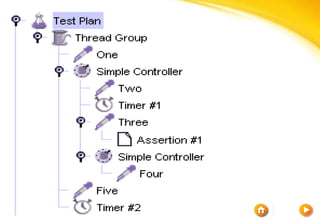

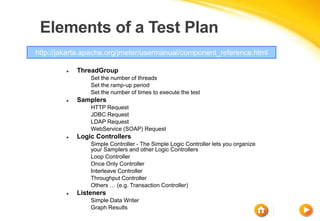

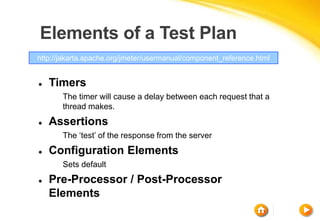

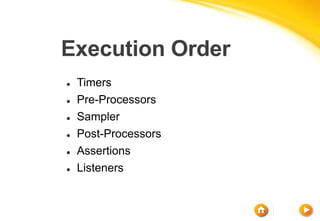

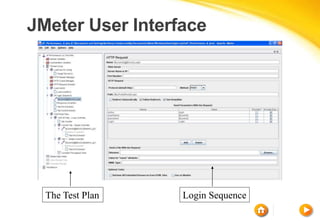

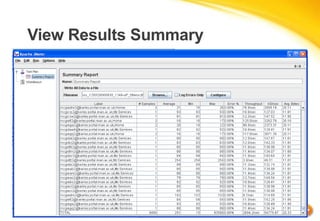

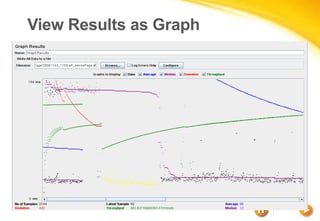

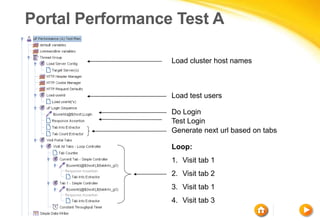

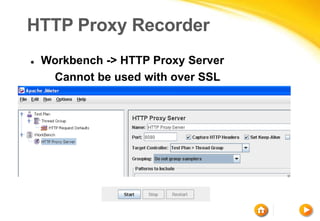

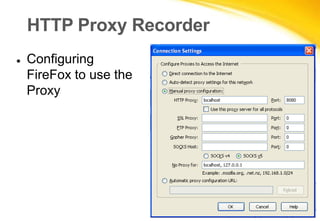

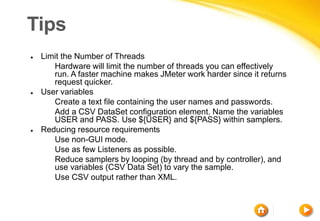

This document gives an overview of performance testing, which assesses how fast and how effectively computer systems, software, and networks operate. It covers throughput, response time, tuning, and benchmarking, and it distinguishes performance testing from load testing and stress testing. It stresses that performance testing belongs in every phase (design, development, and deployment) so that a system stays reliable and efficient under varying load. It also discusses tools such as Apache JMeter, which simulate user load and evaluate application performance through structured test plans.
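To make throughput and response time concrete, here is a minimal Python sketch of a load run. It is not JMeter and is not taken from the slides. It is a standard-library stand-in that assumes the system under test can be called as a plain function. The names `timed_call`, `run_load`, `summarize`, and `fake_request` are hypothetical, chosen only for illustration.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(operation):
    """Run one request and return its response time in seconds."""
    start = time.perf_counter()
    operation()
    return time.perf_counter() - start


def run_load(operation, users, requests_per_user):
    """Issue users * requests_per_user requests with at most `users` in flight.

    Returns (latencies, elapsed): one response time per request, plus the
    wall-clock duration of the whole run.
    """
    total = users * requests_per_user
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        latencies = list(pool.map(lambda _: timed_call(operation), range(total)))
    elapsed = time.perf_counter() - wall_start
    return latencies, elapsed


def summarize(latencies, elapsed):
    """Reduce raw timings to throughput, mean, and 95th percentile response time."""
    ordered = sorted(latencies)
    # Nearest-rank percentile: the smallest value with at least 95% of samples at or below it.
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "requests": len(ordered),
        "throughput_rps": len(ordered) / elapsed,
        "mean_s": sum(ordered) / len(ordered),
        "p95_s": ordered[p95_index],
    }


if __name__ == "__main__":
    # Hypothetical stand-in for a real request: sleep for about 10 ms.
    def fake_request():
        time.sleep(0.01)

    latencies, elapsed = run_load(fake_request, users=5, requests_per_user=20)
    print(summarize(latencies, elapsed))
```

Raising `users` while watching `throughput_rps` and `p95_s` is roughly what a load test does. Pushing `users` past the point where response times degrade or requests fail corresponds to a stress test. A dedicated tool such as JMeter adds ramp-up control, protocol support, and reporting on top of this basic loop.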