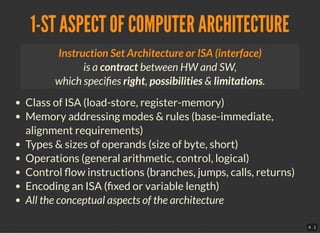

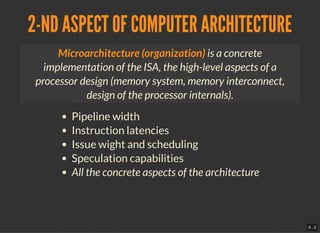

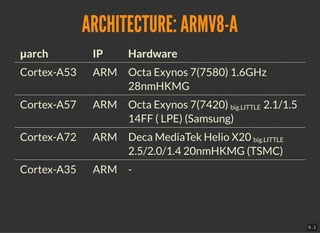

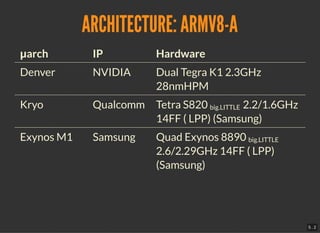

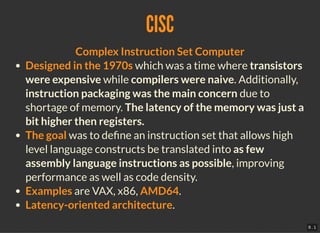

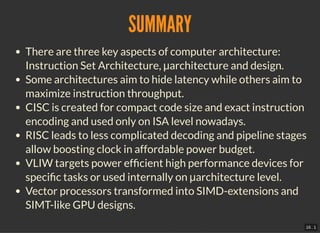

Computer architecture has three key aspects: the instruction set architecture (ISA), the microarchitecture, and the hardware design. Modern architectures aim either to hide latency or to maximize throughput. Reduced instruction set computers (RISC) became popular because their simpler decoding and easier pipelining allowed higher clock speeds, whereas complex instruction set computers (CISC) focused on code density. RISC architectures are now dominant, largely because of their efficiency. Very long instruction word (VLIW) and vector processors targeted specialized workloads, but their concepts still influence modern designs. Load-store RISC architectures with fixed-width instructions and few addressing modes offer a good balance between performance and efficiency.
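To make the point about simpler decoding concrete, here is a minimal sketch of how a fixed-width instruction can be split into fields with nothing more than shifts and masks. It uses the RISC-V 32-bit R-type layout as an illustrative assumption, since the text above does not name a specific ISA. The names `RType` and `decode_rtype` are hypothetical and chosen only for this example.

```python
from typing import NamedTuple


class RType(NamedTuple):
    """Fields of a 32-bit register-register instruction (RISC-V R-type layout, assumed)."""
    opcode: int
    rd: int
    funct3: int
    rs1: int
    rs2: int
    funct7: int


def decode_rtype(word: int) -> RType:
    """Split a 32-bit instruction word into its fields.

    Every field sits at a fixed bit position, so decoding needs no
    length detection and no dependence on earlier bytes.
    """
    if not 0 <= word < 2**32:
        raise ValueError("instruction must fit in 32 bits")
    return RType(
        opcode=word & 0x7F,           # bits 6..0
        rd=(word >> 7) & 0x1F,        # bits 11..7, destination register
        funct3=(word >> 12) & 0x7,    # bits 14..12
        rs1=(word >> 15) & 0x1F,      # bits 19..15, first source register
        rs2=(word >> 20) & 0x1F,      # bits 24..20, second source register
        funct7=(word >> 25) & 0x7F,   # bits 31..25
    )


# 0x002081B3 encodes `add x3, x1, x2` in RISC-V.
fields = decode_rtype(0x002081B3)
print(fields)
```

Because the field boundaries never move, hardware can extract all fields in parallel, which is one reason fixed-width encodings pipeline well. A variable-length encoding would first have to determine where each instruction ends before its fields could be located.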