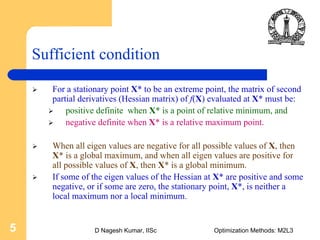

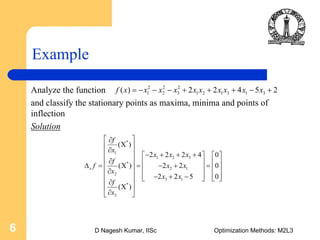

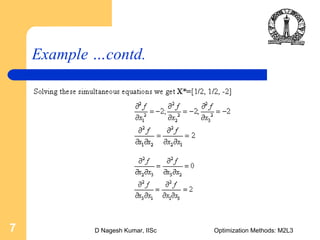

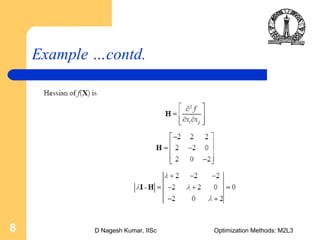

This document discusses unconstrained optimization of functions of several variables. It explains how the gradient vector and the Hessian matrix are used to locate and classify the stationary points of such functions. The necessary condition for a stationary point is that the gradient vector equals the zero vector at that point. The sufficient condition is that the Hessian matrix, evaluated at the stationary point, is positive definite for a local minimum or negative definite for a local maximum. An example function is analyzed to classify its stationary points as maxima, minima or points of inflection, and the working and solutions are shown step by step.
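The two conditions can be illustrated with a minimal sketch. The function used here, f(x, y) = x³ + y³ − 3xy, is a hypothetical example chosen for illustration and is not the example worked in the document. Its gradient and Hessian are written out by hand, and the helper names (`gradient`, `hessian`, `classify`) are likewise assumptions. For a 2×2 symmetric Hessian, definiteness is checked through the leading principal minors: both positive means positive definite, a negative first minor with a positive determinant means negative definite, and a negative determinant means the Hessian is indefinite. The sketch labels that last case a saddle point, which may correspond to what the document calls a point of inflection.

```python
def gradient(x, y):
    """Gradient of the illustrative function f(x, y) = x**3 + y**3 - 3*x*y."""
    return (3 * x**2 - 3 * y, 3 * y**2 - 3 * x)


def hessian(x, y):
    """Hessian (matrix of second partial derivatives) of the same function."""
    return ((6 * x, -3), (-3, 6 * y))


def classify(x, y, tol=1e-9):
    """Classify the point (x, y) using the gradient and Hessian tests."""
    gx, gy = gradient(x, y)
    # Necessary condition: the gradient must vanish at a stationary point.
    if abs(gx) > tol or abs(gy) > tol:
        return "not stationary"

    (a, b), (_, d) = hessian(x, y)
    det = a * d - b * b  # second leading principal minor

    # Sufficient conditions via leading principal minors (2x2 case).
    if det > tol and a > tol:
        return "minimum"   # Hessian positive definite
    if det > tol and a < -tol:
        return "maximum"   # Hessian negative definite
    if det < -tol:
        return "saddle"    # Hessian indefinite
    return "inconclusive"  # Hessian singular: the test gives no answer


for point in [(0, 0), (1, 1), (2, 2)]:
    print(point, classify(*point))
```

For this assumed function the stationary points are (0, 0) and (1, 1). The Hessian test reports a saddle at the first and a local minimum at the second, while (2, 2) fails the gradient condition. For functions of more than two variables, the same idea applies with all leading principal minors or with the eigenvalues of the Hessian.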