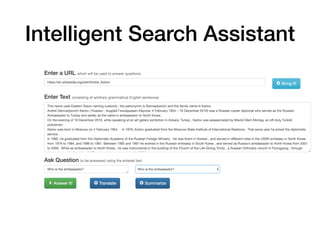

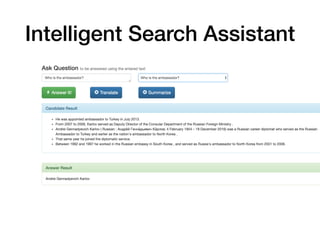

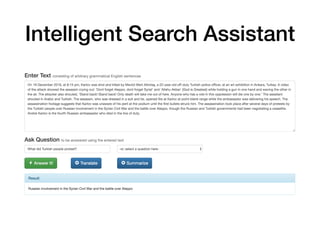

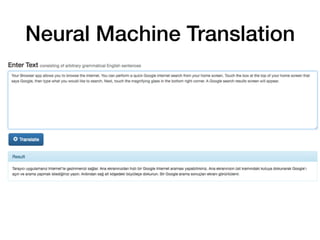

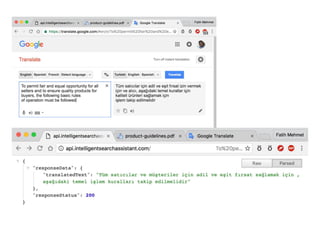

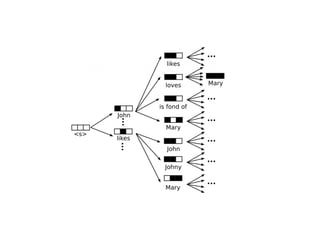

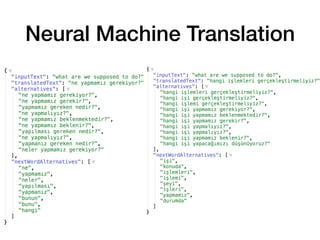

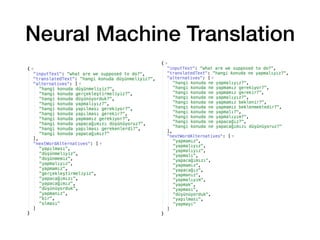

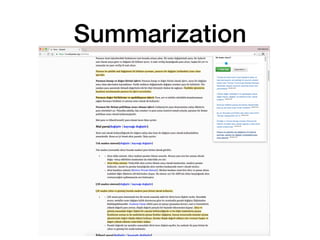

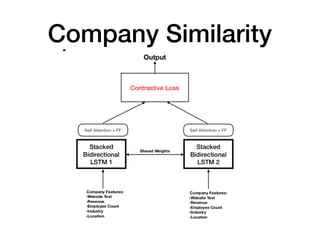

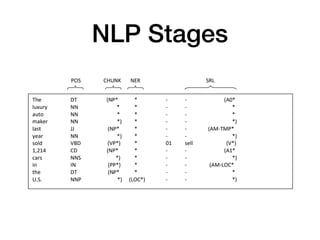

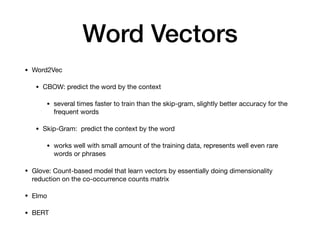

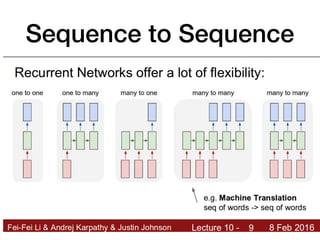

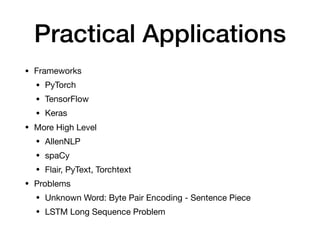

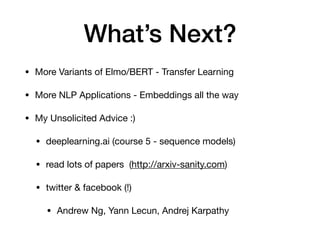

The document outlines the background and projects of Fatih Mehmet Güler in natural language processing (NLP) with deep learning, including applications such as intelligent search assistants and neural machine translation. It covers NLP techniques and tools: static word embeddings such as Word2Vec and GloVe, contextual representations such as ELMo and BERT, and frameworks such as PyTorch and TensorFlow. Güler also discusses current challenges and future directions in NLP research and applications.