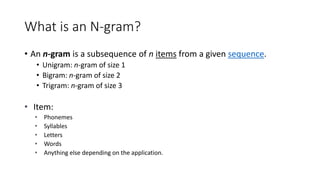

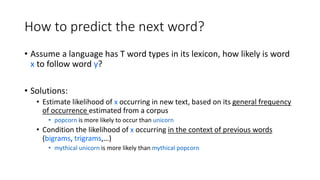

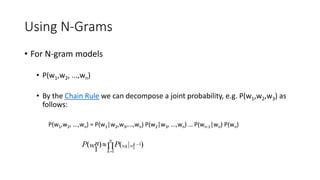

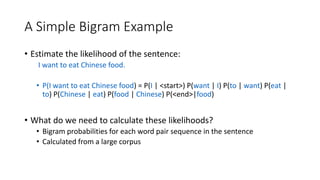

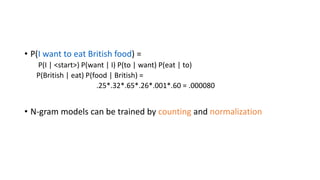

The document discusses N-gram language models. It explains that an N-gram model predicts the next likely word based on the previous N-1 words. It provides examples of unigrams, bigrams, and trigrams. The document also describes how N-gram models are used to calculate the probability of a sequence of words by breaking it down using the chain rule of probability and conditional probabilities of word pairs. N-gram probabilities are estimated from a large training corpus.