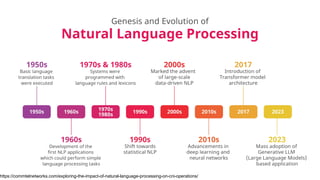

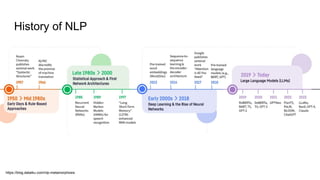

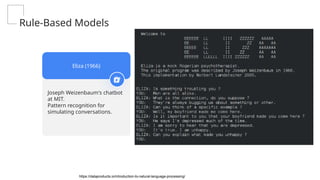

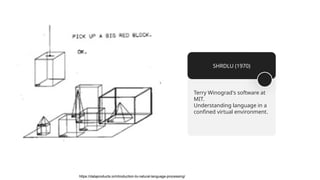

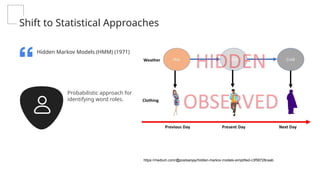

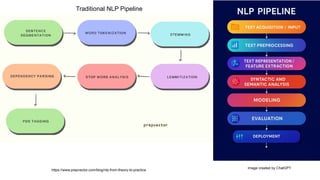

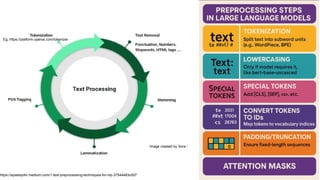

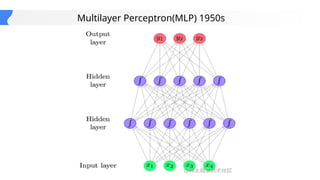

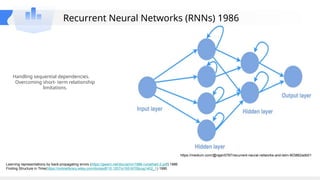

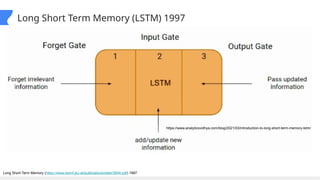

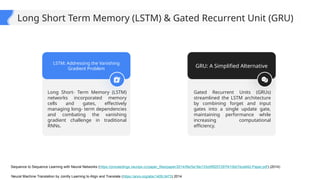

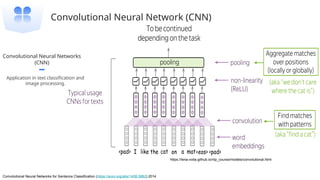

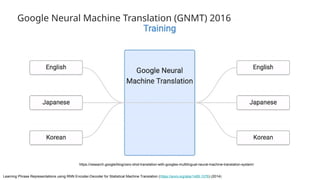

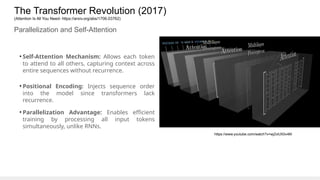

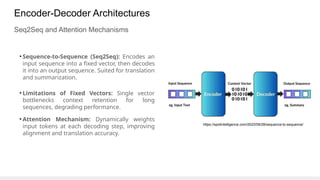

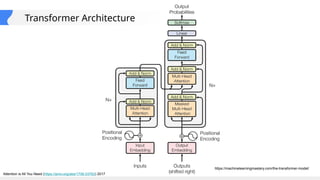

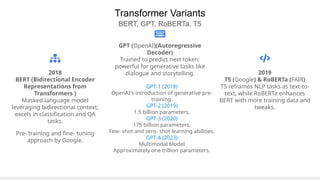

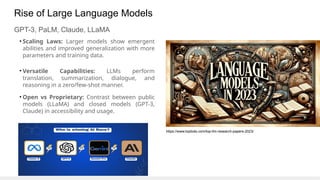

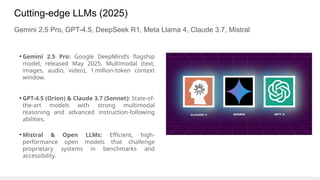

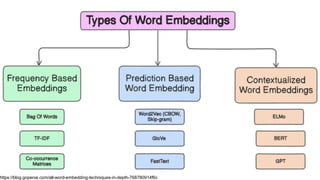

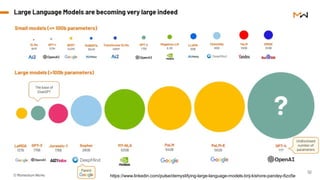

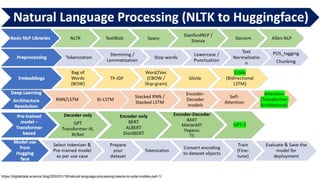

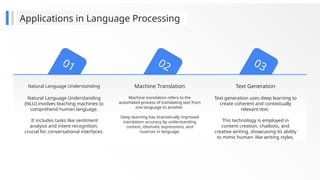

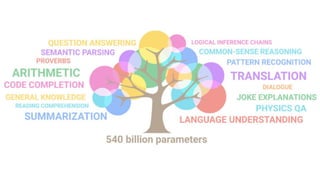

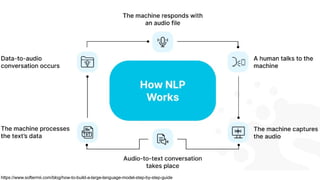

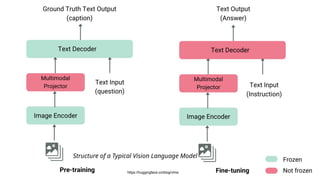

This gives an introduction to how NLP has evolved from the time of World War II to the present day through advances in approaches, architectures, and word representations. Rule-based approaches gave way to statistical approaches, and traditional machine learning algorithms gave way to deep neural network architectures. These deep architectures include recurrent neural networks, long short-term memory networks, gated recurrent units, seq2seq and encoder-decoder models, and the transformer architecture, leading up to large language models and multimodal vision-language models.