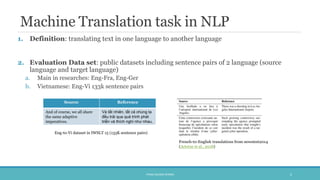

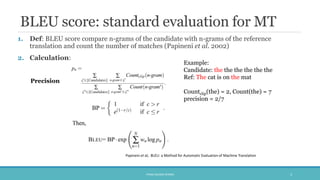

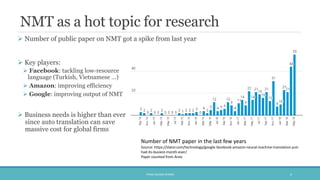

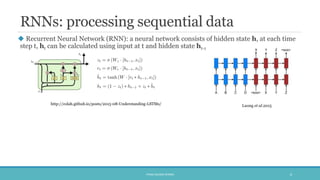

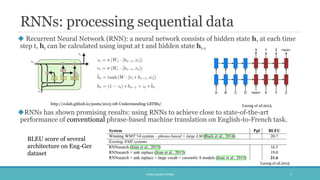

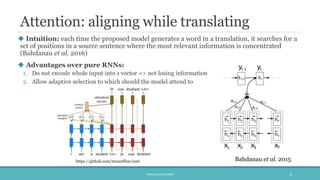

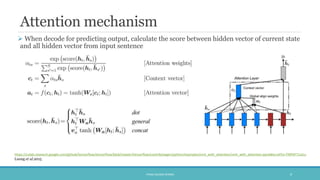

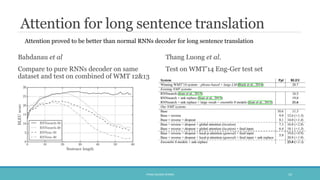

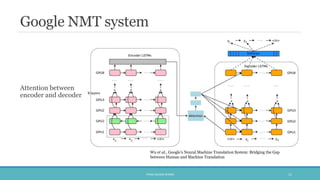

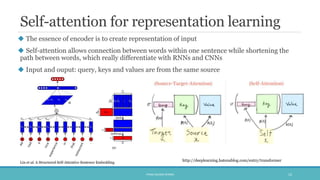

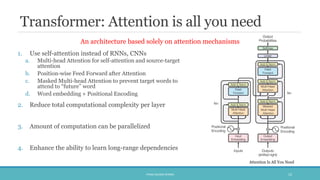

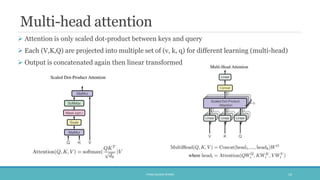

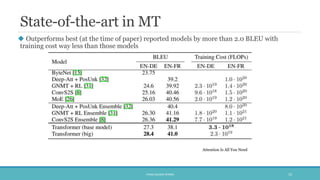

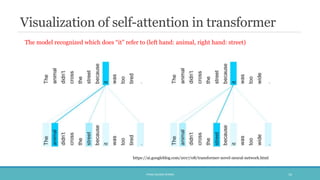

The document traces the evolution of neural machine translation (NMT) and highlights the attention mechanism as a key innovation for improving translation accuracy and efficiency. It covers evaluation methods such as the BLEU score and outlines NMT architectures, including recurrent neural networks (RNNs), attention-based models, and transformers, with a focus on handling long sentences and complex dependencies. It also notes the growing demand for effective translation in applications such as business and scientific domains.
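
Since the summary names the BLEU score as an evaluation method, a rough sketch of how such a score can be computed may help. The version below is an illustration under stated assumptions, not the procedure used in the slides: it handles a single reference, works on pre-tokenized sentences, applies no smoothing, and the names `ngrams` and `sentence_bleu` are hypothetical. Reported BLEU scores are usually computed at corpus level with standardized tokenization, so numbers from this sketch are not comparable to published results.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def sentence_bleu(candidate, reference, max_n=4):
    """Single-reference, sentence-level BLEU without smoothing (illustrative)."""
    if not candidate:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = Counter(ngrams(candidate, n))
        ref_counts = Counter(ngrams(reference, n))
        # Modified precision: clip each candidate n-gram count
        # by how often that n-gram occurs in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            # Without smoothing, any zero precision gives a score of 0.
            return 0.0
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the n-gram precisions, scaled by the penalty.
    return bp * math.exp(sum(log_precisions) / max_n)


reference = "the cat sat on the mat".split()
print(sentence_bleu("the cat sat on the mat".split(), reference))
print(sentence_bleu("the cat sat on the".split(), reference))
```

In this sketch the second candidate matches every n-gram it contains, so its score is reduced only by the brevity penalty for being one token shorter than the reference.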