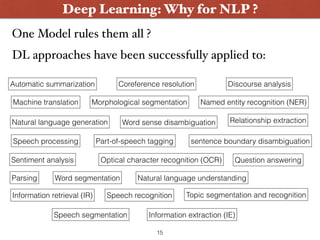

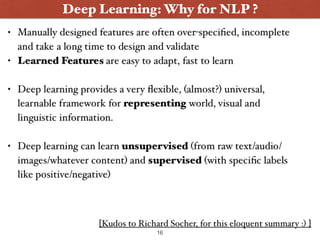

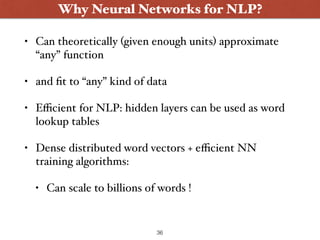

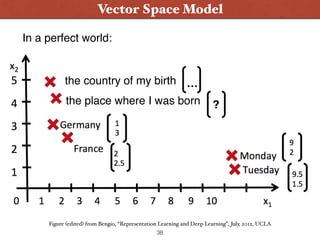

The document covers challenges and advances in natural language processing (NLP), focusing on deep learning techniques such as word embeddings and recurrent neural networks. It outlines applications of deep learning in NLP, including sentiment analysis, machine translation, and text generation, and addresses language ambiguity, productivity, and cultural specificity. It also traces the evolution and effectiveness of neural network architectures for language understanding and modeling.

![[Karlgren 2014, NLP Sthlm Meetup]](https://image.slidesharecdn.com/nlpguestlect15-151203142113-lva1-app6891/85/Deep-Learning-for-Natural-Language-Processing-Word-Embeddings-6-320.jpg)

![Semantics: Meaning
• What is the meaning of a word? (Lexical semantics)
• What is the meaning of a sentence? ([Compositional] semantics)
• What is the meaning of a longer piece of text? (Discourse semantics)](https://image.slidesharecdn.com/nlpguestlect15-151203142113-lva1-app6891/85/Deep-Learning-for-Natural-Language-Processing-Word-Embeddings-18-320.jpg)

![Word Representation
• NLP (rule-based/statistical approaches at least) treats words mainly as atomic symbols: Love, Candy, Store
• or in vector space: [0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 …]
• also known as “one hot” representation.
• Its problem? Candy [0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 …] AND Store [0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 …] = 0!](https://image.slidesharecdn.com/nlpguestlect15-151203142113-lva1-app6891/85/Deep-Learning-for-Natural-Language-Processing-Word-Embeddings-19-320.jpg)
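The one-hot problem from the Word Representation slide can be sketched in a few lines of Python. This is a minimal illustration, not code from the slides: the three-word vocabulary, its index order, and the helper names `one_hot` and `dot` are assumptions made for the example.

```python
# Hypothetical toy vocabulary; the index order is arbitrary.
vocab = ["love", "candy", "store"]
index = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Return a vector with a single 1 at the word's vocabulary index."""
    vec = [0] * len(vocab)
    vec[index[word]] = 1
    return vec

def dot(u, v):
    """Dot product, used here as a simple similarity score."""
    return sum(a * b for a, b in zip(u, v))

candy = one_hot("candy")
store = one_hot("store")

print(dot(candy, store))  # 0: two different words never overlap
print(dot(candy, candy))  # 1: a word is only similar to itself
```

Because every pair of distinct words scores 0, a one-hot representation cannot express that "candy" and "store" are related, which is the motivation for dense word embeddings.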

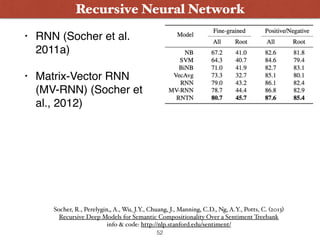

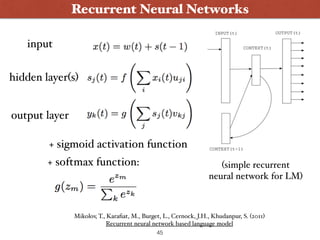

![Recurrent Neural Networks
• achieved SOTA in 2011 on Language Modeling (WSJ AR task) (Mikolov et al., INTERSPEECH 2011): “Comparison to other LMs shows that RNN LMs are state of the art by a large margin. Improvements increase with more training data.”
• and again at ASRU 2011: “[RNN LM trained on a] single core on 400M words in a few days, with 1% absolute improvement in WER on state of the art setup”
Mikolov, T., Karafiat, M., Burget, L., Cernocky, J.H., Khudanpur, S. (2011) Recurrent neural network based language model](https://image.slidesharecdn.com/nlpguestlect15-151203142113-lva1-app6891/85/Deep-Learning-for-Natural-Language-Processing-Word-Embeddings-44-320.jpg)

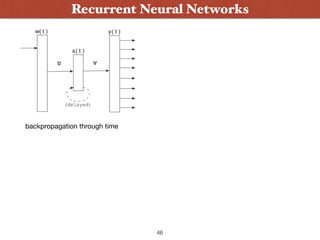

![Recurrent Neural Networks
backpropagation through time
class based recurrent NN
[code (Mikolov’s RNNLM Toolkit) and more info: http://rnnlm.org/ ]](https://image.slidesharecdn.com/nlpguestlect15-151203142113-lva1-app6891/85/Deep-Learning-for-Natural-Language-Processing-Word-Embeddings-47-320.jpg)
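The "class based" idea named on this slide is commonly described as factoring the output distribution into a class probability and a within-class word probability, so that only one class's words need to be normalized per prediction. The sketch below illustrates that factorization only; it is not the RNNLM Toolkit's code, it does not implement backpropagation through time, and the class assignment, scores, and function names are all hypothetical. In a real model the scores would come from the recurrent hidden state.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical assignment of words to two classes.
classes = {0: ["the", "a"], 1: ["candy", "store", "love"]}

def word_prob(word, class_scores, word_scores):
    """P(word | h) = P(class | h) * P(word | class, h)."""
    for c, members in classes.items():
        if word in members:
            p_class = softmax(class_scores)[c]
            within = softmax([word_scores[w] for w in members])
            return p_class * within[members.index(word)]
    raise KeyError(word)

# Made-up scores standing in for the network's output layer.
class_scores = [0.5, -1.0]
word_scores = {"the": 0.1, "a": 0.3, "candy": 1.2, "store": -0.4, "love": 0.0}

total = sum(word_prob(w, class_scores, word_scores)
            for members in classes.values() for w in members)
print(round(total, 6))  # the factored probabilities still sum to 1
```

With a vocabulary of size V split into roughly sqrt(V) classes, each prediction normalizes over about 2 * sqrt(V) scores instead of V, which is one reason this layout helps when training on large corpora.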