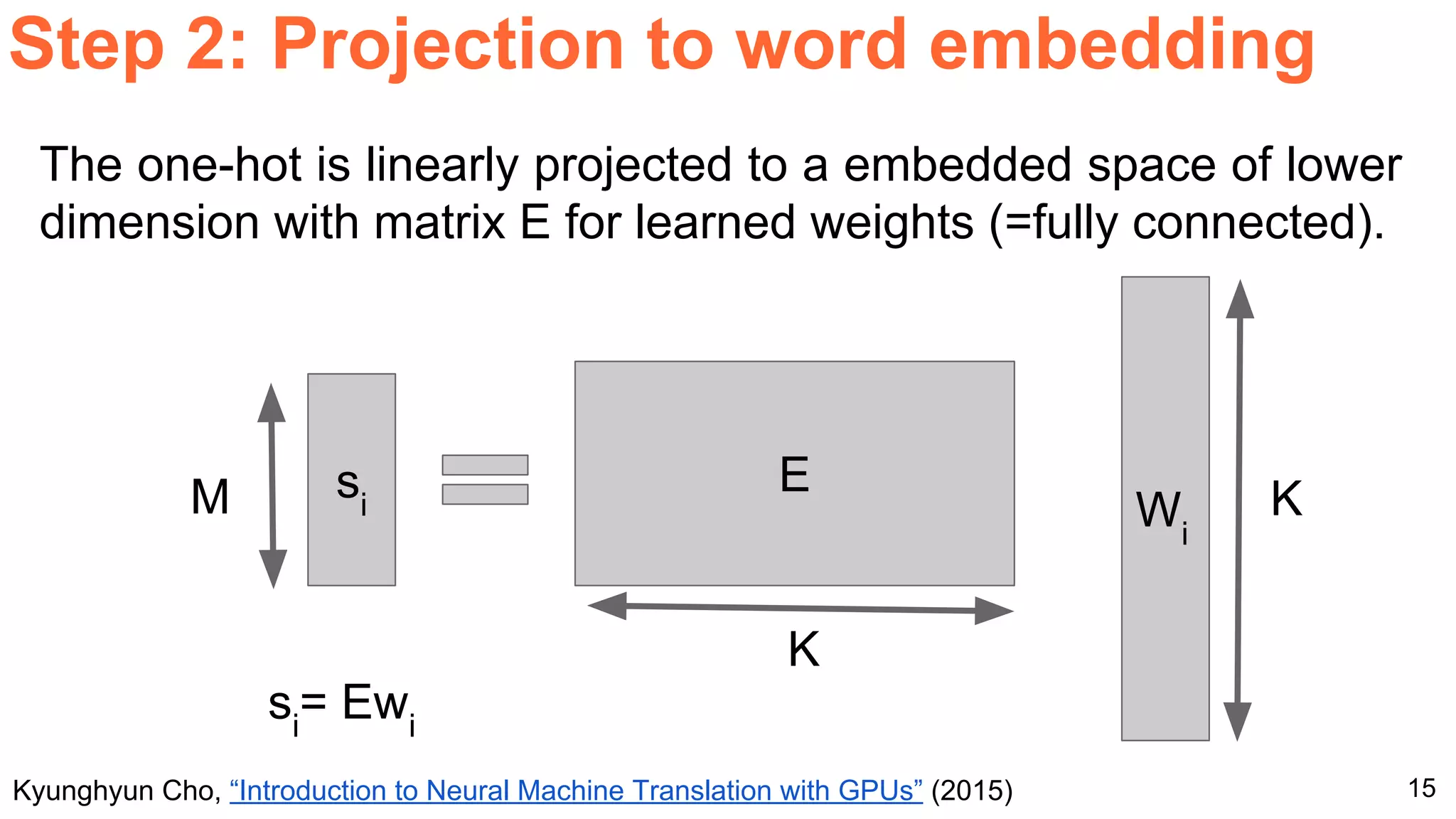

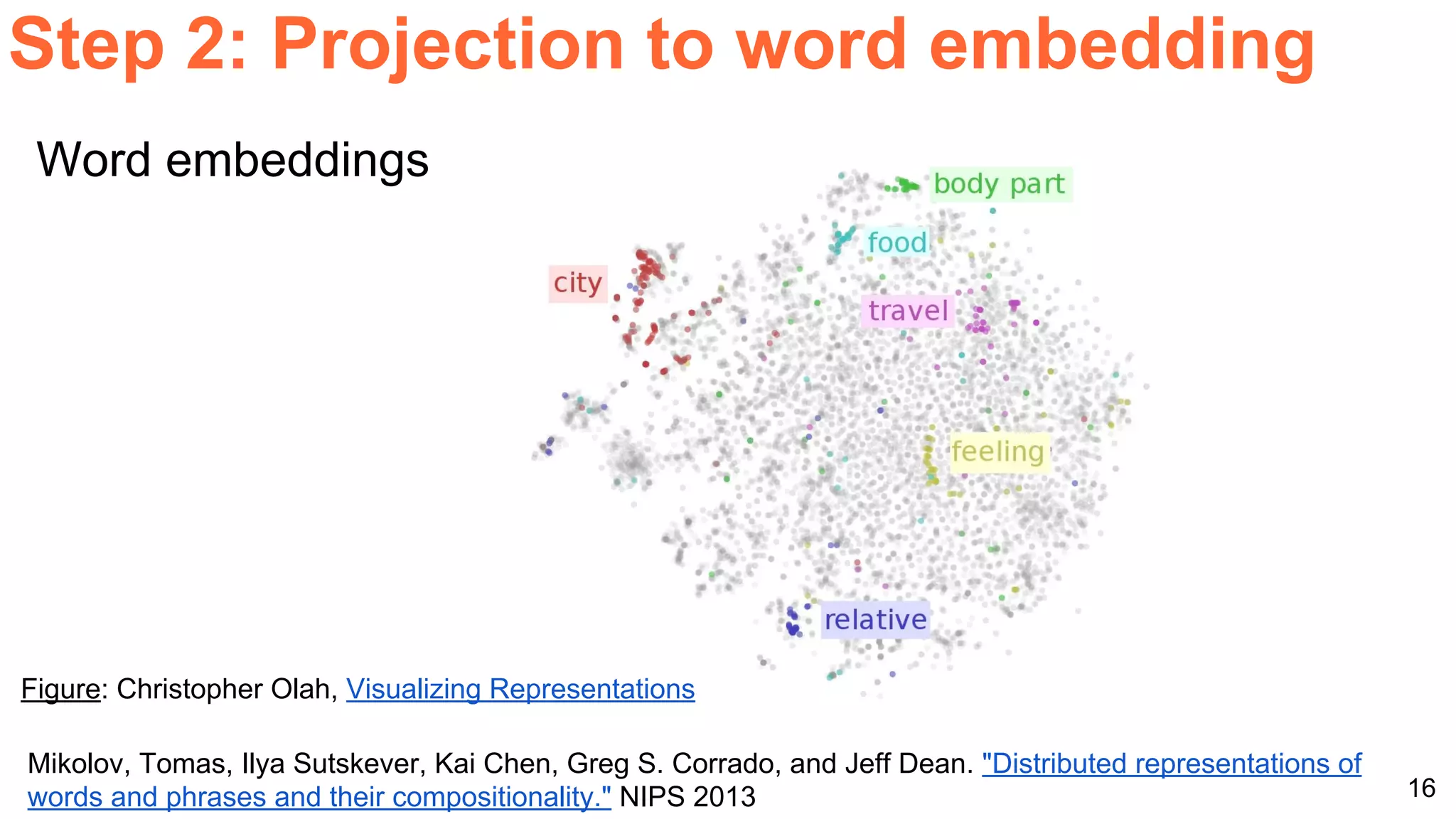

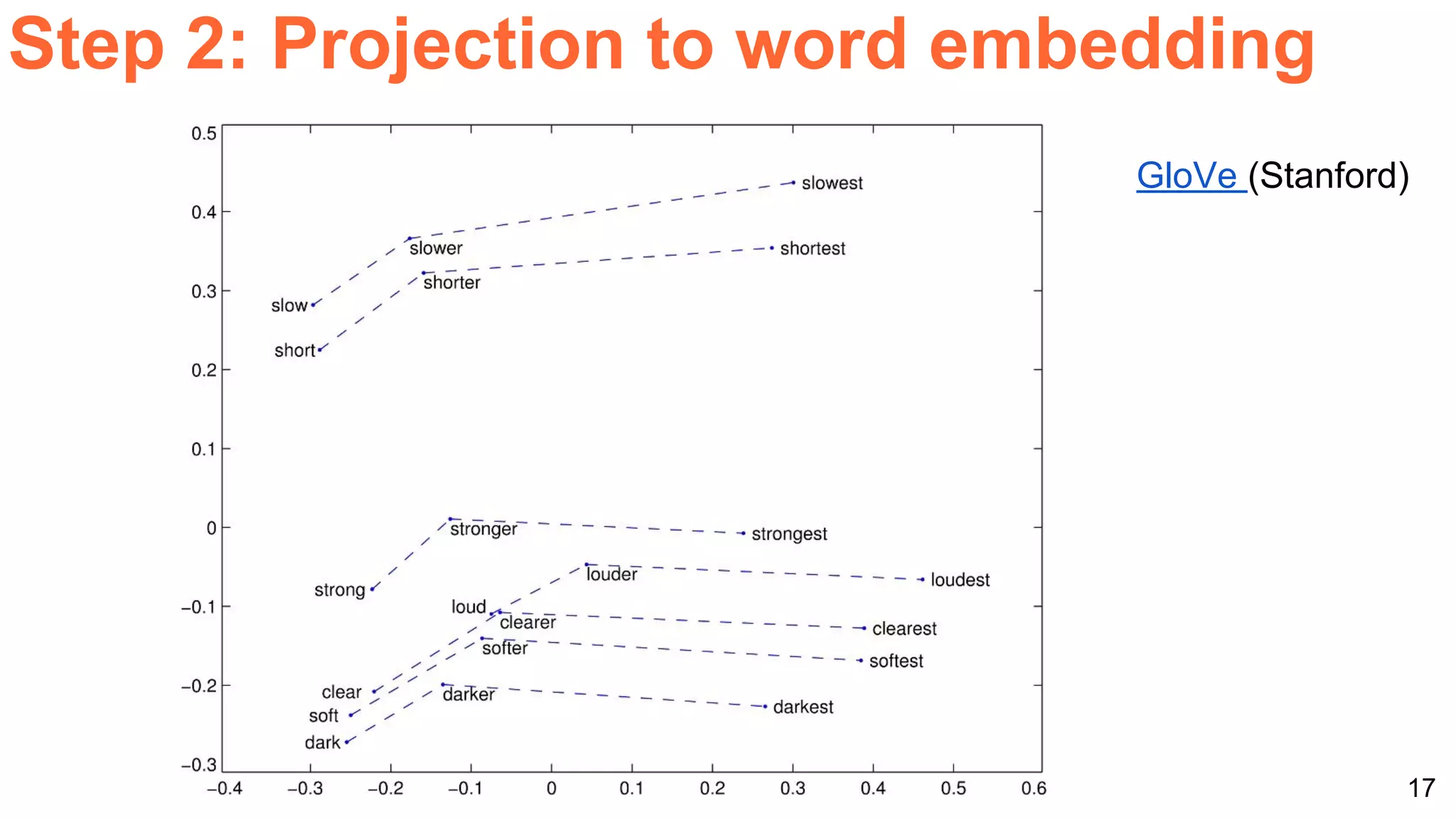

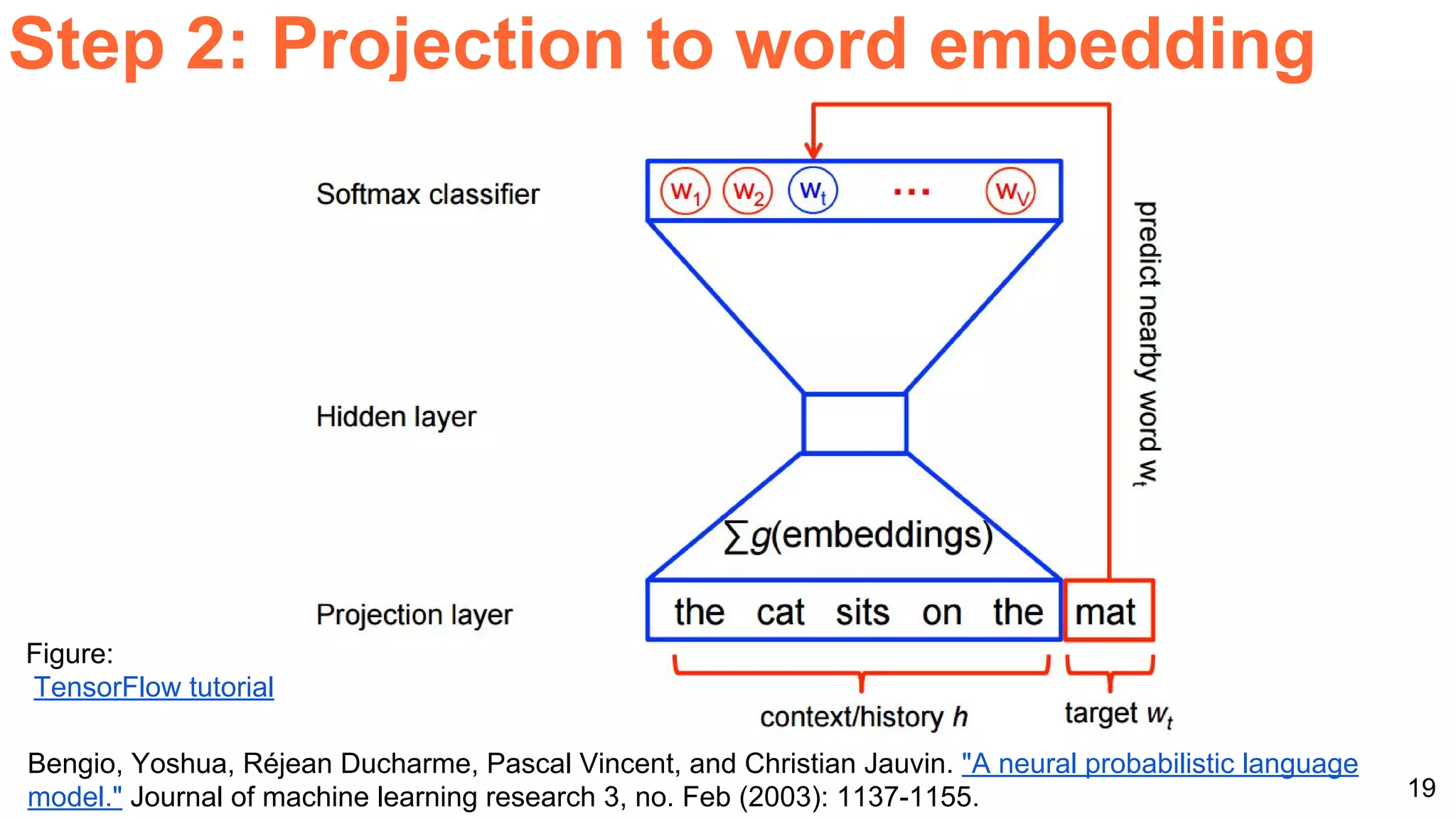

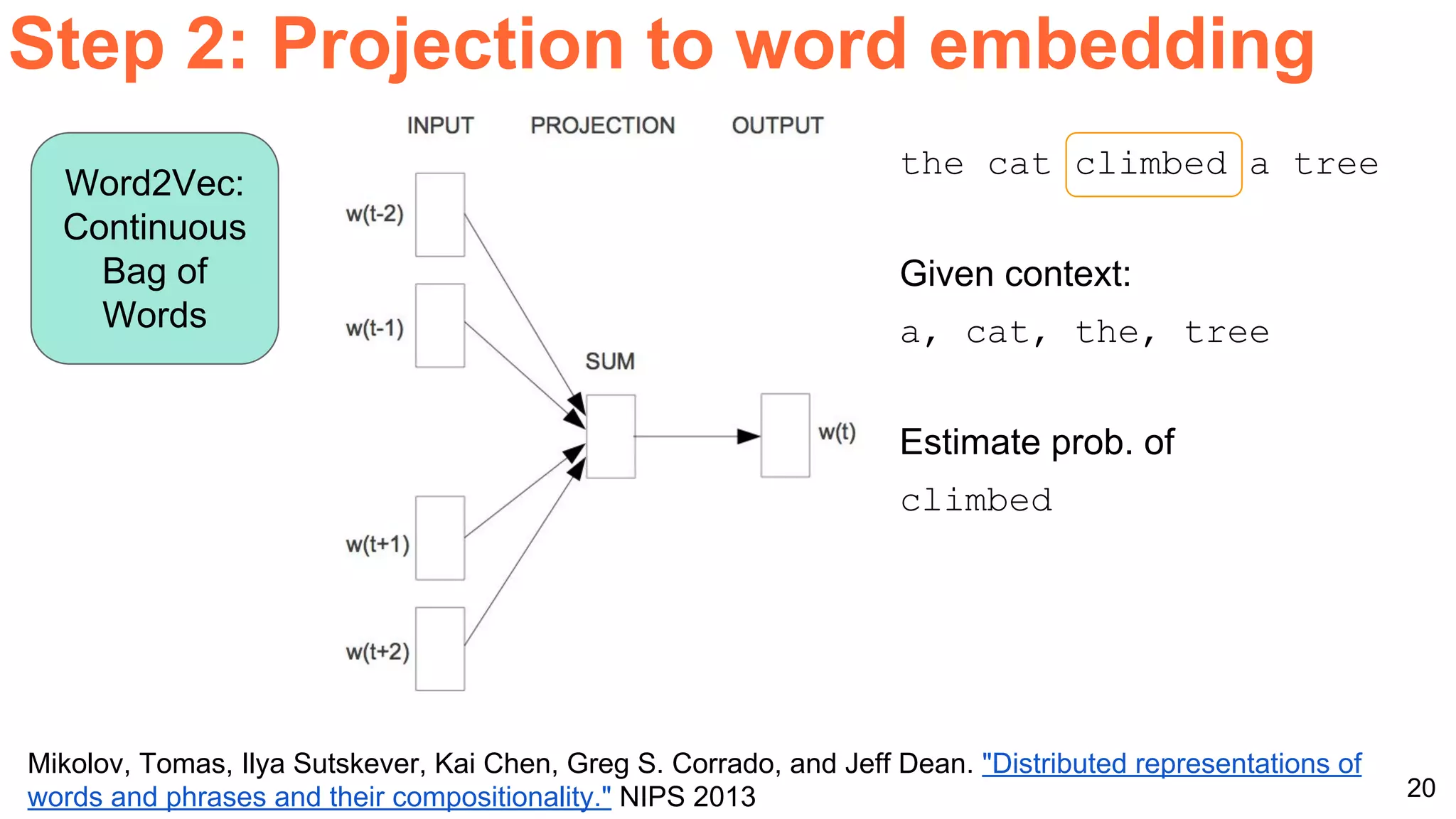

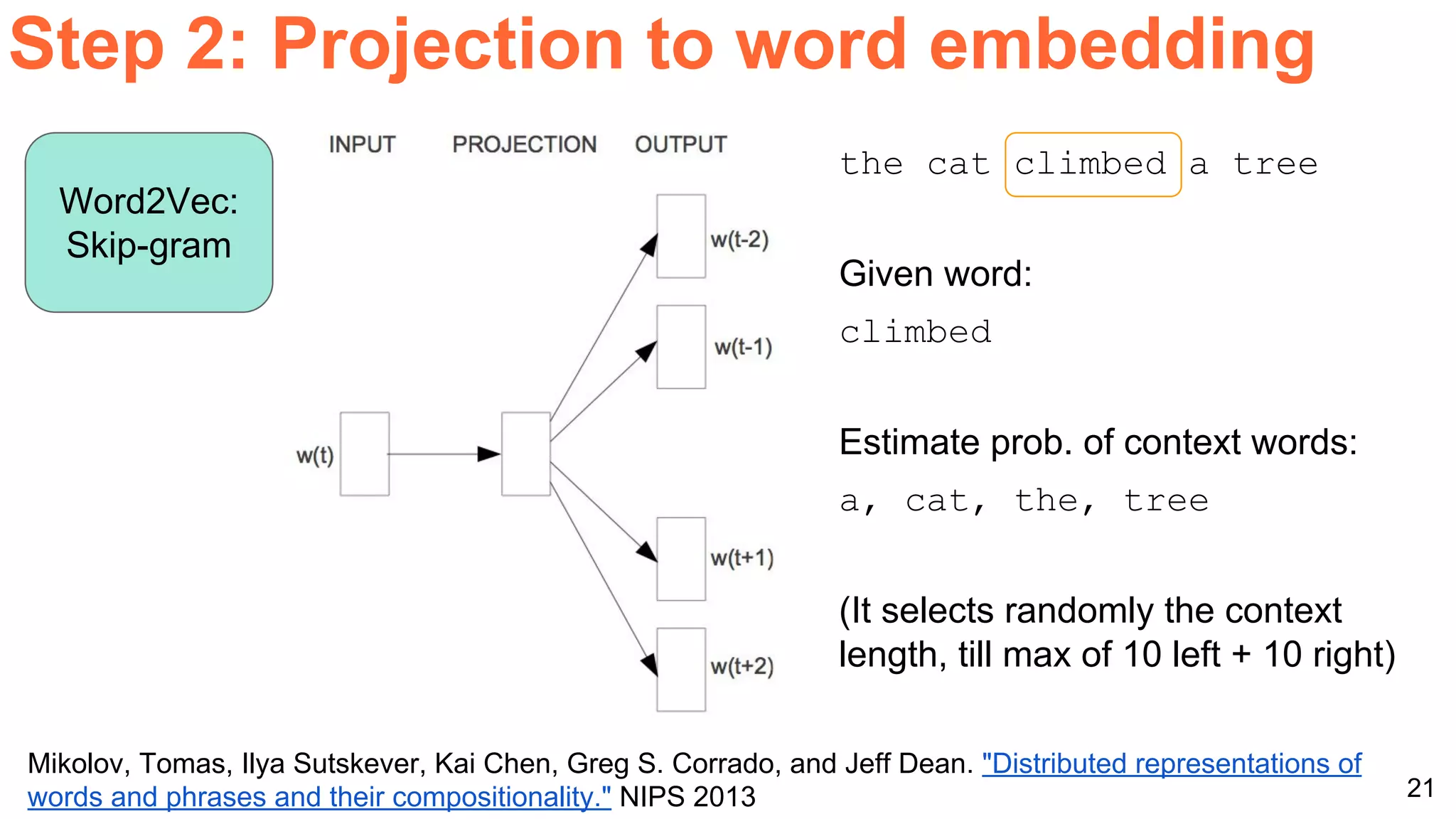

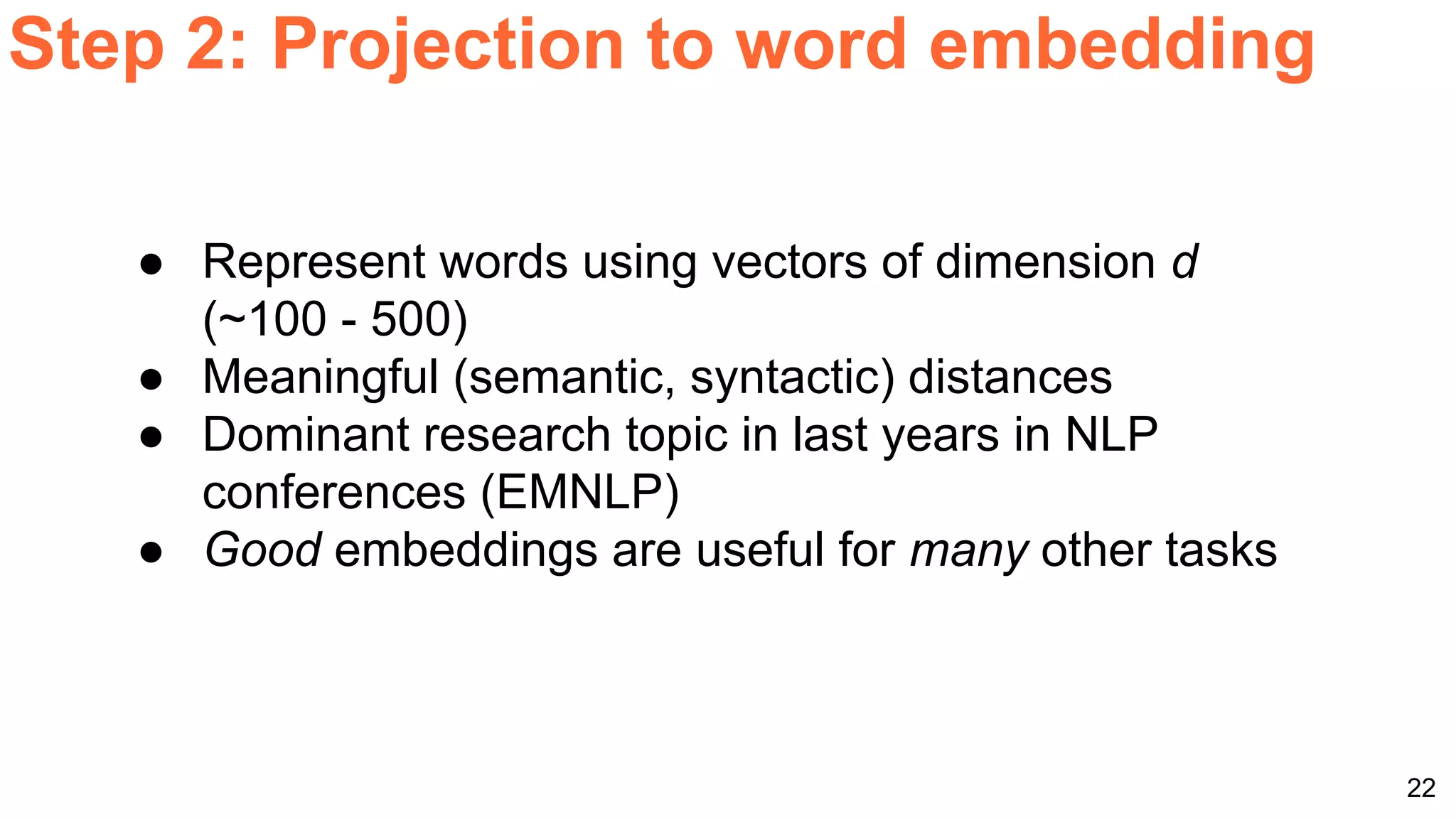

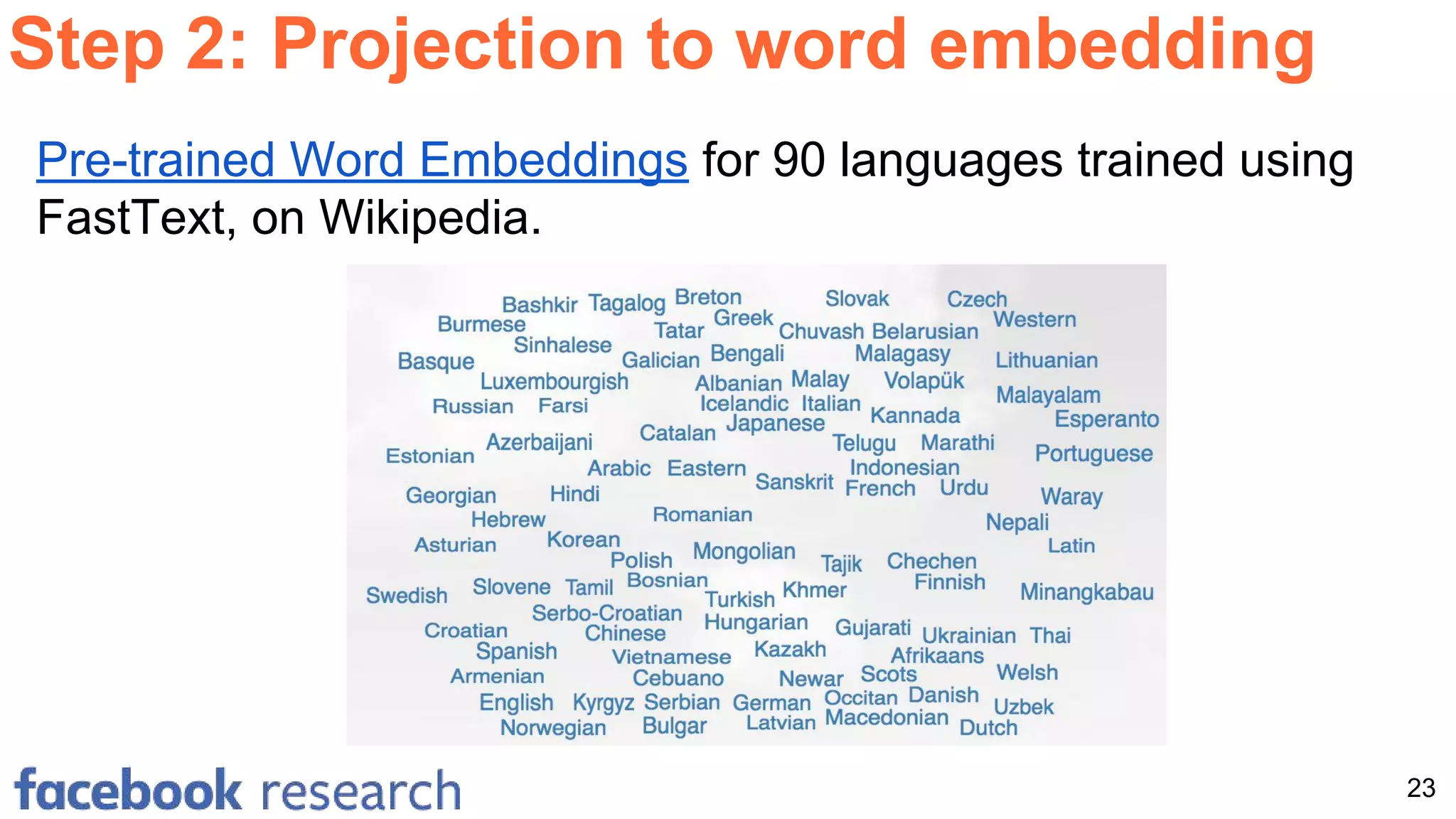

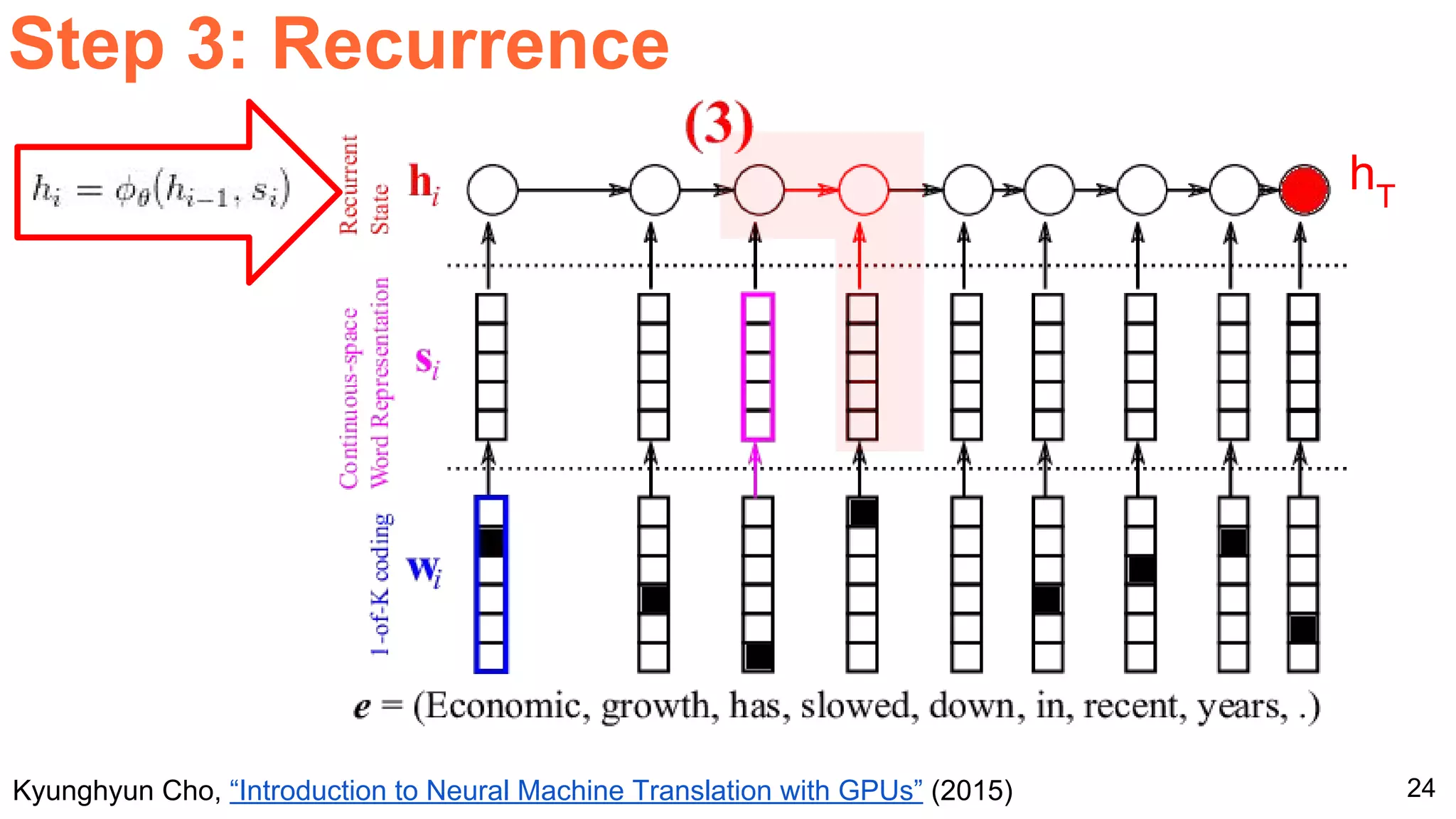

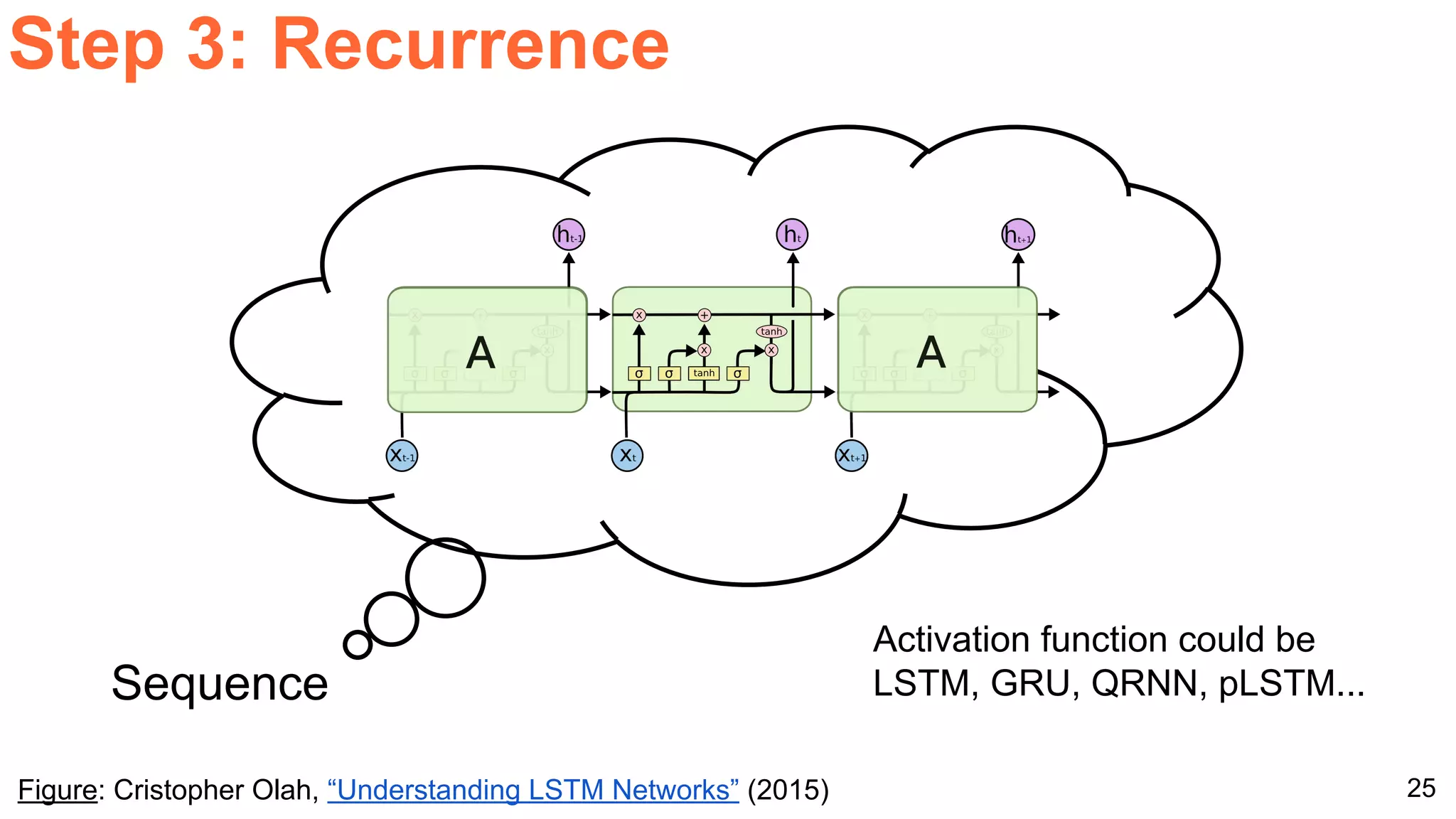

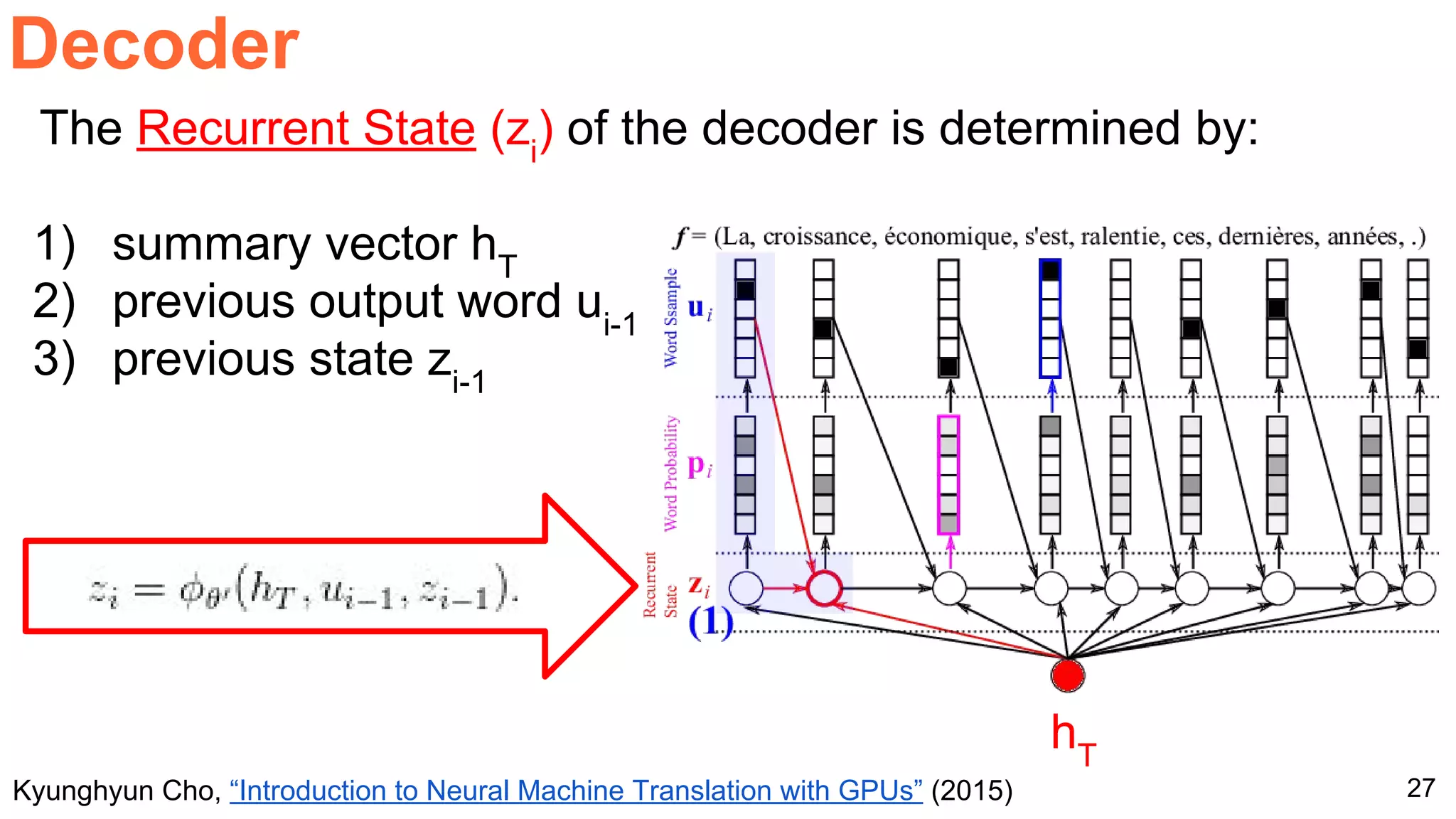

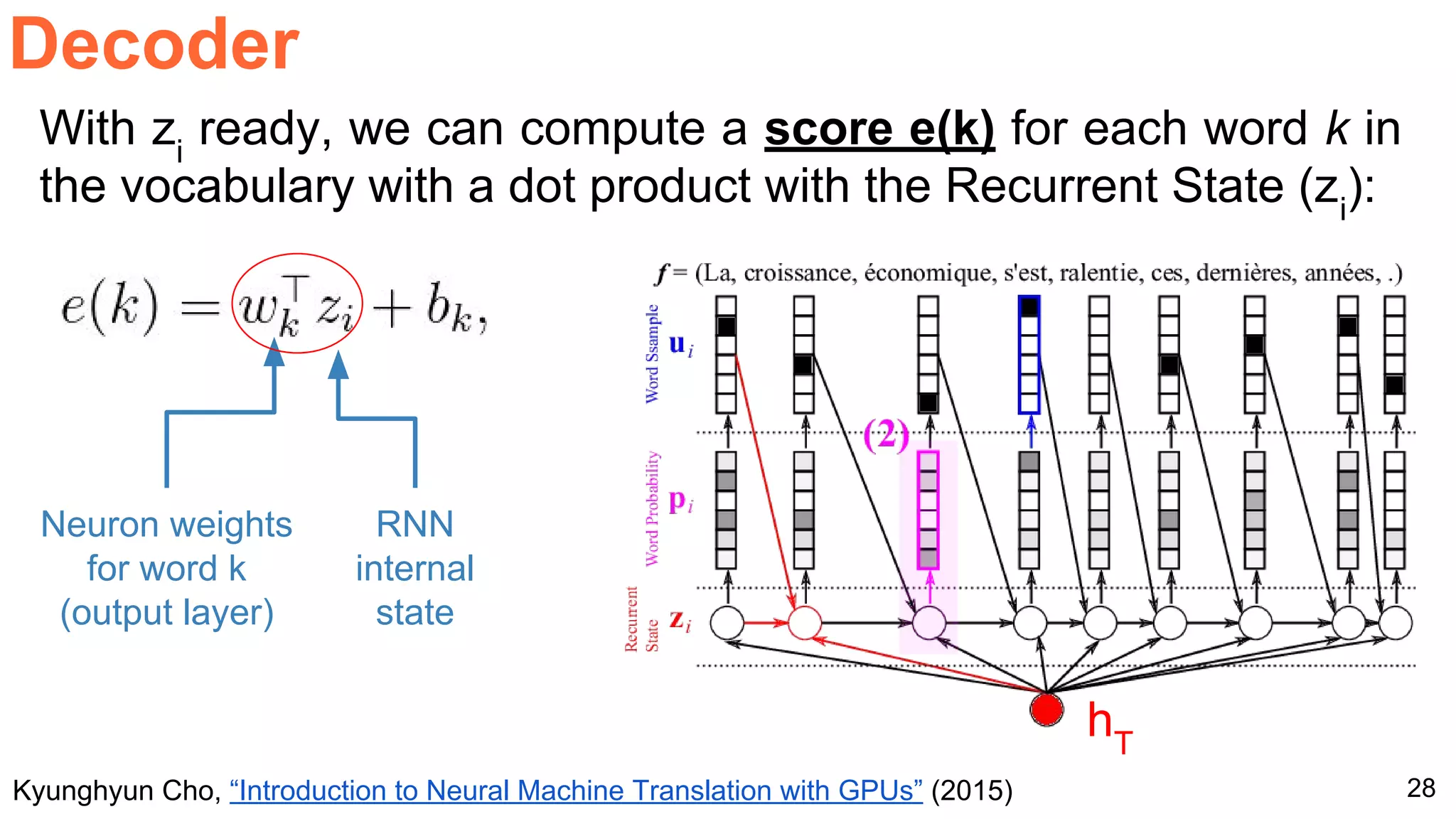

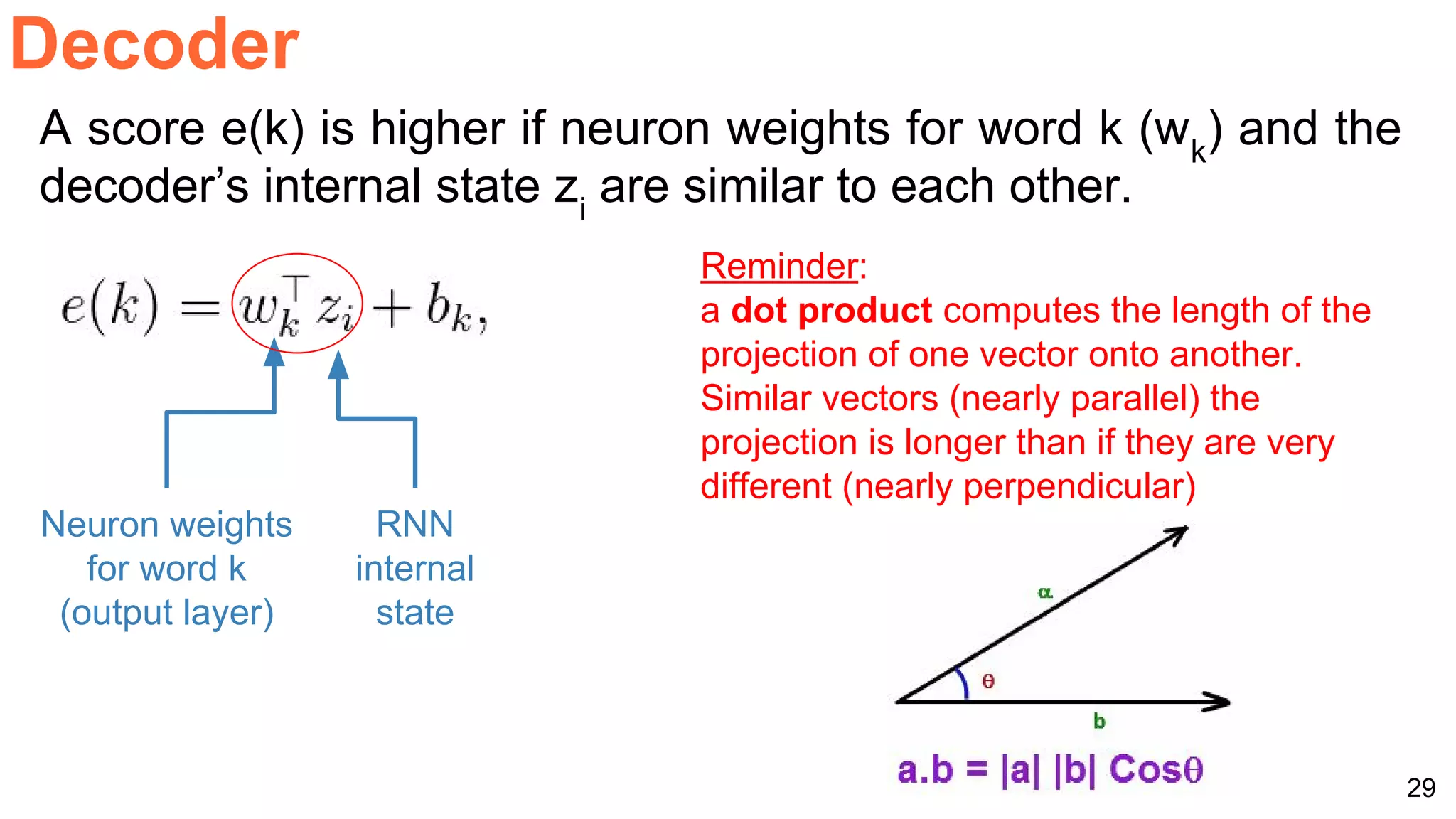

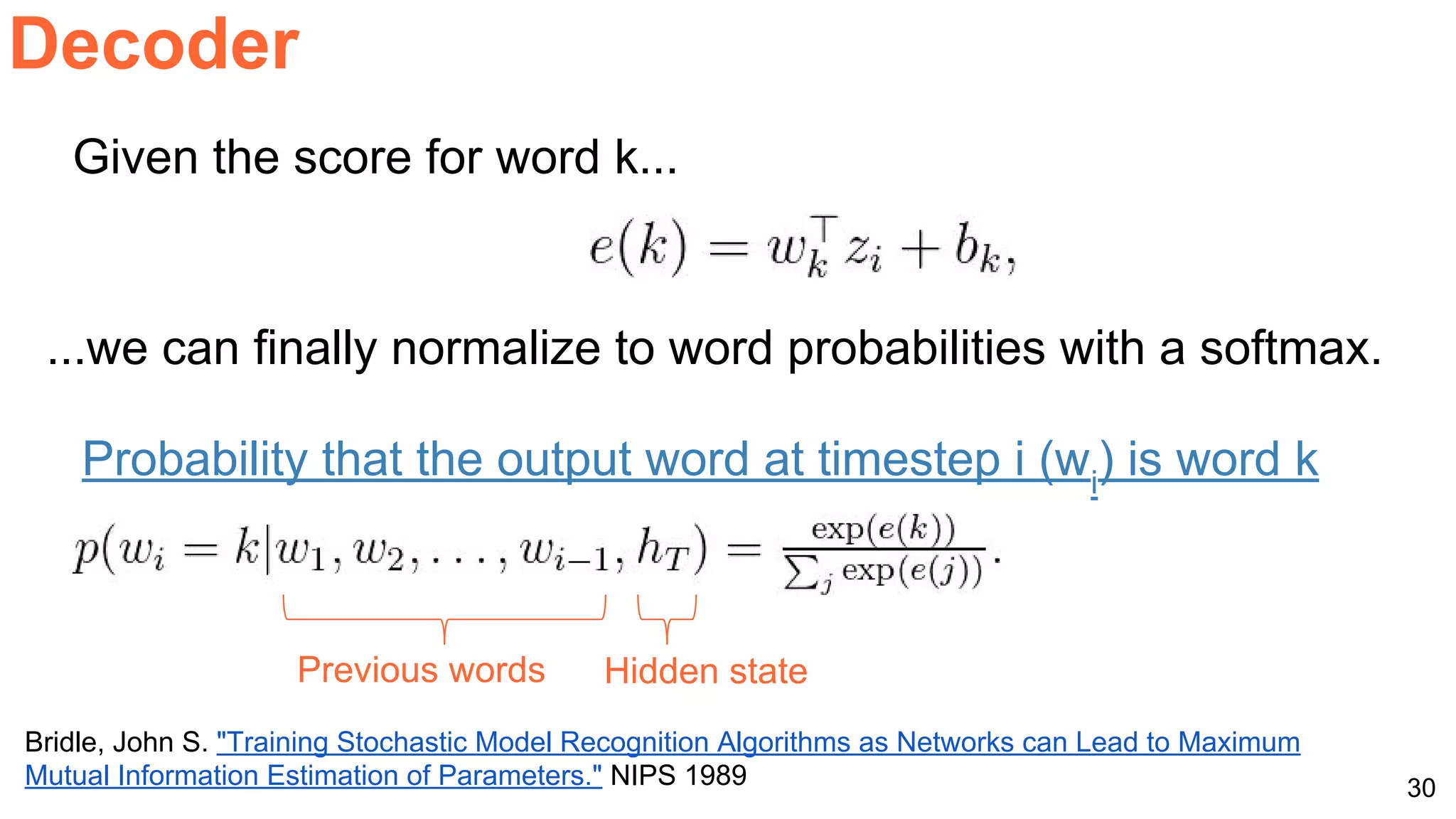

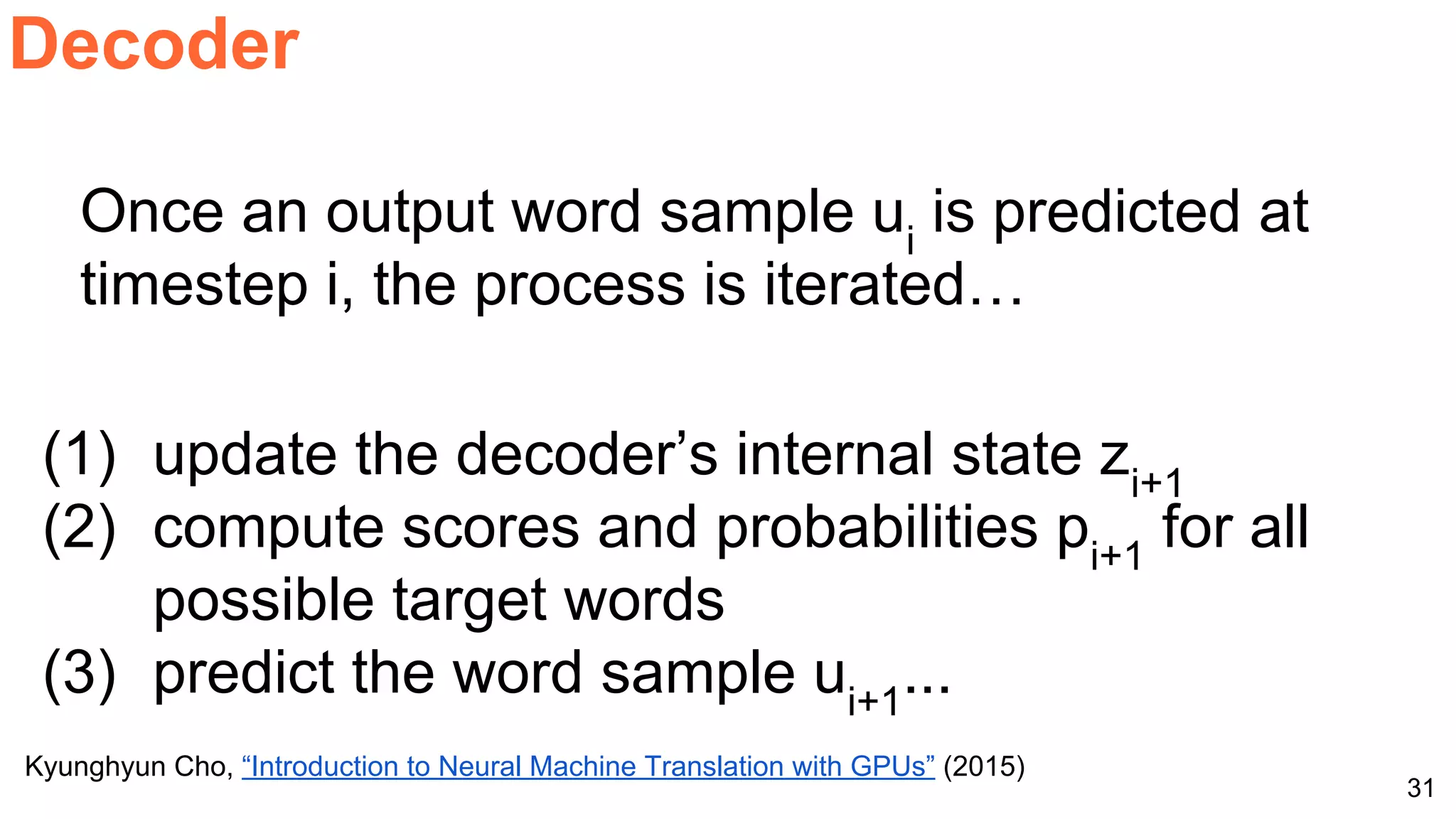

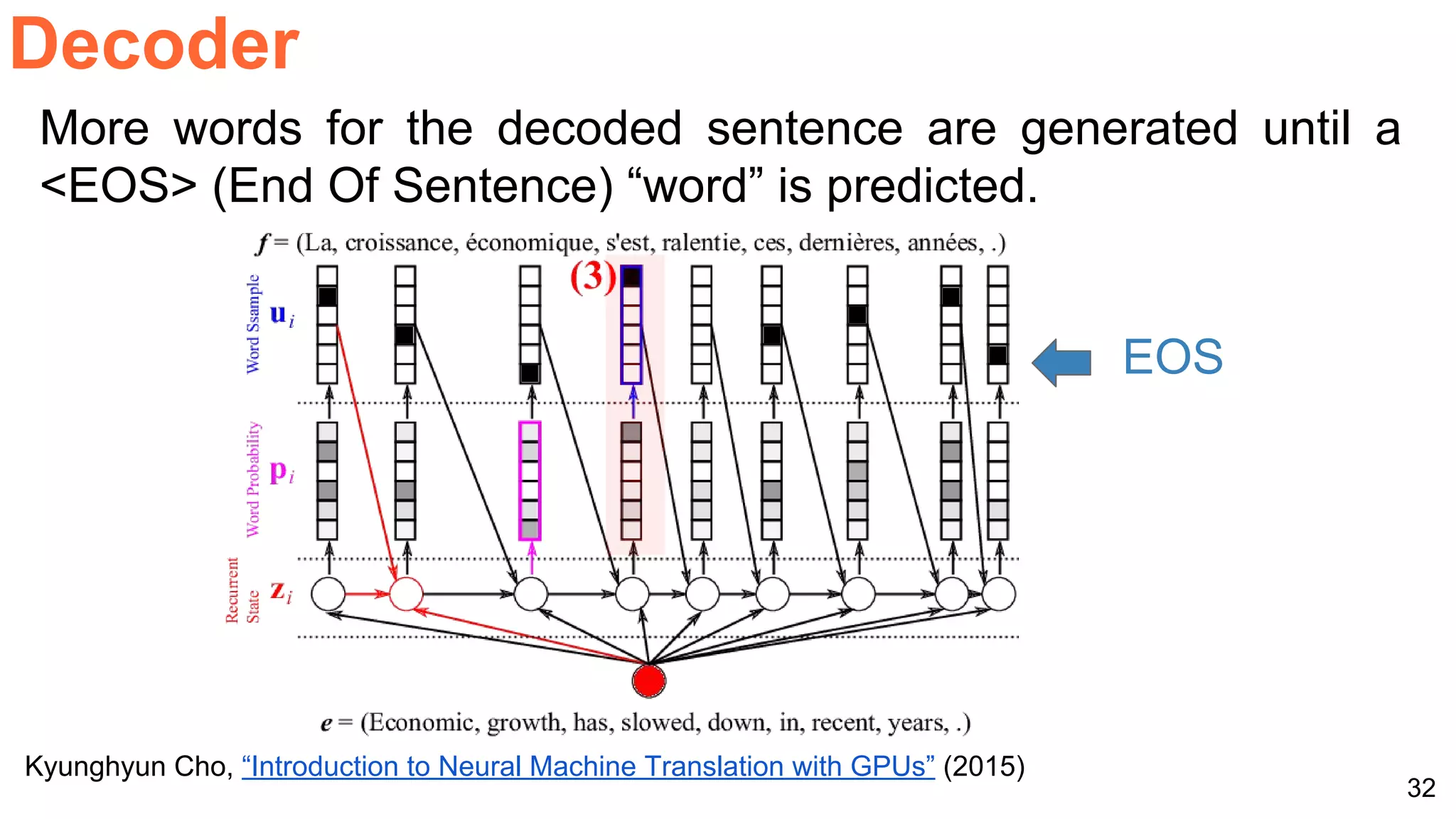

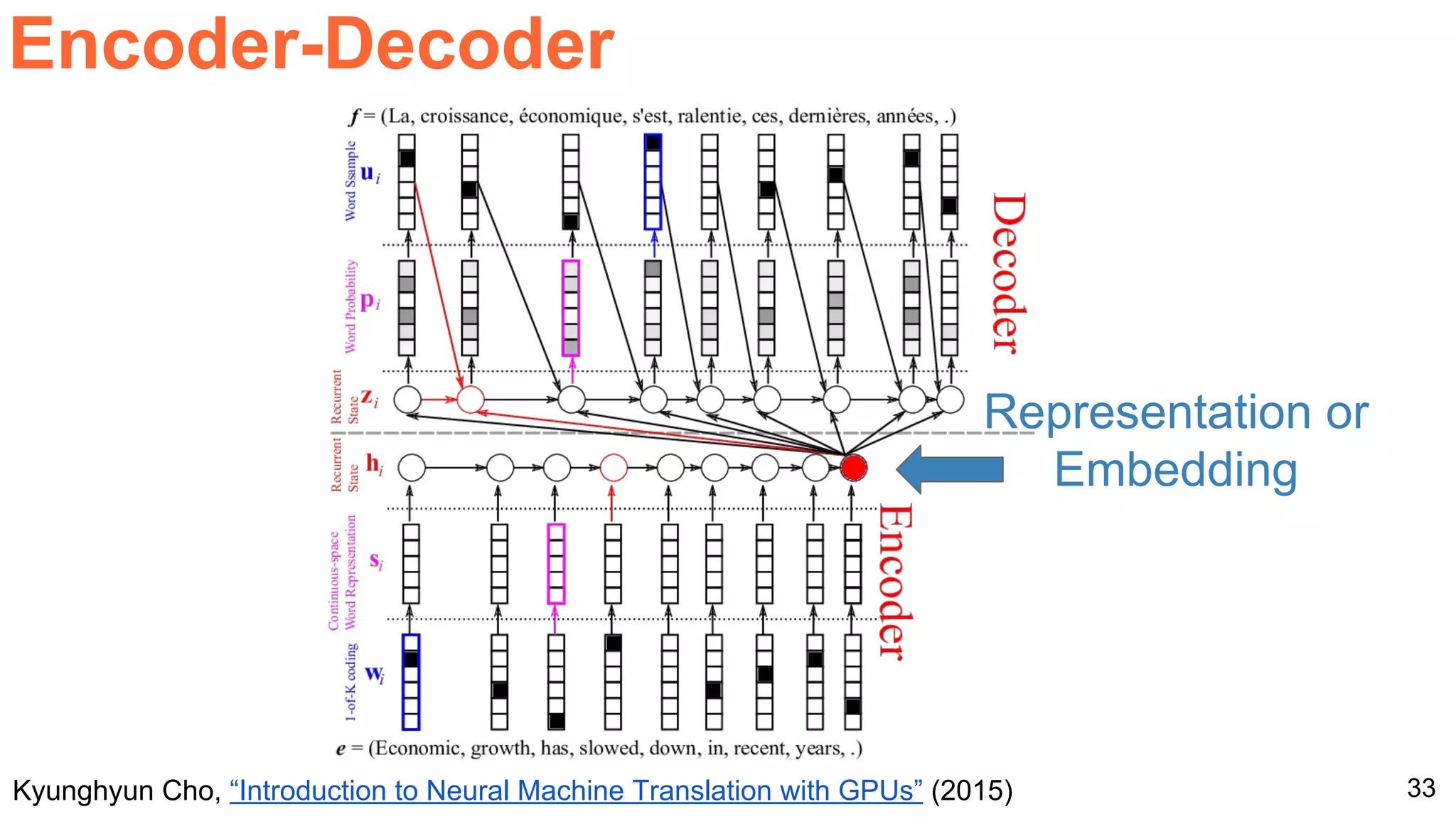

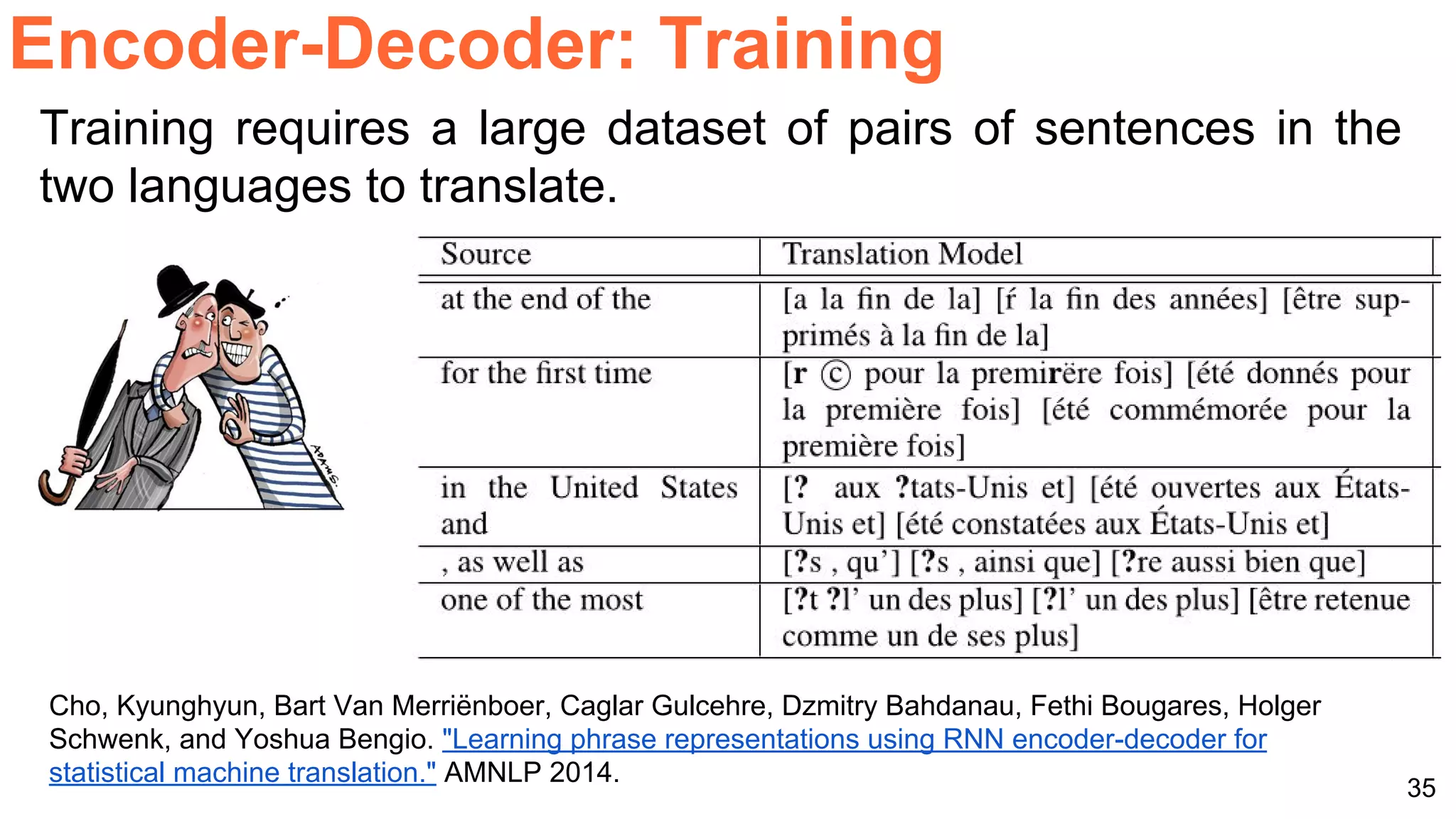

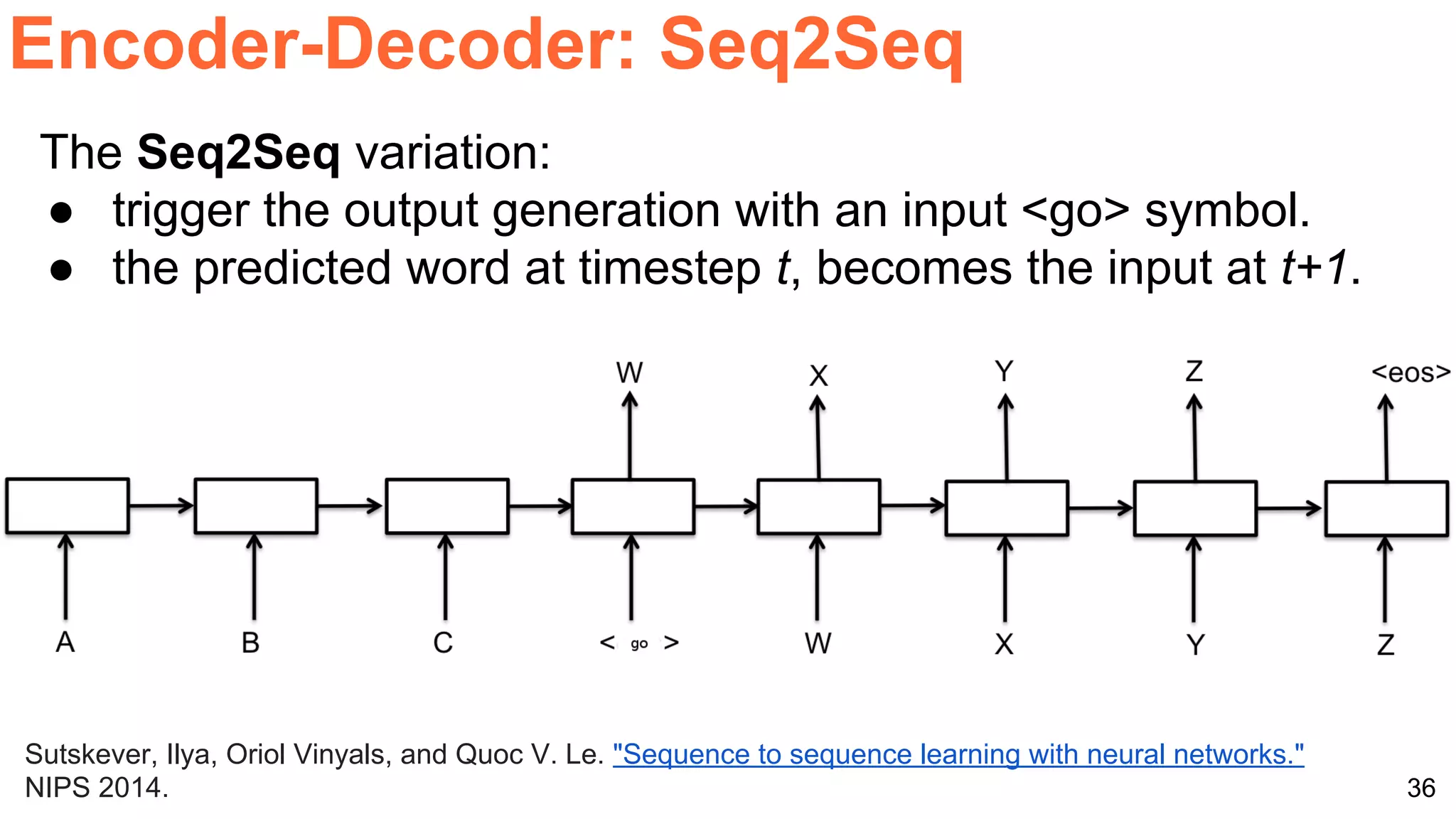

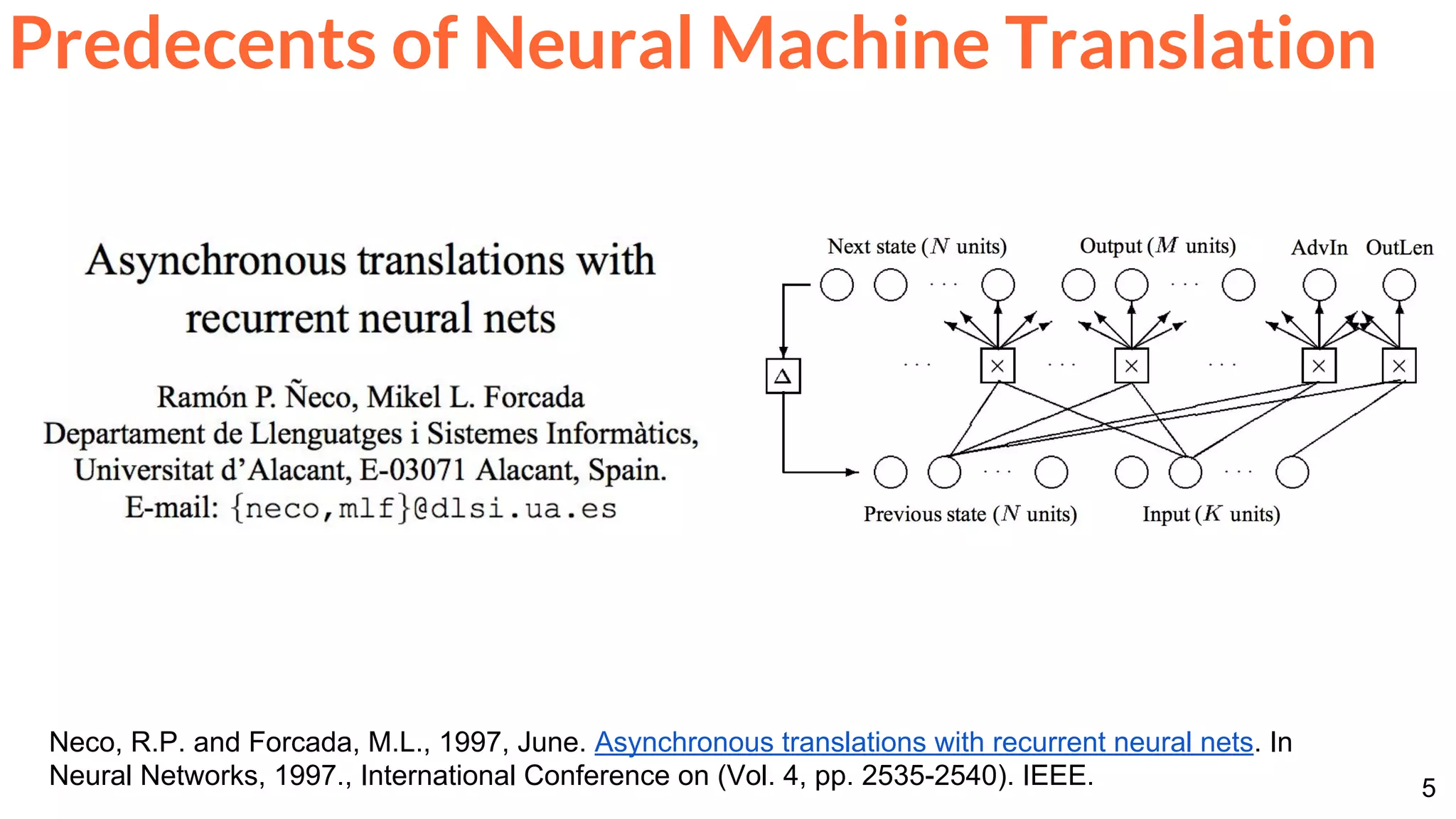

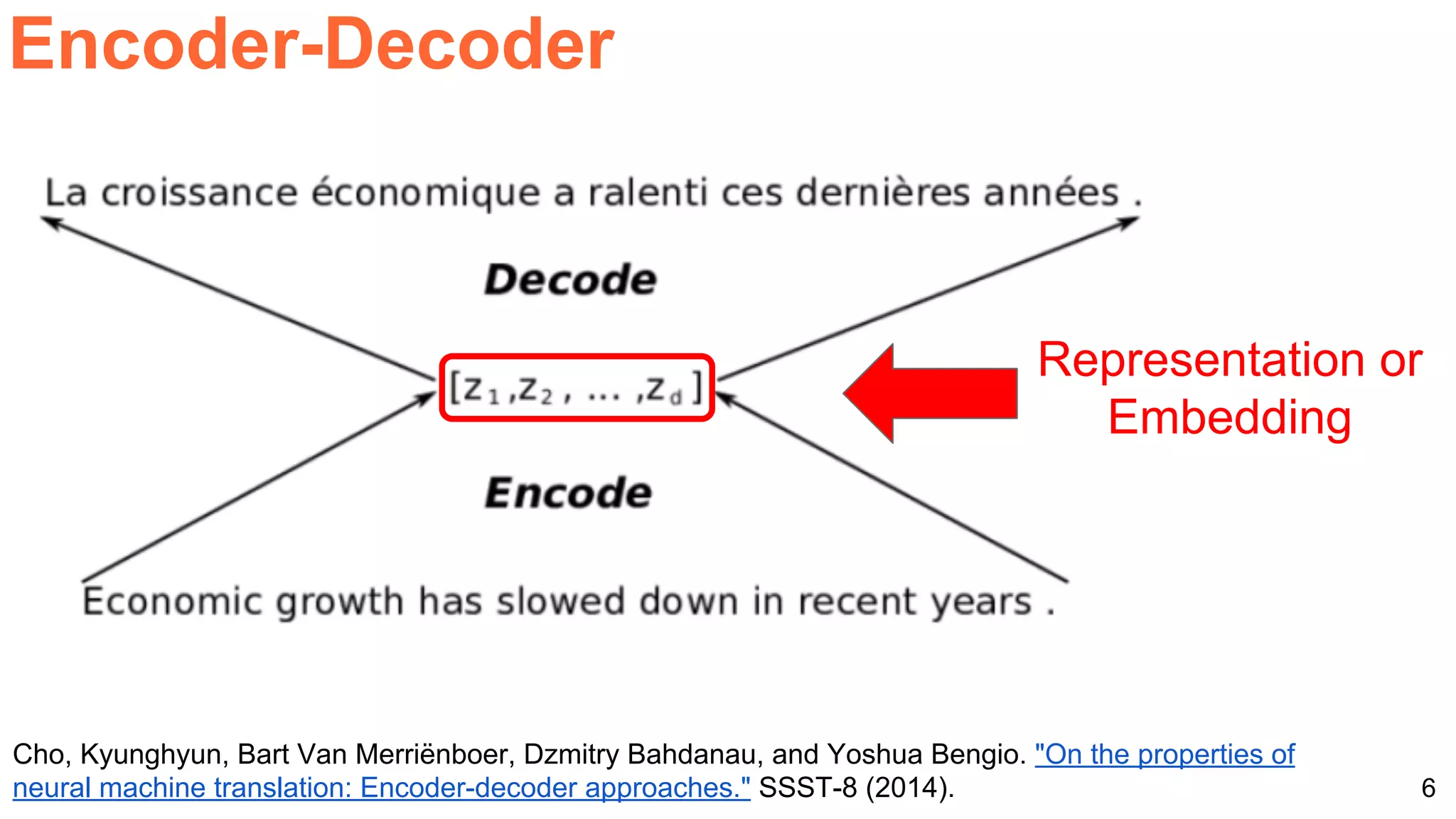

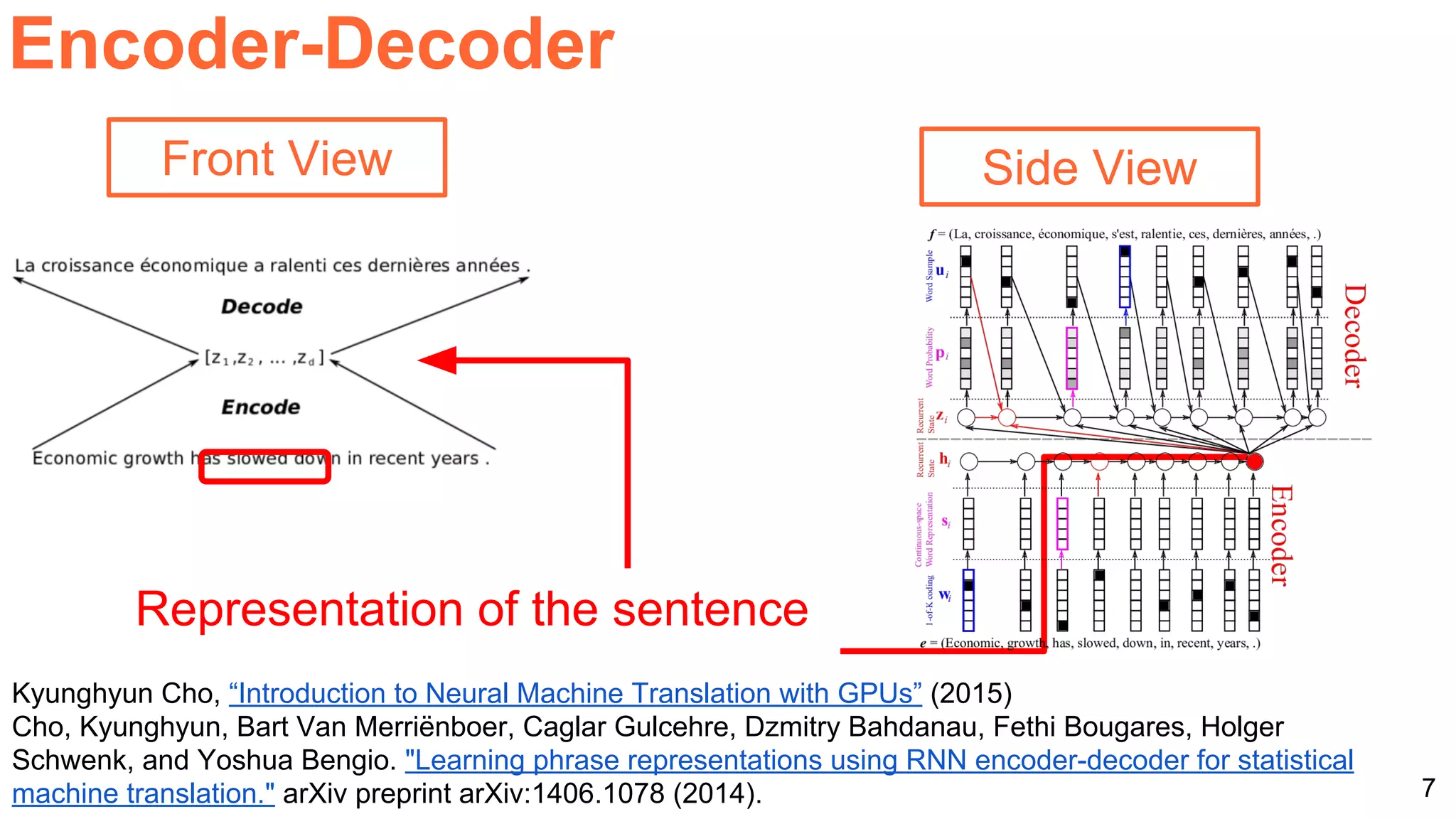

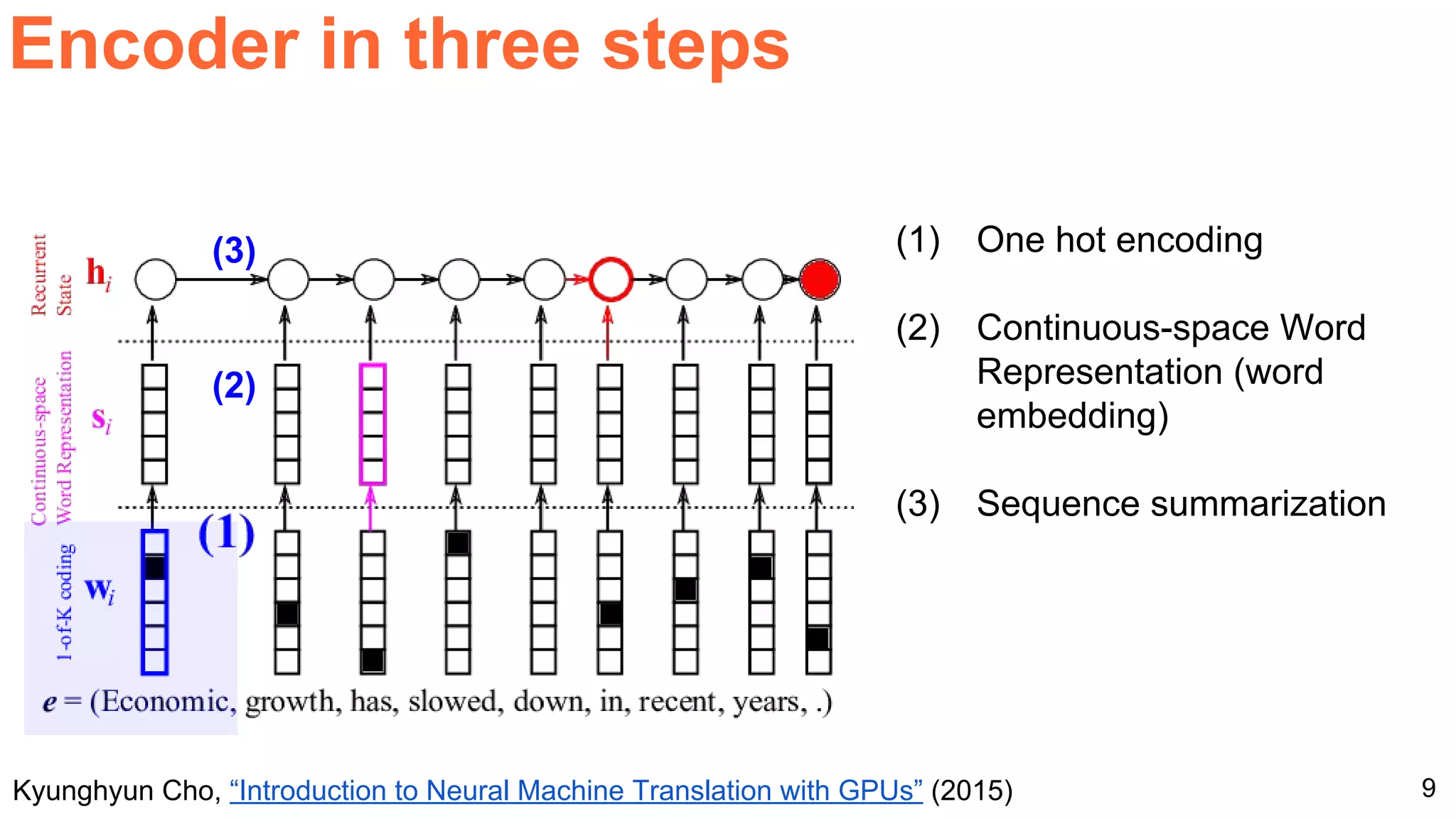

This deck is from a workshop presentation on neural machine translation at Dublin City University, focusing on the encoder-decoder model and its components. It walks through the stages that turn an input sequence into an output sequence: one-hot encoding, word embeddings, and recurrent structures in neural networks. It emphasizes sequence-to-sequence learning, including the use of input triggers and the way each predicted output is fed back as the next input.

![12

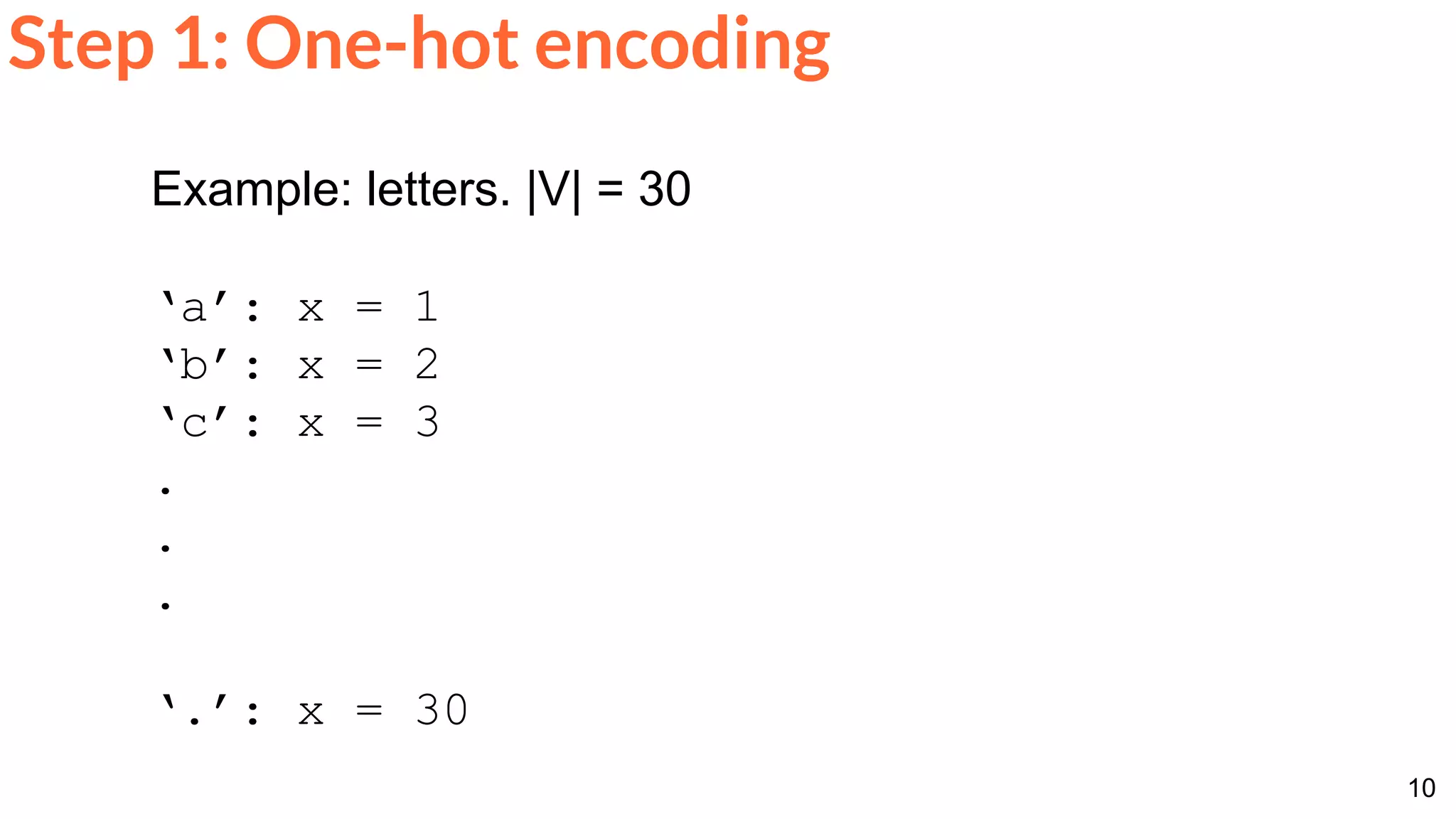

Step 1: One-hot encoding

Example: letters. |V| = 30

‘a’: xT

= [1,0,0, ..., 0]

‘b’: xT

= [0,1,0, ..., 0]

‘c’: xT

= [0,0,1, ..., 0]

.

.

.

‘.’: xT

= [0,0,0, ..., 1]](https://image.slidesharecdn.com/dlmmdcud2l10neuralmachinetranslation-170429103826/75/Neural-Machine-Translation-D2L10-Insight-DCU-Machine-Learning-Workshop-2017-12-2048.jpg)
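The letter-level one-hot scheme on this slide can be sketched in a few lines of Python. This is a minimal illustration, not the presenter's code. The slide only fixes $|V| = 30$, with ‘a’ first and ‘.’ last, so the four non-letter symbols chosen here (space, comma, apostrophe, period) are an assumption, as are the names `VOCAB`, `INDEX`, and `one_hot`.

```python
import string

# Assumed 30-symbol alphabet: 26 lowercase letters plus 4 extra symbols.
# The slide only states |V| = 30 with 'a' first and '.' last; the other
# three non-letter symbols are a guess made for illustration.
VOCAB = list(string.ascii_lowercase) + [" ", ",", "'", "."]
INDEX = {symbol: i for i, symbol in enumerate(VOCAB)}


def one_hot(symbol):
    """Return a |V|-dimensional vector with a single 1 at the symbol's index."""
    vec = [0] * len(VOCAB)
    vec[INDEX[symbol]] = 1
    return vec


print(len(VOCAB))         # 30
print(one_hot("c")[:4])   # [0, 0, 1, 0]
print(one_hot(".")[-1])   # 1
```

Each vector contains exactly one nonzero entry, so storing the integer index alone carries the same information as the full vector.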

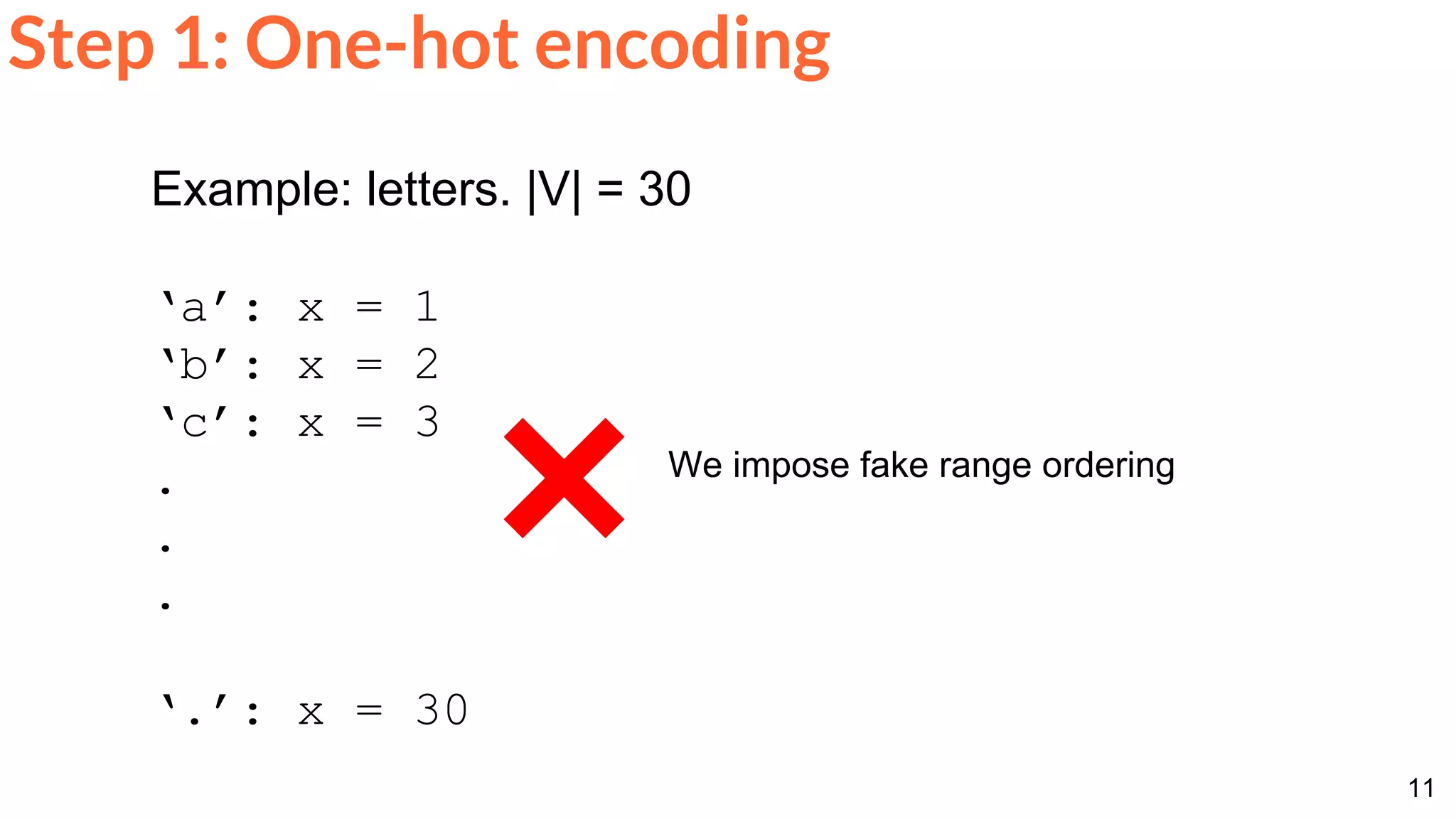

![Slide 13](https://image.slidesharecdn.com/dlmmdcud2l10neuralmachinetranslation-170429103826/75/Neural-Machine-Translation-D2L10-Insight-DCU-Machine-Learning-Workshop-2017-13-2048.jpg)

Step 1: One-hot encoding

Example: words.

cat: $x^T = [1, 0, 0, \ldots, 0]$

dog: $x^T = [0, 1, 0, \ldots, 0]$

$\vdots$

house: $x^T = [0, 0, 0, \ldots, 0, 1, 0, \ldots, 0]$

$\vdots$

Number of words, $|V|$?

B2: 5K

C2: 18K

LVSR: 50-100K

Wikipedia (1.6B): 400K

Crawl data (42B): 2M
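To make the word-level case concrete, the sketch below builds a vocabulary from a toy token list and then estimates how many entries a dense one-hot matrix would need at the vocabulary sizes quoted on the slide. The toy sentence and the names `build_vocab`, `one_hot`, `VOCAB_SIZES`, and `dense_entries` are hypothetical and chosen only for illustration. The LVSR range (50-100K) is left out of the table because it is not a single value.

```python
def build_vocab(tokens):
    """Map each distinct word to an index, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def one_hot(word, vocab):
    """Return a |V|-dimensional vector with a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[vocab[word]] = 1
    return vec


# Toy corpus (hypothetical); a real vocabulary has thousands to millions of words.
tokens = "cat dog sees cat near house".split()
vocab = build_vocab(tokens)

print(one_hot("cat", vocab))    # [1, 0, 0, 0, 0]
print(one_hot("house", vocab))  # [0, 0, 0, 0, 1]

# Vocabulary sizes quoted on the slide.
VOCAB_SIZES = {
    "B2": 5_000,
    "C2": 18_000,
    "Wikipedia (1.6B)": 400_000,
    "Crawl data (42B)": 2_000_000,
}


def dense_entries(num_words, vocab_size):
    """Number of entries in a dense (num_words x vocab_size) one-hot matrix."""
    return num_words * vocab_size


for name, size in VOCAB_SIZES.items():
    print(name, dense_entries(10, size))
```

At $|V| = 2\text{M}$, a ten-word sentence already needs 20 million entries when stored densely, which is why implementations usually keep only the word indices.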