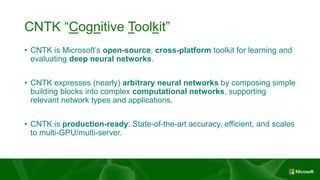

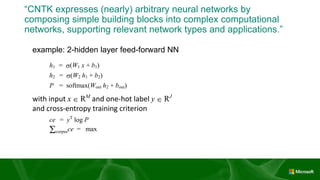

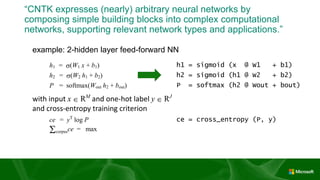

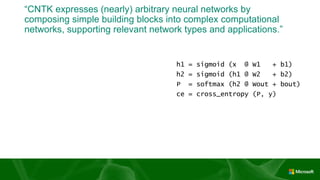

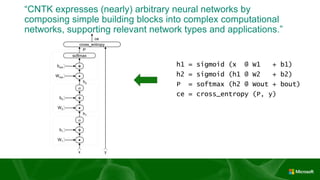

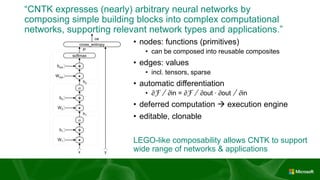

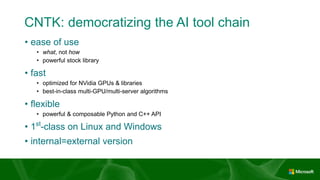

The Microsoft Cognitive Toolkit (CNTK) is Microsoft's open-source deep learning toolkit. It expresses neural networks as computational graphs composed of simple building blocks, supporting various network types and applications. CNTK is production-ready with state-of-the-art accuracy, efficiency, and scalability to multiple GPUs and servers.
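To make the "computational graph of simple building blocks" idea concrete, here is a minimal sketch in plain Python. It does not use the CNTK API; the names `Node`, `times`, `plus`, `sigmoid`, and `backward` are hypothetical, chosen only for illustration. Each building block records its inputs and a local gradient rule, and gradients are obtained by walking the graph in reverse.

```python
import math

class Node:
    """One vertex of the graph: a value, its input nodes, and local gradient rules."""
    def __init__(self, value, parents=(), grad_fns=()):
        self.value = value
        self.parents = parents
        self.grad_fns = grad_fns  # one function per parent: upstream grad -> parent grad
        self.grad = 0.0

def times(a, b):
    return Node(a.value * b.value, (a, b),
                (lambda g: g * b.value, lambda g: g * a.value))

def plus(a, b):
    return Node(a.value + b.value, (a, b), (lambda g: g, lambda g: g))

def sigmoid(a):
    s = 1.0 / (1.0 + math.exp(-a.value))
    return Node(s, (a,), (lambda g: g * s * (1.0 - s),))

def backward(output):
    """Accumulate d(output)/d(node) into node.grad for every node in the graph."""
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent in node.parents:
            visit(parent)
        order.append(node)  # parents are appended before their consumers

    visit(output)
    output.grad = 1.0
    for node in reversed(order):  # consumers are processed before their inputs
        for parent, grad_fn in zip(node.parents, node.grad_fns):
            parent.grad += grad_fn(node.grad)

# A single "neuron", y = sigmoid(w * x + b), composed from three building blocks.
x, w, b = Node(2.0), Node(0.5), Node(-1.0)
y = sigmoid(plus(times(w, x), b))
backward(y)
```

A real toolkit works on tensors and compiles the graph for GPUs, but the composition pattern is the same: larger networks are built by nesting small differentiable blocks.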

![Microsoft’s historic speech breakthrough](https://image.slidesharecdn.com/20161130cognitivetoolkit-161130233503/85/html5j-7-Microsoft-Cognitive-Toolkit-CNTK-Overview-11-320.jpg)

Microsoft’s historic speech breakthrough

- Microsoft 2016 research system for conversational speech recognition
- 5.9% word-error rate
- enabled by CNTK’s multi-server scalability

[W. Xiong, J. Droppo, X. Huang, F. Seide, M. Seltzer, A. Stolcke, D. Yu, G. Zweig: “Achieving Human Parity in Conversational Speech Recognition,” https://arxiv.org/abs/1610.05256]
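For readers unfamiliar with the metric behind the 5.9% figure, word-error rate is commonly defined as the number of word substitutions, deletions, and insertions needed to turn the recognizer output into the reference transcript, divided by the number of reference words. The sketch below is a generic illustration of that definition, not the scoring tool used in the cited paper; `word_error_rate` and the example sentences are made up for this purpose.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            substitution_cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion of a reference word
                curr[j - 1] + 1,                  # insertion of an extra word
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)

# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
```

Here `wer` is 2/6, roughly 33%; a 5.9% rate corresponds to about one error in every seventeen reference words.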

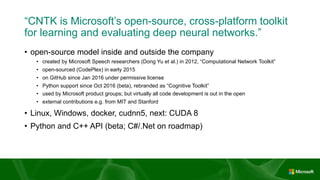

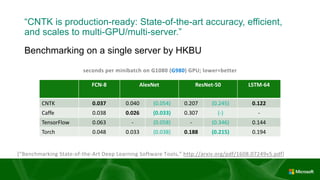

![CNTK speed comparison with Theano, TensorFlow, Torch 7, and Caffe](https://image.slidesharecdn.com/20161130cognitivetoolkit-161130233503/85/html5j-7-Microsoft-Cognitive-Toolkit-CNTK-Overview-26-320.jpg)

“CNTK is production-ready: State-of-the-art accuracy, efficient, and scales to multi-GPU/multi-server.”

Figure: speed comparison (samples/second), higher = better, for CNTK, Theano, TensorFlow, Torch 7, and Caffe on 1 GPU, 1 x 4 GPUs, and 2 x 4 GPUs (8 GPUs); vertical axis from 0 to 80000 [note: December 2015].

- Theano only supports 1 GPU
- Achieved with 1-bit gradient quantization algorithm
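The slide names a 1-bit gradient quantization algorithm without describing it. As a rough sketch of the general idea, assuming the commonly described variant with error feedback, each worker sends one bit per gradient element plus two reconstruction values, and carries the quantization error over to the next minibatch. The function names, the use of per-group means as reconstruction values, and the sample numbers are assumptions of this illustration; CNTK's actual implementation may differ.

```python
def one_bit_quantize(gradient, residual):
    """Quantize (gradient + residual) to one bit per element.

    Returns the bits, the two reconstruction values, and the new residual
    (the quantization error to be added to the next gradient).
    """
    corrected = [g + r for g, r in zip(gradient, residual)]
    bits = [c >= 0.0 for c in corrected]

    positives = [c for c, bit in zip(corrected, bits) if bit]
    negatives = [c for c, bit in zip(corrected, bits) if not bit]
    # Assumption: each group is reconstructed as its own mean.
    pos_value = sum(positives) / len(positives) if positives else 0.0
    neg_value = sum(negatives) / len(negatives) if negatives else 0.0

    reconstructed = dequantize(bits, pos_value, neg_value)
    new_residual = [c - q for c, q in zip(corrected, reconstructed)]
    return bits, pos_value, neg_value, new_residual

def dequantize(bits, pos_value, neg_value):
    """Rebuild an approximate gradient from the bits and two reconstruction values."""
    return [pos_value if bit else neg_value for bit in bits]

# Illustrative numbers only.
gradient = [0.4, -0.2, 0.1, -0.6]
residual = [0.0, 0.0, 0.0, 0.0]
bits, pos_value, neg_value, residual = one_bit_quantize(gradient, residual)
approx = dequantize(bits, pos_value, neg_value)
```

Only `bits`, `pos_value`, and `neg_value` would need to cross the network, which is what reduces communication between GPUs and servers; the residual stays local so that the error is not lost, only delayed.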

![[html5j Robotics Group, 7th study session] Microsoft Cognitive Toolkit (CNTK) Overview](https://image.slidesharecdn.com/20161130cognitivetoolkit-161130233503/85/html5j-7-Microsoft-Cognitive-Toolkit-CNTK-Overview-32-320.jpg)

![[html5j Robotics Group, 7th study session] Microsoft Cognitive Toolkit (CNTK) Overview](https://image.slidesharecdn.com/20161130cognitivetoolkit-161130233503/85/html5j-7-Microsoft-Cognitive-Toolkit-CNTK-Overview-33-320.jpg)