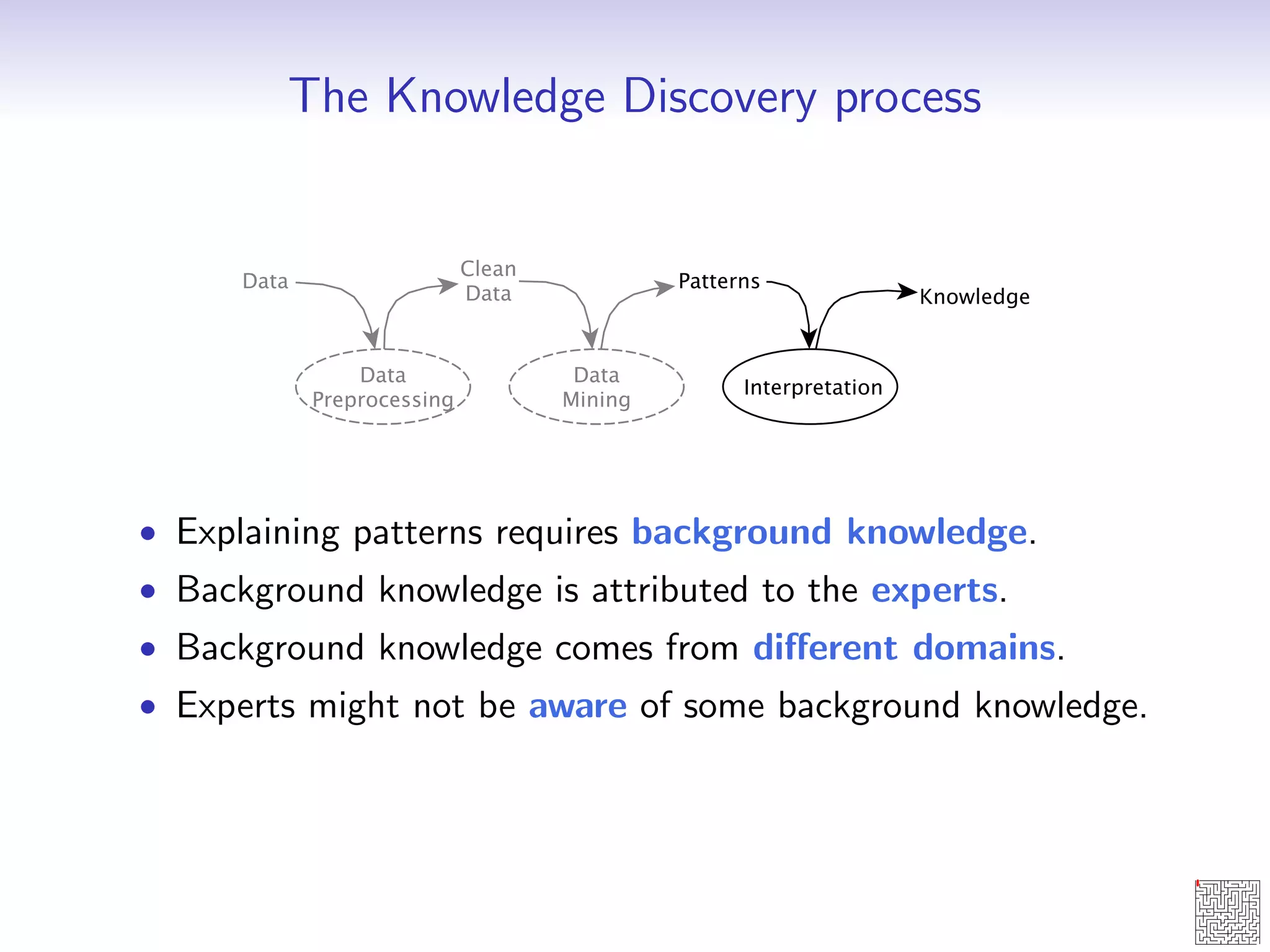

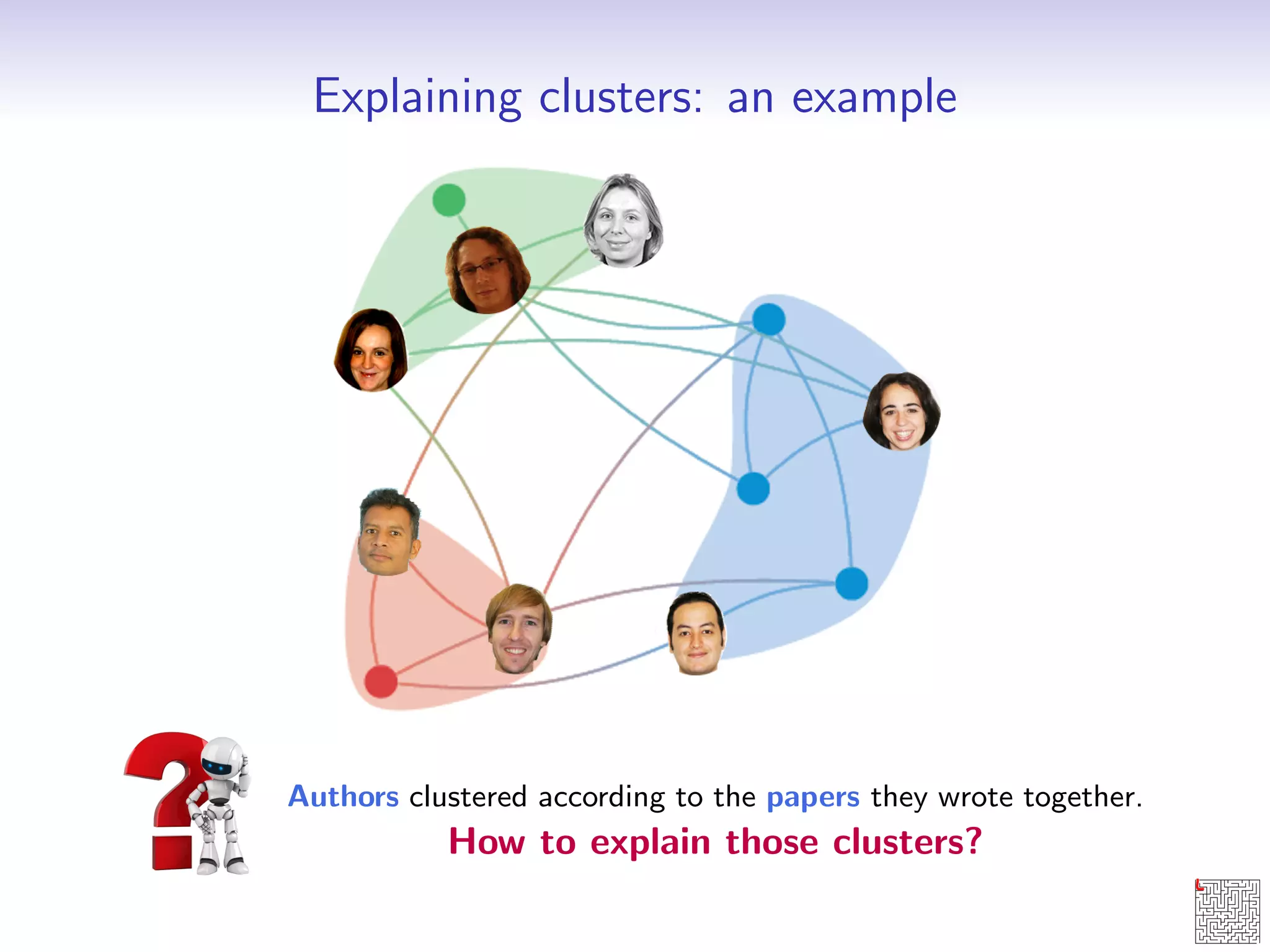

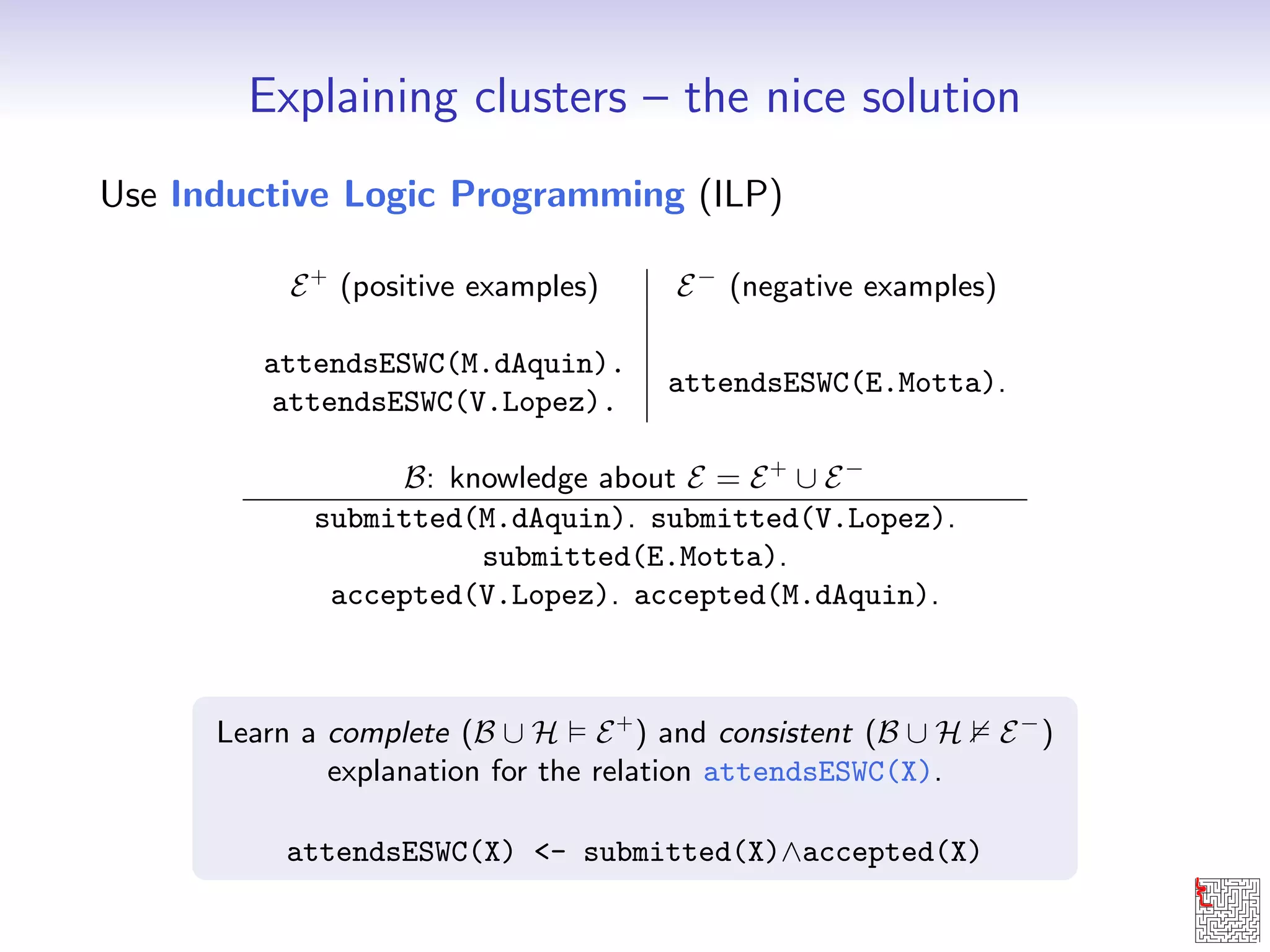

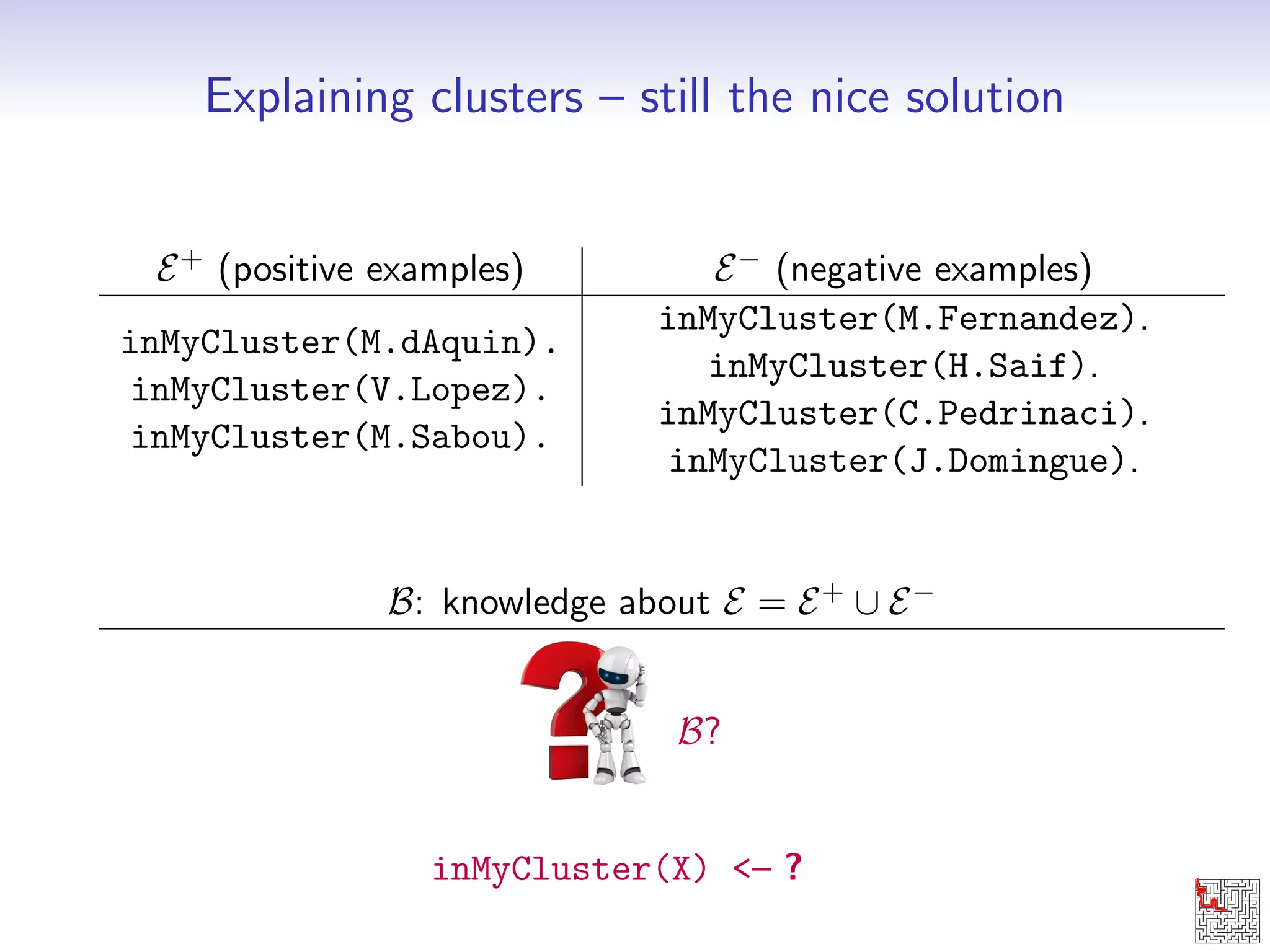

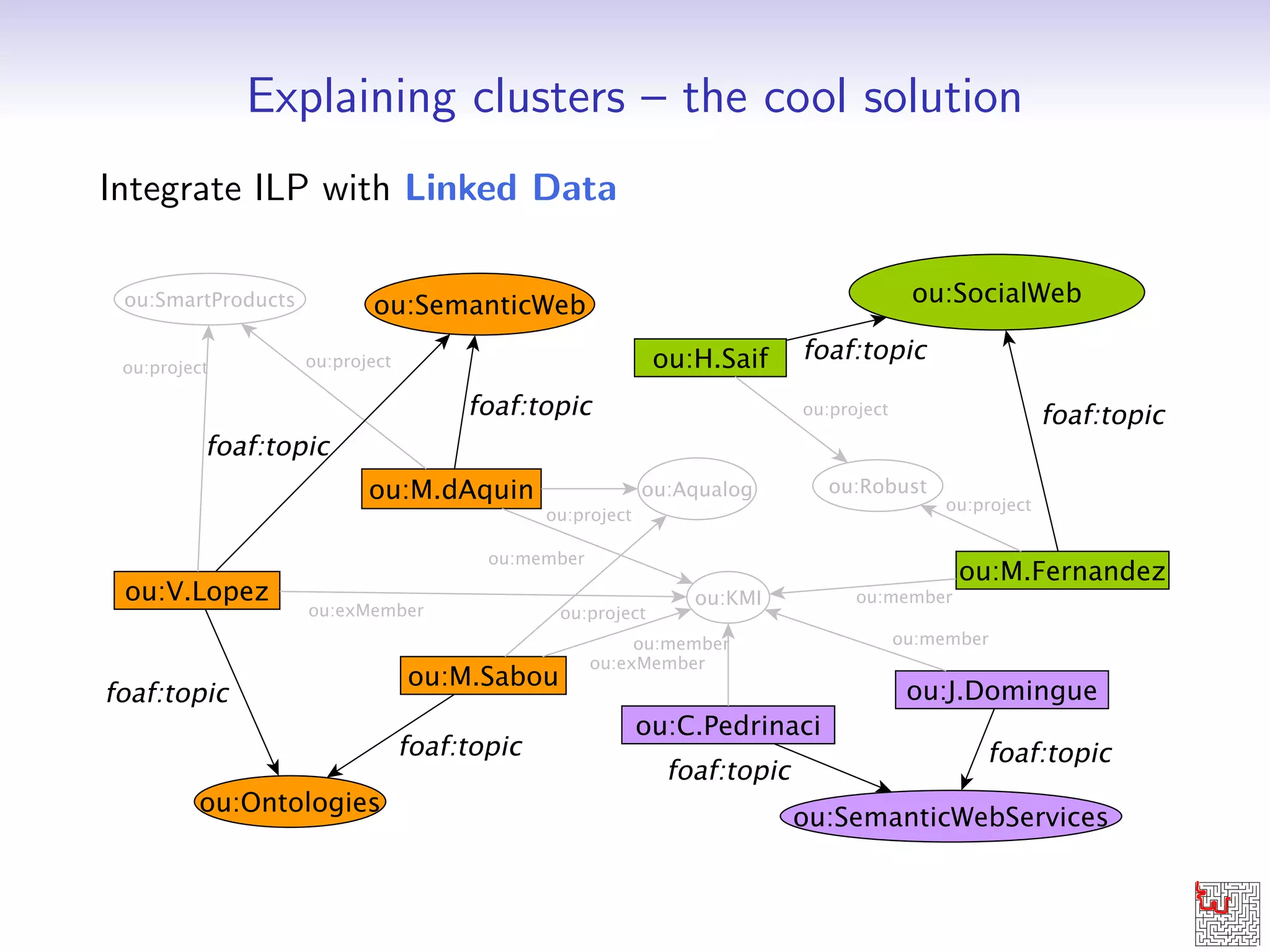

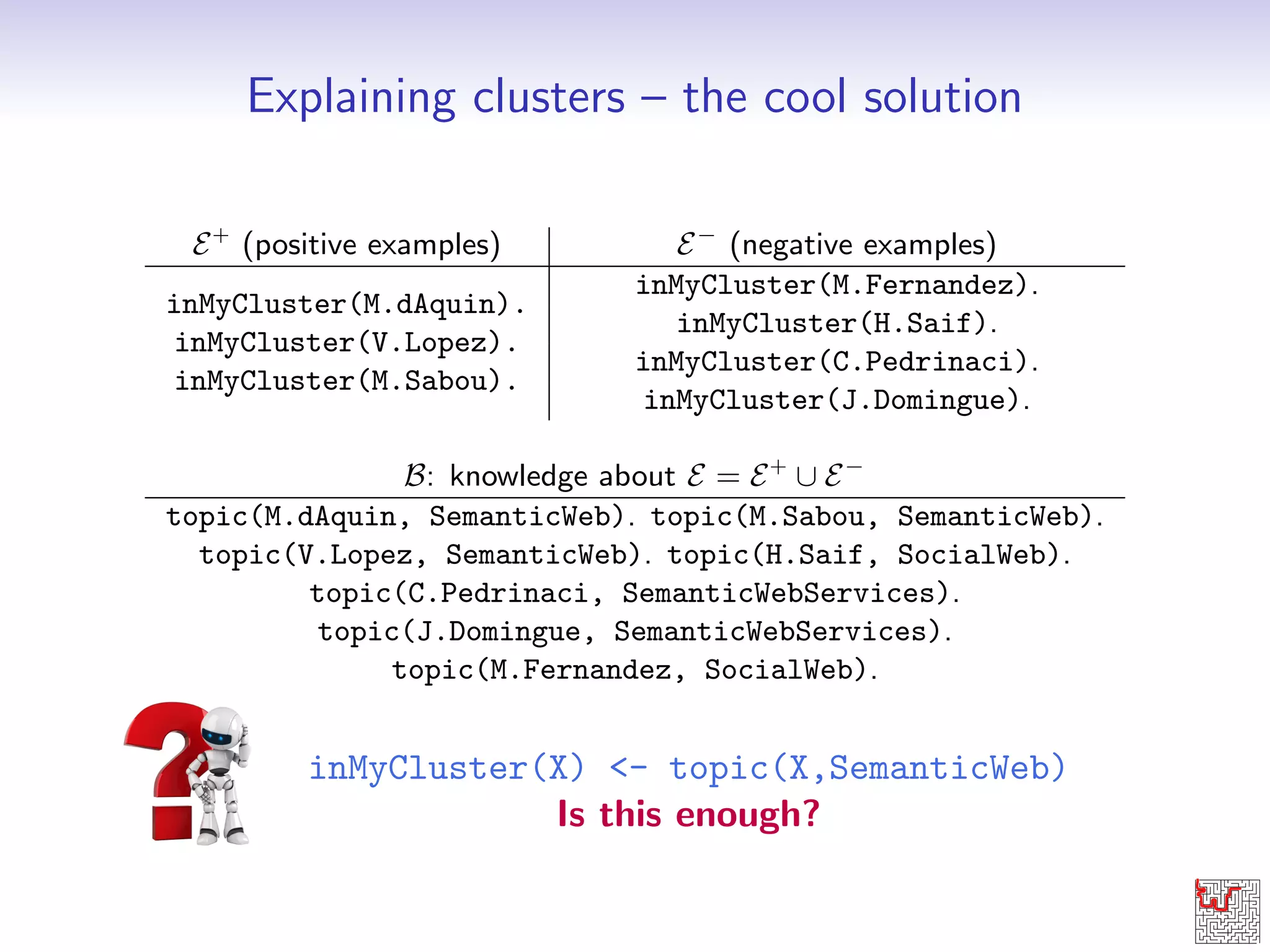

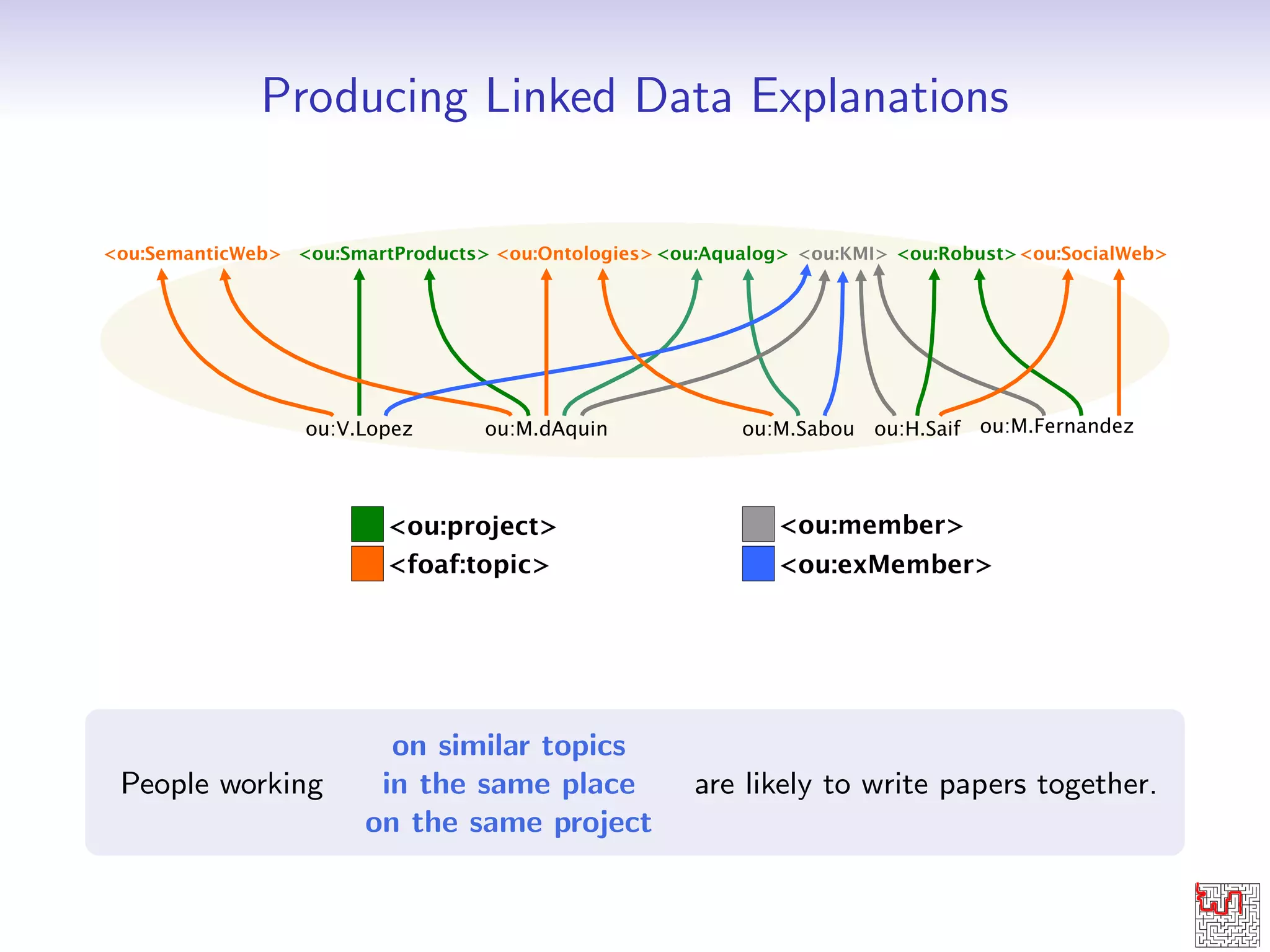

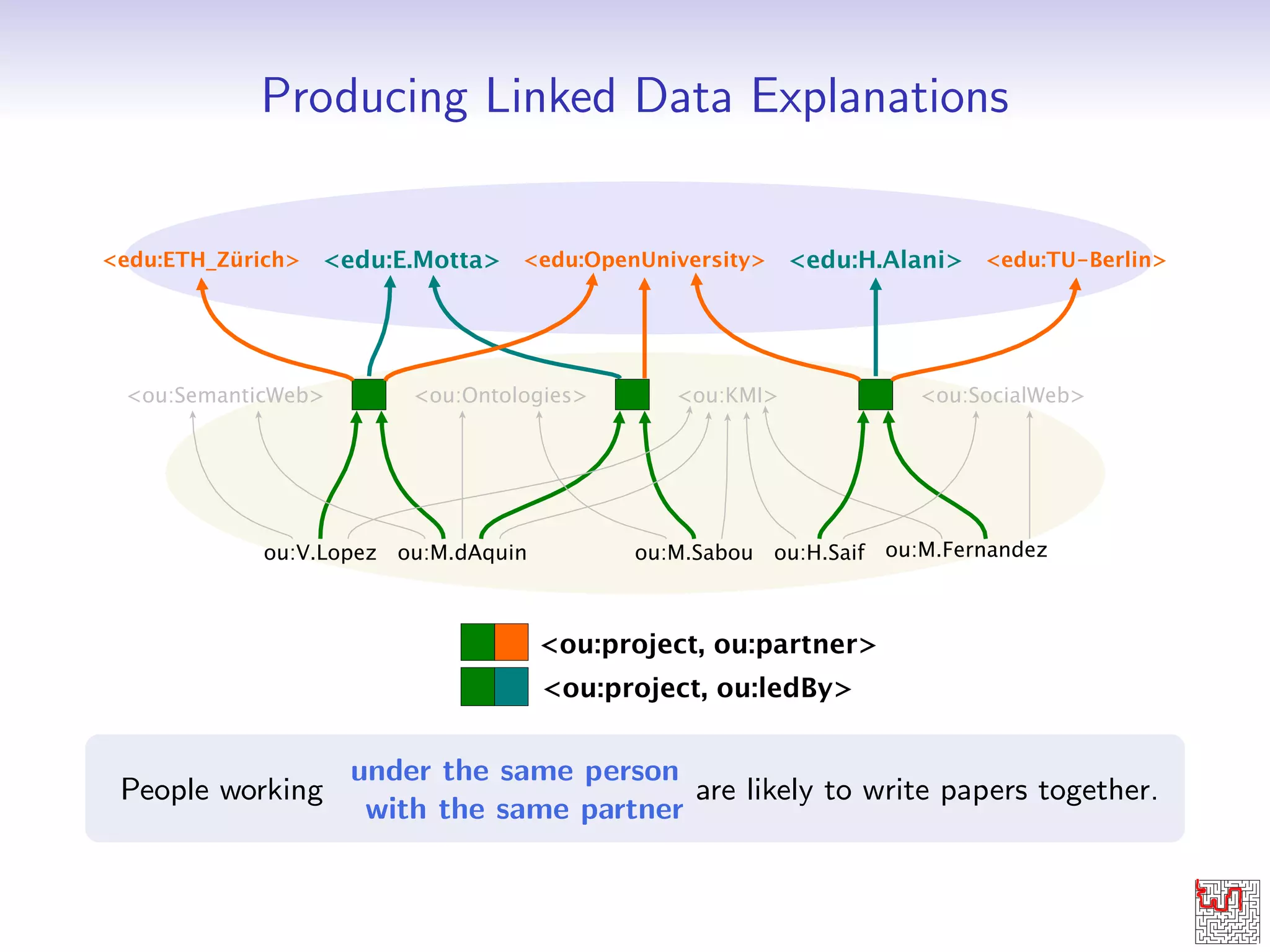

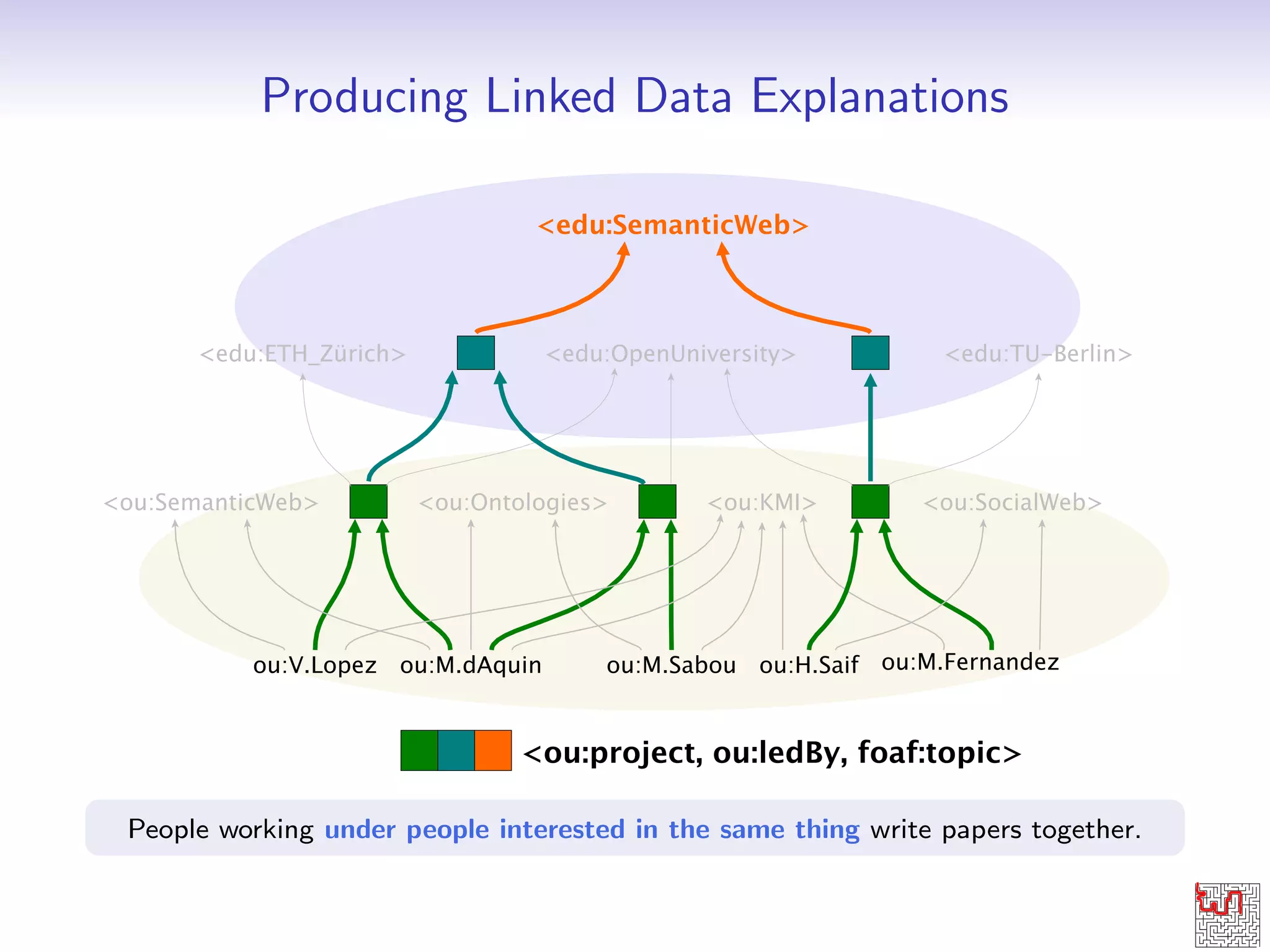

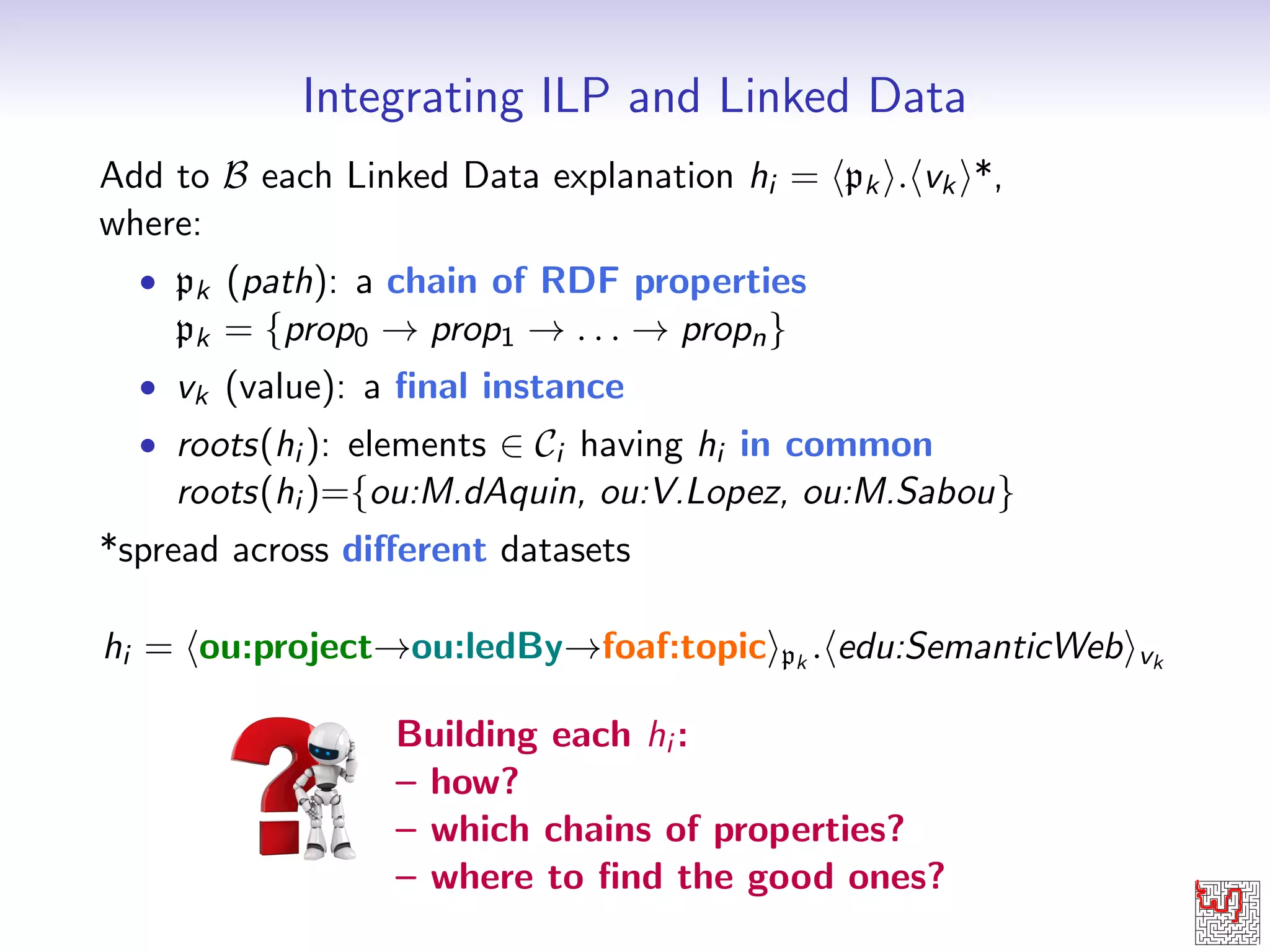

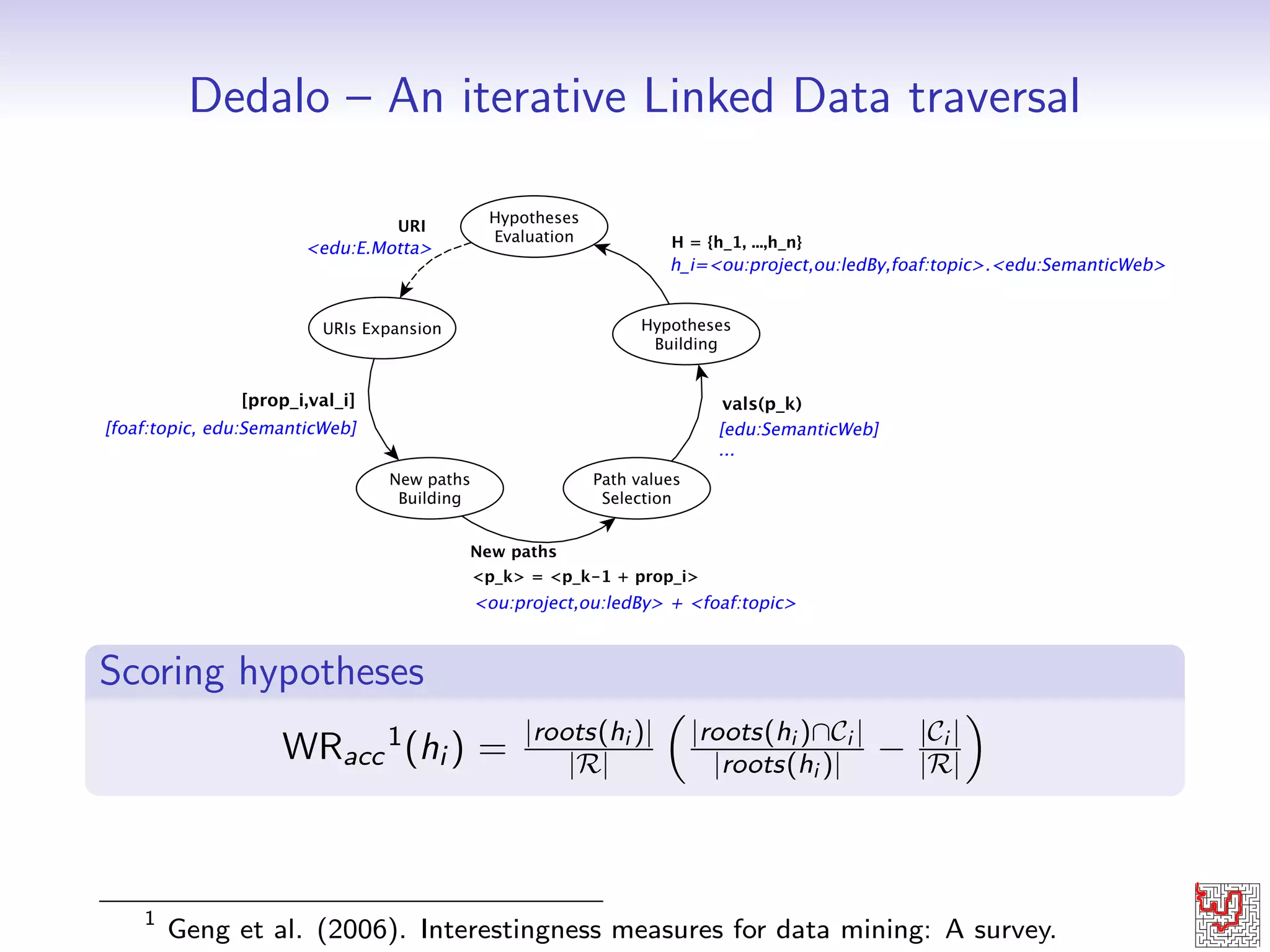

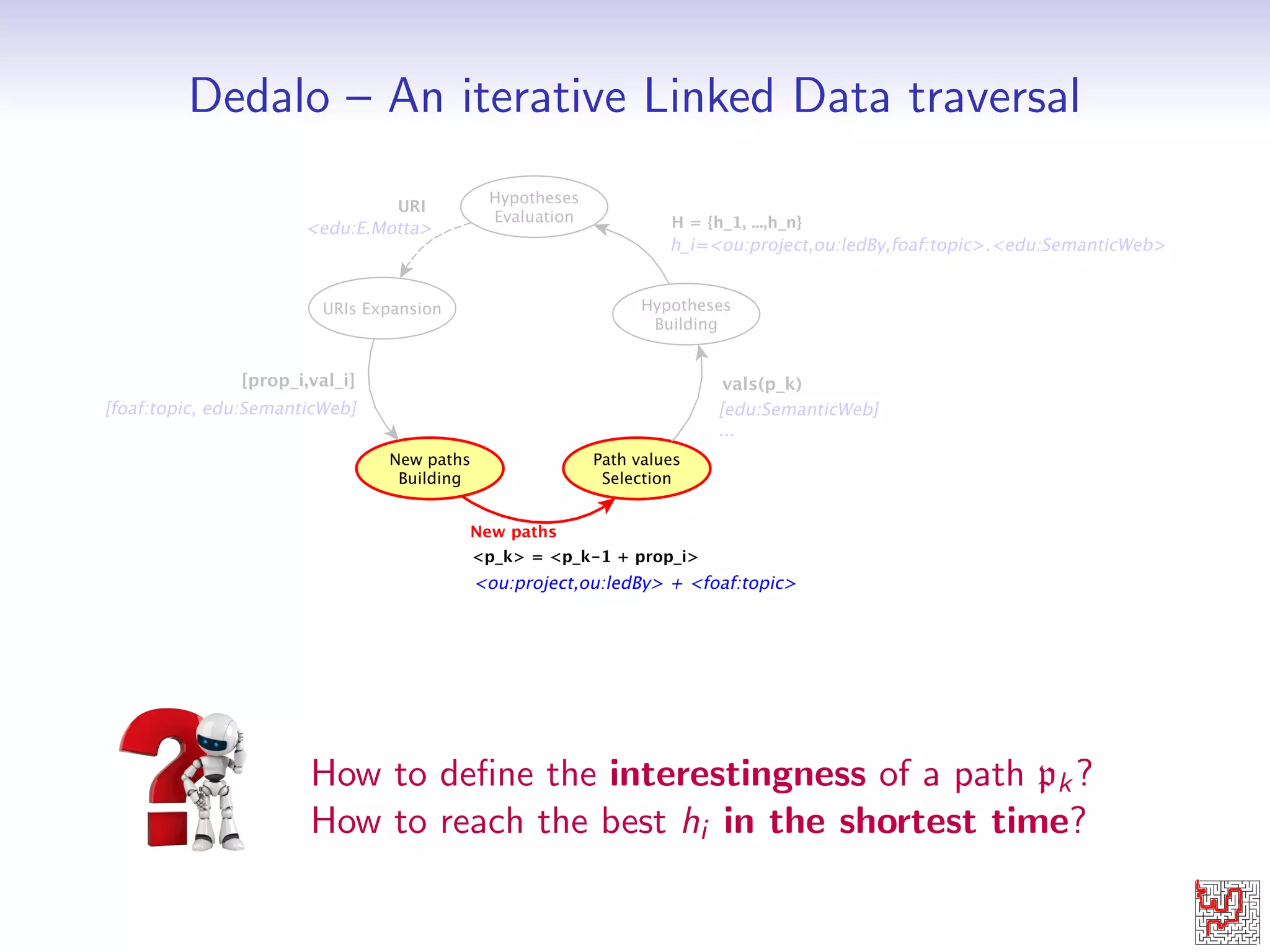

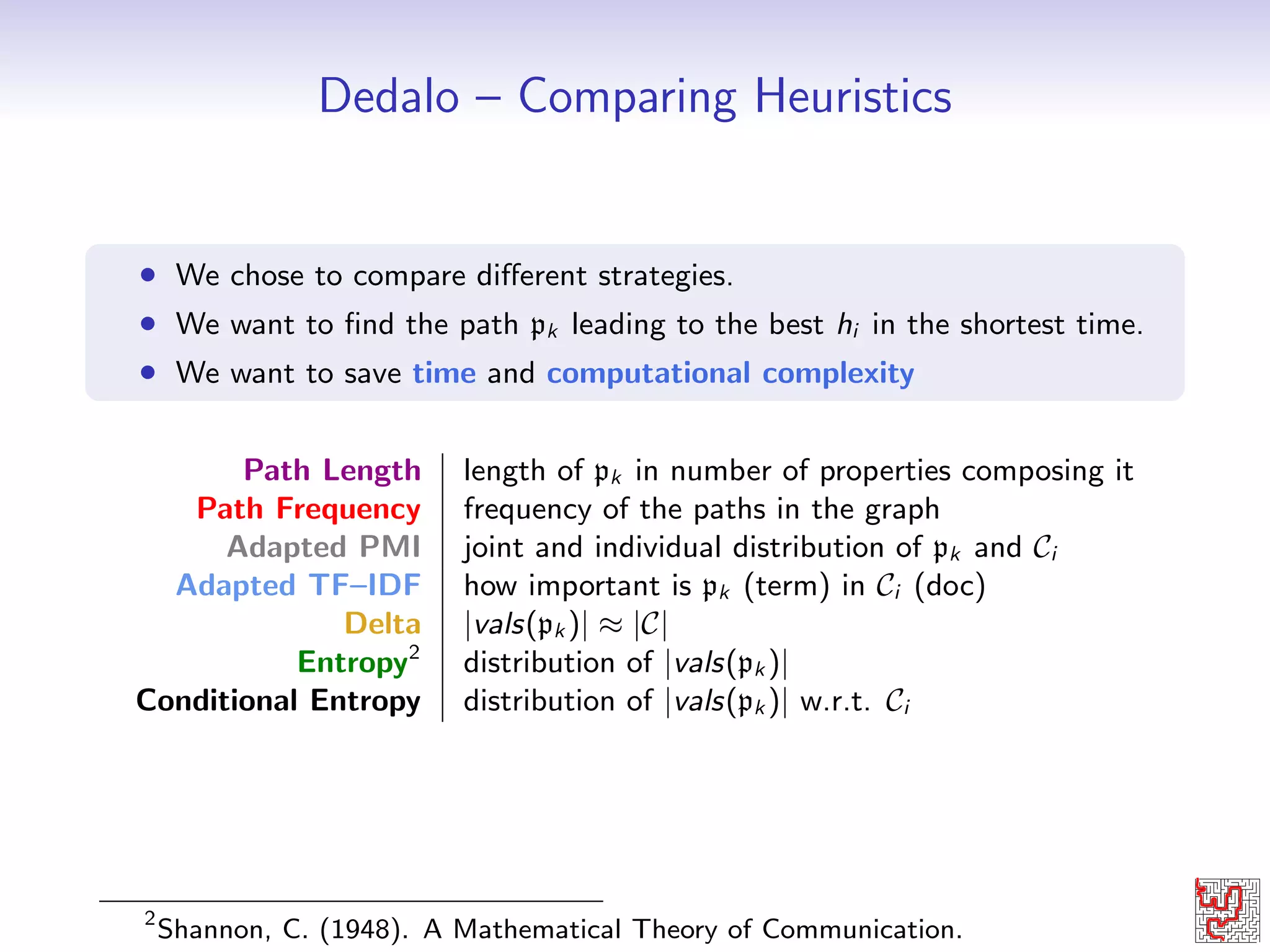

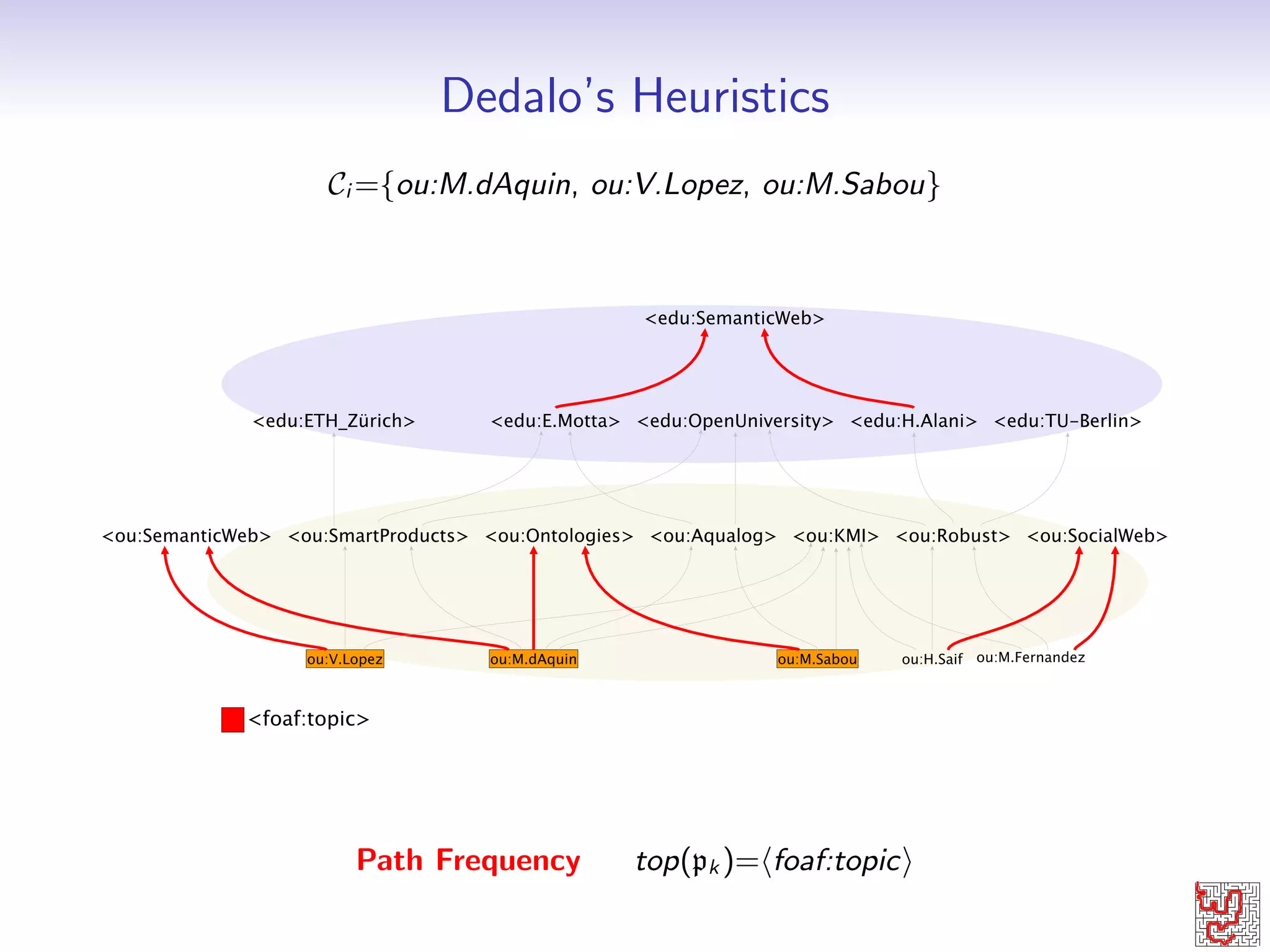

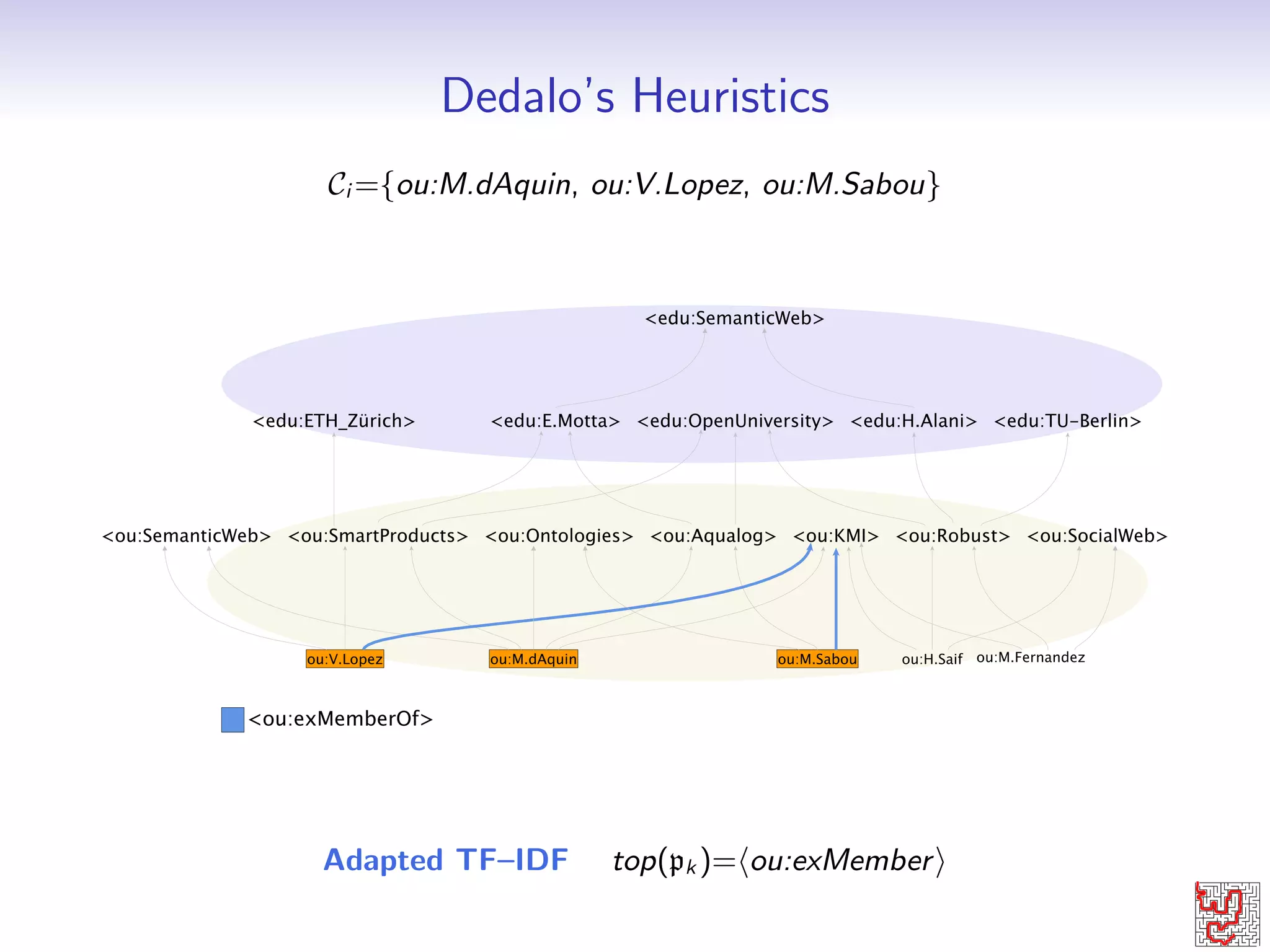

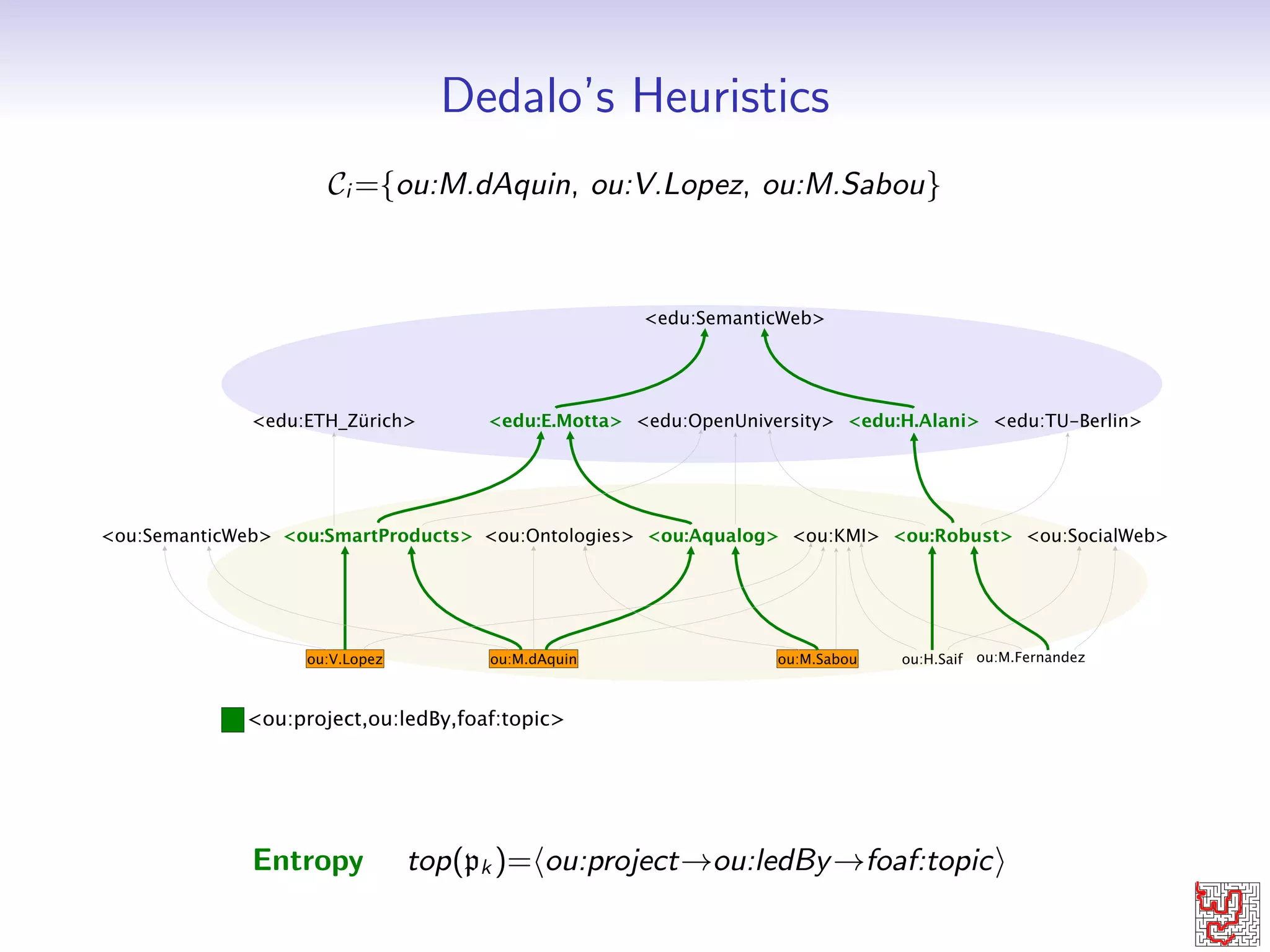

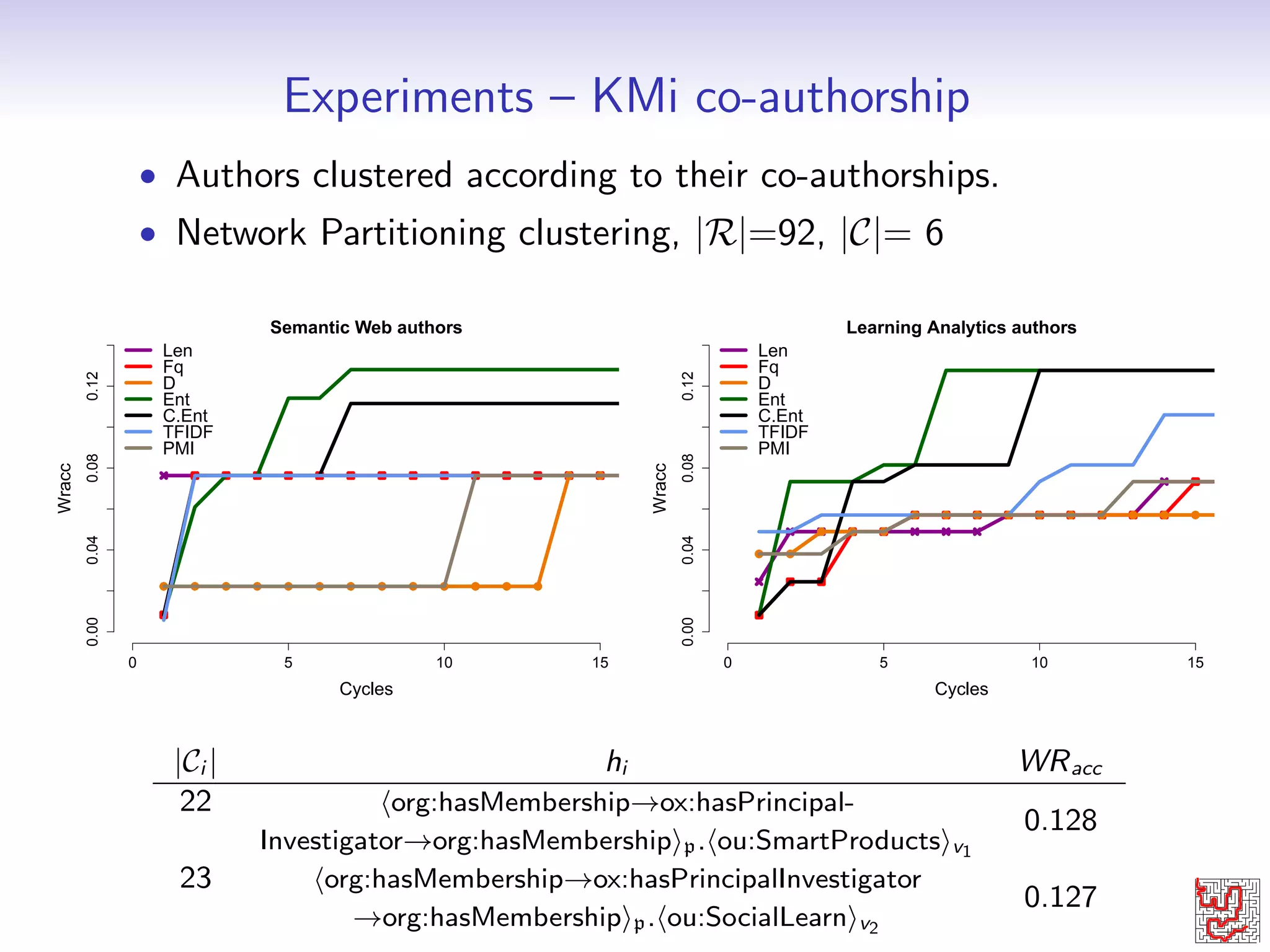

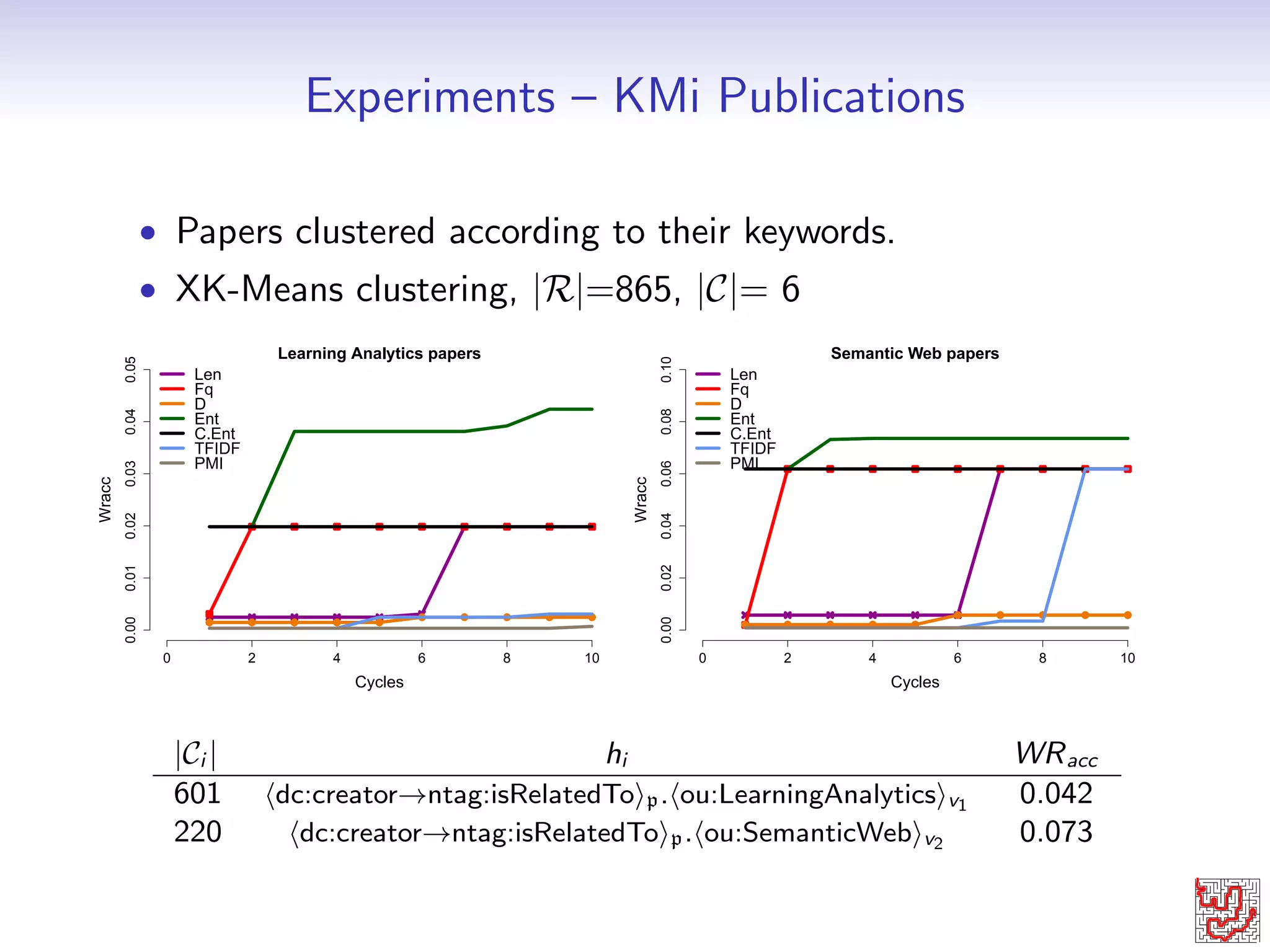

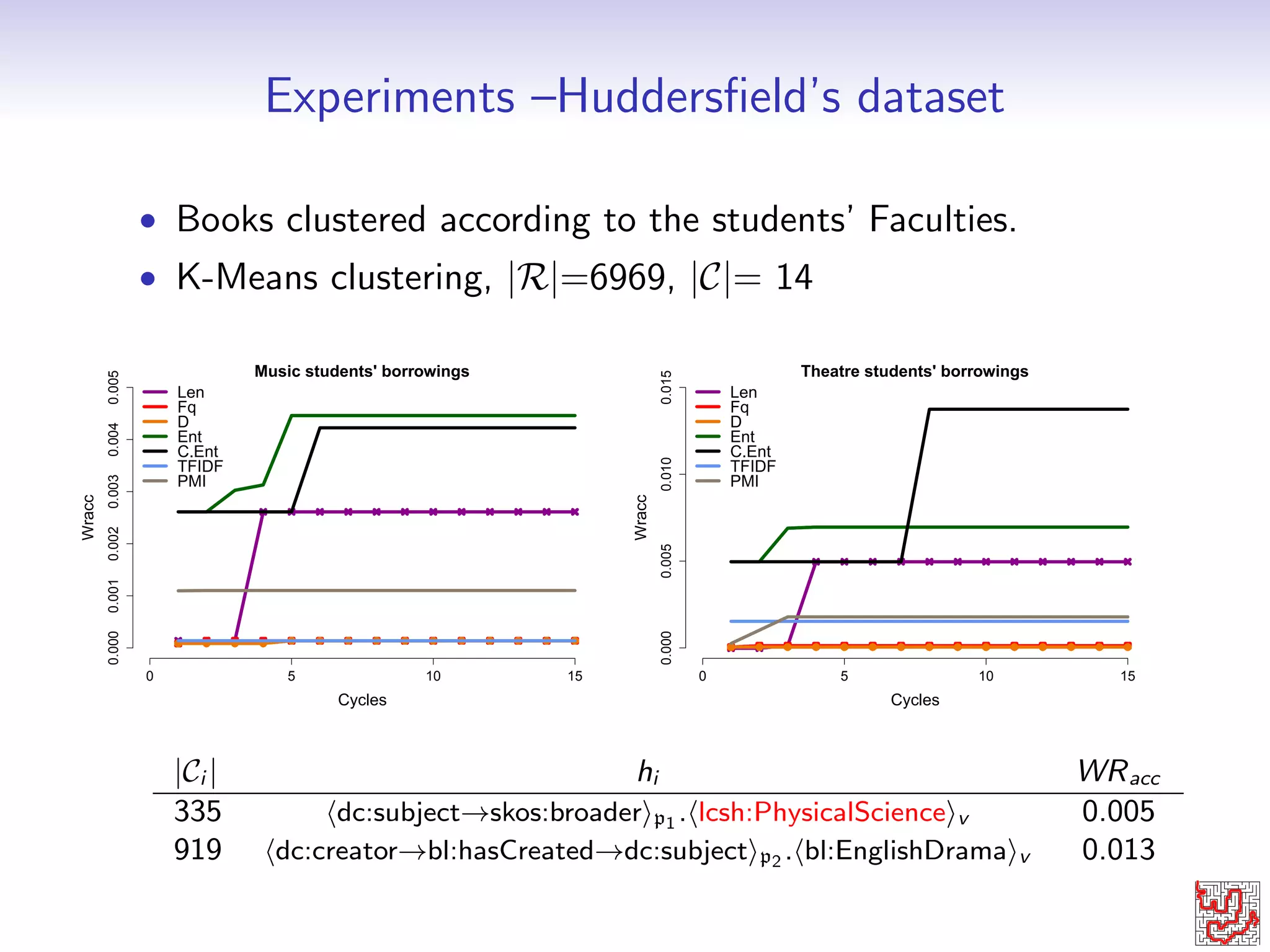

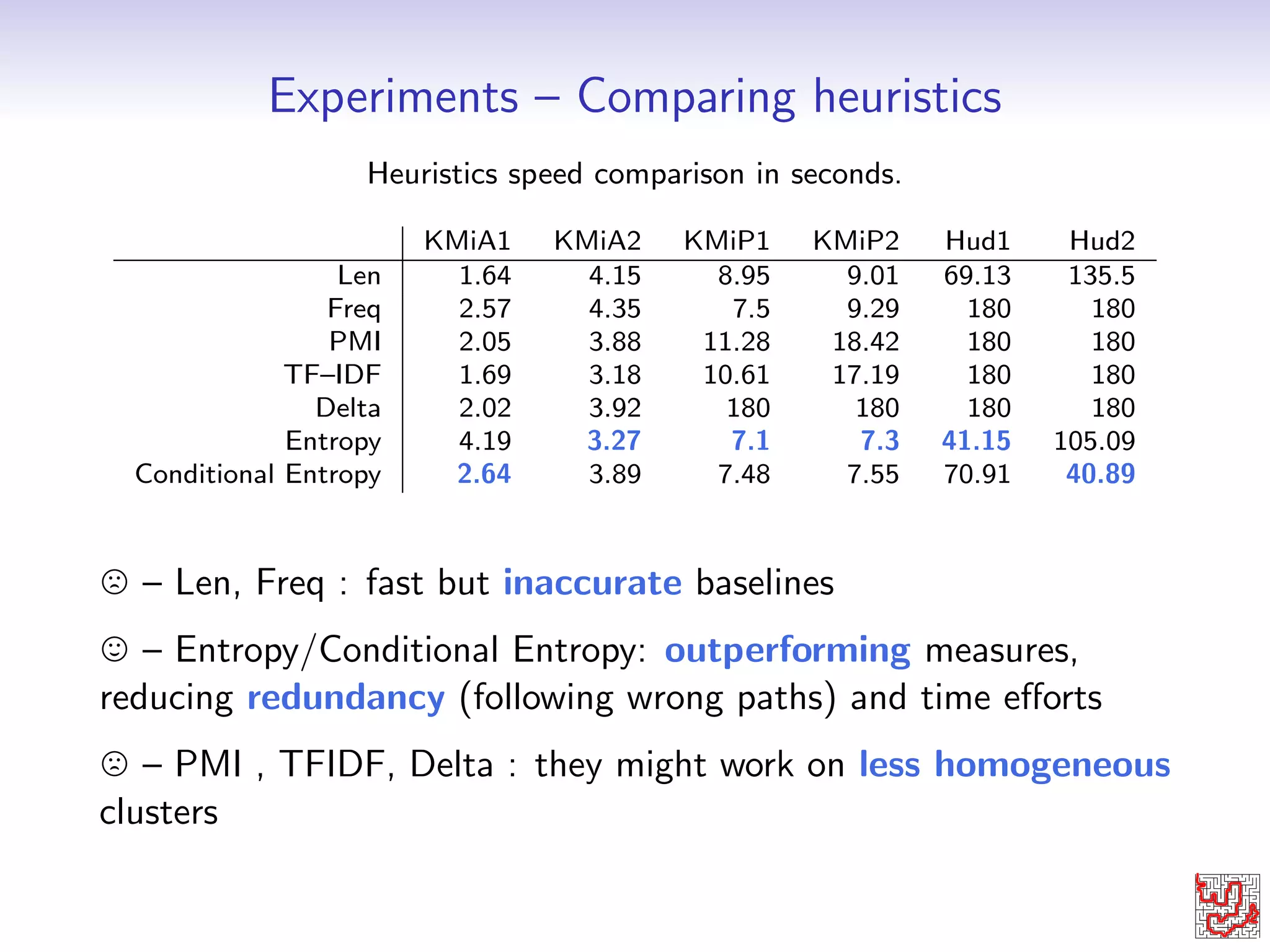

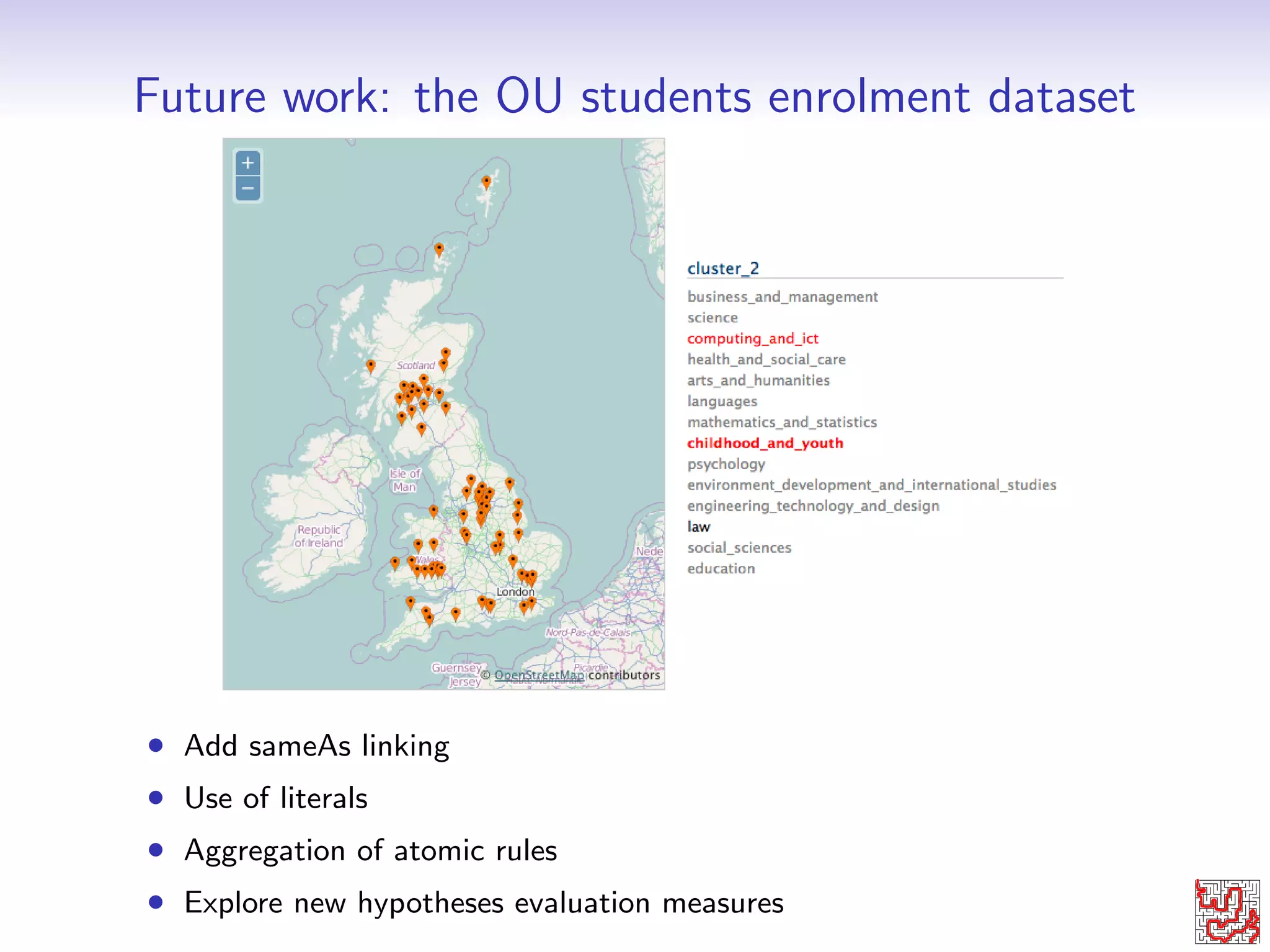

The document describes Dedalo, a system that automatically explains data clusters by traversing Linked Data to find explanations. It evaluates several heuristics for guiding the traversal and finds that entropy and conditional entropy outperform the other measures because they reduce redundancy and search time. Experiments on authorship clusters, publication clusters, and library book borrowings show that Dedalo can discover explanatory Linked Data patterns within a limited domain. Future work includes extending Dedalo to more complex datasets by addressing issues such as sameAs links and the handling of literals.
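The summary does not give Dedalo's exact scoring formulas, so the following is only a minimal sketch of how entropy-style heuristics can rank candidate traversal paths. It assumes three things: each candidate path maps every item to exactly one value, each item carries an in-cluster/out-of-cluster label, and a path is more promising when its values leave less uncertainty about cluster membership (lower conditional entropy H(label | value)). The function names and the `ex:` path identifiers are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def entropy(values: Sequence[Hashable]) -> float:
    """Shannon entropy (in bits) of the empirical distribution of `values`."""
    n = len(values)
    if n == 0:
        return 0.0
    counts = Counter(values)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def conditional_entropy(labels: Sequence[Hashable],
                        values: Sequence[Hashable]) -> float:
    """H(labels | values): label entropy within each value group, weighted by group size."""
    if len(labels) != len(values):
        raise ValueError("labels and values must be aligned item by item")
    n = len(values)
    if n == 0:
        return 0.0
    groups: Dict[Hashable, List[Hashable]] = defaultdict(list)
    for label, value in zip(labels, values):
        groups[value].append(label)
    return sum((len(g) / n) * entropy(g) for g in groups.values())


def rank_paths(paths: Dict[str, Sequence[Hashable]],
               labels: Sequence[Hashable]) -> List[Tuple[str, float]]:
    """Hypothetical ranking: lowest conditional entropy first (ties broken by name)."""
    scored = [(name, conditional_entropy(labels, vals)) for name, vals in paths.items()]
    return sorted(scored, key=lambda pair: (pair[1], pair[0]))


# Toy data: six items, three inside the cluster and three outside it.
labels = ["in", "in", "in", "out", "out", "out"]
paths = {
    "ex:topic": ["AI", "AI", "AI", "Bio", "Bio", "AI"],
    "ex:year": ["2010", "2011", "2010", "2011", "2010", "2011"],
}

for name, score in rank_paths(paths, labels):
    print(f"{name}: {score:.3f}")
```

With this toy data, `ex:topic` scores about 0.541 bits and `ex:year` about 0.918 bits, so the sketch would expand `ex:topic` first. A real traversal would rescore newly reachable paths after each expansion. This toy ranking is static.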