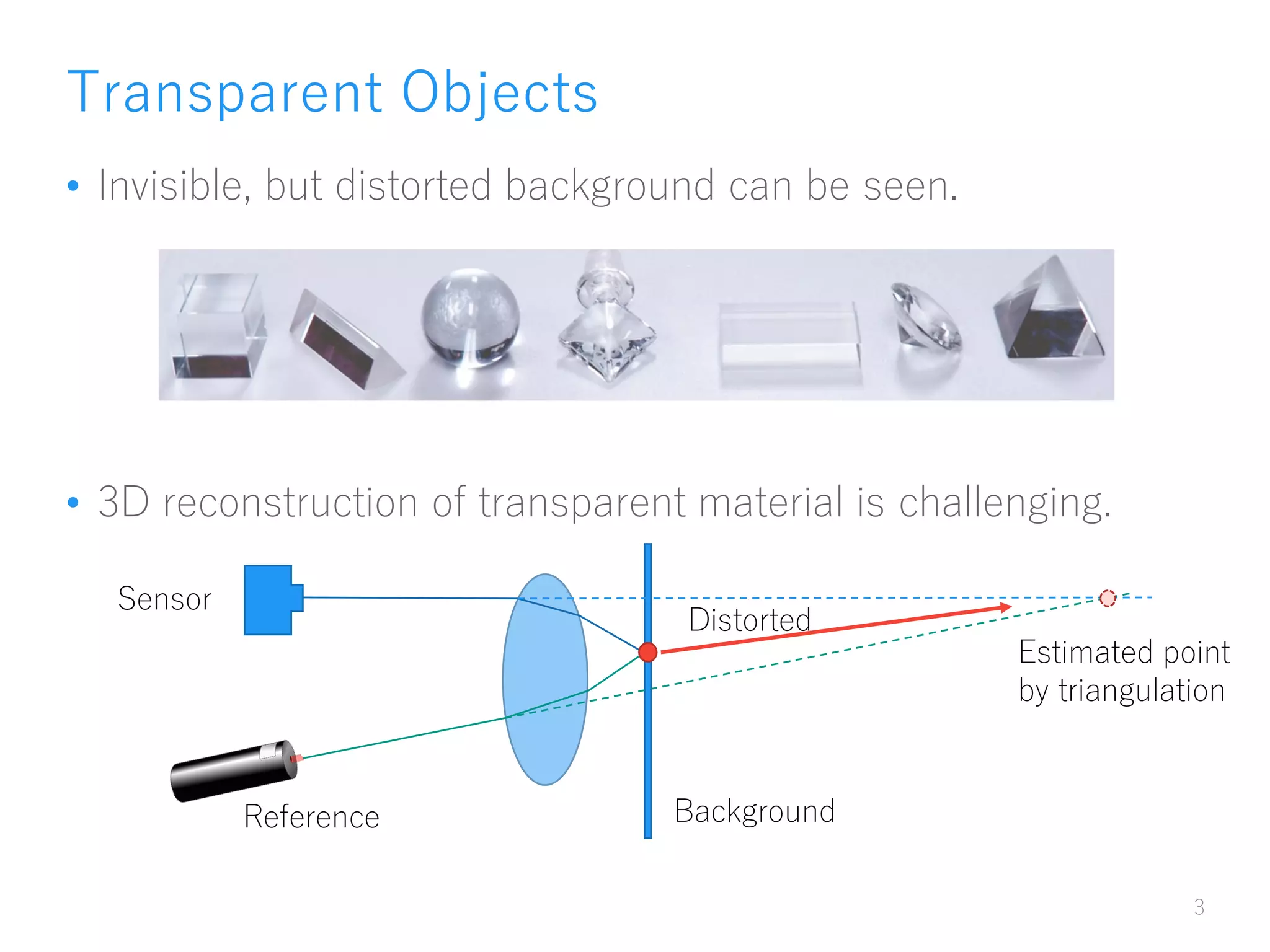

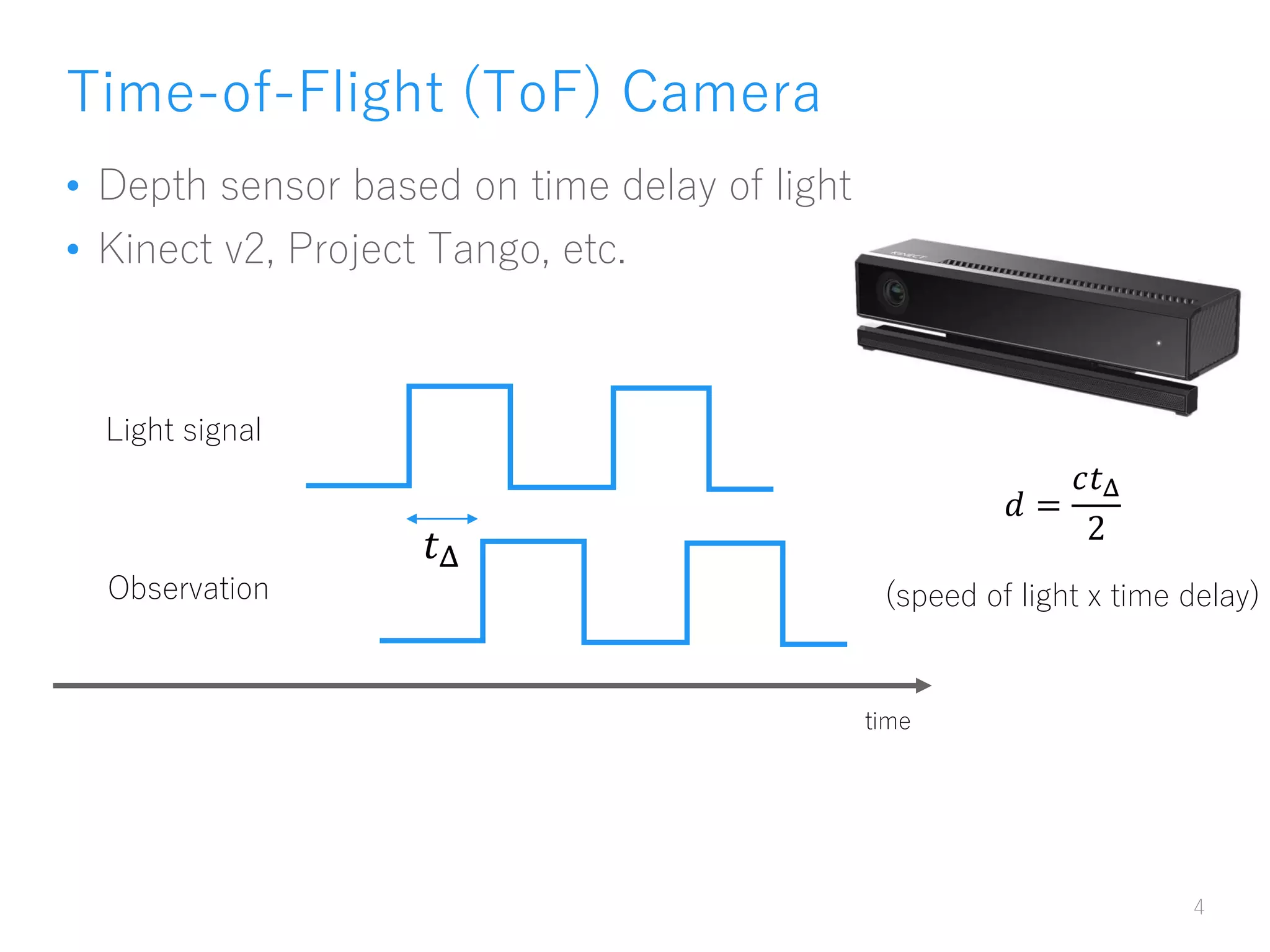

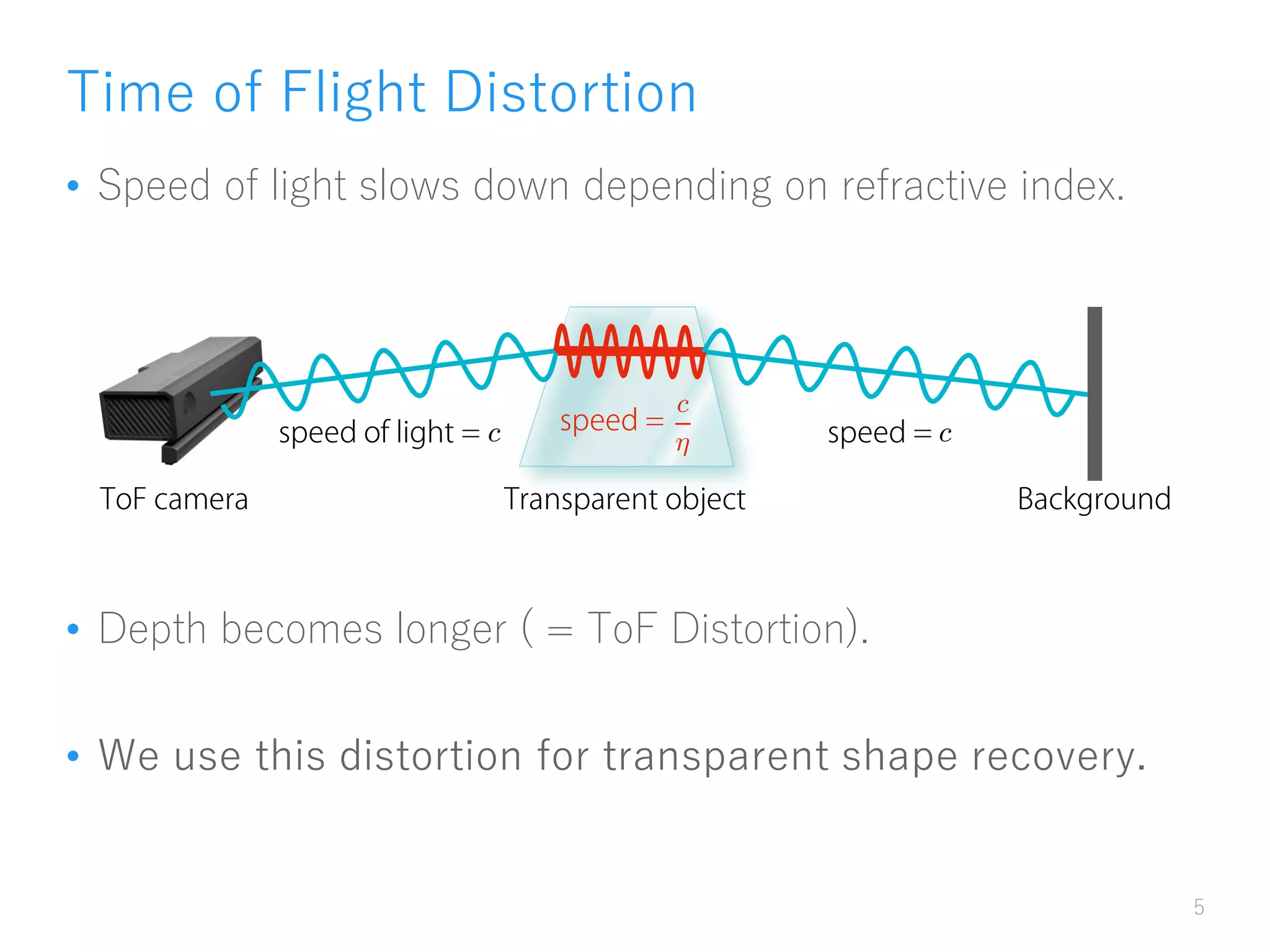

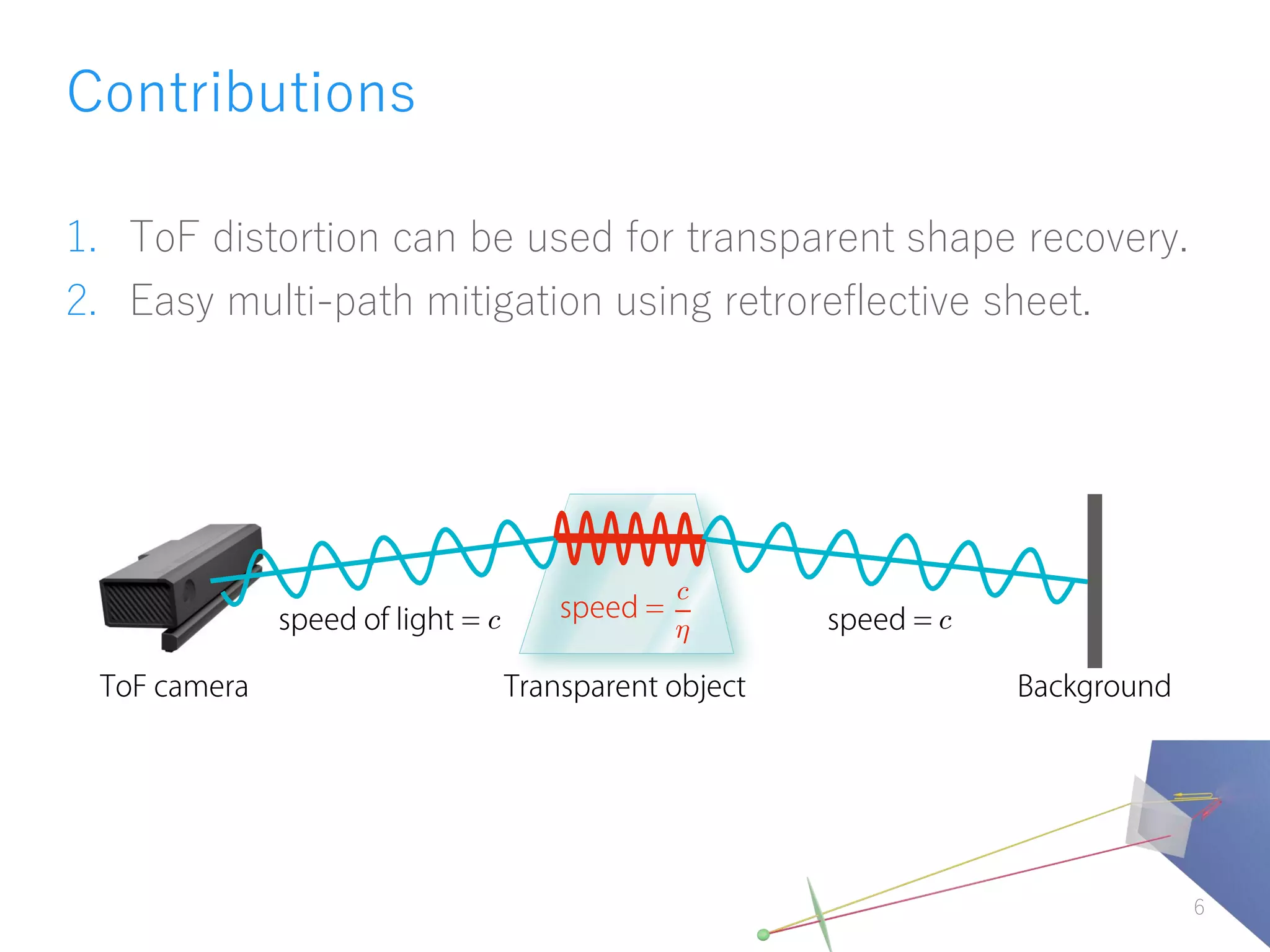

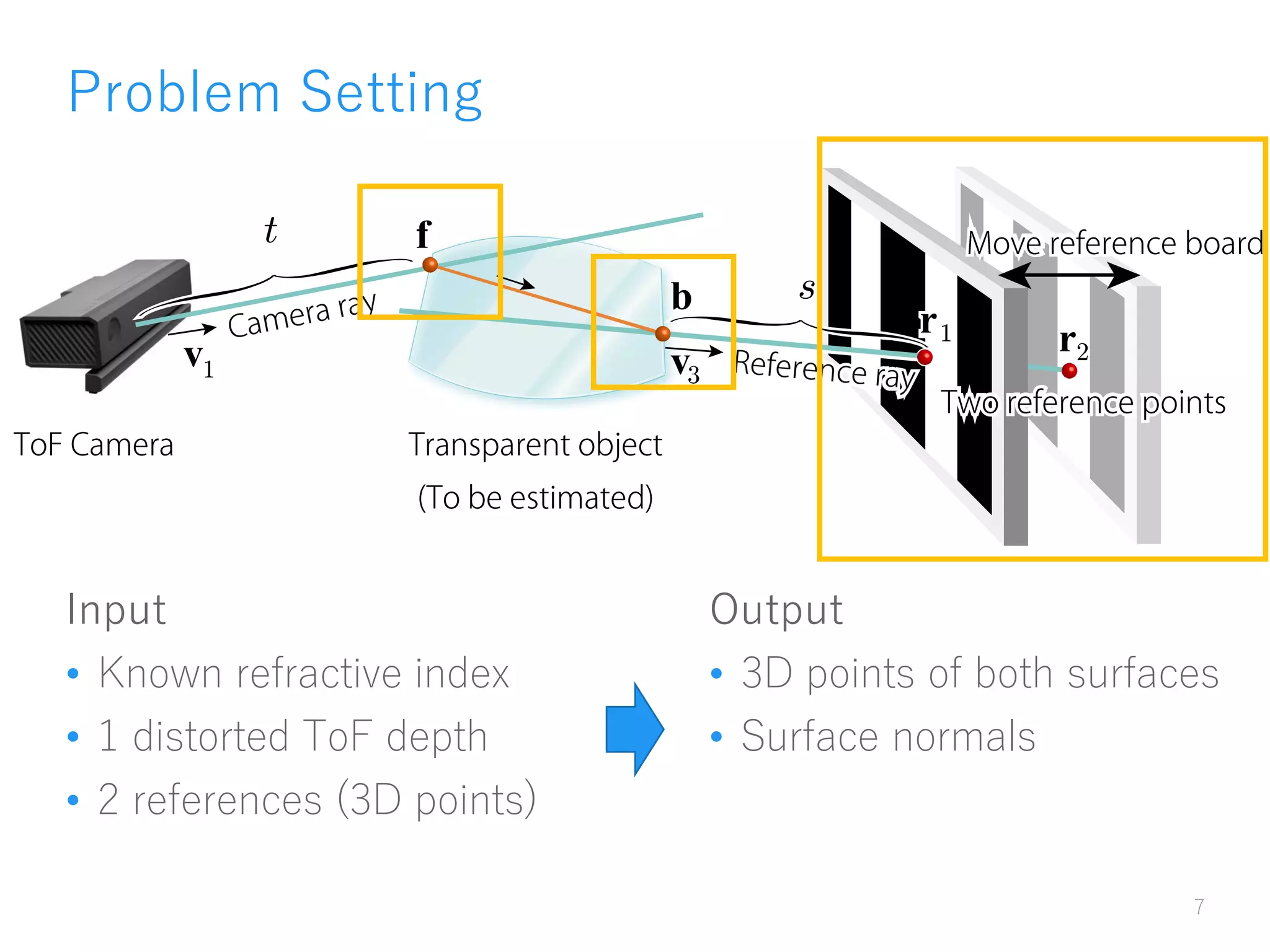

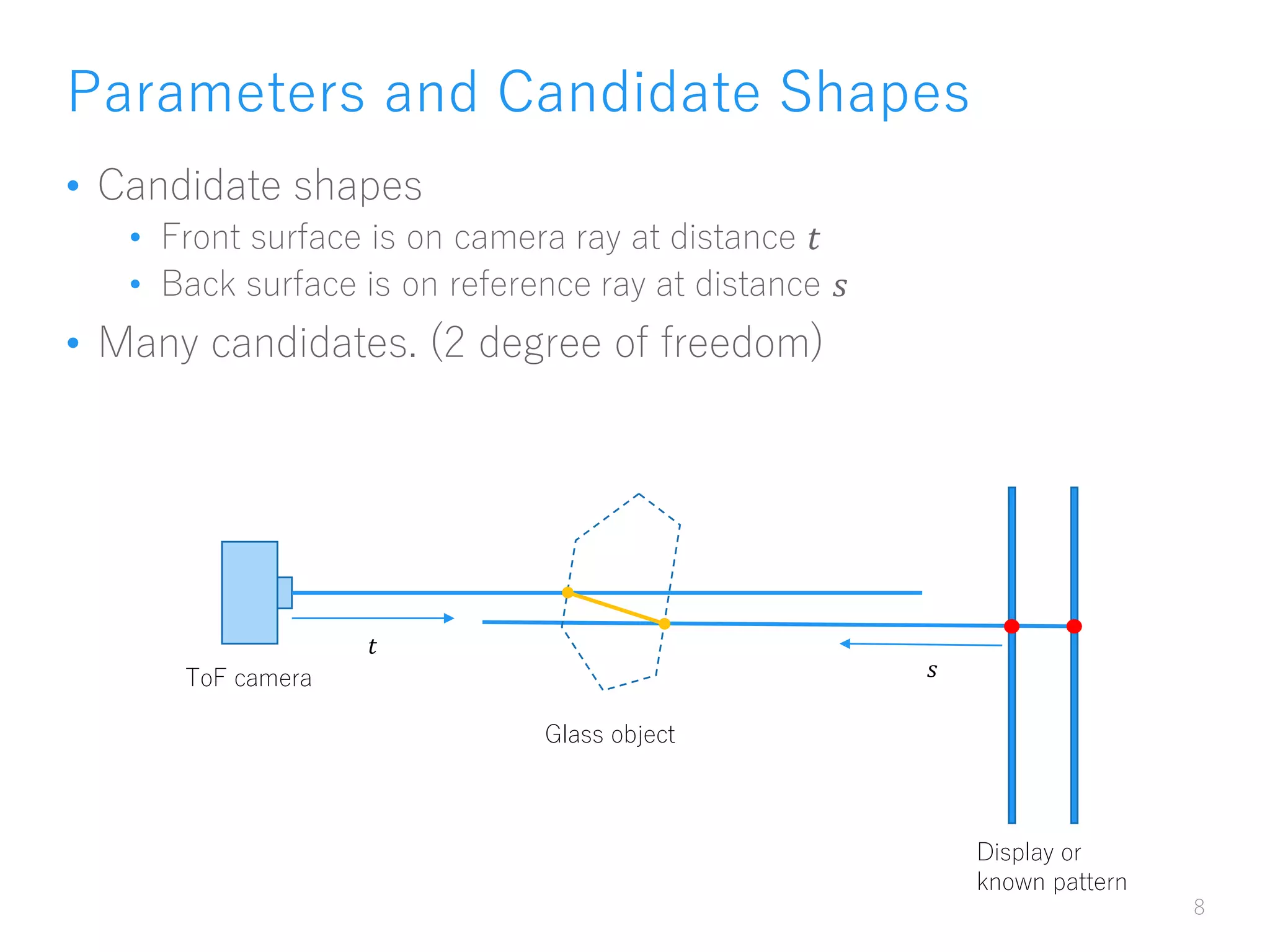

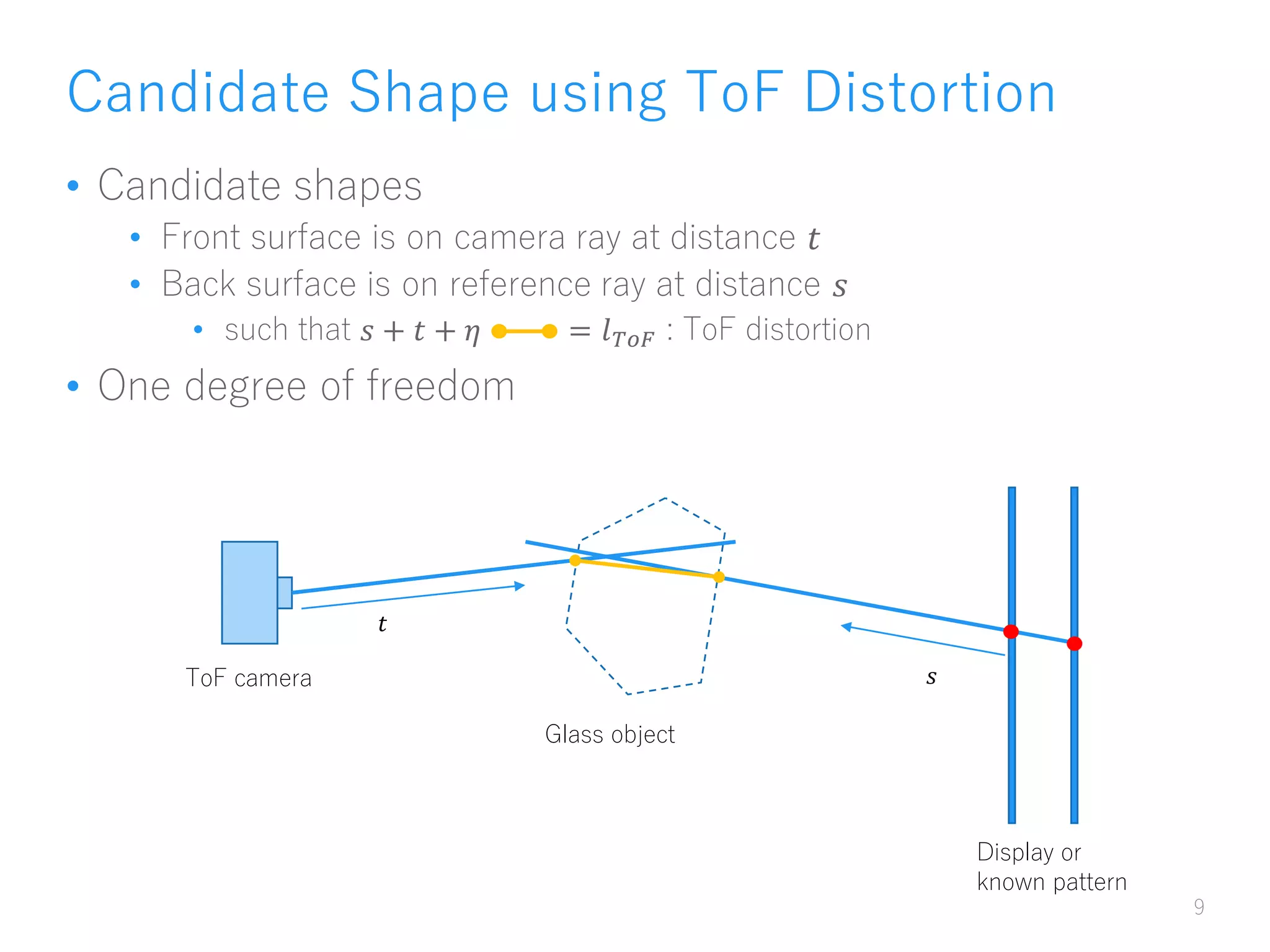

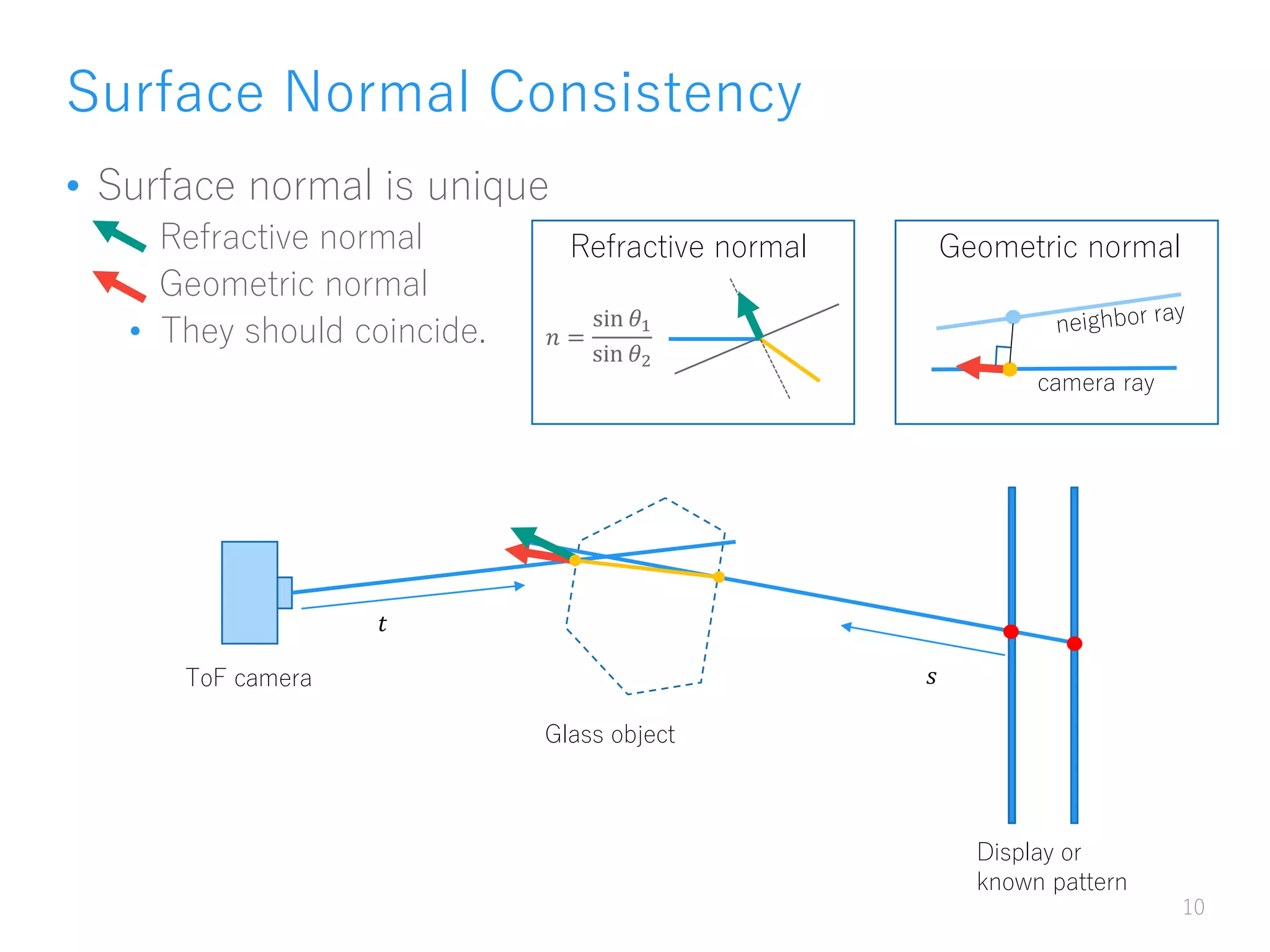

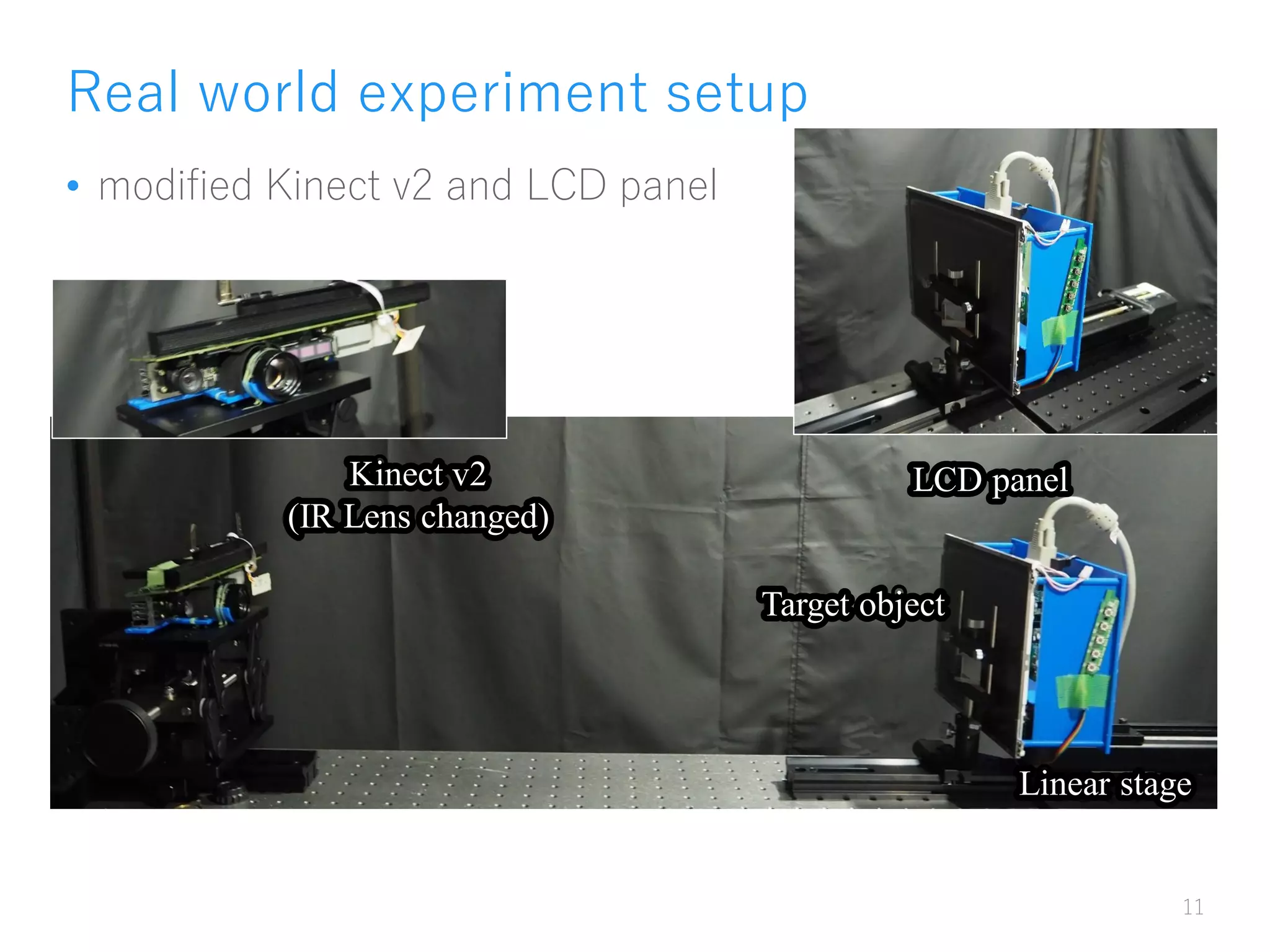

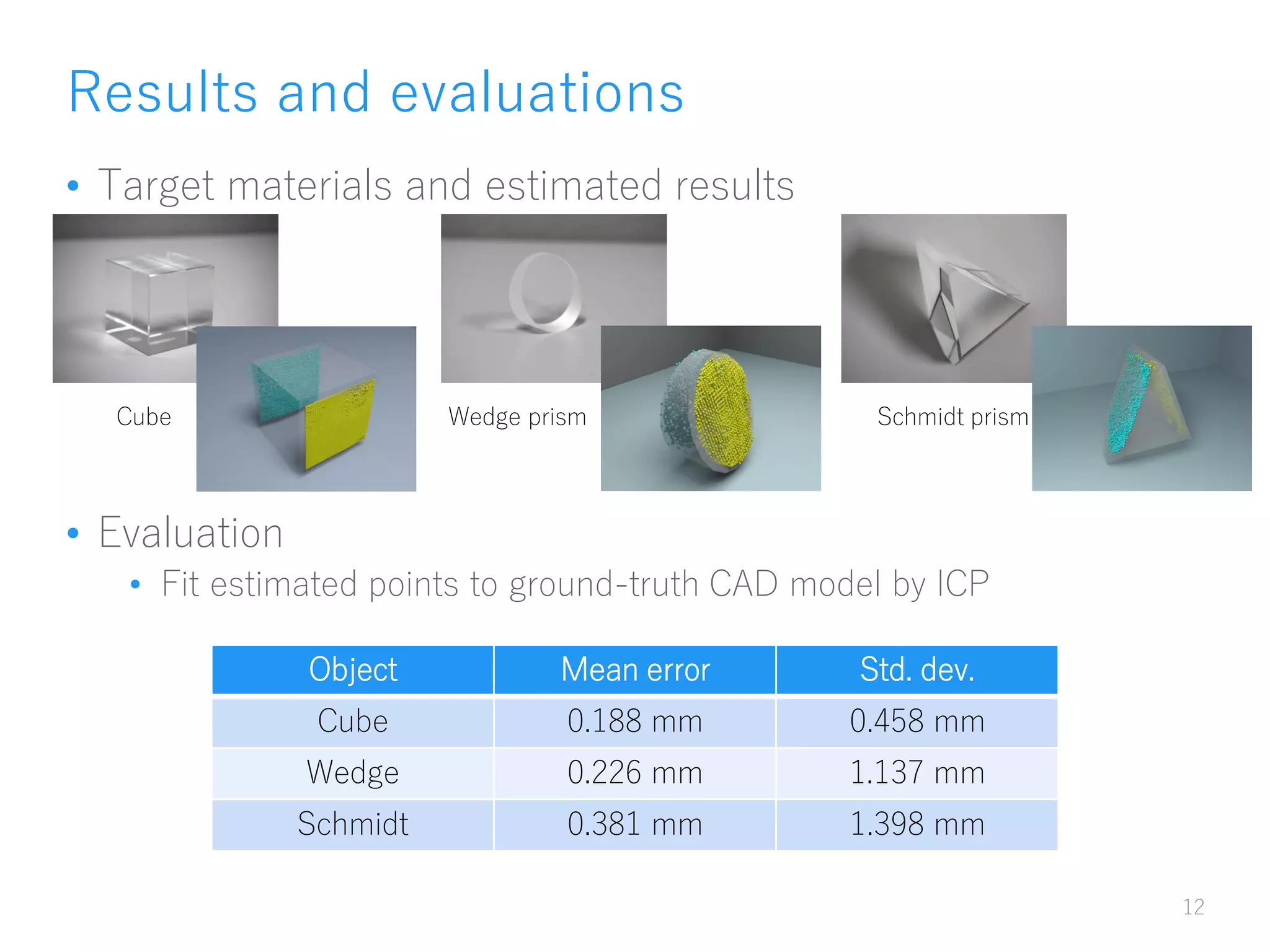

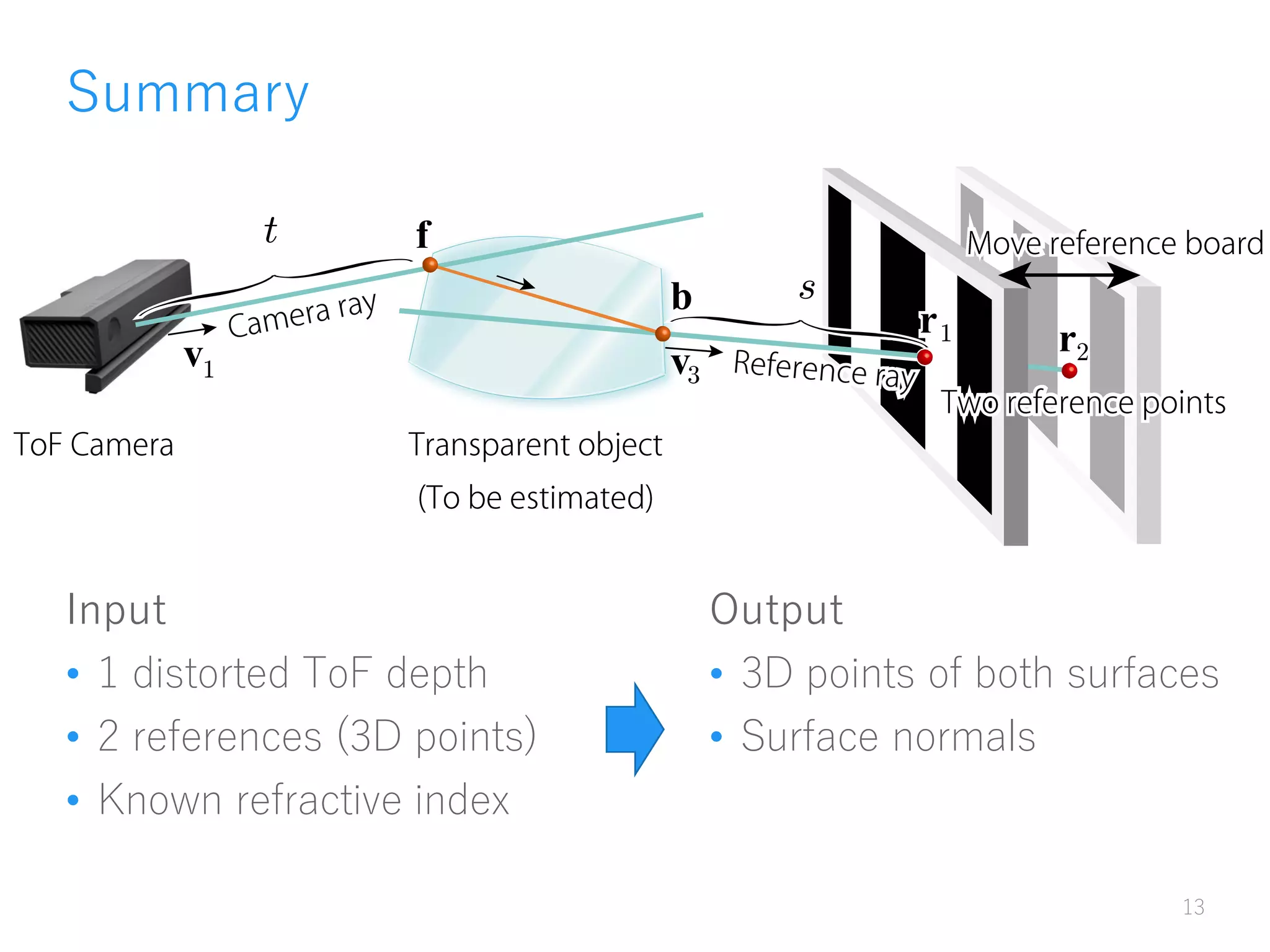

The document presents a method for recovering the shape of transparent objects from the time-of-flight (ToF) distortion caused by refraction. Light travels more slowly inside a medium with refractive index greater than 1, so a ToF camera reports a longer path than the geometric one. Using a modified ToF camera, the talk discusses the challenges of reconstructing transparent objects in 3D and the contributions of the work, which relies on a known refractive index and reference 3D points to estimate depth accurately. Experiments on several transparent materials report mean reconstruction errors that demonstrate the effectiveness of the approach.
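To make the distortion concrete, here is a minimal Python sketch under a deliberately simplified model. This is an assumption for illustration, not the reconstruction method from the talk. The camera is assumed to report the optical path length (the sum of refractive index times geometric length along the ray), the outgoing and returning paths are assumed identical, and the ray is assumed to cross a flat transparent slab at normal incidence so it is not bent. The function names and the numbers in the example are hypothetical.

```python
"""Sketch: how a transparent slab distorts a ToF depth reading.

Simplified model (an assumption, not the method from the talk): the camera
reports optical path length, i.e. the sum of n_i * l_i over the segments of
the light path, as if the light had travelled through air (n = 1) throughout.
"""

from typing import Iterable, Tuple


def optical_path_length(segments: Iterable[Tuple[float, float]]) -> float:
    """Sum n * length over (refractive_index, geometric_length) segments."""
    return sum(n * length for n, length in segments)


def apparent_depth_through_slab(depth: float, thickness: float, n: float) -> float:
    """Depth a ToF camera would report for a surface at `depth` when a slab of
    the given `thickness` and refractive index `n` lies on the optical axis
    at normal incidence (so the ray is not bent).

    The out-and-back path is assumed symmetric, so the one-way optical path
    length equals the reported depth.
    """
    if not 0.0 <= thickness <= depth:
        raise ValueError("slab must lie between camera and surface")
    return optical_path_length([(1.0, depth - thickness), (n, thickness)])


if __name__ == "__main__":
    # Hypothetical numbers: a target 1.0 m away behind 0.05 m of glass (n = 1.5).
    reported = apparent_depth_through_slab(depth=1.0, thickness=0.05, n=1.5)
    print(f"reported depth: {reported:.3f} m")
    print(f"distortion:     {reported - 1.0:.3f} m")
```

Under this model the reported depth exceeds the true depth by (n - 1) * t, which suggests why knowing the refractive index matters for undoing the distortion. Real transparent objects also bend rays at oblique incidence, which this sketch ignores.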

![Time-of-Flight as alternative imager](https://image.slidesharecdn.com/miru2016-invited-160817120414/75/MIRU2016-invited-talk-Recovering-Transparent-Shape-from-Time-of-Flight-Distortion-CVPR-2016-13-2048.jpg)

- Light-in-flight [Gkioulekas+2015]
- Parameter-tunable ToF camera (Texas Instruments)
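For readers new to continuous-wave ToF cameras, a sketch of the standard phase-to-depth relation may help show what a tunable parameter such as the modulation frequency trades off. This is general background, not a description of the Texas Instruments hardware or of which parameters it exposes. It assumes the camera measures the phase shift of an amplitude-modulated signal, and the frequencies in the example are hypothetical.

```python
import math

# Speed of light in vacuum (m/s); air is treated as n = 1 in this sketch.
SPEED_OF_LIGHT = 299_792_458.0


def phase_to_depth(phase_rad: float, mod_freq_hz: float) -> float:
    """Depth from the phase shift of an amplitude-modulated (CW) ToF signal.

    The light travels to the scene and back, so the round-trip distance is
    c * phase / (2 * pi * f) and the depth is half of that.
    """
    if mod_freq_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest depth measurable before the phase wraps past 2 * pi."""
    return phase_to_depth(2.0 * math.pi, mod_freq_hz)


if __name__ == "__main__":
    # Hypothetical modulation frequencies: a higher f shortens the range.
    for f_mhz in (10, 20, 40):
        f_hz = f_mhz * 1e6
        print(f"{f_mhz} MHz: range {unambiguous_range(f_hz):.2f} m, "
              f"phase pi -> {phase_to_depth(math.pi, f_hz):.2f} m")
```

The unambiguous range is c / (2f), roughly 7.5 m at 20 MHz, so choosing the modulation frequency trades maximum range against how finely a given phase error maps to depth.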

![Imaging and Analysis Using ToF Cameras](https://image.slidesharecdn.com/miru2016-invited-160817120414/75/MIRU2016-invited-talk-Recovering-Transparent-Shape-from-Time-of-Flight-Distortion-CVPR-2016-14-2048.jpg)

- Recently emerging topic: [Heide+2013], [Kadambi+2013], [Naik+2013], [Godbaz+2013], [Freedman+2014], [Lin+2014], [O’Toole+2014], [Gupta+2015], [Heide+2015], [Xiao+2015], [Kadambi+2015], [Peters+2015], [Tadano+2015], and more!
- CVPR 2016: 1 oral and 2 posters (including ours)
  - [Kadambi et al.], [Su et al.]
- SIGGRAPH 2016: 2 technical papers
  - [Shrestha et al.], [Kadambi et al.]

We will continue working on ToF cameras.