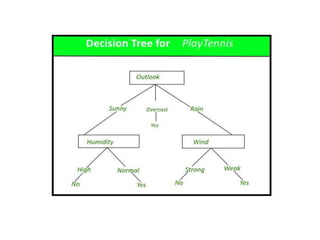

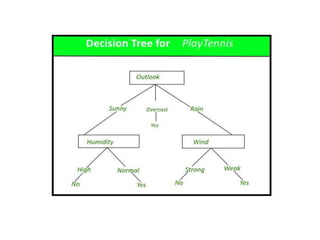

The document gives an overview of machine learning algorithms and concepts: supervised learning, unsupervised learning, decision trees, statistical learning models, Naive Bayes models, the EM algorithm, and reinforcement learning. It covers the key aspects of each topic. Supervised learning trains on labeled data, while unsupervised learning discovers patterns in unlabeled data. Decision trees classify data points through a flowchart-like sequence of tests, and statistical learning theory provides a framework for predictive machine learning models.
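To make the supervised/unsupervised distinction concrete, here is a minimal sketch in plain Python. It is not taken from the presentation: the toy data, the function names `nearest_neighbor_predict` and `two_means`, and the choice of a 1-nearest-neighbour rule and a two-cluster k-means loop are all illustrative assumptions. The supervised function needs the labels to make a prediction; the unsupervised one sees only the raw points.

```python
# Toy 1-D data: two well-separated groups (hypothetical values for illustration).
points = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
labels = ["low", "low", "low", "high", "high", "high"]  # used only by the supervised part


def nearest_neighbor_predict(train_x, train_y, query):
    """Supervised: return the label of the closest labeled training point."""
    best_index = min(range(len(train_x)), key=lambda i: abs(train_x[i] - query))
    return train_y[best_index]


def two_means(xs, iterations=10):
    """Unsupervised: split xs into two clusters with a basic k-means loop (k=2)."""
    centers = [min(xs), max(xs)]  # simple deterministic initialisation (an assumption)
    assignment = [0] * len(xs)
    for _ in range(iterations):
        # Assign each point to its nearest center.
        assignment = [
            0 if abs(x - centers[0]) <= abs(x - centers[1]) else 1 for x in xs
        ]
        # Move each center to the mean of the points assigned to it.
        for k in (0, 1):
            members = [x for x, a in zip(xs, assignment) if a == k]
            if members:
                centers[k] = sum(members) / len(members)
    return assignment, centers


predicted = nearest_neighbor_predict(points, labels, 4.5)
clusters, centers = two_means(points)
print(predicted)  # label inferred from the labeled examples
print(clusters)   # cluster indices found without using any labels
```

Note that the cluster indices returned by `two_means` carry no meaning of their own; mapping them to names such as "low" and "high" would require labels, which is exactly what the unsupervised setting lacks.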