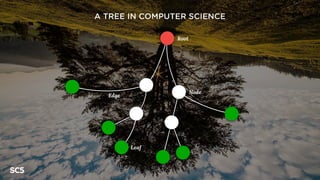

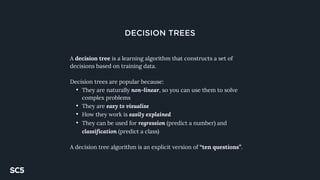

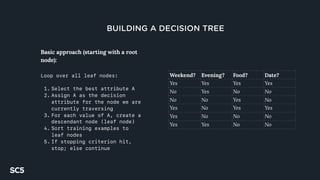

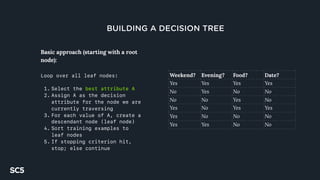

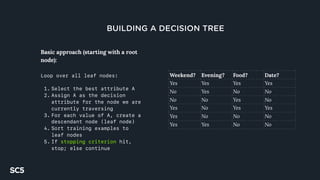

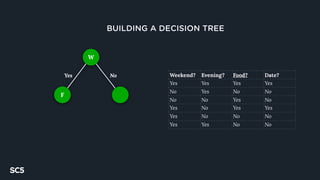

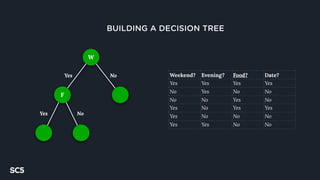

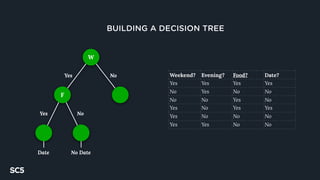

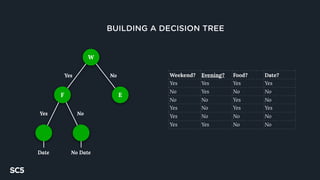

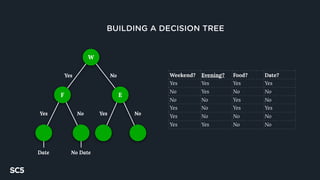

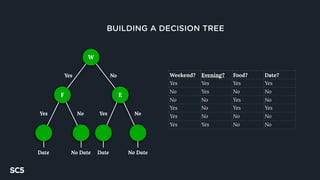

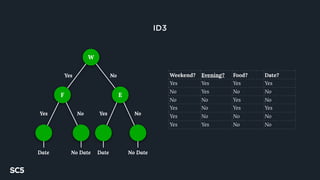

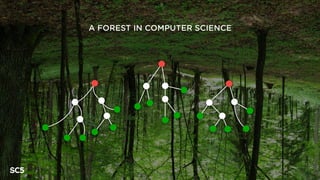

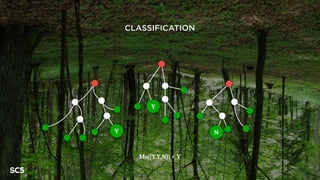

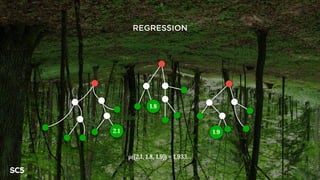

The document discusses two machine learning algorithms: decision trees and random forests. It explains that decision trees are simple algorithms that can be used for both classification and regression. Random forests improve on single decision trees by building many trees, each trained on a random sample of the training data, and combining their predictions, which reduces overfitting. The document gives examples of how a decision tree is constructed and how a random forest makes predictions: by majority vote across the trees for classification, or by averaging the trees' outputs for regression.
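To make the construction and voting steps concrete, here is a minimal from-scratch sketch in Python. It assumes numeric features, Gini impurity as the split criterion, and classification by majority vote. All function names are hypothetical and are not taken from the slides. Production random forests (for example scikit-learn's `RandomForestClassifier`) also consider only a random subset of features at each split. This sketch omits that step and shows only bootstrap sampling plus voting.

```python
import random
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels (0.0 means the node is pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(X, y):
    """Return the (feature, threshold) pair that minimises weighted Gini,
    or None if no split is strictly better than leaving the node unsplit."""
    n = len(y)
    best, best_score = None, gini(y)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [y[i] for i in range(n) if X[i][f] <= t]
            right = [y[i] for i in range(n) if X[i][f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(X, y, depth=0, max_depth=3):
    """Recursively grow a classification tree.
    Internal nodes are dicts; leaves are the majority class label."""
    split = best_split(X, y) if depth < max_depth else None
    if split is None:
        return Counter(y).most_common(1)[0][0]
    f, t = split
    li = [i for i in range(len(y)) if X[i][f] <= t]
    ri = [i for i in range(len(y)) if X[i][f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([X[i] for i in li], [y[i] for i in li], depth + 1, max_depth),
        "right": build_tree([X[i] for i in ri], [y[i] for i in ri], depth + 1, max_depth),
    }

def predict_tree(node, x):
    """Walk from the root to a leaf, going left when x[feature] <= threshold."""
    while isinstance(node, dict):
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node

def build_forest(X, y, n_trees=10, seed=0):
    """Train each tree on a bootstrap sample (n rows drawn with replacement)."""
    rng = random.Random(seed)
    n = len(y)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx]))
    return forest

def predict_forest(forest, x):
    """Classify x by majority vote over all trees in the forest."""
    votes = Counter(predict_tree(tree, x) for tree in forest)
    return votes.most_common(1)[0][0]
```

For example, with `X = [[1, 0], [2, 1], [3, 0], [4, 1], [6, 0], [7, 1], [8, 0], [9, 1]]` and labels `["a"] * 4 + ["b"] * 4`, `build_tree` splits on feature 0 at threshold 4. `predict_forest(build_forest(X, y), [1, 0])` then returns `"a"`. For regression, the same structure would store the mean target at each leaf and average the trees' outputs instead of voting.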