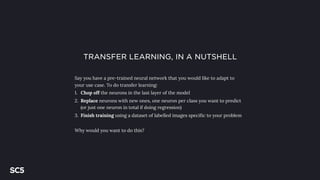

This document covers transfer learning in machine learning, with an emphasis on its usefulness when labeled data is scarce. It outlines how to adapt a pre-trained model to a specific task so that the model can learn effectively from only a small amount of labeled data. The key steps are modifying the model architecture and using existing tools and services for implementation.
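To make the idea of adapting a pre-trained model concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the method of any particular library. The "pre-trained" layer `FROZEN_W` is a hypothetical stand-in with hand-picked weights, the four labeled samples are made up, and the helper names (`frozen_features`, `train_head`, `predict`) are invented for this example. It shows one common form of the architecture change: keep the pre-trained layer frozen and train only a new output head on a handful of labeled examples.

```python
import math

# Hypothetical stand-in for a pre-trained layer. In practice these weights
# would be loaded from a model trained on a large dataset; here they are
# hand-picked so the example runs without any downloads.
FROZEN_W = [[1.0, -1.0], [0.5, 0.5]]


def frozen_features(x):
    """Apply the frozen layer (linear map followed by tanh) to a 2-D input."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, epochs=200, lr=0.5):
    """Fit a new logistic-regression head; FROZEN_W is never updated."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            feats = frozen_features(x)
            pred = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            err = pred - y  # gradient of the log loss with respect to the logit
            weights = [w - lr * err * f for w, f in zip(weights, feats)]
            bias -= lr * err
    return weights, bias


def predict(head, x):
    """Classify x with the frozen layer plus the newly trained head."""
    weights, bias = head
    feats = frozen_features(x)
    score = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
    return 1 if score >= 0.5 else 0


# A deliberately tiny labeled set for the new task (made-up data).
X = [[2.0, 0.0], [1.5, 0.2], [0.0, 2.0], [0.2, 1.5]]
y = [1, 1, 0, 0]

head = train_head(X, y)
predictions = [predict(head, x) for x in X]
```

Only the two head weights and the bias are learned here, which is why so few labeled examples are enough. With a real framework the same pattern usually means loading a pre-trained network, disabling gradient updates for its existing layers, and replacing the final layer with one sized for the new task.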