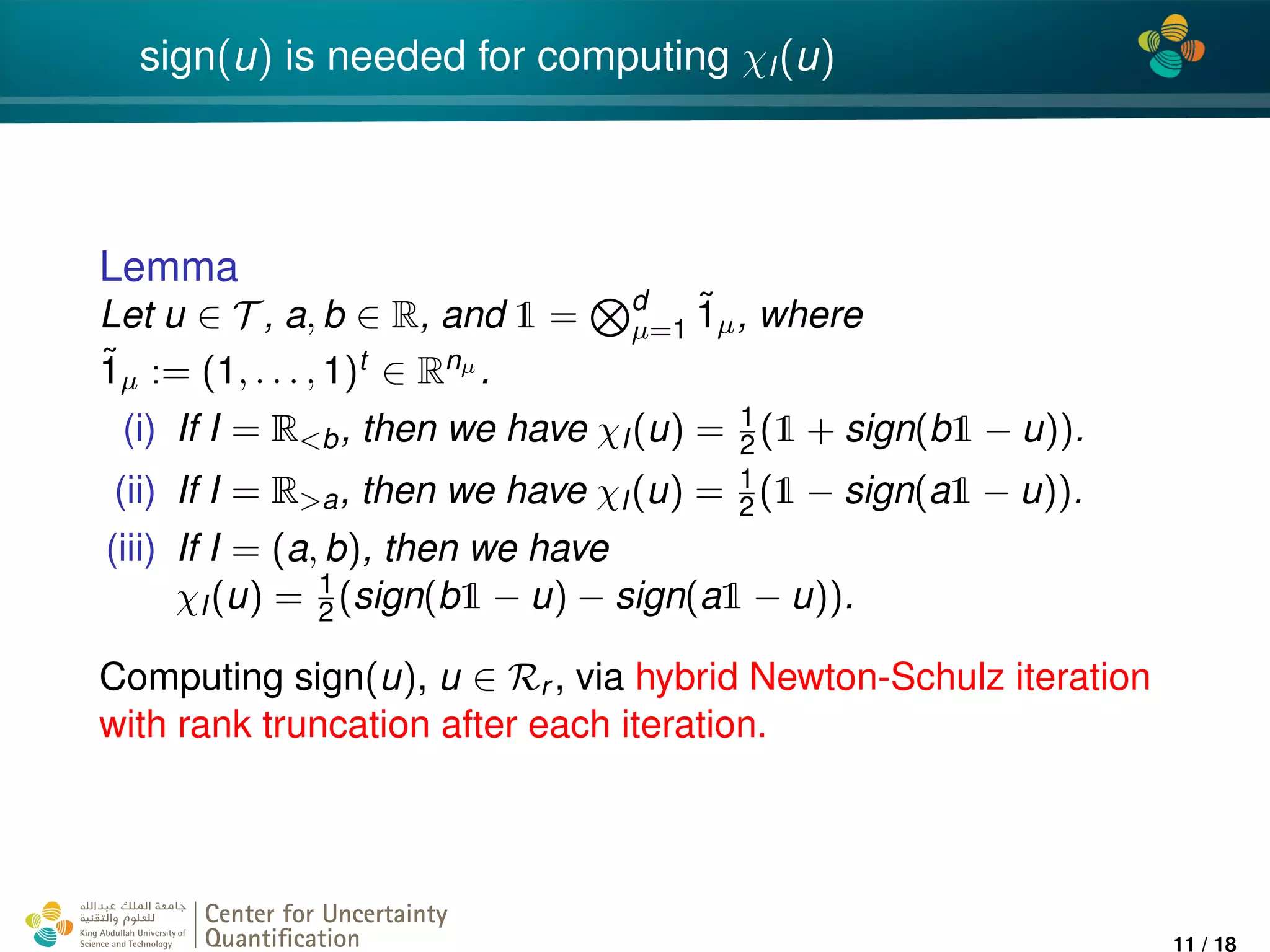

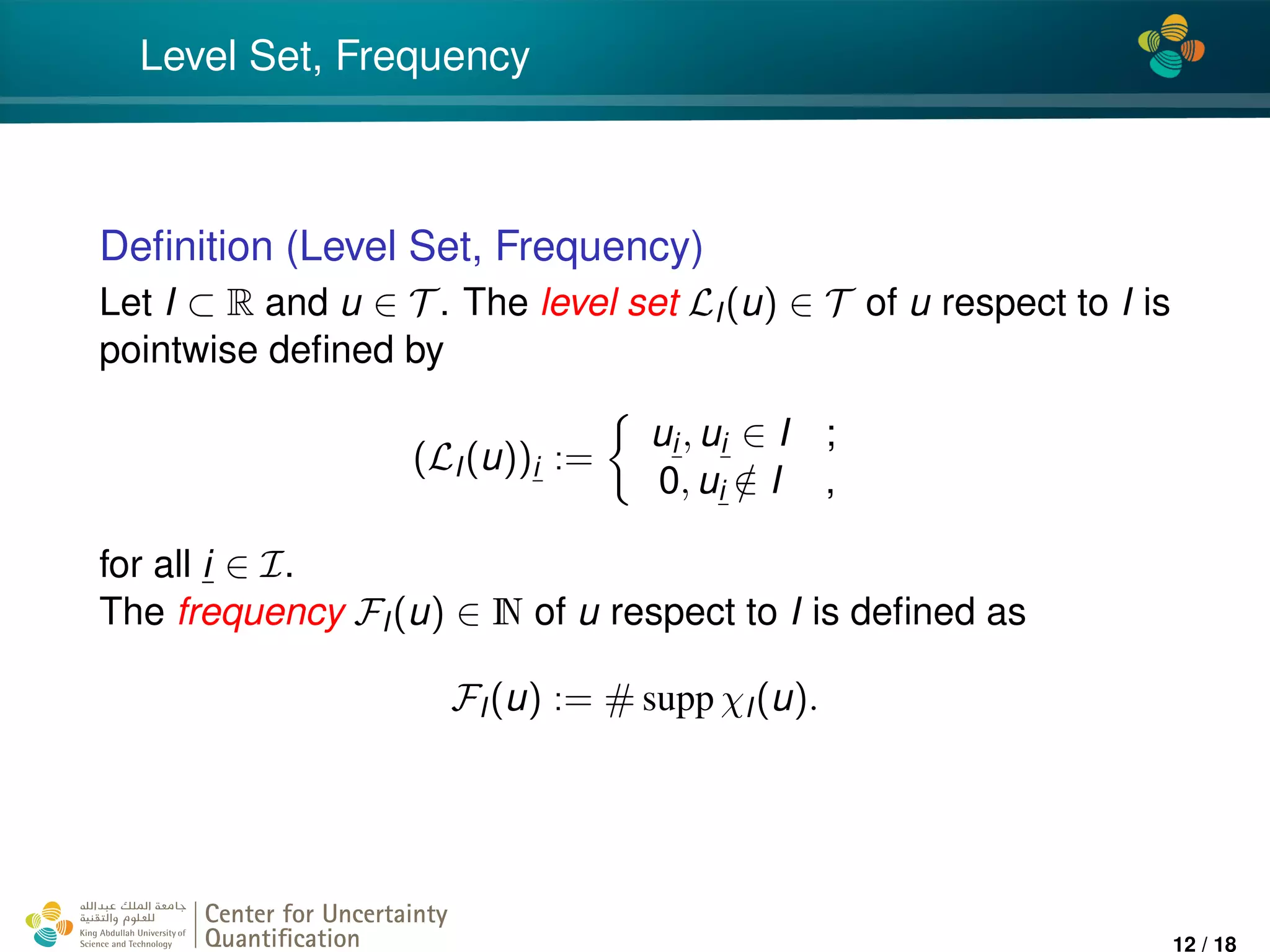

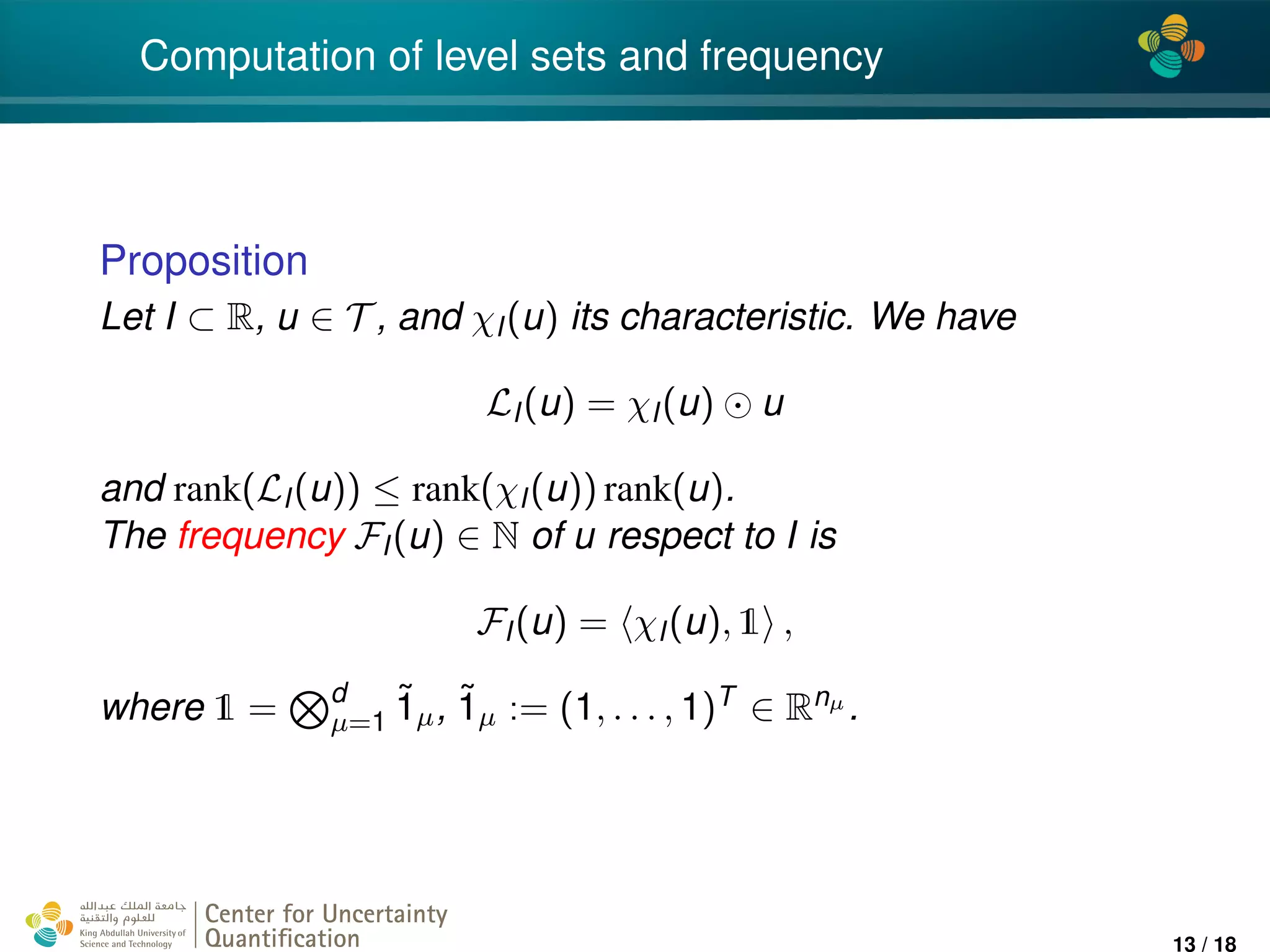

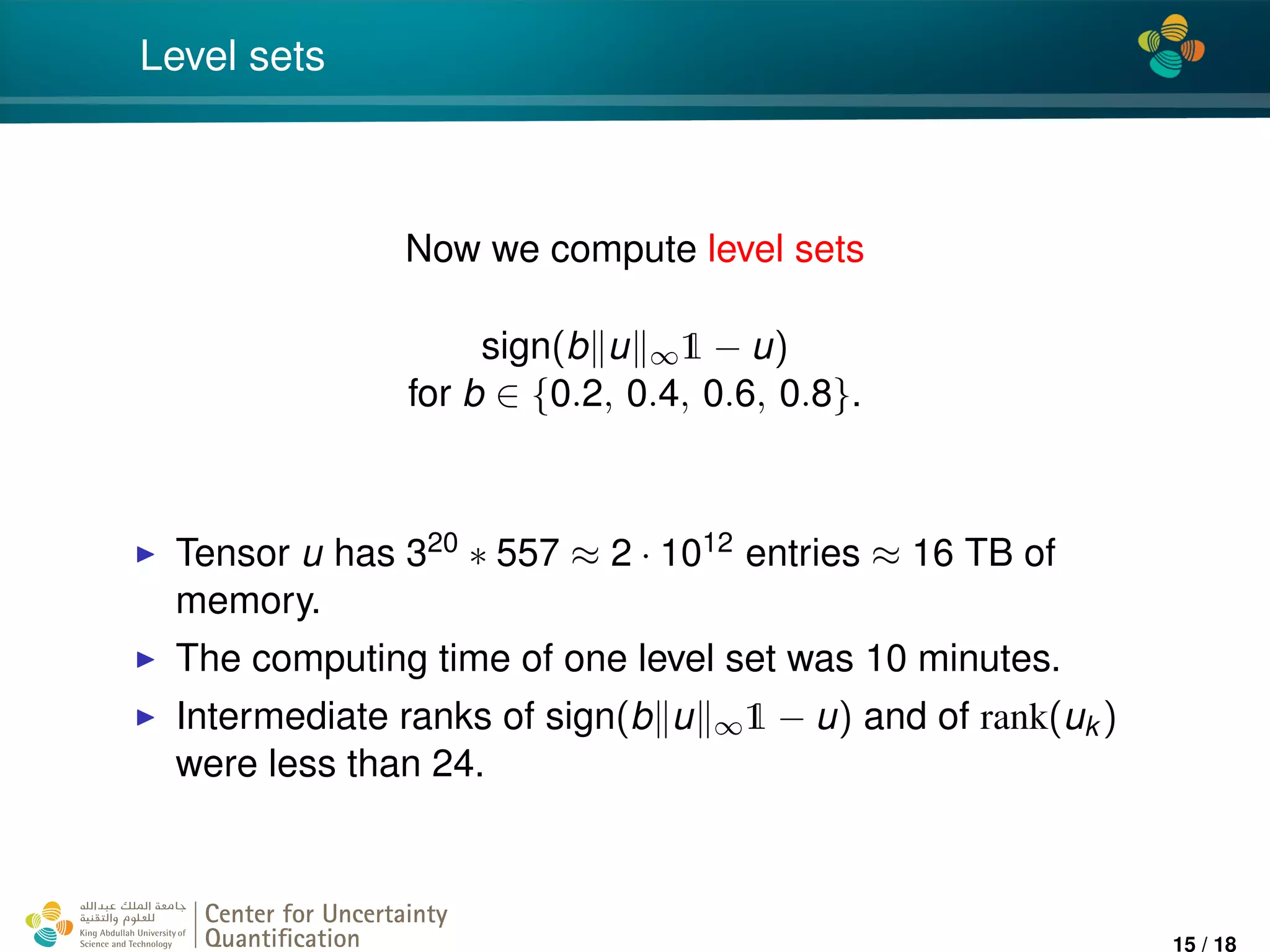

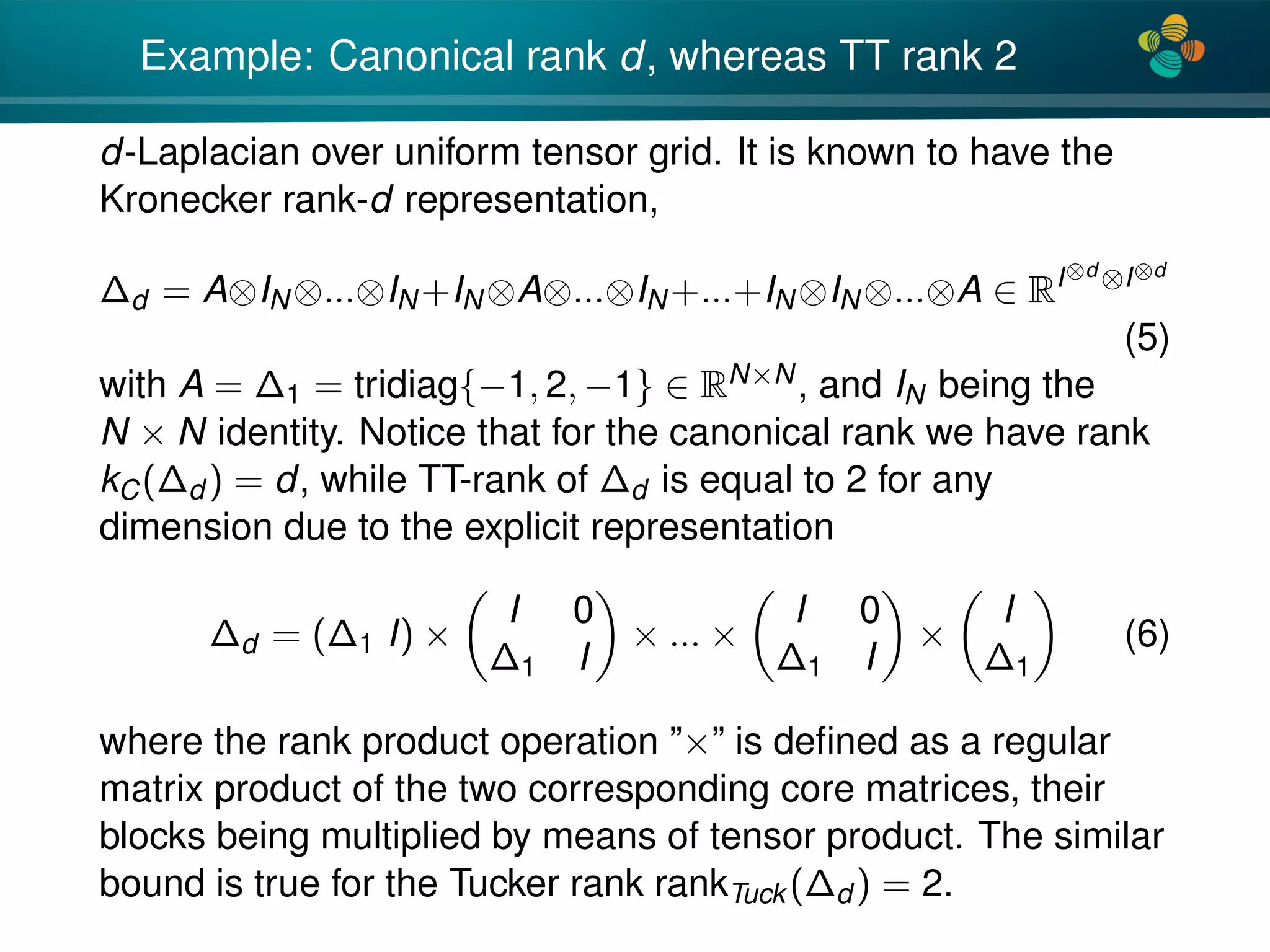

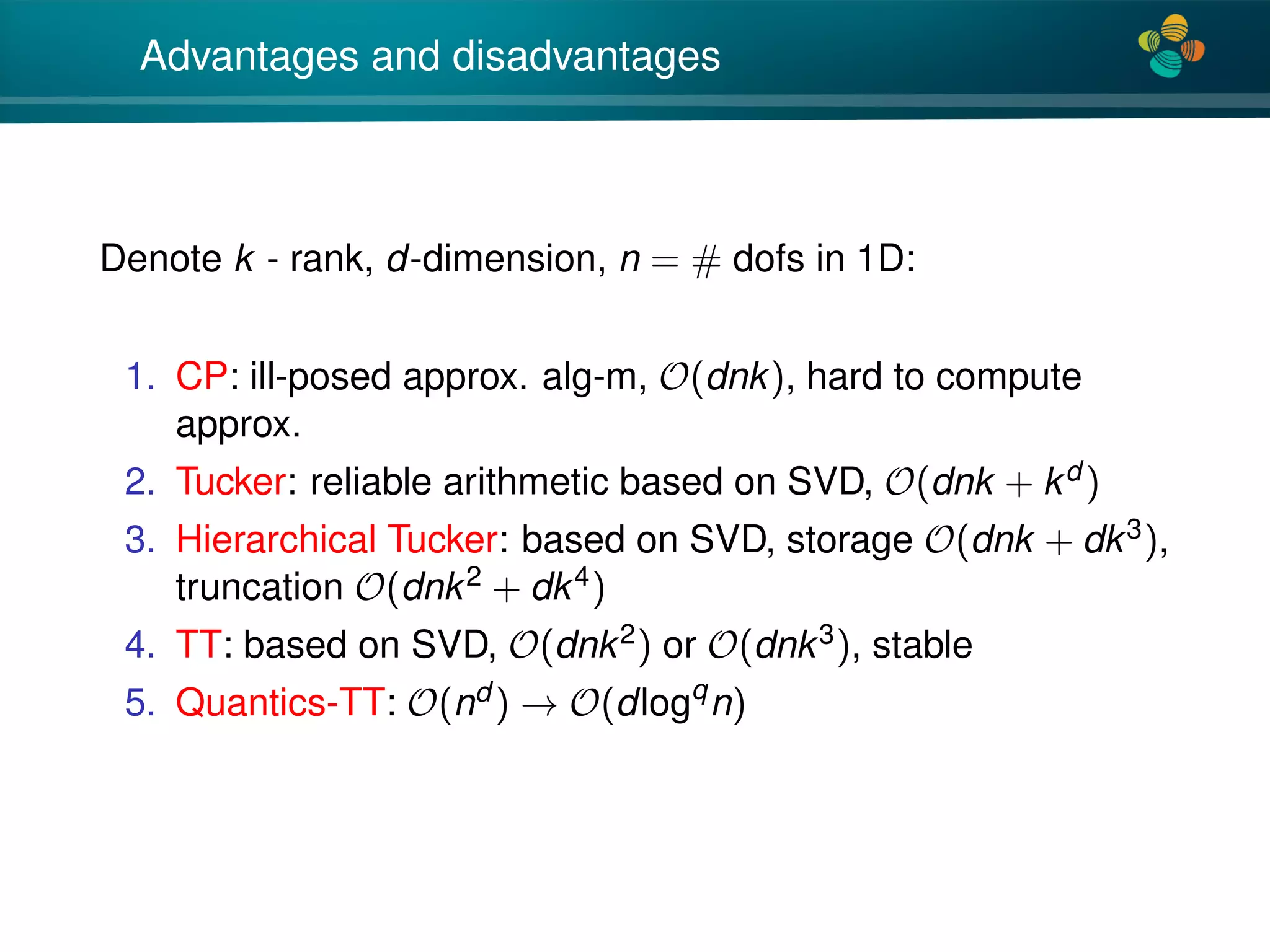

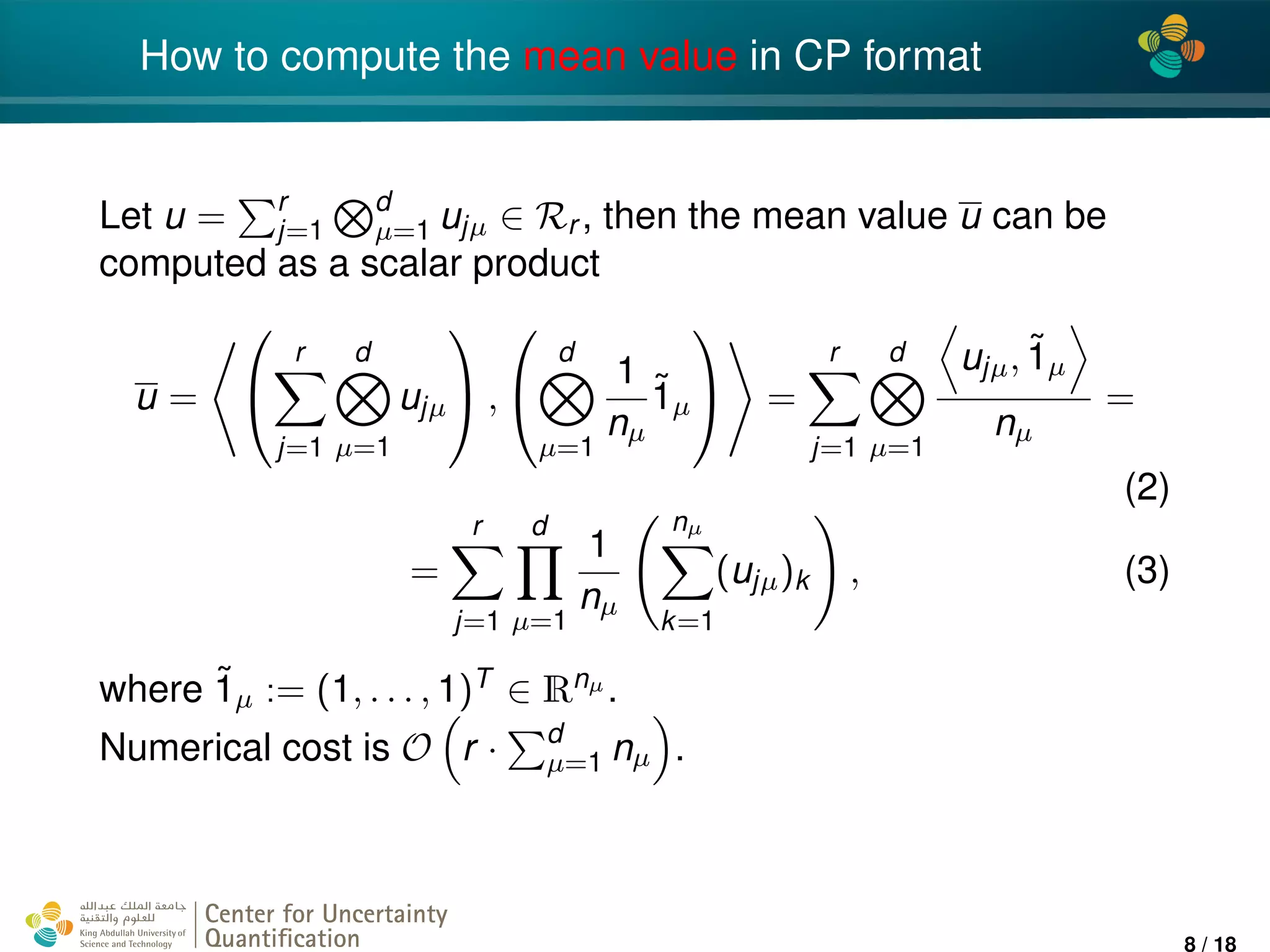

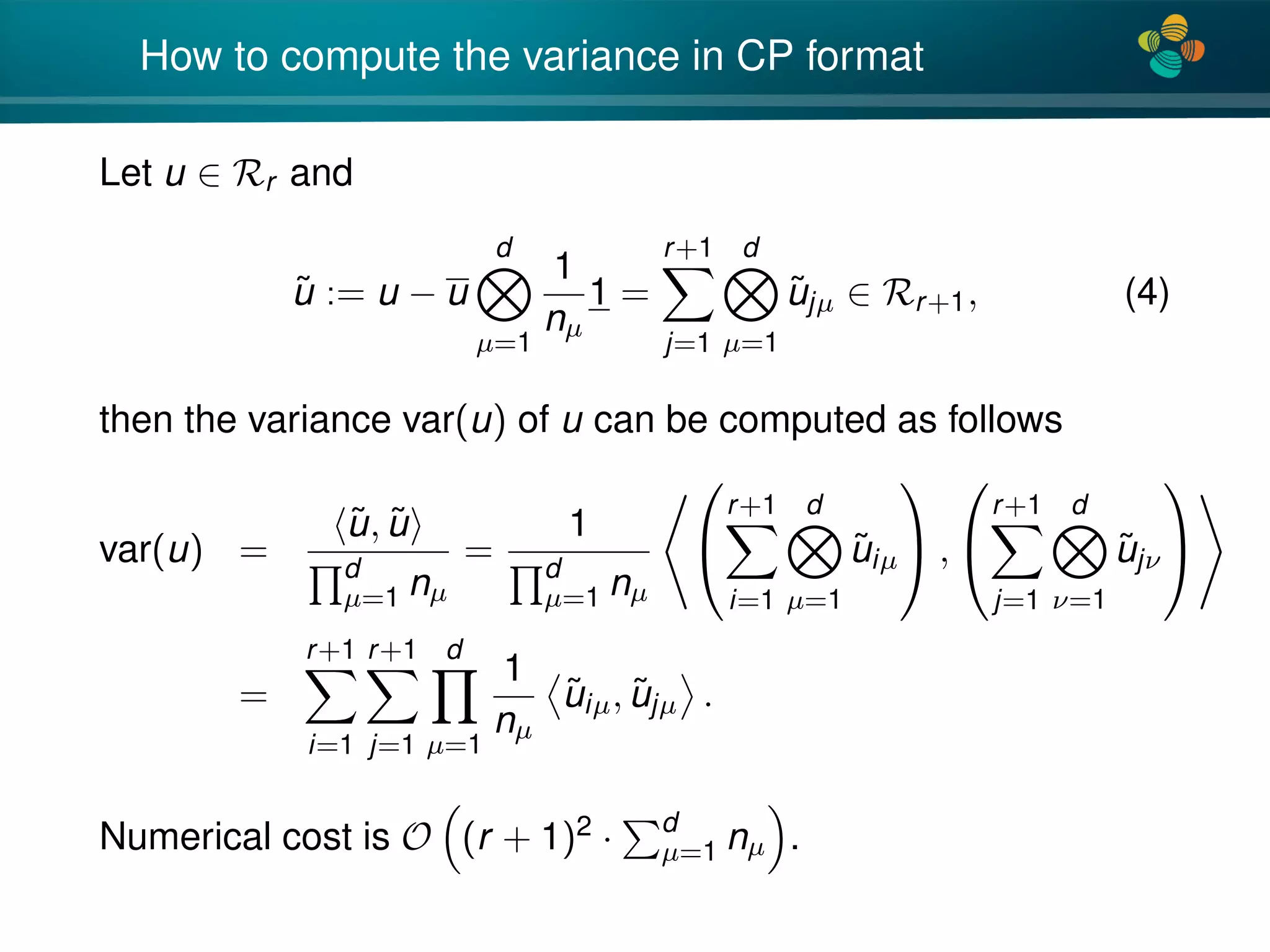

The document discusses low-rank tensor methods for high-dimensional data analysis, detailing techniques for efficient computation and approximation in tensor formats. It covers evaluating a tensor at specific parameter values, finding maxima and minima, and computing level sets, all without leaving the tensor representation. It also addresses the storage and computational complexity of different tensor formats and their respective advantages and disadvantages.

![4*

My previous work

After applying the stochastic Galerkin method, we obtain

Ku = f, where all ingredients are represented in a tensor format.

Compute max{u}, var(u), level sets of u, and sign(u).

[1] Efficient Analysis of High Dimensional Data in Tensor Formats, Espig, Hackbusch, A.L., Matthies and Zander, 2012.

Investigate which ingredients influence the tensor rank of K.

[2] Efficient low-rank approximation of the stochastic Galerkin matrix in tensor formats, Wähnert, Espig, Hackbusch, A.L., Matthies, 2013.

Approximate κ(x, ω) and the stochastic Galerkin operator K in the Tensor Train (TT) format, solve for u, and postprocess the solution.

[3] Polynomial Chaos Expansion of random coefficients and the solution of stochastic partial differential equations in the Tensor Train format, Dolgov, Litvinenko, Khoromskij, Matthies, 2016.

Center for Uncertainty

Quantification


-2 / 18](https://image.slidesharecdn.com/litvinenkoespigsiamcse17-170302223418/75/Low-rank-methods-for-analysis-of-high-dimensional-data-SIAM-CSE-talk-2017-3-2048.jpg)

![4*

Typical quantities of interest

Keeping all input and intermediate data in a tensor representation, one wants to perform different tasks:

evaluation for specific parameters (ω_1, ..., ω_M),

finding maxima and minima,

finding ‘level sets’ (needed for histograms and probability densities).

Example of a level set: all elements of a high-dimensional tensor that lie in the interval [0.7, 0.8].

-1 / 18](https://image.slidesharecdn.com/litvinenkoespigsiamcse17-170302223418/75/Low-rank-methods-for-analysis-of-high-dimensional-data-SIAM-CSE-talk-2017-4-2048.jpg)
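Evaluation for a specific multi-index is the simplest of these tasks, and a small sketch may help. It assumes the canonical format (a sum of R rank-1 terms) and a hypothetical storage layout `factors[mu][j]` for the j-th vector of direction mu; neither the layout nor the function name `cp_entry` comes from the slides. The entry is obtained from the factors alone, at cost O(dR), without forming the full array.

```python
from math import prod

def cp_entry(factors, index):
    """Entry u[i_1, ..., i_d] of a canonical-format tensor.

    factors[mu][j] is the j-th vector of direction mu (hypothetical layout),
    so u = sum_j factors[0][j] (x) ... (x) factors[d-1][j].
    """
    rank = len(factors[0])
    return sum(
        prod(factors[mu][j][i] for mu, i in enumerate(index))
        for j in range(rank)
    )

# Order d = 3, mode size n = 2, representation rank R = 2.
factors = [
    [[1.0, 2.0], [0.5, 0.0]],    # direction 1: two vectors of length 2
    [[1.0, -1.0], [2.0, 1.0]],   # direction 2
    [[3.0, 1.0], [1.0, 4.0]],    # direction 3
]
value = cp_entry(factors, (1, 0, 1))
```

Maxima and level sets cannot be read off entry by entry for large d, which is why they need the dedicated low-rank algorithms discussed on the later slides.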

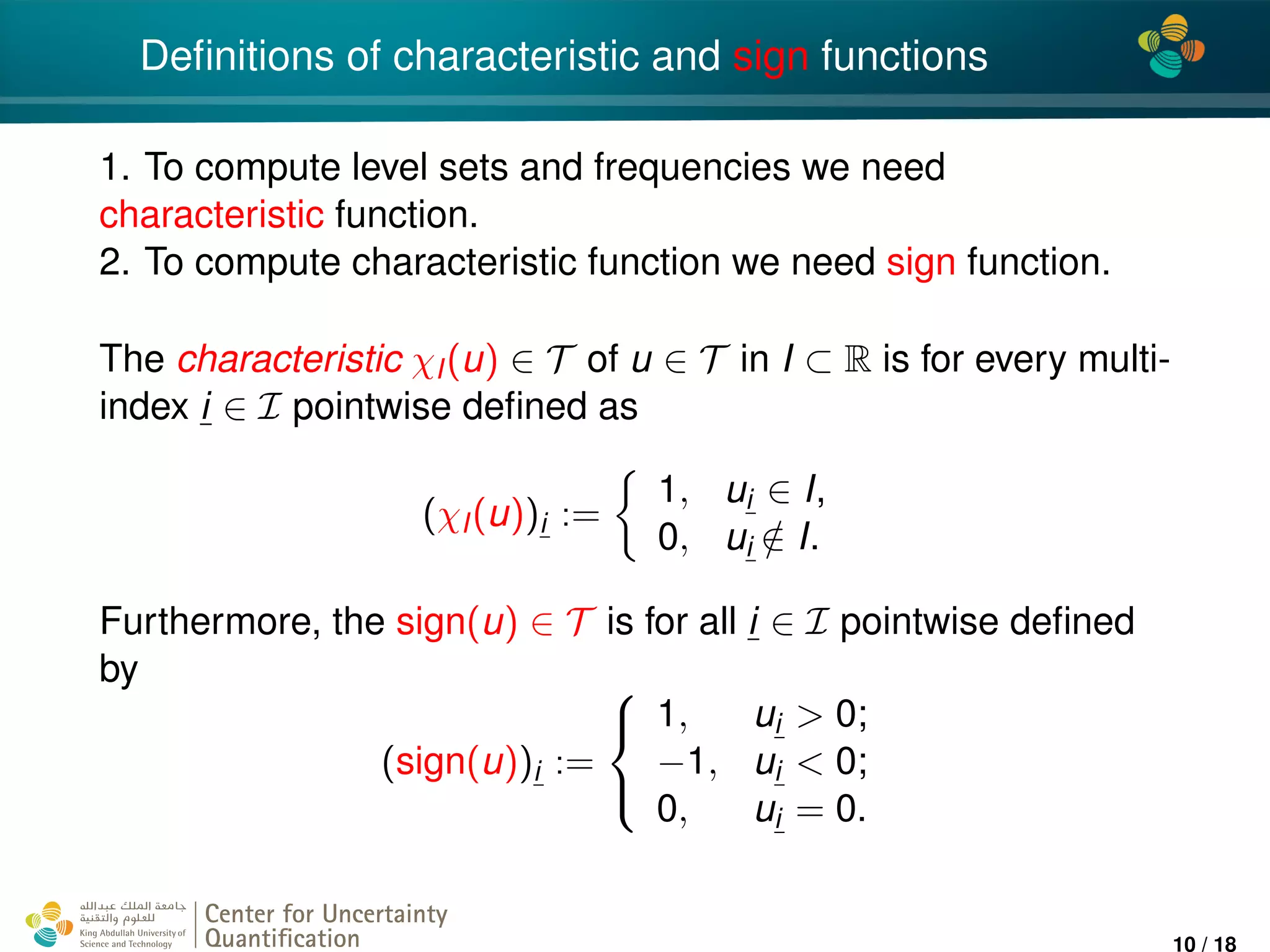

![4*

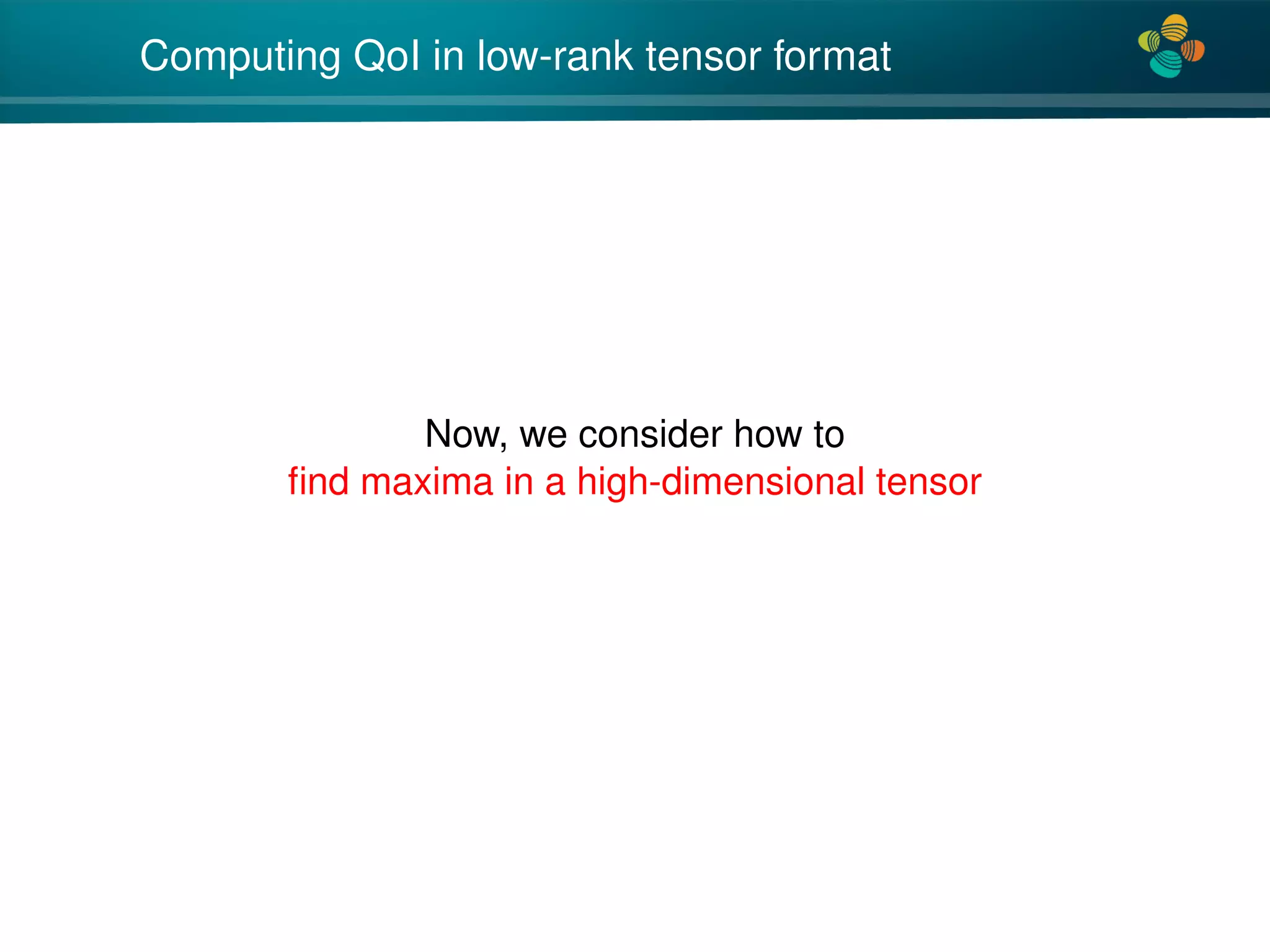

Canonical and Tucker tensor formats

[Pictures are taken from B. Khoromskij and A. Auer lecture course]

Storage: $O(n^d)$ → $O(dRn)$ (canonical) and $O(R^d + dRn)$ (Tucker).

1 / 18](https://image.slidesharecdn.com/litvinenkoespigsiamcse17-170302223418/75/Low-rank-methods-for-analysis-of-high-dimensional-data-SIAM-CSE-talk-2017-6-2048.jpg)
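To get a feeling for these storage counts, the following sketch evaluates them for one set of sizes. The function names and the values n = 100, d = 10, R = 5 are illustrative assumptions, and the counts ignore constants, as the O-notation does.

```python
def storage_full(n, d):
    """Number of entries of a full order-d tensor with mode size n."""
    return n ** d

def storage_canonical(n, d, R):
    """d directions, R vectors of length n in each direction."""
    return d * R * n

def storage_tucker(n, d, R):
    """Core tensor with R**d entries plus d factor matrices of size n x R."""
    return R ** d + d * R * n

n, d, R = 100, 10, 5
full = storage_full(n, d)            # 10**20 entries
canonical = storage_canonical(n, d, R)
tucker = storage_tucker(n, d, R)
```

The Tucker core still grows exponentially in d, so for large d the canonical count is the smaller of the two at equal R.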

![4*

Definition of tensor of order d

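A tensor of order $d$ is a multidimensional array over a $d$-tuple index set $I = I_1 \times \cdots \times I_d$,

$$A = [a_{i_1 \ldots i_d} : i_\ell \in I_\ell] \in \mathbb{R}^{I}, \quad I_\ell = \{1, \ldots, n_\ell\}, \quad \ell = 1, \ldots, d.$$

$A$ is an element of the linear space

$$V_n = \bigotimes_{\ell=1}^{d} V_\ell, \quad V_\ell = \mathbb{R}^{I_\ell},$$

equipped with the Euclidean scalar product $\langle \cdot, \cdot \rangle : V_n \times V_n \to \mathbb{R}$, defined as

$$\langle A, B \rangle := \sum_{(i_1 \ldots i_d) \in I} a_{i_1 \ldots i_d} \, b_{i_1 \ldots i_d}, \quad \text{for } A, B \in V_n.$$

Let $T := \bigotimes_{\mu=1}^{d} \mathbb{R}^{n_\mu}$ and

$$R_R(T) := \left\{ \sum_{i=1}^{R} \bigotimes_{\mu=1}^{d} v_{i\mu} \in T : v_{i\mu} \in \mathbb{R}^{n_\mu} \right\}.$$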

2 / 18](https://image.slidesharecdn.com/litvinenkoespigsiamcse17-170302223418/75/Low-rank-methods-for-analysis-of-high-dimensional-data-SIAM-CSE-talk-2017-7-2048.jpg)
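For two tensors given as sums of rank-1 terms, the Euclidean scalar product defined above factorizes into scalar products of the direction vectors, so it costs O(R_A R_B d n) instead of O(n^d). The sketch below checks this identity against the entrywise definition on a tiny example. The layout `A[mu][i]` (i-th vector of direction mu) and all function names are assumptions made for illustration.

```python
from itertools import product
from math import prod

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def cp_inner(A, B):
    """<A, B> = sum_i sum_j prod_mu <a_{i,mu}, b_{j,mu}> for canonical tensors."""
    d = len(A)
    return sum(
        prod(dot(A[mu][i], B[mu][j]) for mu in range(d))
        for i in range(len(A[0]))
        for j in range(len(B[0]))
    )

def cp_entry(factors, index):
    """Single entry of a canonical tensor, used only for the brute-force check."""
    return sum(
        prod(factors[mu][j][i] for mu, i in enumerate(index))
        for j in range(len(factors[0]))
    )

def full_inner(A, B):
    """Entrywise definition of the scalar product; cost grows like n**d."""
    shape = [len(A[mu][0]) for mu in range(len(A))]
    return sum(
        cp_entry(A, idx) * cp_entry(B, idx)
        for idx in product(*(range(n) for n in shape))
    )

# d = 3, n = 2; A has rank 2, B has rank 1 (integer data keeps the check exact).
A = [[[1, 2], [0, 1]], [[1, -1], [2, 1]], [[3, 1], [1, 4]]]
B = [[[1, 1]], [[2, 0]], [[1, 1]]]
low_rank_value = cp_inner(A, B)
brute_force_value = full_inner(A, B)
```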

![4*

Tensor and Matrices

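Rank-1 tensor:

$$A = u_1 \otimes u_2 \otimes \cdots \otimes u_d =: \bigotimes_{\mu=1}^{d} u_\mu, \qquad A_{i_1, \ldots, i_d} = (u_1)_{i_1} \cdots (u_d)_{i_d}.$$

Rank-1 tensor $A = u \otimes v$, matrix $A = u v^T$ or $A = v u^T$, with $u \in \mathbb{R}^n$, $v \in \mathbb{R}^m$.

Rank-$k$ tensor $A = \sum_{i=1}^{k} u_i \otimes v_i$, matrix $A = \sum_{i=1}^{k} u_i v_i^T$.

The Kronecker product of an $n \times n$ matrix $A$ and an $m \times m$ matrix $B$ is a new block matrix $A \otimes B \in \mathbb{R}^{nm \times nm}$ whose $ij$-th block is $[A_{ij} B]$.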

4 / 18](https://image.slidesharecdn.com/litvinenkoespigsiamcse17-170302223418/75/Low-rank-methods-for-analysis-of-high-dimensional-data-SIAM-CSE-talk-2017-9-2048.jpg)
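Both constructions on this slide are easy to spell out for small matrices. The sketch below uses plain nested lists; the helper names `outer` and `kron` are chosen here for illustration and do not refer to any particular library.

```python
def outer(u, v):
    """Rank-1 matrix u v^T with entries u_i * v_j."""
    return [[ui * vj for vj in v] for ui in u]

def kron(A, B):
    """Kronecker product: block matrix whose (i, j)-th block is A[i][j] * B."""
    return [
        [A[i][j] * B[k][l] for j in range(len(A[0])) for l in range(len(B[0]))]
        for i in range(len(A))
        for k in range(len(B))
    ]

rank_one = outer([1, 2], [3, 4, 5])
A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
K = kron(A, B)   # 4 x 4 matrix built from 2 x 2 blocks
```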

![4*

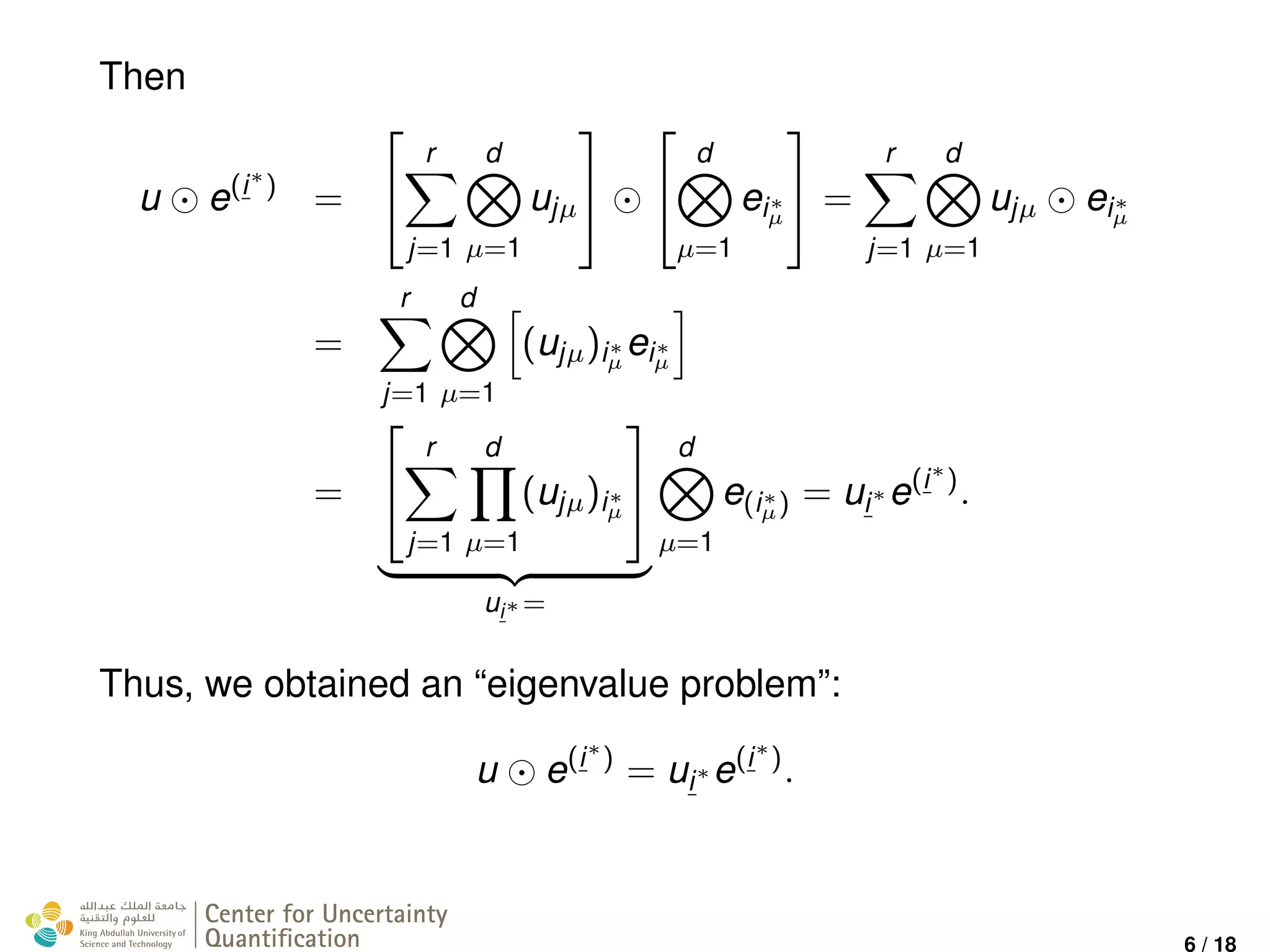

Computing $\|u\|_\infty$, $u \in R_r$, by vector iteration

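By defining the following diagonal matrix

$$D(u) := \sum_{j=1}^{r} \bigotimes_{\mu=1}^{d} \operatorname{diag}\big( (u_{j\mu})_{\ell_\mu} \big)_{\ell_\mu \in \mathbb{N}_{\le n_\mu}} \qquad (1)$$

with representation rank $r$, we obtain $D(u) v = u \odot v$, the entrywise (Hadamard) product.

Now apply the well-known vector iteration method (with rank truncation) to the eigenvalue problem

$$D(u) \, e^{(i^*)} = u_{i^*} \, e^{(i^*)}$$

to obtain $\|u\|_\infty$.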

[Approximate iteration, Khoromskij, Hackbusch, Tyrtyshnikov 05], and [Espig, Hackbusch 2010]

7 / 18](https://image.slidesharecdn.com/litvinenkoespigsiamcse17-170302223418/75/Low-rank-methods-for-analysis-of-high-dimensional-data-SIAM-CSE-talk-2017-13-2048.jpg)
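The vector iteration can be illustrated in the simplest setting, a rank-1 tensor u, which is an assumption made here so that no rank truncation is needed: the Hadamard product of two rank-1 tensors is again rank 1 and acts direction by direction. For representation rank r > 1 every product multiplies the ranks, and the truncation step mentioned on the slide becomes necessary; that step is not shown. The function names are hypothetical, and the iteration is only expected to converge when the entry of largest modulus is unique.

```python
from math import prod, sqrt

def hadamard(x, y):
    return [a * b for a, b in zip(x, y)]

def normalize(x):
    norm = sqrt(sum(a * a for a in x))
    return [a / norm for a in x]

def max_norm_rank1(u_factors, iterations=200):
    """Estimate ||u||_inf for u = u_1 (x) ... (x) u_d by vector iteration on D(u)."""
    # Start from the normalized all-ones tensor, which is itself rank 1.
    v = [normalize([1.0] * len(f)) for f in u_factors]
    for _ in range(iterations):
        # D(u) v = u (Hadamard) v, applied and normalized direction by direction.
        v = [normalize(hadamard(f, w)) for f, w in zip(u_factors, v)]
    # Rayleigh quotient <v, D(u) v> with <v, v> = 1; it factorizes over directions.
    rayleigh = prod(
        sum(fi * wi * wi for fi, wi in zip(f, w))
        for f, w in zip(u_factors, v)
    )
    return abs(rayleigh)

u_factors = [[1.0, -3.0, 2.0], [0.5, 2.0, -1.0]]
estimate = max_norm_rank1(u_factors)   # largest modulus is |(-3) * 2| = 6
```

The iterate v converges to the unit tensor e^(i*) that marks the position of the extreme entry, so the same loop also locates the maximum.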

![4*

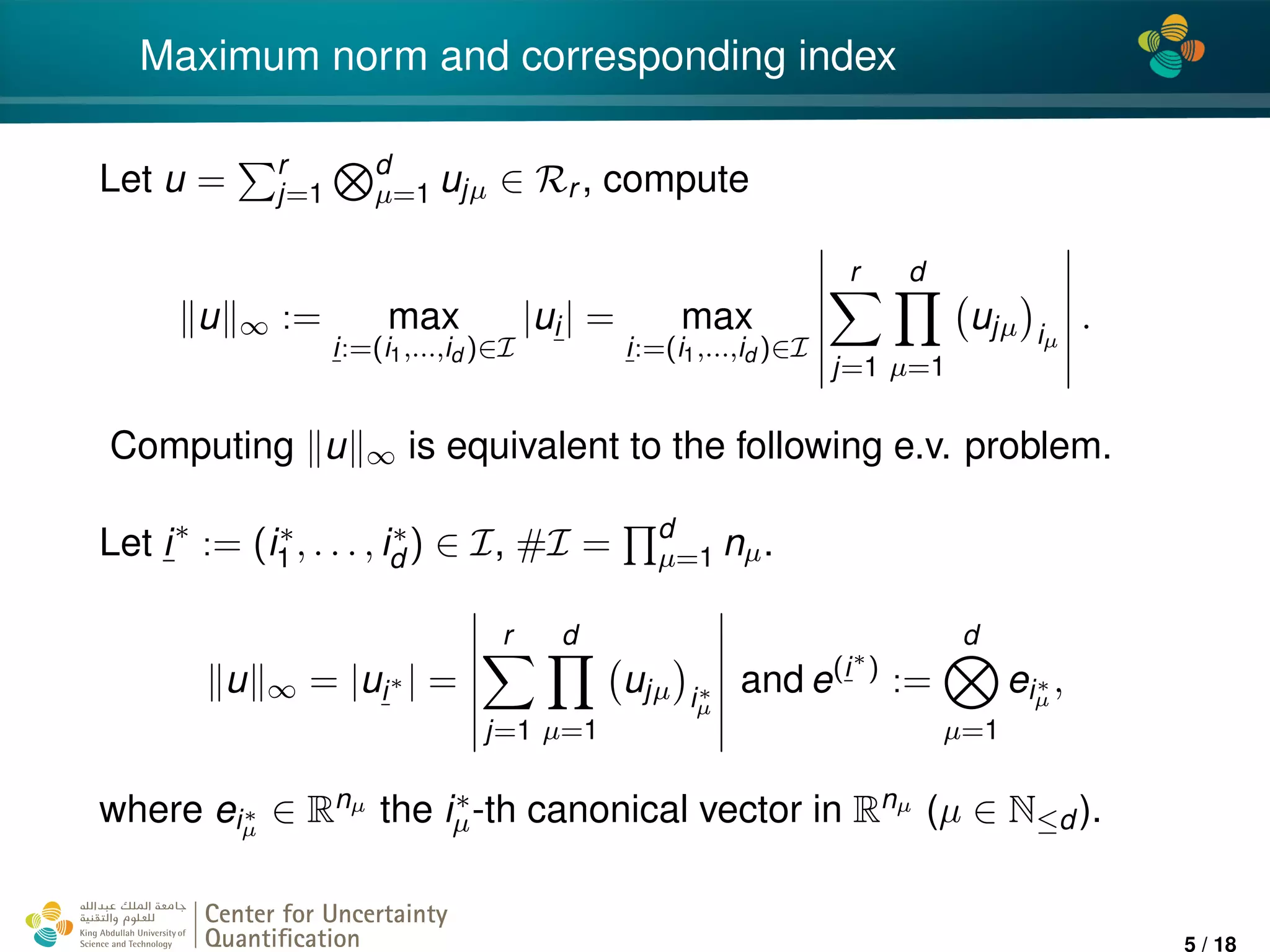

Computing QoI in low-rank tensor format

Now we consider how to find ‘level sets’, for instance, all entries of the tensor u that lie in the interval [a, b].](https://image.slidesharecdn.com/litvinenkoespigsiamcse17-170302223418/75/Low-rank-methods-for-analysis-of-high-dimensional-data-SIAM-CSE-talk-2017-16-2048.jpg)
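The slide does not say how the level set is computed, so the following is only one possible route, sketched under stated assumptions: the indicator of the interval is written as (sign(u - a) + sign(b - u)) / 2, and sign is approximated by the Newton-Schulz iteration x ← x(3 - x²)/2, which uses only entrywise products and sums, operations that low-rank formats support. For readability the sketch works on a short full vector; in a tensor format each step would be followed by rank truncation, and the scaling would use the maximum norm from the vector iteration. All names are hypothetical.

```python
def sign_newton_schulz(x, iterations=60):
    """Entrywise sign via x <- x * (3 - x*x) / 2 after scaling into [-1, 1]."""
    scale = max(abs(a) for a in x)   # assumes x is not identically zero
    s = [a / scale for a in x]
    for _ in range(iterations):
        s = [0.5 * a * (3.0 - a * a) for a in s]
    return s

def level_set_indicator(u, a, b):
    """Approximately 1 where a < u_i < b and 0 where u_i lies outside [a, b]."""
    lower = sign_newton_schulz([x - a for x in u])
    upper = sign_newton_schulz([b - x for x in u])
    return [0.5 * (p + q) for p, q in zip(lower, upper)]

u = [0.1, 0.75, 0.72, 0.9, 0.79, 0.3]
indicator = level_set_indicator(u, 0.7, 0.8)
inside = [i for i, chi in enumerate(indicator) if chi > 0.5]
```

Entries equal to a or b have sign 0 and receive the value 0.5, so the closed endpoints need separate handling. Entries very close to a or b also converge slowly, since the iteration grows a small value by a factor of about 1.5 per step.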