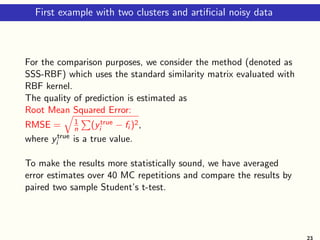

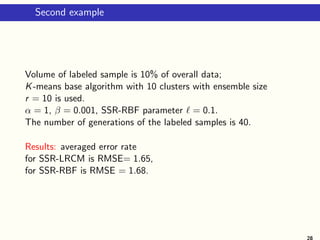

This document summarizes a semi-supervised regression method that combines graph Laplacian regularization with cluster ensemble methodology. It proposes using a weighted averaged co-association matrix from the cluster ensemble as the similarity matrix in graph Laplacian regularization. The method (SSR-LRCM) finds a low-rank approximation of the co-association matrix to efficiently solve the regression problem. Experimental results on synthetic and real-world datasets show SSR-LRCM achieves significantly better prediction accuracy than an alternative method, while also having lower computational costs for large datasets. Future work will explore using a hierarchical matrix approximation instead of low-rank.

![Combined semi-supervised regression and ensemble clustering

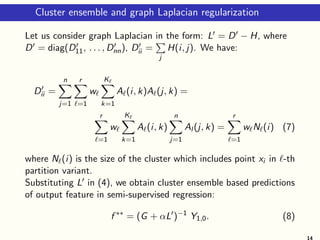

Graph Laplacian regularization:

find f ∗ such that f ∗ = arg min

f ∈Rn

Q(f ), where

Q(f ) :=

1

2

xi ∈X1

(fi − yi )2

+ α

xi ,xj ∈X

wij (fi − fj )2

+ β||f ||2

,

(1)

f = (f1, . . . , fn) is a vector of predicted outputs: fi = f (xi );

α, β > 0 are regularization parameters,

W = (wij ) is data similarity matrix, e.g. Mat´ern family:

W (h) = σ2

2ν−1Γ(ν)

h ν

Kν

h

with three parameters , ν, and σ2.

[1st term in (1) minimizes fitting error on labeled data; the 2nd term aims to

obtain ”smooth” predictions on both labeled and unlabeled sample; 3rd - is

Tikhonov’s regularizer]

8](https://image.slidesharecdn.com/talklitvinenkocrete-190623195510/85/Semi-Supervised-Regression-using-Cluster-Ensemble-8-320.jpg)

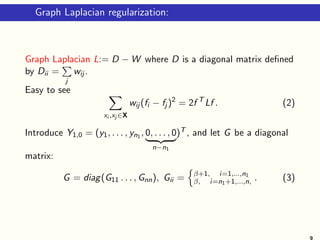

![Co-association matrix of cluster ensemble

Use a co-association matrix of cluster ensemble as similarity matrix

in (1).

Let us consider a set of partition variants {P }r

=1, where

P = {C ,1, . . . , C ,K }, C ,k ⊂ X,

C ,k C ,k = ∅,

K is number of clusters in -th partition.

For each P we determine matrix H = (h (i, j))n

i,j=1 with elements

indicating whether a pair xi , xj belong to the same cluster in -th

variant or not:

h (i, j) = I[c (xi ) = c (xj )], where

I(·) is indicator function (I[true] = 1, I[false] = 0),

c (x) is cluster label assigned to x.

11](https://image.slidesharecdn.com/talklitvinenkocrete-190623195510/85/Semi-Supervised-Regression-using-Cluster-Ensemble-11-320.jpg)

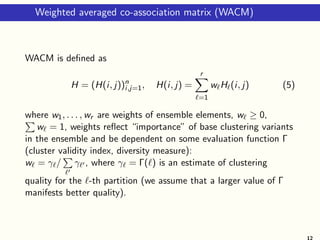

![Low-rank approximation of WACM

Proposition 1. Weighted averaged co-association matrix admits

low-rank decomposition in the form:

H = BBT

, B = [B1B2 . . . Br ] (6)

where B is a block matrix, B =

√

w A , A is (n × K ) cluster

assignment matrix for -th partition: A (i, k) = I[c(xi ) = k],

i = 1, . . . , n, k = 1, . . . , K .

13](https://image.slidesharecdn.com/talklitvinenkocrete-190623195510/85/Semi-Supervised-Regression-using-Cluster-Ensemble-13-320.jpg)