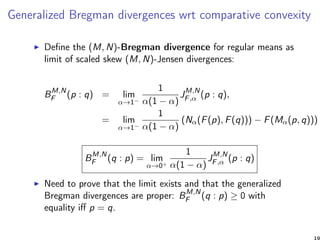

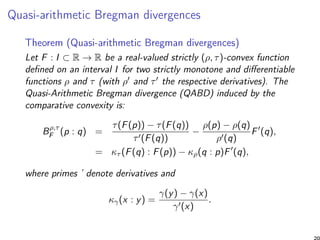

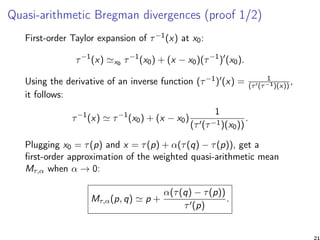

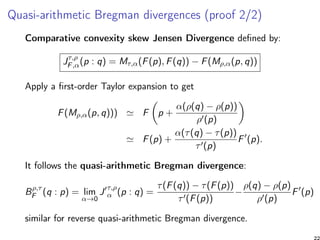

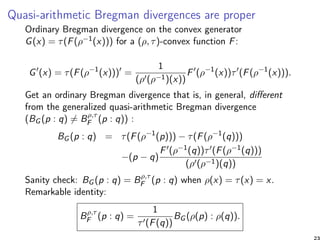

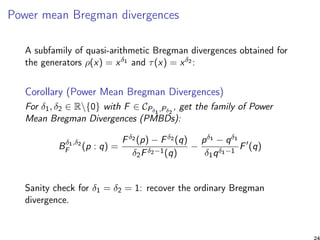

This document discusses generalized divergences and comparative convexity. It introduces Jensen divergences, Bregman divergences, and their generalizations to quasi-arithmetic and weighted means. Quasi-arithmetic Bregman divergences are defined for strictly $(\rho,\tau)$-convex functions using two strictly monotone functions $\rho$ and $\tau$. Power mean Bregman divergences are obtained as a subfamily when $\rho(x)=x^{\delta_1}$ and $\tau(x)=x^{\delta_2}$. A criterion is given to check $(\rho,\tau)$-convexity by testing the ordinary convexity of the transformed function $G=F_{\rho,\tau}$.

![Midpoint convexity, continuity and convexity

In 1906, Jensen [9] introduced the midpoint convexity property of a function $F$:

$$\frac{F(p) + F(q)}{2} \geq F\left(\frac{p + q}{2}\right), \quad \forall p, q \in \mathcal{X}.$$

A continuous function $F$ that satisfies midpoint convexity is also convex [14]:

$$\forall p, q \in \mathcal{X},\ \forall \lambda \in [0, 1], \quad F(\lambda p + (1 - \lambda) q) \leq \lambda F(p) + (1 - \lambda) F(q).$$

When the inequality is strict for distinct points $p \neq q$ and $\lambda \in (0, 1)$, it defines the strict convexity of $F$.

A function satisfying only the midpoint convexity inequality may not be continuous [11] (and hence not convex).

3](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-3-320.jpg)

![Jensen divergences and skew Jensen divergences

A divergence $D(p, q)$ is proper iff $D(p, q) \geq 0$ with equality iff $p = q$.

Jensen divergence (Burbea and Rao divergence [6]) defined for a strictly convex function $F \in \mathcal{F}_{<}$:

$$J_F(p, q) := \frac{F(p) + F(q)}{2} - F\left(\frac{p + q}{2}\right).$$

Skew Jensen divergences [19, 15] for $\alpha \in (0, 1)$:

$$J_{F,\alpha}(p : q) := (1 - \alpha) F(p) + \alpha F(q) - F((1 - \alpha) p + \alpha q),$$

with $J_{F,\alpha}(q : p) = J_{F,1-\alpha}(p : q)$.

4](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-4-320.jpg)
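
The skew Jensen divergence of this slide can be sketched numerically for a scalar generator. The generator `neg_entropy` (F(x) = x log x on positive reals) and the sample points are illustrative assumptions, not taken from the slides.

```python
import math

def skew_jensen(F, p, q, alpha=0.5):
    """Skew Jensen divergence J_{F,alpha}(p : q) for a scalar convex generator F.

    alpha = 1/2 gives the (symmetric) Jensen divergence J_F(p, q).
    """
    return (1 - alpha) * F(p) + alpha * F(q) - F((1 - alpha) * p + alpha * q)

def neg_entropy(x):
    # Illustrative strictly convex generator on (0, inf): F(x) = x log x.
    return x * math.log(x)

p, q = 0.2, 0.7
j_mid = skew_jensen(neg_entropy, p, q)          # Jensen divergence
j_skew = skew_jensen(neg_entropy, p, q, 0.3)    # skewed version
j_swapped = skew_jensen(neg_entropy, q, p, 0.7) # equals j_skew up to rounding
```

Swapping the arguments while replacing `alpha` by `1 - alpha` leaves the value unchanged, which is the symmetry relation stated on the slide.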

![Bregman divergences

Bregman divergences [4] (1967) for a strictly convex and differentiable generator $F$:

$$B_F(p : q) := F(p) - F(q) - (p - q) F'(q).$$

Scaled skew Jensen divergences [19, 15]:

$$J'_{F,\alpha}(p : q) := \frac{1}{\alpha(1 - \alpha)} J_{F,\alpha}(p : q),$$

with limit cases recovering Bregman divergences:

$$\lim_{\alpha \to 1^-} J'_{F,\alpha}(p : q) = B_F(p : q), \qquad \lim_{\alpha \to 0^+} J'_{F,\alpha}(p : q) = B_F(q : p).$$

5](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-5-320.jpg)
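
The two limit cases can be checked numerically by evaluating the scaled skew Jensen divergence at values of α close to 1 and 0. This is a minimal sketch: the generator F(x) = x log x, its derivative, the sample points and the offset `1e-6` are all illustrative assumptions.

```python
import math

def bregman(F, dF, p, q):
    """Bregman divergence B_F(p : q) = F(p) - F(q) - (p - q) F'(q)."""
    return F(p) - F(q) - (p - q) * dF(q)

def scaled_skew_jensen(F, p, q, alpha):
    """Skew Jensen divergence divided by alpha * (1 - alpha)."""
    j = (1 - alpha) * F(p) + alpha * F(q) - F((1 - alpha) * p + alpha * q)
    return j / (alpha * (1 - alpha))

def F(x):
    # Illustrative generator: F(x) = x log x on (0, inf).
    return x * math.log(x)

def dF(x):
    # Derivative of the illustrative generator.
    return math.log(x) + 1.0

p, q = 0.2, 0.7
near_one = scaled_skew_jensen(F, p, q, 1 - 1e-6)   # approximates B_F(p : q)
near_zero = scaled_skew_jensen(F, p, q, 1e-6)      # approximates B_F(q : p)
```

The agreement is only approximate, since α stays strictly inside (0, 1); the gap shrinks as the offset decreases, until floating-point cancellation dominates.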

![Jensen midpoint convexity wrt comparative convexity [14]

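Let $M(\cdot, \cdot)$ and $N(\cdot, \cdot)$ be two abstract mean functions [5], that is, functions satisfying

$$\min\{p, q\} \leq M(p, q) \leq \max\{p, q\}. \quad (1)$$

A function $F$ is said to be midpoint $(M, N)$-convex, written $F \in \mathcal{C}^{\leq}_{M,N}$, iff

$$\forall p, q \in \mathcal{X}, \quad F(M(p, q)) \leq N(F(p), F(q)).$$

When $M(p, q) = N(p, q) = A(p, q) = \frac{p+q}{2}$ are both the arithmetic mean, we recover the ordinary Jensen midpoint convexity:

$$\forall p, q \in \mathcal{X}, \quad F\left(\frac{p + q}{2}\right) \leq \frac{F(p) + F(q)}{2}.$$

Note that there exist discontinuous functions that satisfy the Jensen midpoint convexity.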

7](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-7-320.jpg)

![Regular means

A mean is said to be regular if it is

- homogeneous: $M(\lambda p, \lambda q) = \lambda M(p, q)$ for any $\lambda > 0$,
- symmetric: $M(p, q) = M(q, p)$,
- continuous,
- increasing in each variable.

Examples of regular means are the power means, or Hölder means [8]:

$$P_\delta(x, y) = \left(\frac{x^\delta + y^\delta}{2}\right)^{\frac{1}{\delta}}, \qquad P_0(x, y) = G(x, y) = \sqrt{xy}.$$

Power means belong to a broader family of quasi-arithmetic means. For a continuous and strictly increasing function $f : I \subset \mathbb{R} \to J \subset \mathbb{R}$:

$$M_f(p, q) := f^{-1}\left(\frac{f(p) + f(q)}{2}\right).$$

These are also called Kolmogorov-Nagumo-de Finetti means [10, 13, 7].

9](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-9-320.jpg)
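
As a small illustration of the two definitions on this slide, the sketch below implements the power mean and the quasi-arithmetic mean for two positive numbers. The caller supplies the generator `f` together with its inverse `f_inv`; that interface is an assumption made for the example.

```python
import math

def power_mean(x, y, delta):
    """Power (Hölder) mean P_delta(x, y) of two positive numbers."""
    if delta == 0:
        return math.sqrt(x * y)  # limit case: geometric mean
    return ((x ** delta + y ** delta) / 2) ** (1 / delta)

def quasi_arithmetic_mean(f, f_inv, p, q):
    """Quasi-arithmetic mean M_f(p, q) = f^{-1}((f(p) + f(q)) / 2)."""
    return f_inv((f(p) + f(q)) / 2)

arithmetic = power_mean(2.0, 8.0, 1)    # (2 + 8) / 2
geometric = power_mean(2.0, 8.0, 0)     # sqrt(2 * 8)
harmonic = power_mean(2.0, 8.0, -1)     # 2 / (1/2 + 1/8)

# The geometric mean is also the quasi-arithmetic mean with generator f = log.
geometric_qam = quasi_arithmetic_mean(math.log, math.exp, 2.0, 8.0)
```

Choosing `f(x) = x ** delta` in `quasi_arithmetic_mean` recovers `power_mean` for nonzero `delta`, which is the sense in which power means form a subfamily.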

![Lagrange means [3]

Lagrange means [3] (Lagrangean means) are derived from the mean value theorem.

Assume without loss of generality that $p < q$, so that the mean $m \in [p, q]$.

From the mean value theorem, we have for a differentiable function $f$:

$$\exists \lambda \in [p, q] : f'(\lambda) = \frac{f(q) - f(p)}{q - p}.$$

Thus, when $f'$ is a monotonic function, its inverse function $(f')^{-1}$ is well-defined, and the unique mean-value mean $\lambda \in [p, q]$ can be defined as:

$$L_f(p, q) = \lambda = (f')^{-1}\left(\frac{f(q) - f(p)}{q - p}\right).$$

11](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-11-320.jpg)

![An example of a Lagrange mean [3]

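For example, taking $f(x) = \log(x)$, so that $f'(x) = (f')^{-1}(x) = \frac{1}{x}$, we get the logarithmic mean ($L$), which is not a quasi-arithmetic mean:

$$m(p, q) = \begin{cases} 0 & \text{if } p = 0 \text{ or } q = 0, \\ p & \text{if } p = q, \\ \frac{q - p}{\log q - \log p} & \text{otherwise.} \end{cases}$$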

12](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-12-320.jpg)
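
The construction of a Lagrange mean from the mean value theorem can be sketched as follows. The helper takes `f` and the inverse of its derivative, `df_inv`, as arguments; this signature is an assumption made for the example, and the logarithmic mean is recovered by passing `math.log` and the map y ↦ 1/y.

```python
import math

def lagrange_mean(f, df_inv, p, q):
    """Lagrange mean L_f(p, q) = (f')^{-1}((f(q) - f(p)) / (q - p)).

    df_inv is the inverse of the derivative f', supplied by the caller.
    """
    if p == q:
        return p
    return df_inv((f(q) - f(p)) / (q - p))

def logarithmic_mean(p, q):
    """Closed form of the logarithmic mean, with the two special cases."""
    if p == 0 or q == 0:
        return 0.0
    if p == q:
        return p
    return (q - p) / (math.log(q) - math.log(p))

# f = log has f'(x) = 1/x, and the map y -> 1/y is its own inverse.
via_lagrange = lagrange_mean(math.log, lambda y: 1.0 / y, 2.0, 8.0)
closed_form = logarithmic_mean(2.0, 8.0)
```

For 2 and 8 the value falls between the geometric mean 4 and the arithmetic mean 5, as expected for the logarithmic mean.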

![Cauchy means [12]

Two positive, differentiable and strictly monotonic functions $f$ and $g$ such that $\frac{f'}{g'}$ has an inverse function.

The Cauchy mean-value mean:

$$C_{f,g}(p, q) = \left(\frac{f'}{g'}\right)^{-1}\left(\frac{f(q) - f(p)}{g(q) - g(p)}\right), \quad q \neq p,$$

with $C_{f,g}(p, p) = p$.

Cauchy means as Lagrange means [12], by the following identity:

$$C_{f,g}(p, q) = L_{f \circ g^{-1}}(g(p), g(q)),$$

since $((f \circ g^{-1})(x))' = \frac{f'(g^{-1}(x))}{g'(g^{-1}(x))}$.

Proof:

$$\begin{aligned}
L_{f \circ g^{-1}}(g(p), g(q)) &= \left((f \circ g^{-1})'(g(x))\right)^{-1}\left(\frac{(f \circ g^{-1})(g(q)) - (f \circ g^{-1})(g(p))}{g(q) - g(p)}\right), \\
&= \left(\frac{f'(g^{-1}(g(x)))}{g'(g^{-1}(g(x)))}\right)^{-1}\left(\frac{f(q) - f(p)}{g(q) - g(p)}\right), \\
&= C_{f,g}(p, q).
\end{aligned}$$

13](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-13-320.jpg)
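
A numerical sketch of the Cauchy mean and of its link with Lagrange means follows. The pair f(x) = x³, g(x) = x² and the hand-derived inverses are illustrative assumptions. In the proof above the derivative of f ∘ g⁻¹ is evaluated at g(x) and inverted as a function of x; with a Lagrange mean taken directly in the variable u = g(x), the result lies between g(p) and g(q), so the sketch maps it back through g⁻¹ before comparing. That reading of the identity is an assumption of this example.

```python
import math

def cauchy_mean(f, g, ratio_inv, p, q):
    """Cauchy mean C_{f,g}(p, q); ratio_inv is the inverse of x -> f'(x) / g'(x)."""
    if p == q:
        return p
    return ratio_inv((f(q) - f(p)) / (g(q) - g(p)))

def lagrange_mean(h, dh_inv, a, b):
    """Lagrange mean L_h(a, b); dh_inv is the inverse of the derivative h'."""
    if a == b:
        return a
    return dh_inv((h(b) - h(a)) / (b - a))

# Illustrative choice on positive reals: f(x) = x^3, g(x) = x^2.
f = lambda x: x ** 3
g = lambda x: x ** 2
g_inv = math.sqrt
ratio_inv = lambda y: y / 1.5        # inverse of f'(x) / g'(x) = 1.5 x
h = lambda u: u ** 1.5               # h = f o g^{-1}
dh_inv = lambda y: (y / 1.5) ** 2    # inverse of h'(u) = 1.5 sqrt(u)

p, q = 1.0, 2.0
c = cauchy_mean(f, g, ratio_inv, p, q)
via_lagrange = g_inv(lagrange_mean(h, dh_inv, g(p), g(q)))
```

For this pair the Cauchy mean has the closed form (2/3)(p² + pq + q²)/(p + q), which equals 14/9 at p = 1, q = 2.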

![Stolarsky regular means

The Stolarsky regular means are neither quasi-arithmetic means nor mean-value means [5], and are defined by:

$$S_p(x, y) = \left(\frac{x^p - y^p}{p(x - y)}\right)^{\frac{1}{p-1}}, \quad p \notin \{0, 1\}.$$

In the limit cases, the Stolarsky family of means yields the logarithmic mean ($L$) when $p \to 0$:

$$L(x, y) = \frac{y - x}{\log y - \log x},$$

and the identric mean ($I$) when $p \to 1$:

$$I(x, y) = \frac{1}{e}\left(\frac{y^y}{x^x}\right)^{\frac{1}{y-x}}.$$

14](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-14-320.jpg)
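
The Stolarsky family and its two limit cases can be explored numerically with the sketch below. The function name and the explicit branches for p = 0 and p = 1 are choices made for this example; the p = 1 branch uses the standard form of the identric mean, which carries a factor 1/e so that I(x, x) = x.

```python
import math

def stolarsky_mean(x, y, p):
    """Stolarsky mean S_p(x, y) of two positive numbers, with limit cases."""
    if x == y:
        return x
    if p == 0:
        # Limit p -> 0: logarithmic mean.
        return (y - x) / (math.log(y) - math.log(x))
    if p == 1:
        # Limit p -> 1: identric mean (1/e) * (y^y / x^x)^(1 / (y - x)),
        # computed in the log domain for numerical stability.
        return math.exp((y * math.log(y) - x * math.log(x)) / (y - x) - 1.0)
    return ((x ** p - y ** p) / (p * (x - y))) ** (1.0 / (p - 1.0))

x, y = 2.0, 8.0
log_mean = stolarsky_mean(x, y, 0)
identric = stolarsky_mean(x, y, 1)
near_zero = stolarsky_mean(x, y, 1e-6)       # close to log_mean
near_one = stolarsky_mean(x, y, 1 + 1e-6)    # close to identric
arithmetic = stolarsky_mean(x, y, 2)         # S_2 is the arithmetic mean
```

Evaluating the generic formula just next to p = 0 and p = 1 gives values close to the two closed-form limits.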

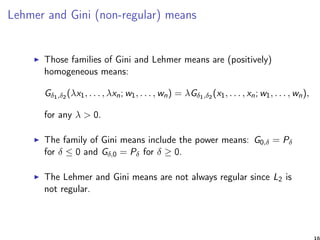

![Lehmer and Gini (non-regular) means

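The weighted Lehmer mean [2] of order $\delta$ is defined for $\delta \in \mathbb{R}$ as:

$$L_\delta(x_1, \ldots, x_n; w_1, \ldots, w_n) = \frac{\sum_{i=1}^n w_i x_i^{\delta+1}}{\sum_{i=1}^n w_i x_i^{\delta}}.$$

The Lehmer means intersect with the Hölder means only for the arithmetic ($A$), geometric ($G$) and harmonic ($H$) means.

Lehmer barycentric means belong to the Gini means:

$$G_{\delta_1,\delta_2}(x_1, \ldots, x_n; w_1, \ldots, w_n) = \left(\frac{\sum_{i=1}^n w_i x_i^{\delta_1}}{\sum_{i=1}^n w_i x_i^{\delta_2}}\right)^{\frac{1}{\delta_1-\delta_2}},$$

when $\delta_1 \neq \delta_2$, and

$$G_{\delta_1,\delta_2}(x_1, \ldots, x_n; w_1, \ldots, w_n) = \left(\prod_{i=1}^n x_i^{w_i x_i^{\delta}}\right)^{\frac{1}{\sum_{i=1}^n w_i x_i^{\delta}}},$$

when $\delta_1 = \delta_2 = \delta$.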

15](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-15-320.jpg)
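
The inclusion of Lehmer means in the Gini family can be made concrete: with δ₁ = δ + 1 and δ₂ = δ, the exponent 1/(δ₁ − δ₂) equals 1 and the Gini formula reduces to the Lehmer ratio. The sketch below assumes positive inputs and positive weights; function names are illustrative.

```python
import math

def gini_mean(xs, ws, d1, d2):
    """Weighted Gini mean G_{d1,d2} of positive numbers xs with weights ws."""
    if d1 != d2:
        num = sum(w * x ** d1 for x, w in zip(xs, ws))
        den = sum(w * x ** d2 for x, w in zip(xs, ws))
        return (num / den) ** (1.0 / (d1 - d2))
    # Case d1 == d2 == d: weighted product form, computed in the log domain.
    den = sum(w * x ** d1 for x, w in zip(xs, ws))
    log_num = sum(w * x ** d1 * math.log(x) for x, w in zip(xs, ws))
    return math.exp(log_num / den)

def lehmer_mean(xs, ws, delta):
    """Weighted Lehmer mean of order delta, written as a Gini mean."""
    return gini_mean(xs, ws, delta + 1, delta)

xs, ws = [2.0, 8.0], [0.5, 0.5]
arithmetic = lehmer_mean(xs, ws, 0)       # order 0
harmonic = lehmer_mean(xs, ws, -1)        # order -1
geometric = lehmer_mean(xs, ws, -0.5)     # order -1/2, two equal weights
geometric_gini = gini_mean(xs, ws, 0, 0)  # d1 = d2 = 0
```

For two equally weighted values, orders 0, −1 and −1/2 give the arithmetic, harmonic and geometric means, the three cases the slide lists as shared with the Hölder family.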

![Weighted abstract means and (M, N)-convexity

Consider weighted means $M$ and $N$, with the shorthand

$$M_\alpha(p, q) := M(p, q; 1 - \alpha, \alpha).$$

A function $F$ is $(M, N)$-convex if and only if:

$$F(M(p, q; 1 - \alpha, \alpha)) \leq N(F(p), F(q); 1 - \alpha, \alpha).$$

For regular means and a continuous function $F$, define the skew $(M, N)$-Jensen divergence:

$$J^{M,N}_{F,\alpha}(p : q) = N_\alpha(F(p), F(q)) - F(M_\alpha(p, q)) \geq 0.$$

We have $J^{M,N}_{F,\alpha}(q : p) = J^{M,N}_{F,1-\alpha}(p : q)$.

A continuous function that is midpoint $(M, N)$-convex satisfies the $(M, N)$-convexity property (Theorem A of [14]):

$$N_\alpha(F(p), F(q)) \geq F(M_\alpha(p, q)), \quad \forall p, q \in \mathcal{X},\ \forall \alpha \in [0, 1].$$

17](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-17-320.jpg)
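
A minimal sketch of the skew (M, N)-Jensen divergence follows, taking M to be the weighted geometric mean and N the weighted arithmetic mean. The weighted quasi-arithmetic form f⁻¹((1 − α) f(p) + α f(q)) is the usual weighted extension and is assumed here, since the slide does not spell it out. The generator F(x) = x² is an illustrative choice that is convex with respect to this pair of means, because F(eᵘ) = e²ᵘ is convex in u.

```python
import math

def weighted_qam(f, f_inv, p, q, alpha):
    """Weighted quasi-arithmetic mean f^{-1}((1 - alpha) f(p) + alpha f(q))."""
    return f_inv((1 - alpha) * f(p) + alpha * f(q))

def skew_mn_jensen(F, M, N, p, q, alpha):
    """Skew (M, N)-Jensen divergence N_alpha(F(p), F(q)) - F(M_alpha(p, q))."""
    return N(F(p), F(q), alpha) - F(M(p, q, alpha))

def geometric(p, q, alpha):
    # Weighted geometric mean as a quasi-arithmetic mean with generator log.
    return weighted_qam(math.log, math.exp, p, q, alpha)

def arithmetic(p, q, alpha):
    # Weighted arithmetic mean.
    return (1 - alpha) * p + alpha * q

def F(x):
    # Illustrative generator, convex with respect to (geometric, arithmetic).
    return x * x

d = skew_mn_jensen(F, geometric, arithmetic, 2.0, 8.0, 0.3)
d_swapped = skew_mn_jensen(F, geometric, arithmetic, 8.0, 2.0, 0.7)
```

The two values agree up to rounding, matching the relation between swapping the arguments and replacing α by 1 − α.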

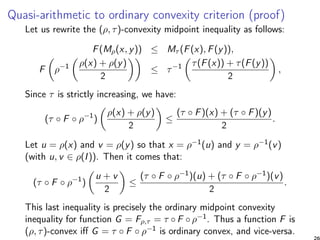

![Quasi-arithmetic to ordinary convexity criterion

To check whether a function $F$ is $(M, N)$-convex for quasi-arithmetic means $M = M_\rho$ and $N = M_\tau$, we use an equivalent test of ordinary convexity:

Lemma ($(\rho, \tau)$-convexity ↔ ordinary convexity [1])

Let $\rho : I \to \mathbb{R}$ and $\tau : J \to \mathbb{R}$ be two continuous and strictly monotone real-valued functions with $\tau$ increasing. Then a function $F : I \to J$ is $(\rho, \tau)$-convex iff the function $G = F_{\rho,\tau} = \tau \circ F \circ \rho^{-1}$ is (ordinary) convex on $\rho(I)$.

$$F \in \mathcal{C}^{\leq}_{\rho,\tau} \Leftrightarrow G = \tau \circ F \circ \rho^{-1} \in \mathcal{C}^{\leq}$$](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-25-320.jpg)
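
The lemma suggests a practical recipe: build G = τ ∘ F ∘ ρ⁻¹ and test G for ordinary convexity. The sketch below does this with a grid-based second-difference check, which is a numerical heuristic and not a proof; the helper names, the grid size and the tolerance are assumptions of this example. It illustrates that the square root, which is not convex in the ordinary sense, is convex with respect to the geometric and arithmetic means (ρ = log, τ = identity).

```python
import math

def transformed(F, rho_inv, tau):
    """Return G = tau o F o rho^{-1} as a Python callable."""
    return lambda u: tau(F(rho_inv(u)))

def looks_convex(G, lo, hi, n=200, tol=1e-12):
    """Heuristic check: all second differences of G on a uniform grid are >= -tol."""
    h = (hi - lo) / n
    us = [lo + i * h for i in range(n + 1)]
    return all(
        G(us[i - 1]) + G(us[i + 1]) - 2.0 * G(us[i]) >= -tol
        for i in range(1, n)
    )

identity = lambda v: v

# rho = log (so rho^{-1} = exp), tau = identity: G(u) = sqrt(exp(u)) = exp(u / 2).
G = transformed(math.sqrt, math.exp, identity)

sqrt_is_ga_convex = looks_convex(G, math.log(0.5), math.log(10.0))
sqrt_is_convex = looks_convex(math.sqrt, 0.5, 10.0)
```

A passing grid check only supports convexity on the sampled interval; a rigorous argument would examine G analytically, for instance through the sign of its second derivative.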

![Summary of contributions [17, 16]

- Defined generalized Jensen divergences and generalized Bregman divergences using comparative convexity.
- Reported an explicit formula for the quasi-arithmetic Bregman divergences (QABD).
- The QABD can be interpreted as a conformal Bregman divergence [18] on the $\rho$-representation.
- Emphasized that the theory of means [5] is at the very heart of distances.
- See the arXiv report [16] for further results, including a generalization of the Bhattacharyya statistical distance using comparable means.

27](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-27-320.jpg)

![References I

[1] John Aczél.

A generalization of the notion of convex functions.

Det Kongelige Norske Videnskabers Selskabs Forhandlinger, Trondheim, 19(24):87–90, 1947.

[2] Gleb Beliakov, Humberto Bustince Sola, and Tomasa Calvo Sánchez.

A practical guide to averaging functions, volume 329.

Springer, 2015.

[3] Lucio R Berrone and Julio Moro.

Lagrangian means.

Aequationes Mathematicae, 55(3):217–226, 1998.

[4] Lev M Bregman.

The relaxation method of finding the common point of convex sets and its application to the

solution of problems in convex programming.

USSR computational mathematics and mathematical physics, 7(3):200–217, 1967.

[5] Peter S Bullen, Dragoslav S Mitrinovic, and M Vasic.

Means and their Inequalities, volume 31.

Springer Science & Business Media, 2013.

[6] Jacob Burbea and C Rao.

On the convexity of some divergence measures based on entropy functions.

IEEE Transactions on Information Theory, 28(3):489–495, 1982.

[7] Bruno De Finetti.

Sul concetto di media.

Istituto italiano degli attuari, 3:369–396, 1931.

[8] Otto Ludwig Hölder.

Über einen Mittelwertssatz.

Nachr. Akad. Wiss. Göttingen Math.-Phys. Kl., pages 38–47, 1889.

28](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-28-320.jpg)

![References II

[9] Johan Ludwig William Valdemar Jensen.

Sur les fonctions convexes et les inégalités entre les valeurs moyennes.

Acta mathematica, 30(1):175–193, 1906.

[10] Andrey Nikolaevich Kolmogorov.

Sur la notion de moyenne.

Acad. Naz. Lincei Mem. Cl. Sci. His. Mat. Natur. Sez., 12:388–391, 1930.

[11] Gyula Maksa and Zsolt Páles.

Convexity with respect to families of means.

Aequationes mathematicae, 89(1):161–167, 2015.

[12] Janusz Matkowski.

On weighted extensions of Cauchy’s means.

Journal of mathematical analysis and applications, 319(1):215–227, 2006.

[13] Mitio Nagumo.

Über eine klasse der mittelwerte.

In Japanese journal of mathematics: transactions and abstracts, volume 7, pages 71–79. The

Mathematical Society of Japan, 1930.

[14] Constantin P. Niculescu and Lars-Erik Persson.

Convex functions and their applications: A contemporary approach.

Springer Science & Business Media, 2006.

[15] Frank Nielsen and Sylvain Boltz.

The Burbea-Rao and Bhattacharyya centroids.

IEEE Transactions on Information Theory, 57(8):5455–5466, 2011.

29](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-29-320.jpg)

![References III

[16] Frank Nielsen and Richard Nock.

Generalizing Jensen and Bregman divergences with comparative convexity and the statistical

Bhattacharyya distances with comparable means.

CoRR, abs/1702.04877, 2017.

[17] Frank Nielsen and Richard Nock.

Generalizing skew Jensen divergences and Bregman divergences with comparative convexity.

IEEE Signal Processing Letters, 24(8):1123–1127, Aug 2017.

[18] Richard Nock, Frank Nielsen, and Shun-ichi Amari.

On conformal divergences and their population minimizers.

IEEE Trans. Information Theory, 62(1):527–538, 2016.

[19] Jun Zhang.

Divergence function, duality, and convex analysis.

Neural Computation, 16(1):159–195, 2004.

30](https://image.slidesharecdn.com/slide-comparativeconvexity-170802020142/85/Bregman-divergences-from-comparative-convexity-30-320.jpg)