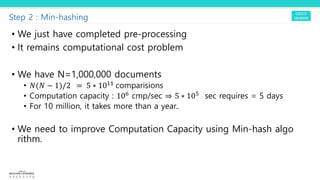

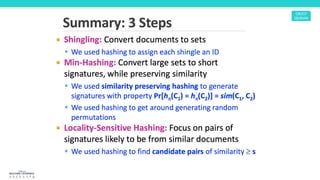

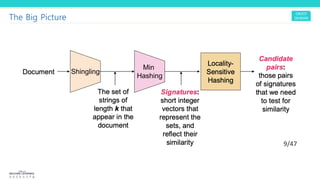

This document summarizes the key steps of the locality-sensitive hashing (LSH) algorithm for finding similar documents:

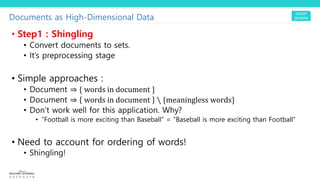

1. Documents are converted to sets of shingles (sequences of k consecutive tokens), so each document can be treated as a high-dimensional data point with one dimension per possible shingle.

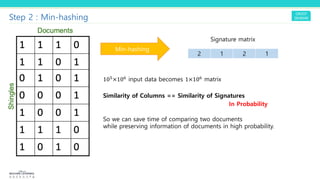

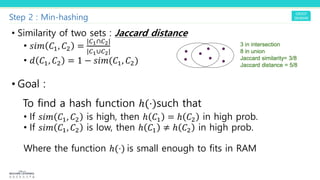

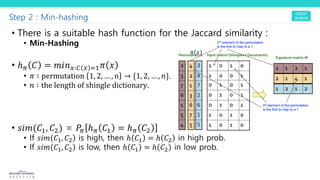

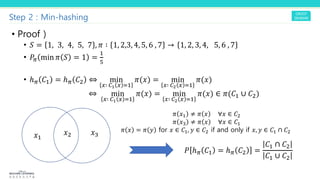

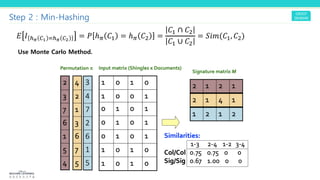

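2. MinHashing compresses each document's shingle set into a short signature, a vector of MinHash values. For a single MinHash function, the probability that two documents receive the same value equals the Jaccard similarity of their shingle sets, so similar documents agree on many signature positions. Stacking the signatures of all documents gives the signature matrix.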
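A minimal MinHash sketch in Python is given below. It assumes character-level shingles mapped to integer IDs with `zlib.crc32`, and it simulates random row permutations with hash functions of the form h(x) = (a·x + b) mod p, a common substitution for true permutations. The function names, the prime, and the number of hash functions are illustrative choices, not taken from the slides. The fraction of positions where two signatures agree should approximate the Jaccard similarity of the underlying shingle sets.

```python
import random
import zlib

# Mersenne prime 2^61 - 1 (assumed choice). It exceeds every CRC32 ID, so for a != 0
# the map x -> (a*x + b) % PRIME is a bijection on the IDs and never collides.
PRIME = (1 << 61) - 1


def shingle_ids(doc: str, k: int) -> set[int]:
    """Character k-shingles of `doc`, each mapped to a 32-bit integer ID via CRC32."""
    return {zlib.crc32(doc[i:i + k].encode("utf-8")) for i in range(len(doc) - k + 1)}


def make_hash_funcs(n: int, seed: int = 0) -> list[tuple[int, int]]:
    """Draw n (a, b) pairs for h(x) = (a*x + b) mod PRIME, standing in for row permutations."""
    rng = random.Random(seed)
    return [(rng.randrange(1, PRIME), rng.randrange(0, PRIME)) for _ in range(n)]


def minhash_signature(ids: set[int], hash_funcs: list[tuple[int, int]]) -> list[int]:
    """One entry per hash function: the minimum hashed value over the document's shingle IDs."""
    if not ids:
        raise ValueError("document has no shingles (is it shorter than k?)")
    return [min((a * x + b) % PRIME for x in ids) for a, b in hash_funcs]


def signature_similarity(sig1: list[int], sig2: list[int]) -> float:
    """Fraction of positions where two signatures agree; an estimate of Jaccard similarity."""
    return sum(x == y for x, y in zip(sig1, sig2)) / len(sig1)


def jaccard(s1: set, s2: set) -> float:
    """Exact Jaccard similarity, for comparison with the MinHash estimate."""
    return len(s1 & s2) / len(s1 | s2)


if __name__ == "__main__":
    funcs = make_hash_funcs(200, seed=42)
    d1 = shingle_ids("the quick brown fox jumps over the lazy dog", 5)
    d2 = shingle_ids("the quick brown fox jumped over the lazy dog", 5)
    sig1, sig2 = minhash_signature(d1, funcs), minhash_signature(d2, funcs)
    print("exact Jaccard:   ", round(jaccard(d1, d2), 3))
    print("MinHash estimate:", signature_similarity(sig1, sig2))
```

More hash functions give a tighter estimate at the cost of longer signatures; each signature here has 200 entries regardless of how many shingles the document contains.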

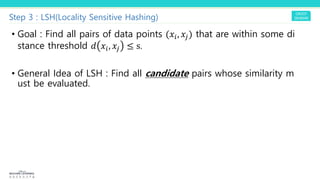

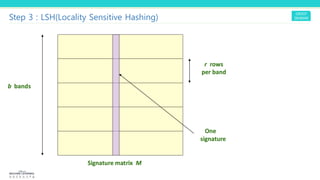

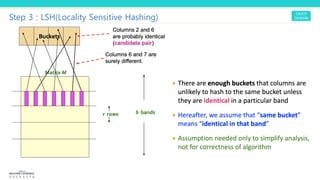

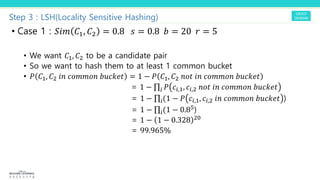

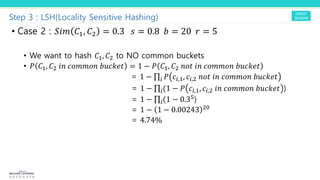

3. LSH uses the signature matrix to hash similar documents into the same buckets with high probability. Documents that share a bucket become candidate pairs for an exact similarity check, while pairs that never share a bucket are filtered out without being compared. This is much cheaper than comparing all pairs directly, since the number of pairs grows quadratically with the number of documents.
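The text does not say how the signatures are bucketed; a common approach is the banding technique, sketched below in Python under that assumption. Each signature of length b·r is split into b bands of r values, each band is hashed to a bucket, and two documents that share a bucket in at least one band become a candidate pair. The function names and toy signatures are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def lsh_candidate_pairs(signatures: dict[str, list[int]], bands: int, rows: int) -> set[tuple[str, str]]:
    """Return document pairs that share a bucket in at least one band.

    Each signature (length bands * rows) is cut into `bands` slices of `rows`
    values. A slice is used directly as a dict key (Python hashes the tuple),
    with a separate bucket table per band so equal slices from different
    bands never collide.
    """
    candidates: set[tuple[str, str]] = set()
    for band in range(bands):
        start = band * rows
        buckets = defaultdict(list)  # band slice -> ids of documents with that slice
        for doc_id, sig in signatures.items():
            if len(sig) != bands * rows:
                raise ValueError(f"signature of {doc_id!r} must have {bands * rows} entries")
            buckets[tuple(sig[start:start + rows])].append(doc_id)
        for docs in buckets.values():
            # Every pair of documents in the same bucket is a candidate pair.
            candidates.update(combinations(sorted(docs), 2))
    return candidates


def candidate_probability(s: float, bands: int, rows: int) -> float:
    """Probability that a pair whose signatures agree at rate s becomes a candidate: 1 - (1 - s^r)^b."""
    return 1 - (1 - s ** rows) ** bands


if __name__ == "__main__":
    sigs = {
        "A": [1, 2, 3, 4, 5, 6],
        "B": [1, 2, 3, 9, 9, 9],  # matches A in band 0
        "C": [7, 8, 9, 4, 5, 6],  # matches A in band 1
        "D": [0, 0, 0, 0, 0, 0],  # matches nobody in any band
    }
    print(sorted(lsh_candidate_pairs(sigs, bands=2, rows=3)))  # [('A', 'B'), ('A', 'C')]
    print(round(candidate_probability(0.8, bands=20, rows=5), 4))
```

With b = 20 and r = 5, a pair whose signatures agree in 80% of positions becomes a candidate with probability about 0.9996, while a pair at 30% does so with probability only about 0.047. Increasing r makes bucketing stricter (fewer false positives), and increasing b makes it more lenient (fewer false negatives).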

![Step 1: Shingling](https://image.slidesharecdn.com/lshlocalitysensitivehashing-200420143441/85/Locality-sensitive-hashing-9-320.jpg)

Step 1: Shingling

- A k-shingle of a document is a sequence of k consecutive tokens that appears in the document.
- Example: k = 2, document D = abcab
- Shingling: {ab, bc, ca, ab} → {ab, bc, ca} (the duplicate "ab" is dropped because the result is a set)
- Hash the shingles to integer IDs, e.g. {1, 5, 7}, and represent the document as a binary vector: [1, 0, 0, 0, 1, 0, 1]
- If you are worried about losing the order of tokens, pick k large enough.
- k = 5 is OK for short documents.
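The shingling example above can be reproduced with a few lines of Python. This sketch assumes each character is one token (as in D = abcab) and uses 0-based IDs, so the slide's 1-based IDs {1, 5, 7} become {0, 4, 6}. `shingle_id` is a hypothetical helper; CRC32 is used only because Python's built-in `hash()` on strings is salted per process and would give different IDs on each run.

```python
import zlib


def shingles(doc: str, k: int) -> set[str]:
    """Return the set of k-shingles of `doc`, treating each character as one token."""
    return {doc[i:i + k] for i in range(len(doc) - k + 1)}


def shingle_id(shingle: str, size: int) -> int:
    """Hypothetical helper: map a shingle to a stable ID in [0, size) via CRC32."""
    return zlib.crc32(shingle.encode("utf-8")) % size


def to_binary_vector(ids: set[int], size: int) -> list[int]:
    """0/1 vector of length `size` with a 1 at every (0-based) shingle ID."""
    return [1 if i in ids else 0 for i in range(size)]


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity |A & B| / |A | B| of two shingle sets."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


if __name__ == "__main__":
    s = shingles("abcab", 2)
    print(sorted(s))                        # ['ab', 'bc', 'ca']
    # The slide's 1-based IDs {1, 5, 7} correspond to {0, 4, 6} here.
    print(to_binary_vector({0, 4, 6}, 7))   # [1, 0, 0, 0, 1, 0, 1]
    print(jaccard(s, shingles("abcd", 2)))  # 0.5
```

In practice the ID space is much larger than 7, so documents are usually stored as sparse sets of IDs rather than dense binary vectors.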