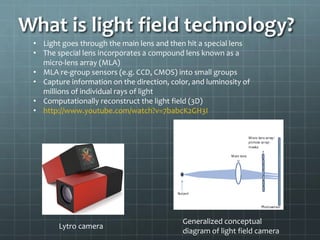

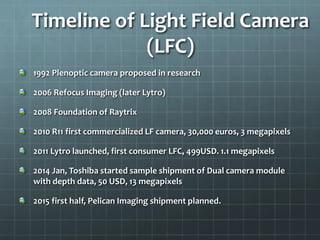

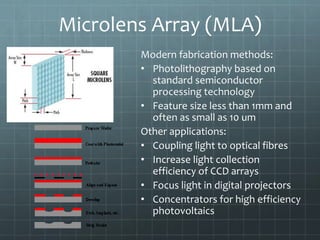

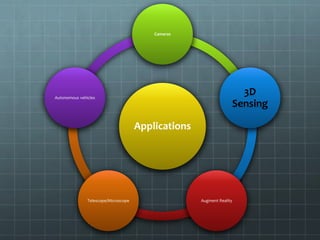

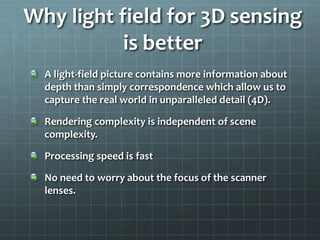

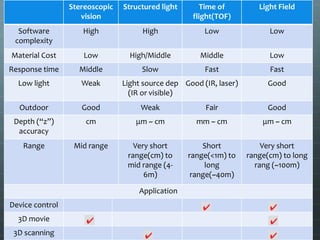

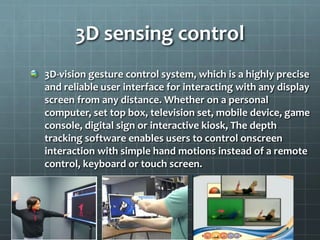

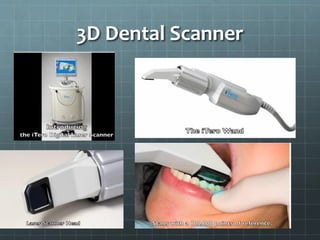

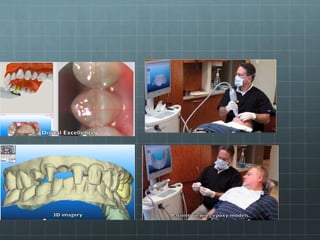

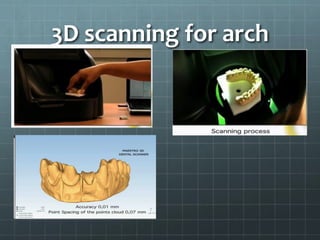

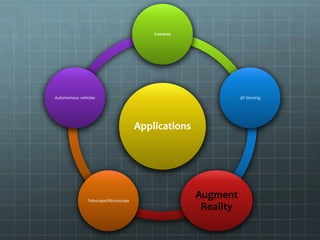

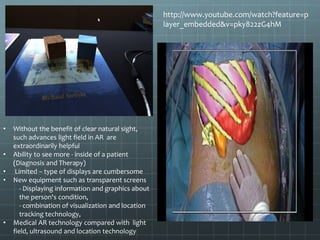

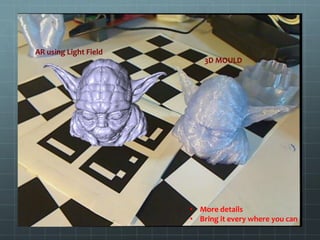

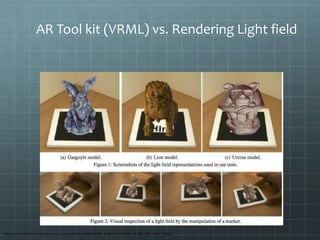

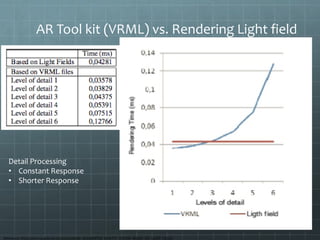

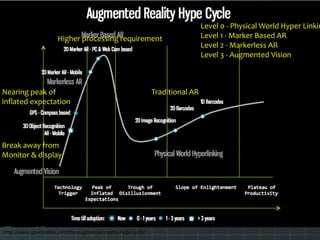

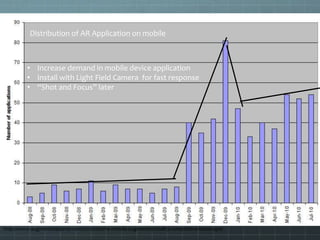

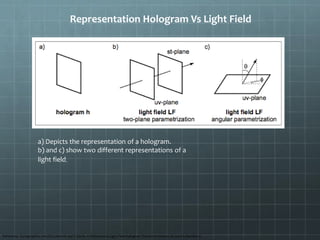

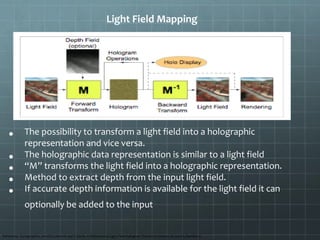

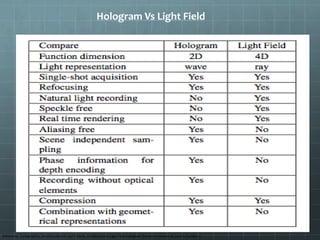

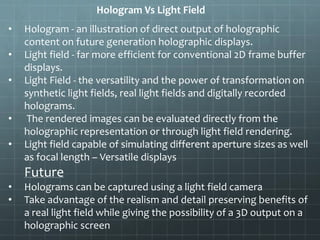

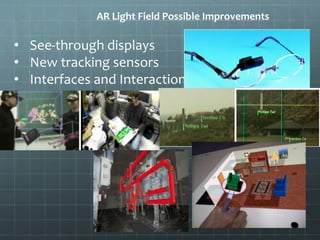

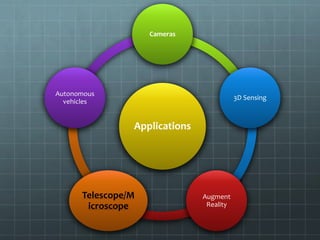

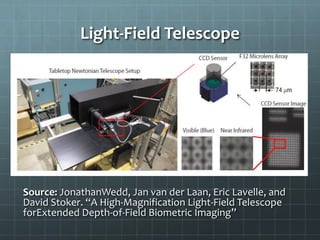

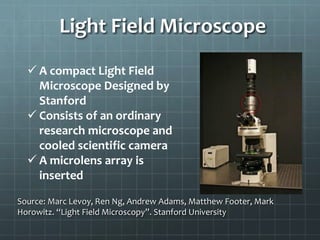

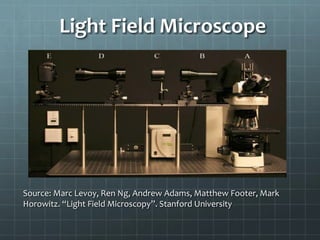

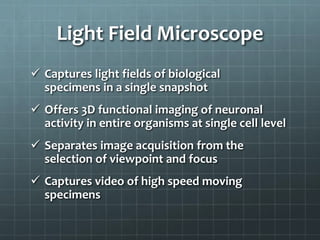

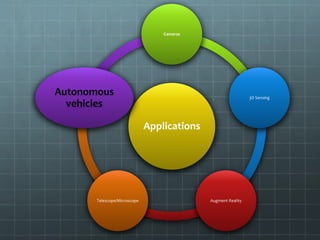

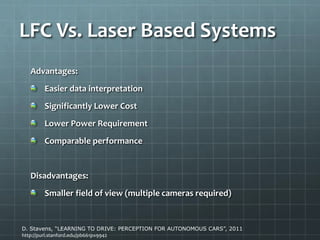

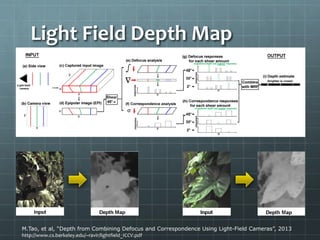

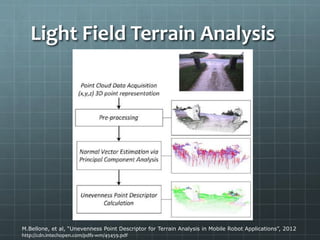

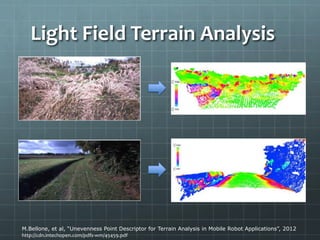

Light field technology captures and reconstructs 3D data by using a micro-lens array to record the direction, color, and luminosity of incoming light rays. Its applications include 3D sensing, augmented reality, and autonomous vehicles, where it offers advantages such as depth imaging and enhanced visualization. Future advances could further simplify and accelerate 3D applications, extending its use to more sectors.
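
To make the role of the micro-lens array more concrete, here is a minimal sketch of how directional information might be pulled out of a raw lenslet image. It assumes an idealized sensor in which every micro-lens covers exactly `n` x `n` pixels and is perfectly aligned to the pixel grid; real cameras need calibration and resampling first. The function name `sub_aperture_view` and its parameters are hypothetical and chosen only for illustration.

```python
def sub_aperture_view(raw, n, u, v):
    """Build one directional view from an idealized lenslet image.

    Assumptions (illustrative, not from the source):
      - raw is a 2D list of pixel values (rows of equal length)
      - each micro-lens covers an n x n block of pixels, grid-aligned
      - (u, v) is the pixel offset under each micro-lens, i.e. one ray direction
    """
    if not (0 <= u < n and 0 <= v < n):
        raise ValueError("u and v must lie in [0, n)")
    rows, cols = len(raw), len(raw[0])
    if rows % n or cols % n:
        raise ValueError("image size must be a multiple of n")
    # Take the same offset (u, v) under every micro-lens: the result is the
    # scene as seen from a single direction, one pixel per micro-lens.
    return [
        [raw[s * n + u][t * n + v] for t in range(cols // n)]
        for s in range(rows // n)
    ]


# Toy 4 x 4 sensor behind a 2 x 2 grid of micro-lenses (n = 2).
raw = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
top_left = sub_aperture_view(raw, 2, 0, 0)
bottom_right = sub_aperture_view(raw, 2, 1, 1)
```

Views taken at different offsets see the scene from slightly different directions, and the small shifts between them are what depth estimation typically builds on.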