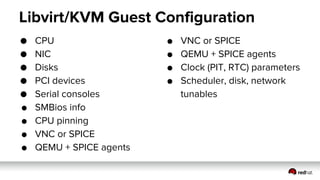

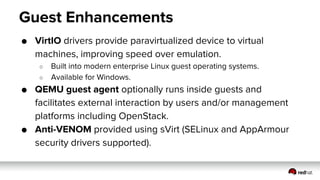

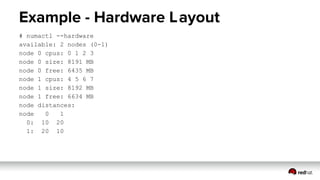

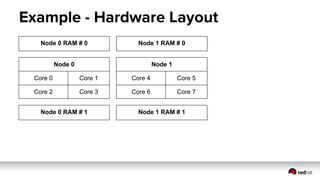

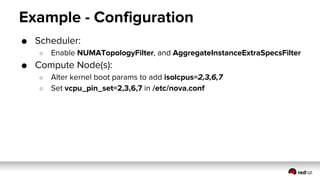

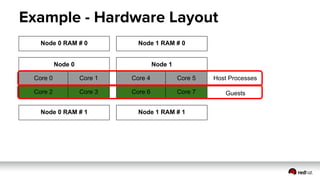

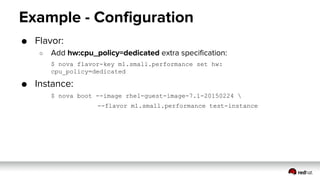

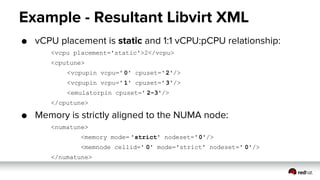

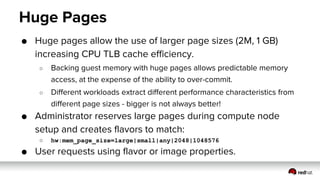

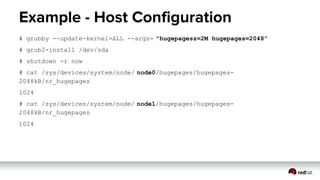

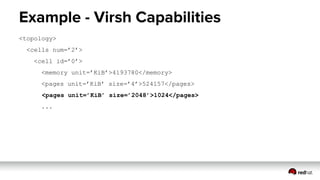

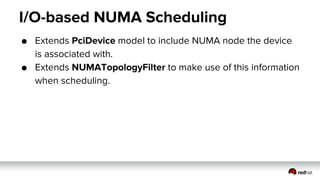

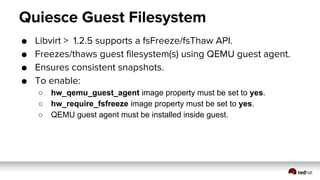

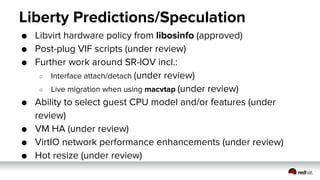

This document summarizes OpenStack Compute features related to the Libvirt/KVM driver, including updates in Kilo and predictions for Liberty. Key Kilo features discussed include CPU pinning for performance, huge page support, and I/O-based NUMA scheduling. Predictions for Liberty include improved hardware policy configuration, post-plug networking scripts, further SR-IOV support, and hot resize capability. The document provides examples of how these features can be configured and their impact on guest virtual machine configuration and performance.

![Libvirt/KVM

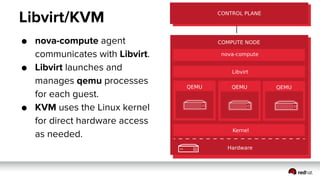

● Driver used for 85% of production OpenStack deployments. [1]

● Free and Open Source Software end-to-end stack:

○ Libvirt - Abstraction layer providing an API for hypervisor and virtual

machine lifecycle management. Supports many hypervisors and

architectures.

○ Qemu - Machine emulator able to use dynamic translation, or with

hypervisor assistance (e.g. KVM) virtualization.

○ KVM - Kernel-based-virtual machine is a kernel module providing full

virtualization for the Linux kernel .

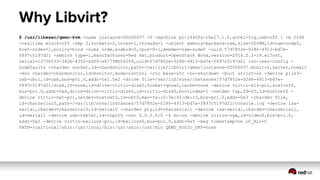

● Why Libvirt instead of speaking straight to QEMU?

[1] http://superuser.openstack.org/articles/openstack-users-share-how-their-deployments-stack-up](https://image.slidesharecdn.com/compute-libvirt-kvm-driver-march-20152-150518210901-lva1-app6892/85/Libvirt-KVM-Driver-Update-Kilo-8-320.jpg)
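One way to picture the abstraction layer described on this slide: libvirt takes a declarative XML description of a guest (a "domain") instead of a hand-assembled QEMU command line, and the hypervisor is selected by an attribute on that description. The sketch below builds such a fragment with Python's standard library only. The helper name `build_domain_xml`, the guest name, and the sizes are hypothetical and chosen for illustration. This is not how the Nova driver generates its configuration, and the fragment omits disks, network interfaces, and other devices, so it is not a bootable definition.

```python
import xml.etree.ElementTree as ET


def build_domain_xml(name, memory_kib, vcpus, domain_type="kvm"):
    """Return a minimal libvirt-style domain XML string (illustrative only)."""
    # The "type" attribute selects the hypervisor: "kvm" for hardware-assisted
    # virtualization, "qemu" for pure emulation (dynamic translation).
    domain = ET.Element("domain", {"type": domain_type})
    ET.SubElement(domain, "name").text = name
    ET.SubElement(domain, "memory", {"unit": "KiB"}).text = str(memory_kib)
    ET.SubElement(domain, "vcpu").text = str(vcpus)
    # "hvm" requests a fully virtualized guest.
    os_elem = ET.SubElement(domain, "os")
    ET.SubElement(os_elem, "type", {"arch": "x86_64"}).text = "hvm"
    return ET.tostring(domain, encoding="unicode")


# Hypothetical guest: 2 GiB of RAM, 2 vCPUs.
kvm_xml = build_domain_xml("demo-guest", 2 * 1024 * 1024, 2)
# The same description retargeted at plain QEMU emulation.
qemu_xml = build_domain_xml("demo-guest", 2 * 1024 * 1024, 2, domain_type="qemu")

print(kvm_xml)
print(qemu_xml)
```

Only the `type` attribute differs between the two descriptions, while the rest of the guest definition stays the same. Handing the XML to libvirt is deliberately left out so the sketch runs without a hypervisor or the libvirt bindings installed.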