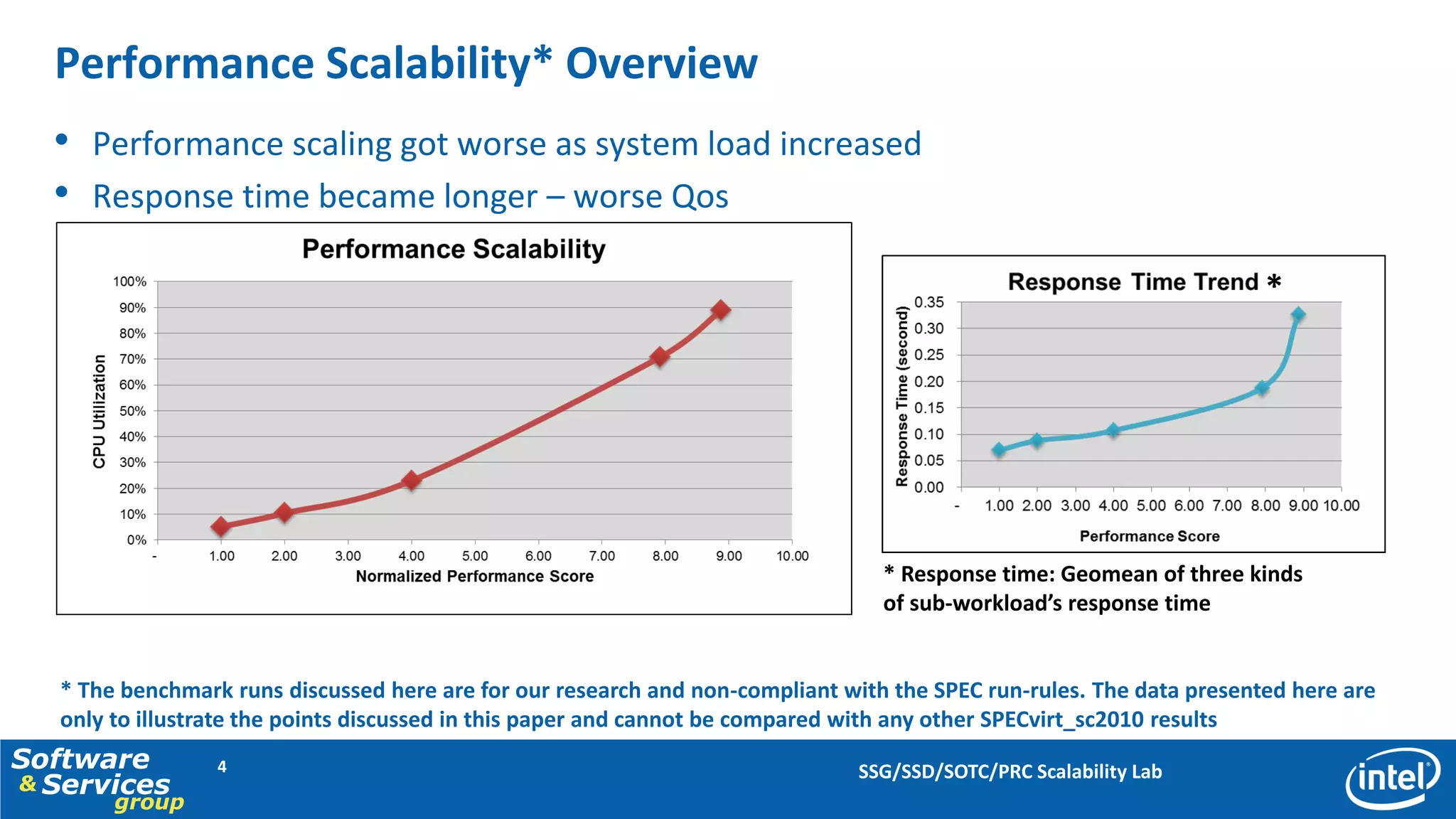

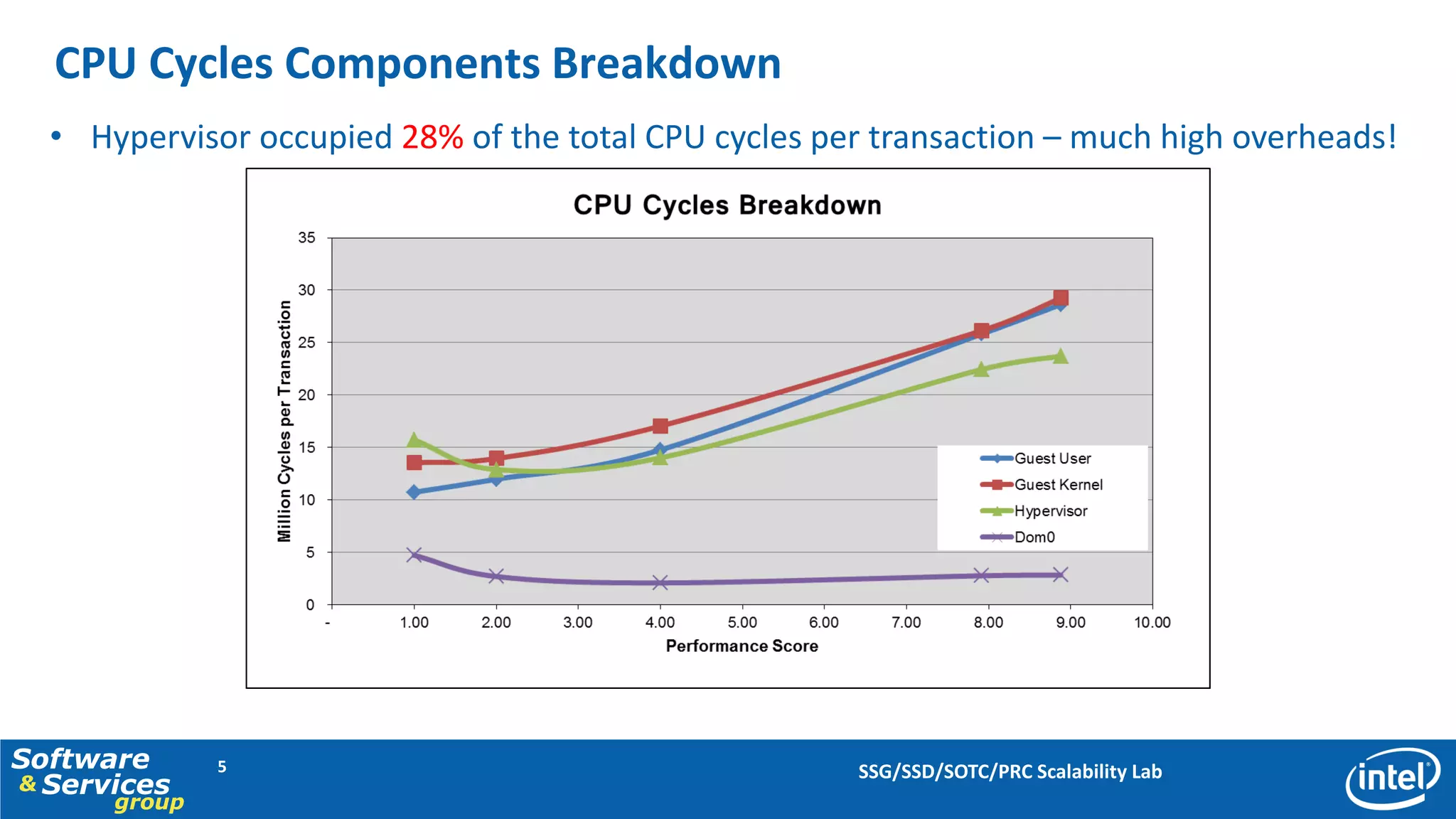

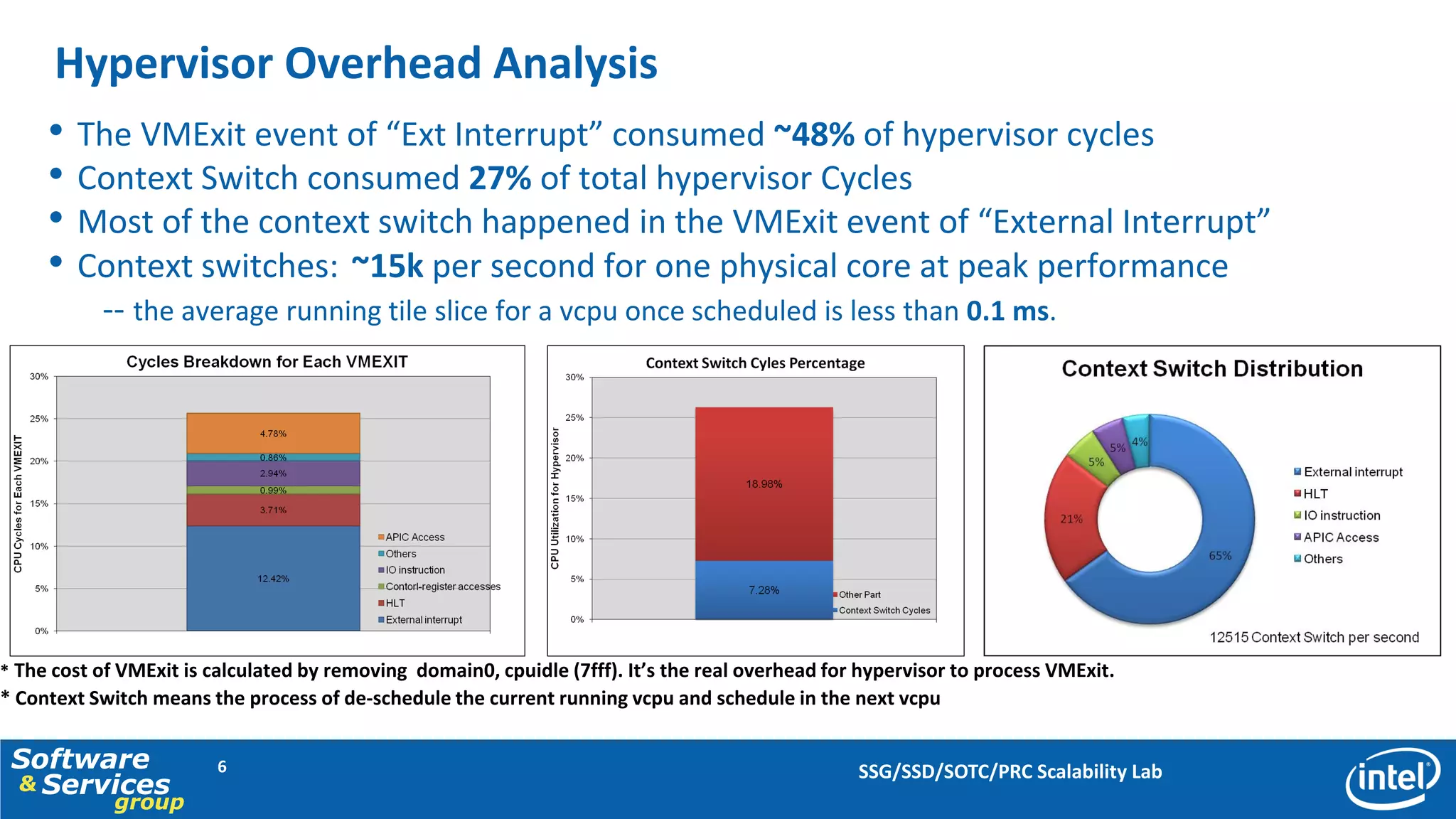

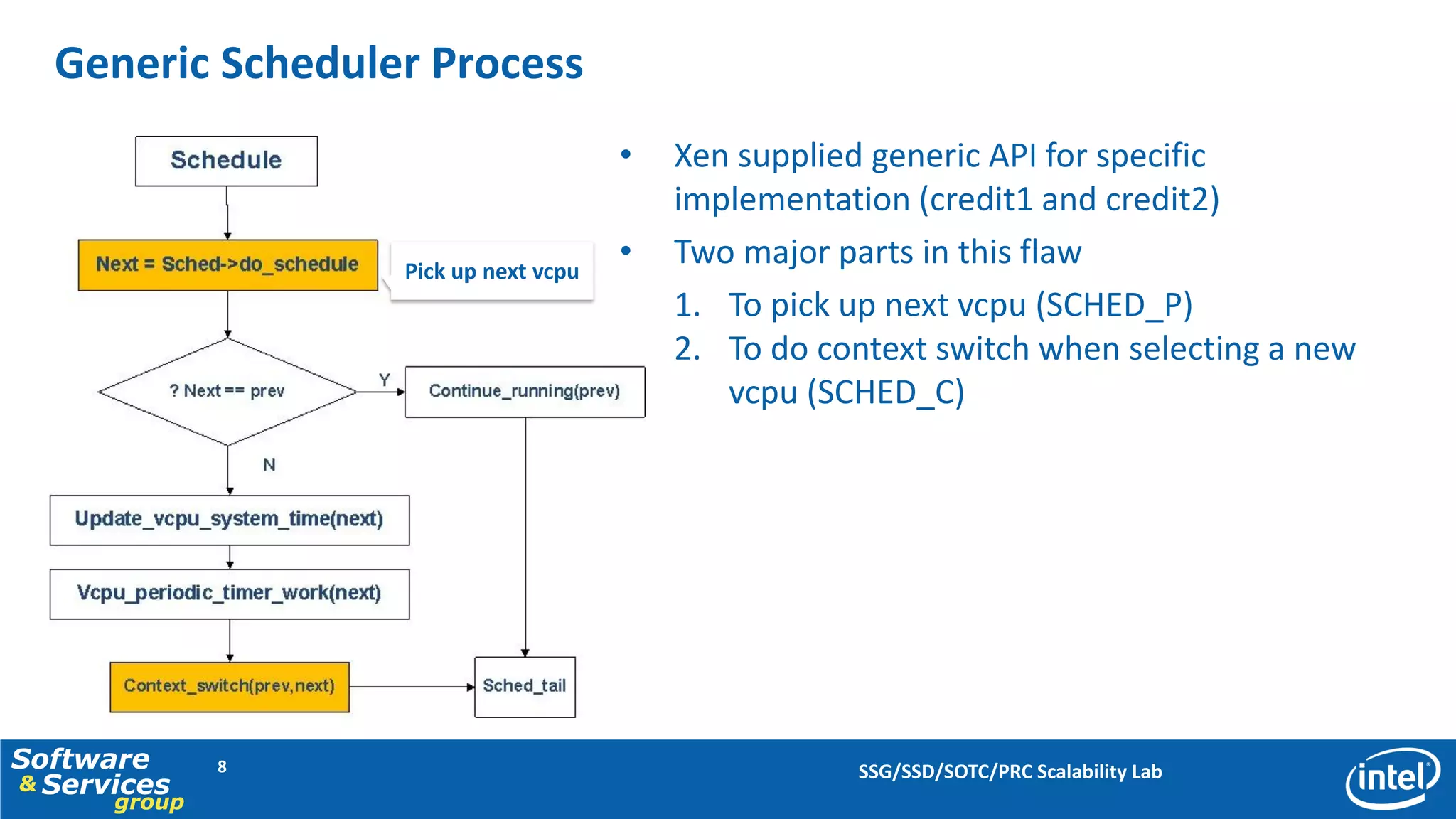

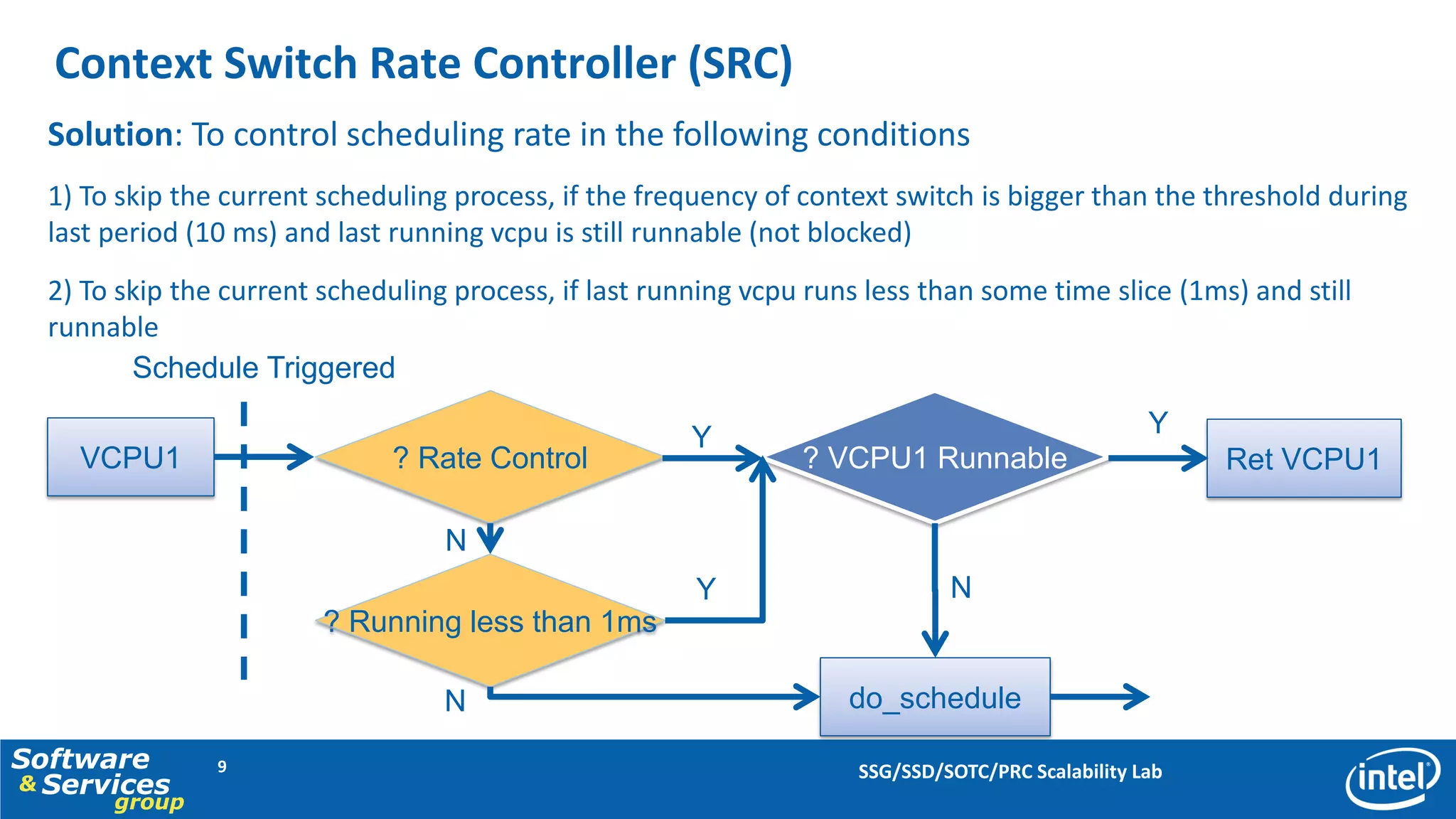

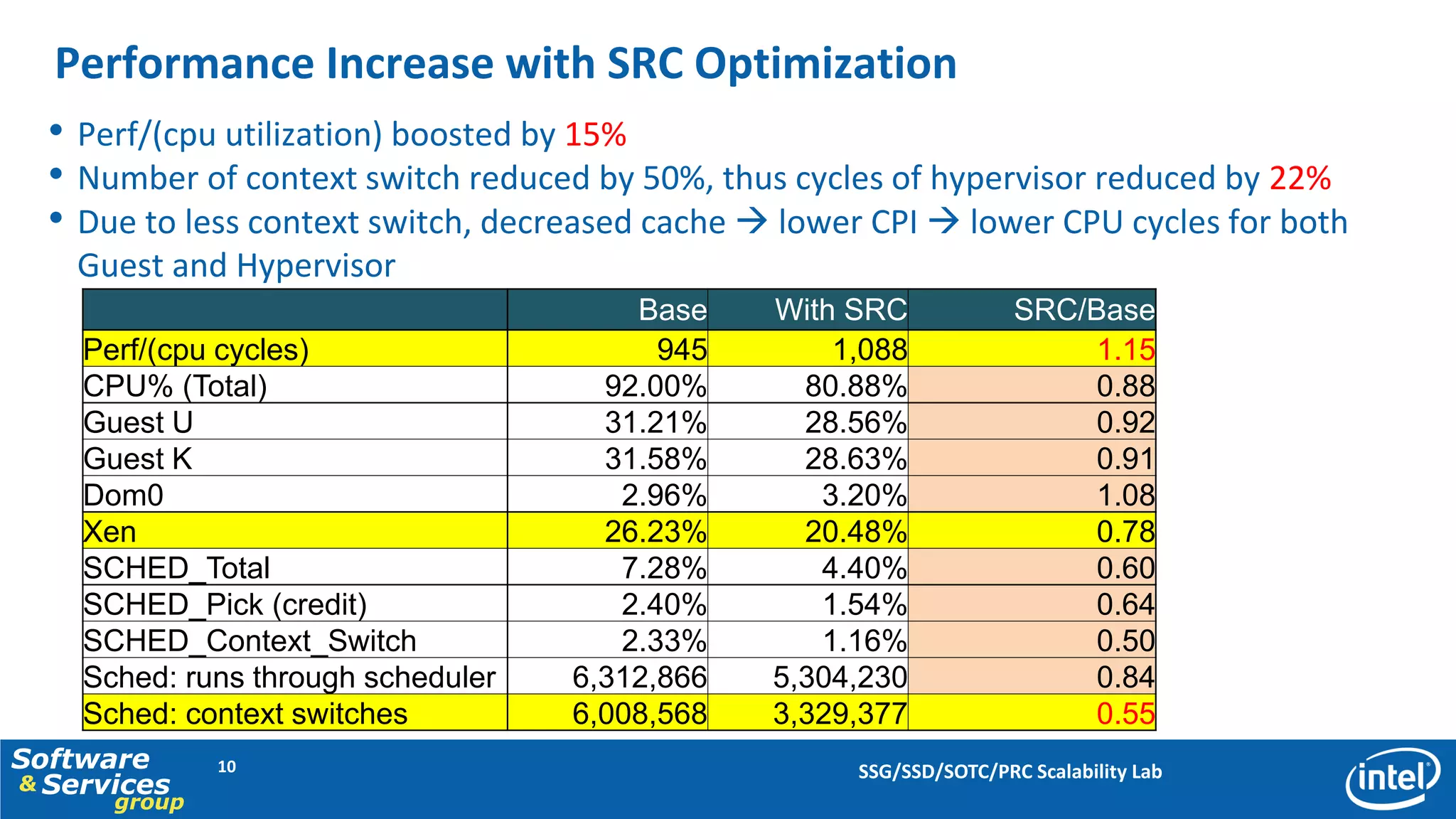

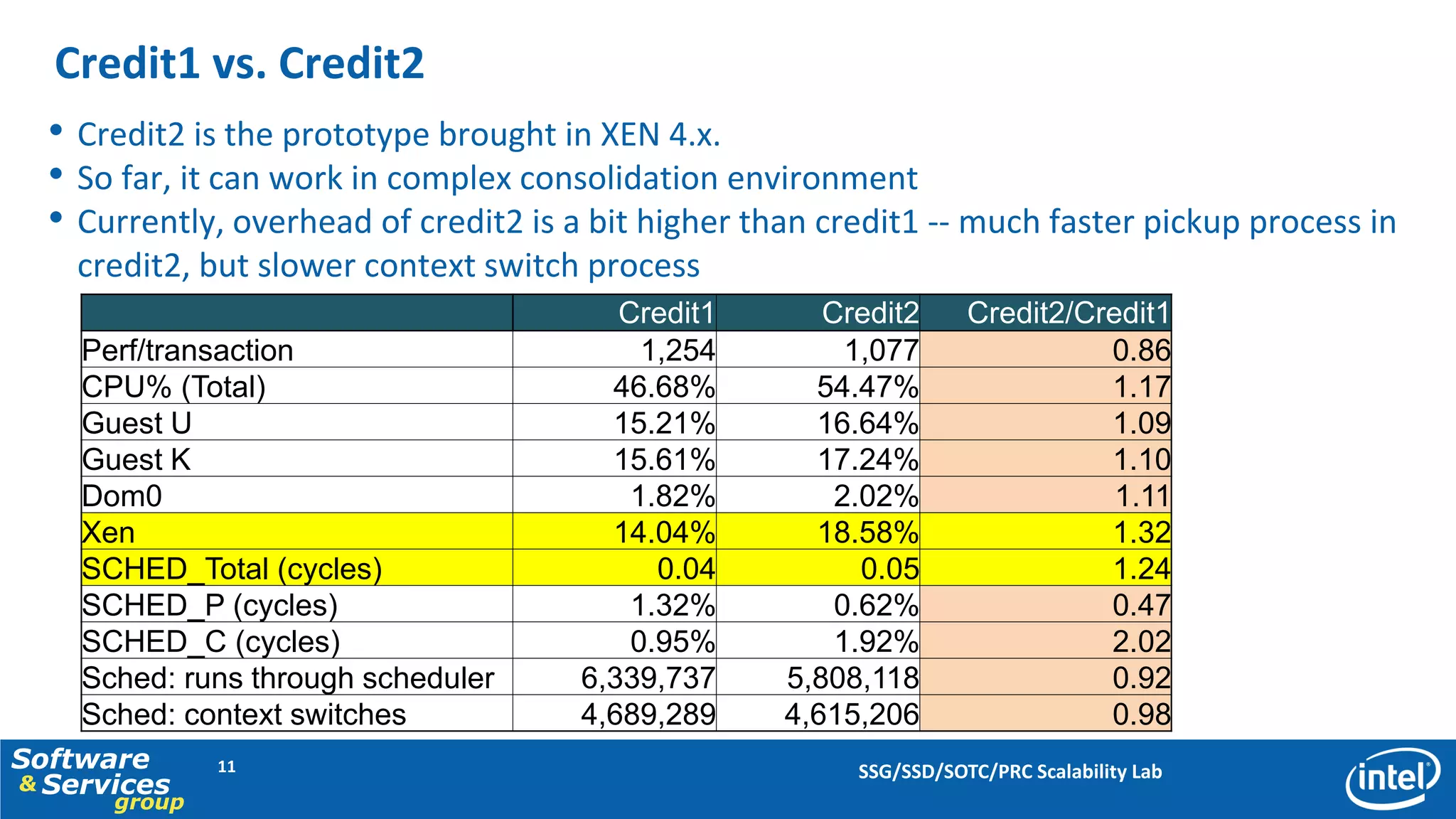

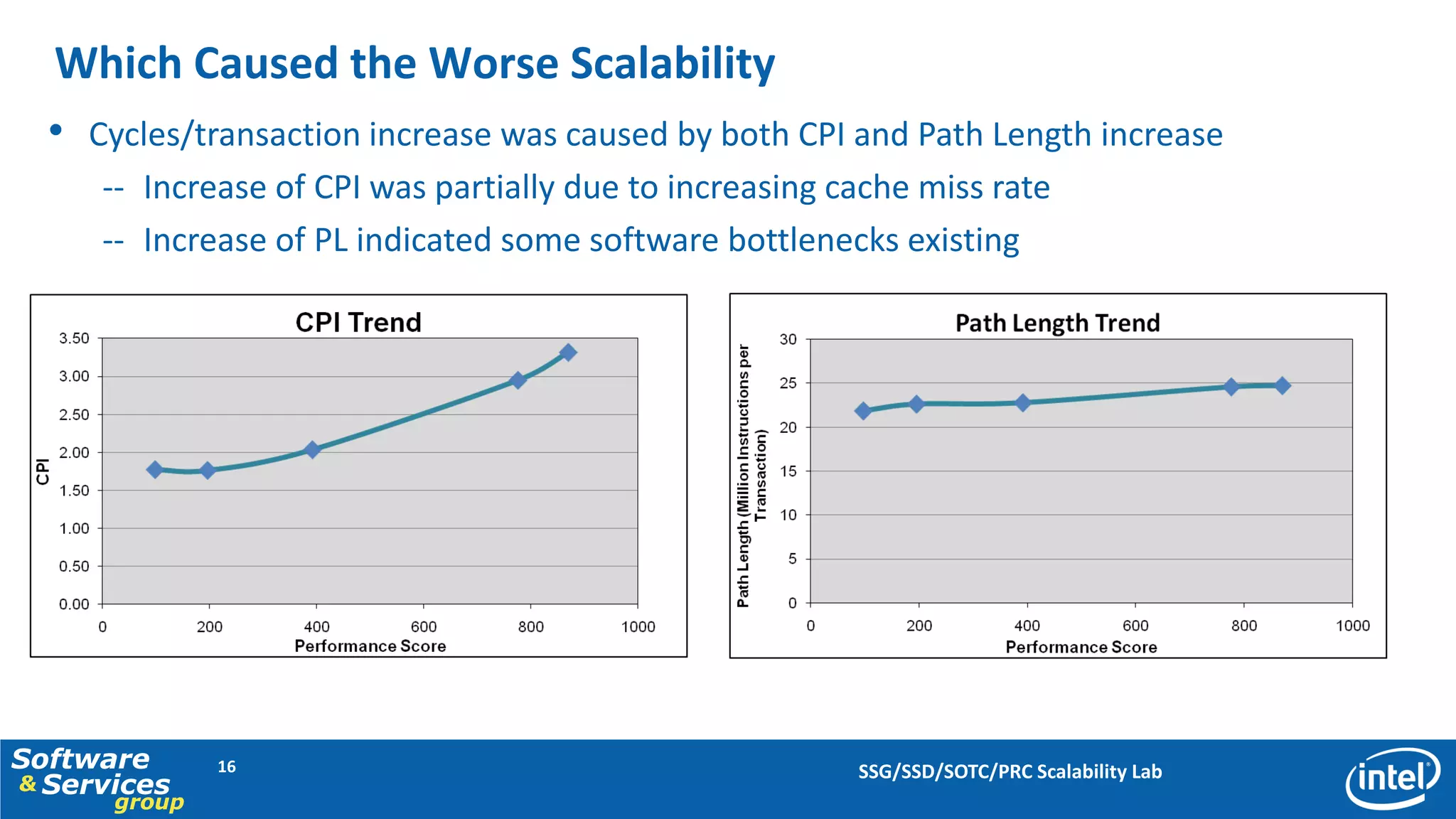

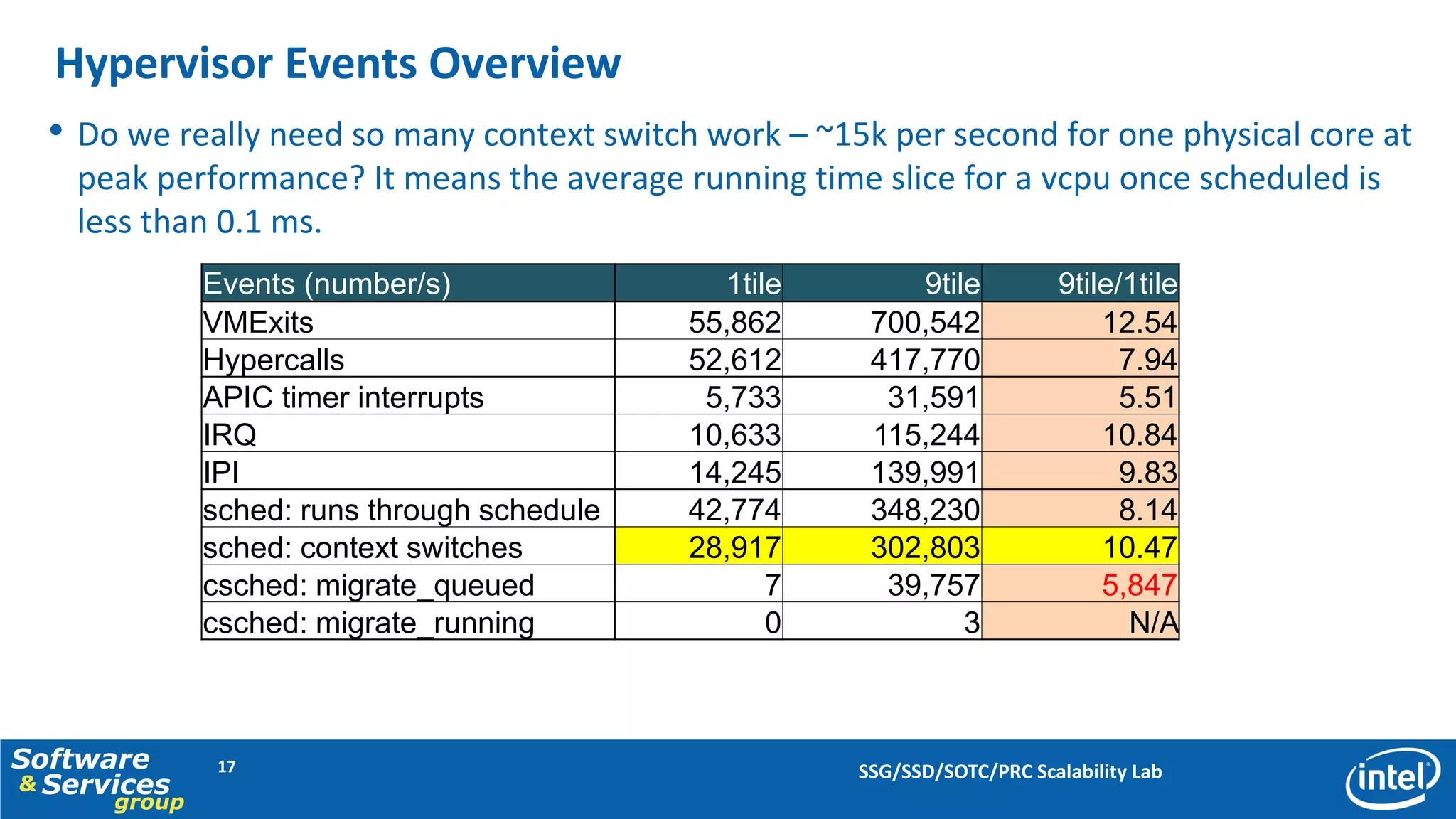

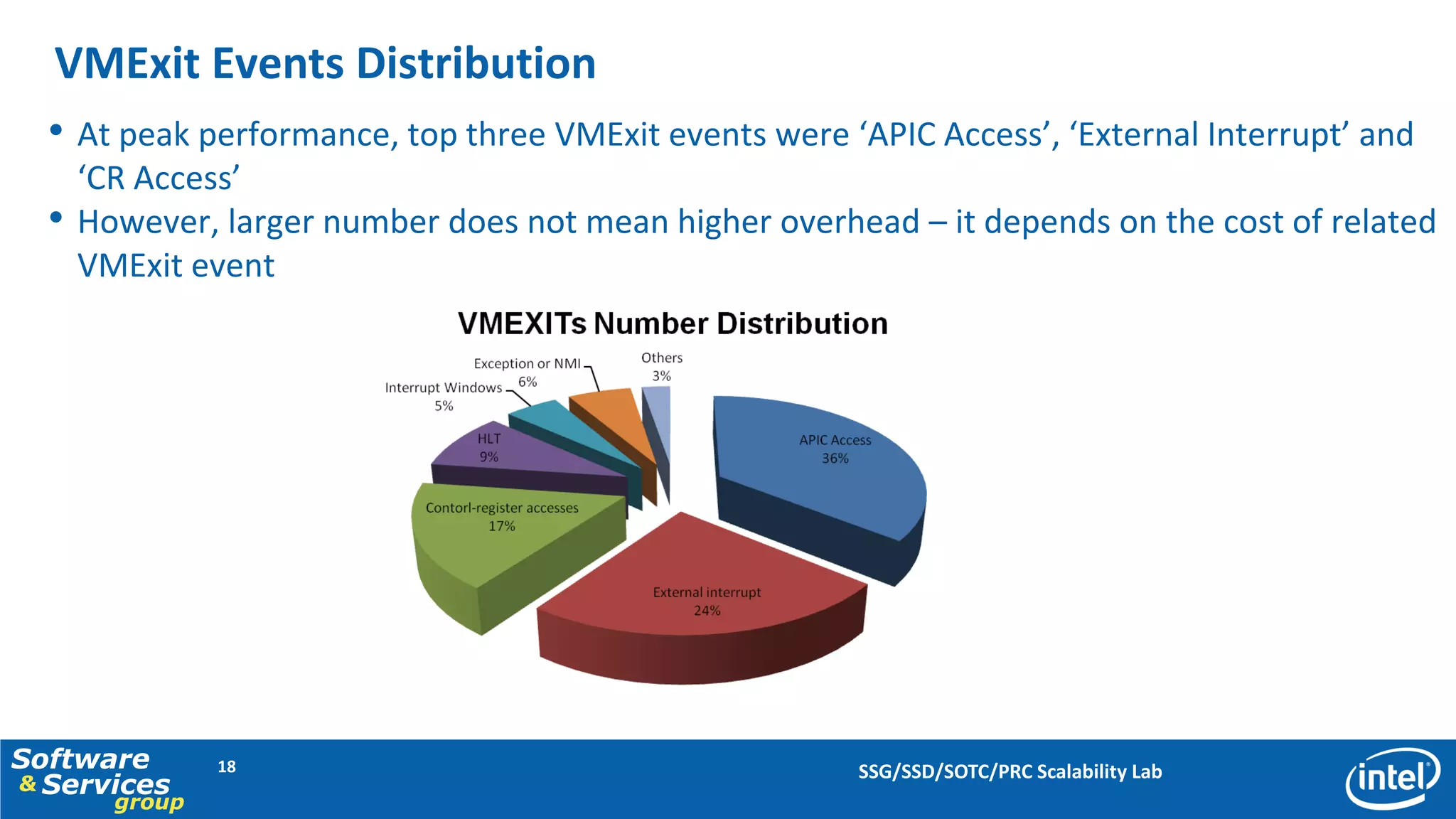

This document discusses the challenges of server consolidation on multi-core systems. It finds that hypervisor overhead grows significantly under high system load, and that frequent context switching accounts for a large share of the hypervisor's CPU cycles. Optimizing the credit scheduler to switch contexts less often reduces hypervisor overhead by 22% and improves performance per unit of CPU utilization by 15%.
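To illustrate why switching less often lowers overhead, the following is a minimal Python sketch of a toy credit scheduler running on one physical CPU. It is not the hypervisor's actual credit scheduler. The `VCpu` class, the `count_switches` function, the 1 ms tick, the 30 ms accounting period and the weights are all hypothetical simplifications. The summary also does not say which scheduler parameter was changed, so this sketch assumes the knob is the scheduling time slice. Each accounting period, vCPUs earn credits in proportion to their weight and pay one credit per millisecond of CPU time. At each scheduling point the running vCPU is requeued at the tail and a vCPU that still has credit is preferred.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(eq=False)  # compare vCPUs by identity, not by field values
class VCpu:
    name: str
    weight: int
    credits: int = 0


def count_switches(vcpus, total_ms, slice_ms, accounting_ms=30):
    """Toy credit scheduler for ONE physical CPU whose vCPUs are always busy.

    Hypothetical model for illustration only; returns the number of
    context switches that occur during total_ms milliseconds.
    """
    for v in vcpus:
        v.credits = 0
    total_weight = sum(v.weight for v in vcpus)
    queue = deque(vcpus)  # run queue of vCPUs waiting for the CPU
    current = None
    switches = 0
    for t in range(total_ms):  # simulate in 1 ms ticks
        if t % accounting_ms == 0:
            # Accounting: each vCPU earns credit in proportion to its weight
            for v in vcpus:
                v.credits += accounting_ms * v.weight // total_weight
        if t % slice_ms == 0:
            # Scheduling point: requeue the running vCPU at the tail, then
            # prefer vCPUs with credit left (UNDER) over those in debt (OVER)
            if current is not None:
                queue.append(current)
            under = [v for v in queue if v.credits > 0]
            nxt = under[0] if under else queue[0]
            queue.remove(nxt)
            if current is not None and nxt is not current:
                switches += 1
            current = nxt
        current.credits -= 1  # the running vCPU pays 1 credit per ms
    return switches


if __name__ == "__main__":
    for slice_ms in (10, 30):
        vms = [VCpu("vm1", 256), VCpu("vm2", 256)]
        print(slice_ms, count_switches(vms, 300, slice_ms))
```

With two equally weighted, always-busy vCPUs over 300 ms, this model produces 29 context switches with a 10 ms slice and 9 with a 30 ms slice. Longer slices trade switching overhead for scheduling latency, so a suitable value depends on the workload; the sketch does not attempt to reproduce the 22% and 15% figures reported above.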