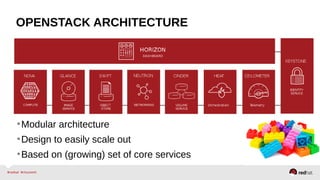

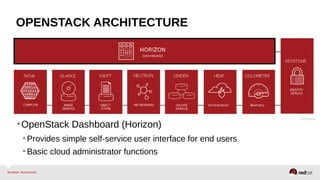

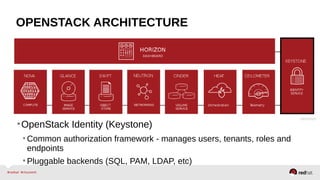

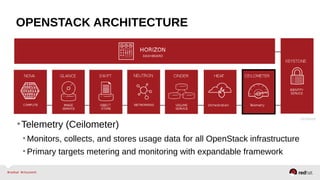

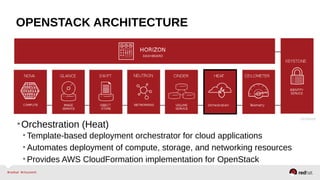

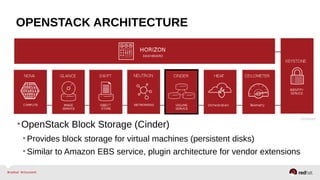

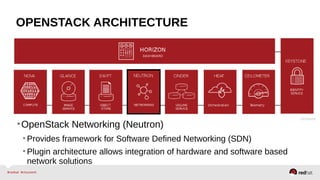

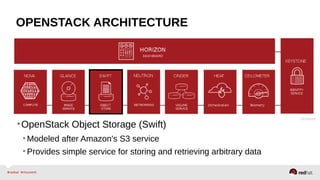

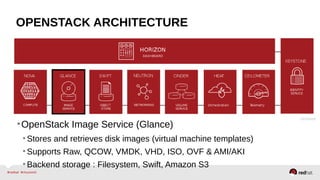

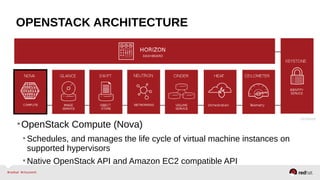

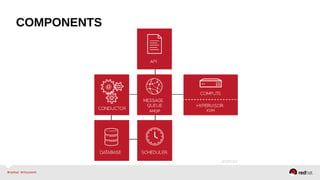

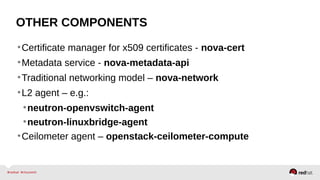

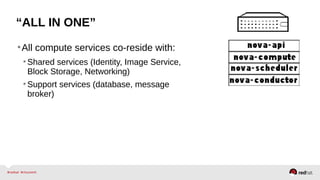

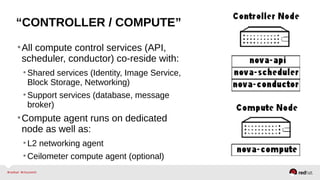

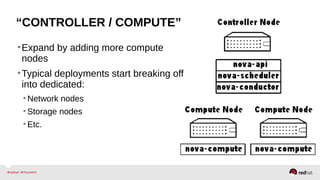

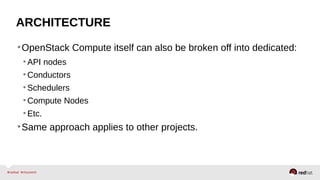

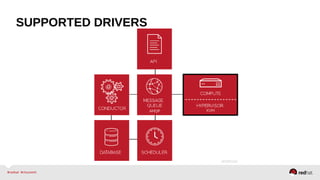

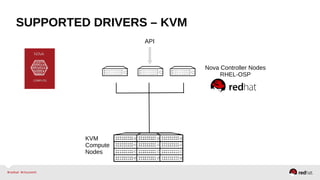

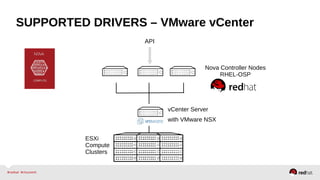

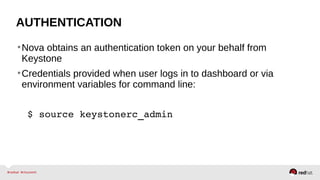

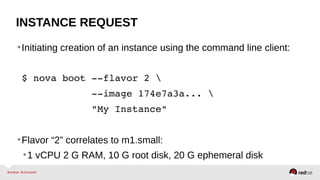

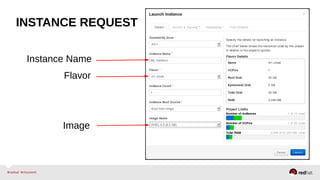

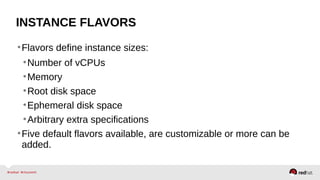

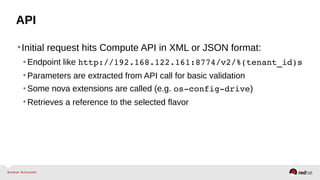

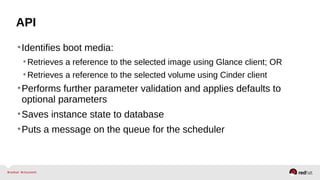

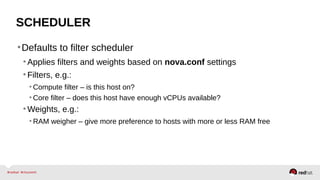

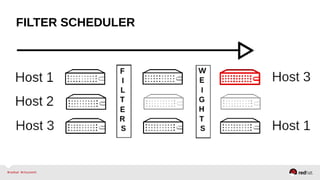

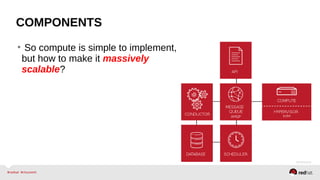

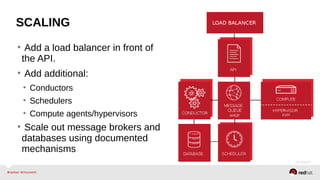

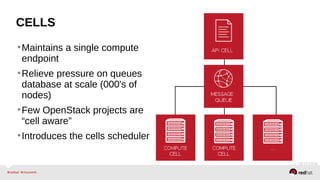

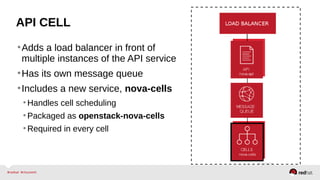

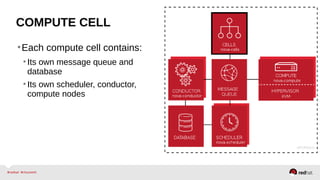

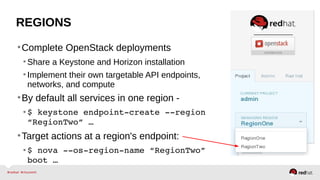

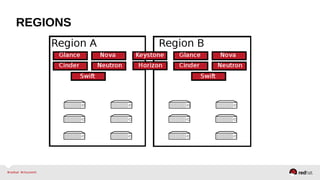

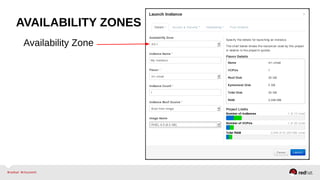

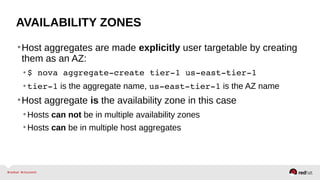

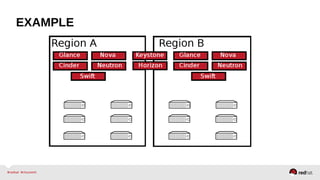

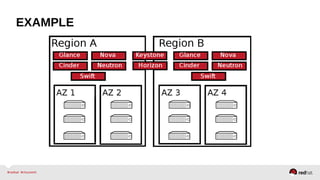

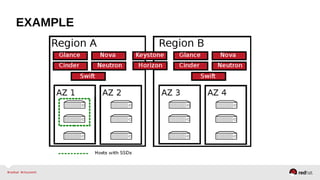

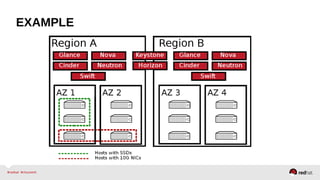

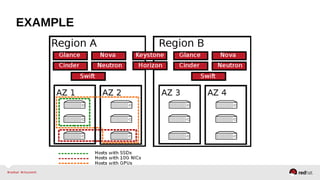

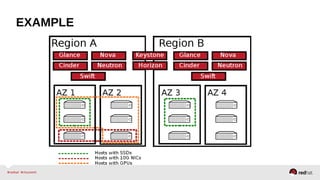

The document gives an overview of OpenStack architecture and components, focusing on the compute services managed by Nova. It covers the instance lifecycle, scaling options, and the segregation of compute resources, with emphasis on best practices and new features. It also outlines integration with Red Hat Enterprise Linux and support for various drivers, serving as a technical guide to managing cloud infrastructure.