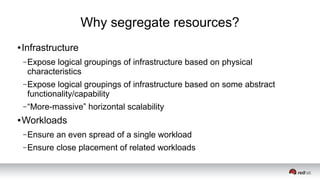

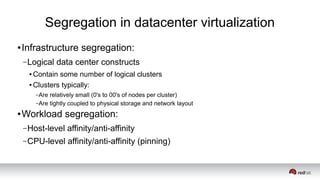

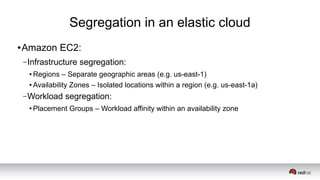

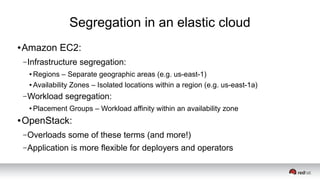

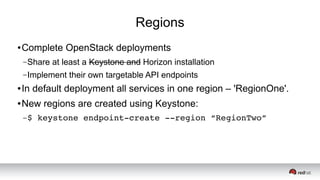

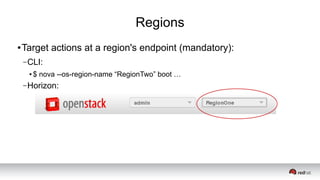

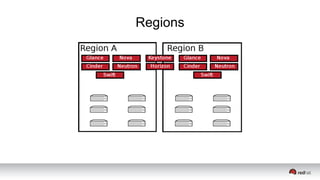

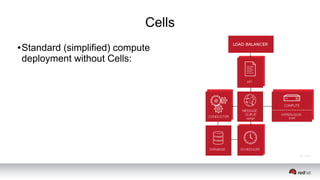

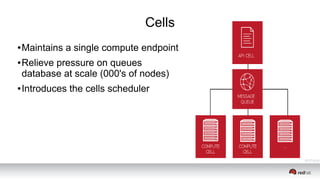

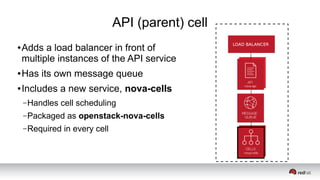

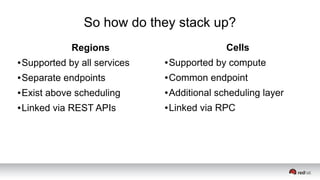

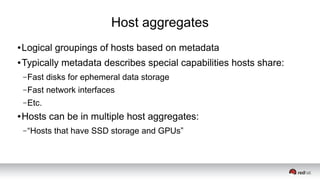

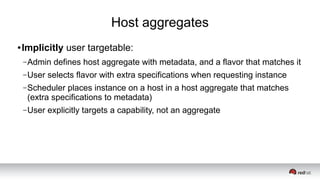

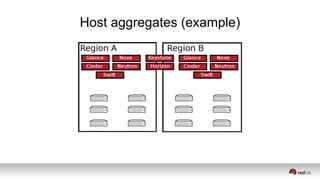

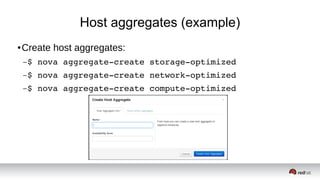

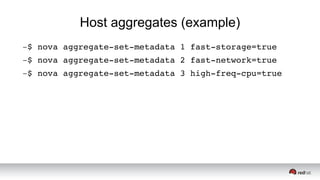

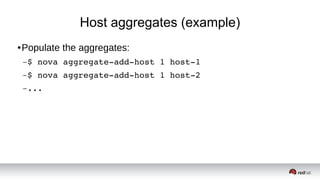

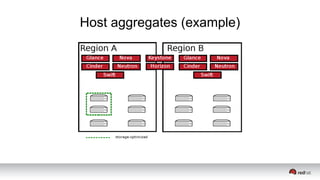

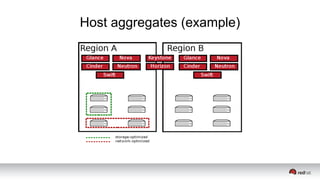

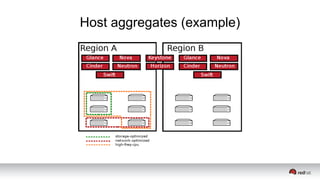

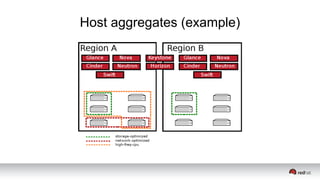

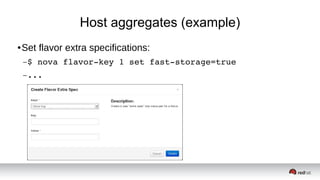

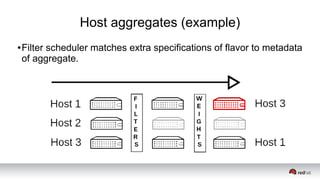

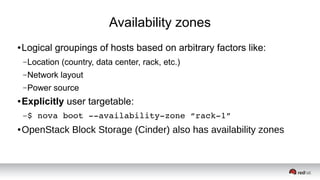

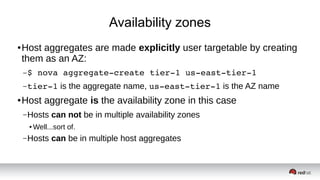

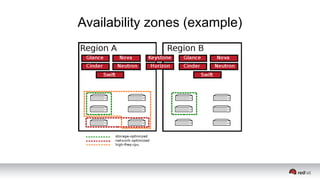

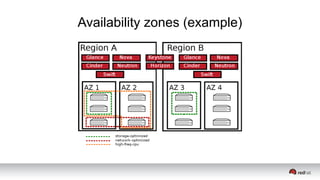

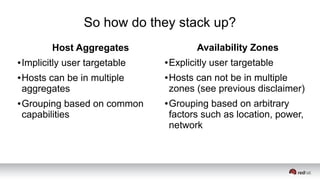

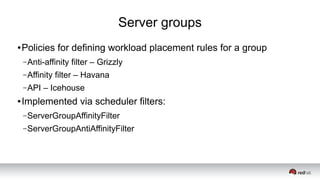

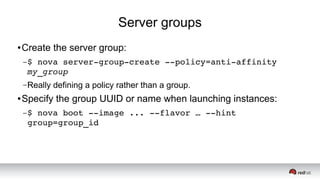

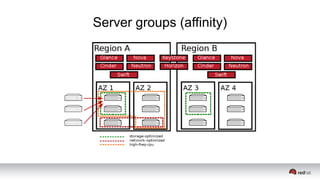

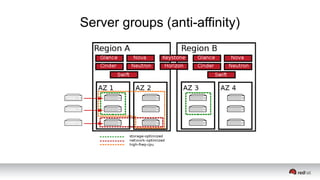

This document discusses resource segregation techniques in OpenStack clouds. It describes how infrastructure resources such as compute hosts can be logically grouped using regions, cells, host aggregates, and availability zones. It also covers workload segregation through server groups, which apply affinity or anti-affinity policies to instance placement. The goals of segregation include isolating workloads, ensuring high availability, and letting the infrastructure scale horizontally.
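The following is a simplified, self-contained model of how these constructs constrain placement. It is not Nova's scheduler or the openstacksdk API. It assumes that an availability zone is exposed as `availability_zone` metadata on a host aggregate, which is how Nova exposes AZs, and that hosts outside any AZ aggregate fall into an assumed default zone named `nova`. It also treats server group policies as hard `affinity` / `anti-affinity` rules. The class and function names (`Aggregate`, `ServerGroup`, `schedule`) and the host names are hypothetical. Regions and cells are not modeled, because they partition the deployment above the level at which a single scheduling decision is made.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

# Hypothetical, simplified model of OpenStack placement constraints.


@dataclass
class Aggregate:
    """A host aggregate: a named set of hosts plus key/value metadata."""
    name: str
    hosts: Set[str]
    metadata: Dict[str, str] = field(default_factory=dict)


@dataclass
class ServerGroup:
    """A server group with a hard placement policy."""
    name: str
    policy: str  # "affinity" or "anti-affinity" (hard policies only)
    members: Dict[str, str] = field(default_factory=dict)  # instance -> host


def host_az(host: str, aggregates: List[Aggregate], default_az: str = "nova") -> str:
    """Return the AZ of a host, taken from aggregate metadata (assumed default otherwise)."""
    for agg in aggregates:
        if host in agg.hosts and "availability_zone" in agg.metadata:
            return agg.metadata["availability_zone"]
    return default_az


def host_metadata(host: str, aggregates: List[Aggregate]) -> Dict[str, str]:
    """Merge the metadata of every aggregate the host belongs to."""
    meta: Dict[str, str] = {}
    for agg in aggregates:
        if host in agg.hosts:
            meta.update(agg.metadata)
    return meta


def candidate_hosts(hosts: List[str], aggregates: List[Aggregate],
                    az: Optional[str] = None,
                    required: Optional[Dict[str, str]] = None,
                    group: Optional[ServerGroup] = None) -> List[str]:
    """Filter hosts by requested AZ, required aggregate metadata, and group policy."""
    required = required or {}
    used = set(group.members.values()) if group else set()
    result = []
    for host in hosts:
        # Infrastructure segregation: stay inside the requested availability zone.
        if az is not None and host_az(host, aggregates) != az:
            continue
        # Infrastructure segregation: the host's aggregates must carry the required metadata.
        meta = host_metadata(host, aggregates)
        if any(meta.get(k) != v for k, v in required.items()):
            continue
        # Workload segregation: apply the server group policy once the group has members.
        if group is not None and used:
            if group.policy == "affinity" and host not in used:
                continue
            if group.policy == "anti-affinity" and host in used:
                continue
        result.append(host)
    return result


def schedule(instance: str, hosts: List[str], aggregates: List[Aggregate],
             az: Optional[str] = None,
             required: Optional[Dict[str, str]] = None,
             group: Optional[ServerGroup] = None) -> str:
    """Place an instance on the first valid host and record it in its server group."""
    candidates = candidate_hosts(hosts, aggregates, az, required, group)
    if not candidates:
        raise RuntimeError(f"No valid host found for {instance}")
    host = candidates[0]
    if group is not None:
        group.members[instance] = host
    return host


if __name__ == "__main__":
    hosts = ["cmp1", "cmp2", "cmp3", "cmp4"]
    aggregates = [
        Aggregate("az1-agg", {"cmp1", "cmp2"}, {"availability_zone": "az1"}),
        Aggregate("az2-agg", {"cmp3", "cmp4"}, {"availability_zone": "az2"}),
        Aggregate("ssd-agg", {"cmp2", "cmp4"}, {"ssd": "true"}),
    ]
    web = ServerGroup("web", "anti-affinity")
    for name in ("web-1", "web-2"):
        print(name, "->", schedule(name, hosts, aggregates, az="az1", group=web))
```

In this toy setup, two anti-affinity members placed in `az1` land on `cmp1` and `cmp2`. A third member requested in `az1` fails, because that zone has no unused host left. This illustrates the trade-off between high availability and capacity that anti-affinity introduces. An affinity group that also requires the `ssd` aggregate metadata would pin all of its members to `cmp2`, the first SSD host in list order.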