This document describes the five steps of principal component analysis (PCA):

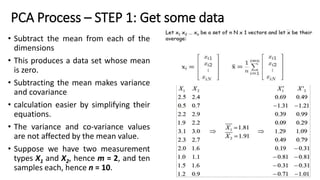

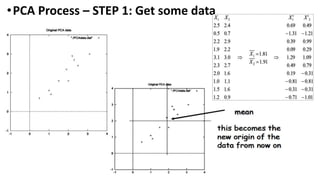

1) Subtract the mean of each dimension from the data so that every dimension is centered on zero.
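
A minimal sketch of the centering step, assuming NumPy and a small made-up dataset `X` with one sample per row and one dimension per column (neither the data nor the layout comes from the original document):

```python
import numpy as np

# Hypothetical toy data: 5 samples (rows) x 2 dimensions (columns).
X = np.array([
    [2.0, 1.0],
    [4.0, 3.0],
    [6.0, 8.0],
    [8.0, 7.0],
    [10.0, 11.0],
])

# Mean of each dimension (column), shape (2,).
mean = X.mean(axis=0)

# Broadcasting subtracts the per-dimension mean from every row.
X_centered = X - mean

print(mean)                     # [6. 6.]
print(X_centered.mean(axis=0))  # [0. 0.]
```

The mean is kept in a variable because it is needed again when the data is reconstructed.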

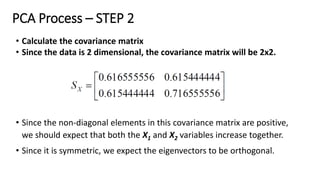

2) Calculate the covariance matrix of the centered data.
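
As an illustration, the covariance matrix can be computed directly from the centered data. This sketch assumes the sample covariance (division by n - 1) and the same made-up row-per-sample data; the original does not state which normalization it uses.

```python
import numpy as np

# Hypothetical toy data: 5 samples (rows) x 2 dimensions (columns).
X = np.array([
    [2.0, 1.0],
    [4.0, 3.0],
    [6.0, 8.0],
    [8.0, 7.0],
    [10.0, 11.0],
])
X_centered = X - X.mean(axis=0)

# Sample covariance: (X_c^T X_c) / (n - 1), giving a d x d symmetric matrix.
n_samples = X_centered.shape[0]
cov = X_centered.T @ X_centered / (n_samples - 1)

# np.cov gives the same result when told that columns are the variables.
cov_check = np.cov(X, rowvar=False)

print(cov)
print(np.allclose(cov, cov_check))  # True
```

The diagonal entries are the variances of each dimension, and the off-diagonal entries are the covariances between pairs of dimensions.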

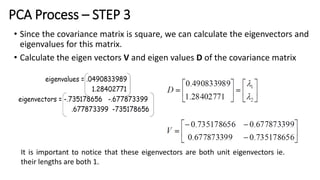

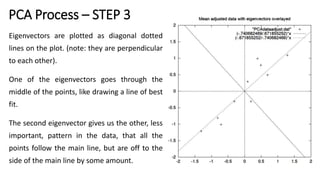

3) Calculate the eigenvalues and eigenvectors of the covariance matrix.
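
One way to sketch this step is with `np.linalg.eigh`, which is intended for symmetric matrices such as a covariance matrix. The matrix below is made up for illustration; sorting by descending eigenvalue is a convenience added here for the later selection step.

```python
import numpy as np

# Hypothetical 2 x 2 covariance matrix (symmetric).
cov = np.array([
    [10.0, 12.0],
    [12.0, 16.0],
])

# eigh returns eigenvalues in ascending order and
# unit-length eigenvectors as the COLUMNS of the second result.
eigenvalues, eigenvectors = np.linalg.eigh(cov)

# Reorder so the largest eigenvalue (most variance) comes first.
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# Each pair satisfies cov @ v = lambda * v.
for i in range(len(eigenvalues)):
    v = eigenvectors[:, i]
    assert np.allclose(cov @ v, eigenvalues[i] * v)

print(eigenvalues)
print(eigenvectors)
```

The sign of each eigenvector is arbitrary, so different libraries or runs may return a vector or its negation; both describe the same direction.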

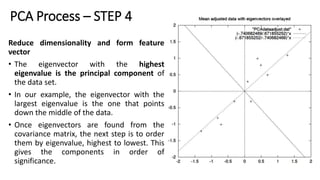

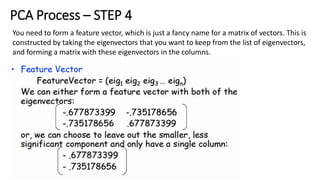

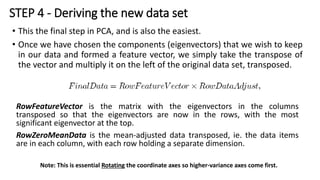

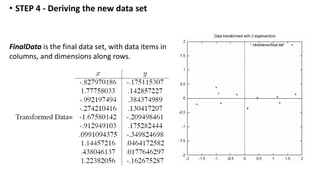

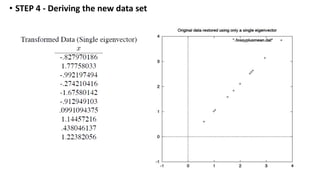

4) Form a feature vector (a matrix built from the chosen eigenvectors) by selecting the eigenvectors that correspond to the largest eigenvalues. Project the centered data onto these eigenvectors to reduce its dimensionality.
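
The selection and projection might look like the following sketch. It assumes the feature vector stores one selected eigenvector per row and that the projection multiplies it by the centered data with one sample per column; the names `k`, `feature_vector`, and `projected` are illustrative, as is the toy data.

```python
import numpy as np

# Hypothetical toy data: 5 samples (rows) x 2 dimensions (columns).
X = np.array([
    [2.0, 1.0],
    [4.0, 3.0],
    [6.0, 8.0],
    [8.0, 7.0],
    [10.0, 11.0],
])
X_centered = X - X.mean(axis=0)

cov = np.cov(X_centered, rowvar=False)
eigenvalues, eigenvectors = np.linalg.eigh(cov)
order = np.argsort(eigenvalues)[::-1]   # indices, largest eigenvalue first

k = 1  # number of components to keep (an arbitrary choice here)

# Rows of feature_vector are the top-k eigenvectors: shape (k, d).
feature_vector = eigenvectors[:, order[:k]].T

# Project: (k, d) @ (d, n) -> (k, n), one column per sample.
projected = feature_vector @ X_centered.T

print(projected.shape)  # (1, 5)
```

With `k = 1`, each two-dimensional sample is reduced to a single coordinate along the direction of greatest variance.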

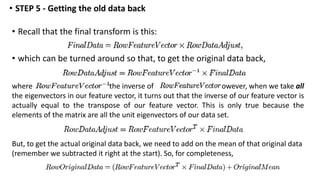

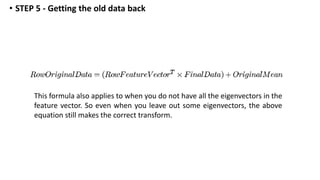

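5) To reconstruct the data, multiply the transpose of the feature vector by the projected data, then add the mean back. The reconstruction is exact only if all eigenvectors were kept; if some were discarded, it is an approximation of the original data.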
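
A sketch of the reconstruction under the same assumptions as before (made-up data, eigenvectors stored as rows of the feature vector). The helper `reconstruct` is hypothetical and exists only to compare keeping all components with keeping one.

```python
import numpy as np

# Hypothetical toy data: 5 samples (rows) x 2 dimensions (columns).
X = np.array([
    [2.0, 1.0],
    [4.0, 3.0],
    [6.0, 8.0],
    [8.0, 7.0],
    [10.0, 11.0],
])
mean = X.mean(axis=0)
X_centered = X - mean

eigenvalues, eigenvectors = np.linalg.eigh(np.cov(X_centered, rowvar=False))
order = np.argsort(eigenvalues)[::-1]   # largest eigenvalue first


def reconstruct(k):
    """Project onto the top-k eigenvectors, then map back to the original space."""
    feature_vector = eigenvectors[:, order[:k]].T   # shape (k, d)
    projected = feature_vector @ X_centered.T       # shape (k, n)
    # Transposed feature vector times projected data, then add the mean back.
    return (feature_vector.T @ projected).T + mean  # shape (n, d)


full = reconstruct(2)    # all eigenvectors kept
approx = reconstruct(1)  # one eigenvector discarded

print(np.allclose(full, X))    # True
print(np.allclose(approx, X))  # False
print(np.sum((approx - X) ** 2))
```

For this data, the total squared error of the one-component reconstruction equals (n - 1) times the discarded eigenvalue, which is why dropping components with small eigenvalues loses little information.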