This document provides an overview of principal component analysis (PCA). Its main points are:

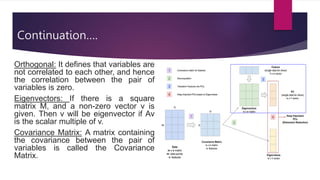

- PCA is a dimensionality reduction technique that transforms a set of possibly correlated variables into a new set of uncorrelated variables called principal components.

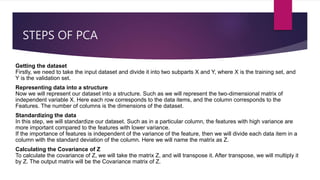

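- PCA involves three steps: standardizing the data, calculating the covariance matrix, and determining the principal components through eigendecomposition of that matrix. The eigenvectors give the component directions, and the eigenvalues give the variance captured along each direction.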

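- PCA reduces dimensionality while preserving as much of the data's variance (its information) as possible, which helps with data storage, algorithm speed, and visualization. However, each principal component is a linear combination of the original features, so the components are harder to interpret than the features themselves.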
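
The three steps listed above (standardize, compute the covariance matrix, eigendecompose) can be sketched in a few lines of NumPy. This is a minimal illustration, not the document's own implementation: the function name `pca`, its parameters, and the synthetic data are all assumptions made for the example, and it assumes no feature is constant (a zero standard deviation would cause a division by zero).

```python
import numpy as np

def pca(X, n_components):
    """Hypothetical helper: project X (n_samples x n_features) onto its
    top n_components principal components."""
    # Step 1: standardize each feature to zero mean and unit variance.
    X_std = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

    # Step 2: covariance matrix of the standardized features.
    cov = np.cov(X_std, rowvar=False)

    # Step 3: eigendecomposition. eigh is meant for symmetric matrices
    # and returns eigenvalues in ascending order, so we re-sort descending.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    # Keep the leading eigenvectors and project the data onto them.
    components = eigvecs[:, :n_components]
    scores = X_std @ components

    # Fraction of total variance captured by each kept component.
    explained = eigvals[:n_components] / eigvals.sum()
    return scores, components, explained

# Synthetic data (an assumption for illustration): two strongly
# correlated features plus one independent feature.
rng = np.random.default_rng(0)
base = rng.normal(size=(200, 1))
X = np.hstack([
    base + 0.1 * rng.normal(size=(200, 1)),
    2 * base + 0.1 * rng.normal(size=(200, 1)),
    rng.normal(size=(200, 1)),
])

scores, components, explained = pca(X, n_components=2)
print(scores.shape)   # three features reduced to two components
print(explained)      # share of variance kept by each component
```

The columns of `scores` are uncorrelated with each other, which is the property described in the first bullet. Each column of `components` holds the weights of the original features in that component, which is why the components are linear mixtures rather than directly interpretable features.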