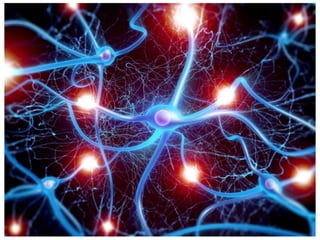

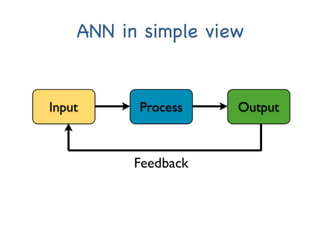

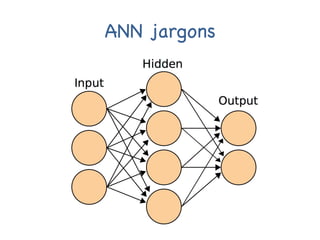

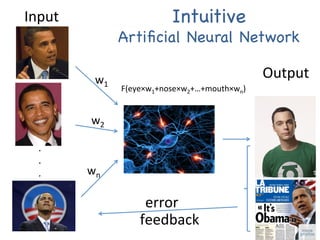

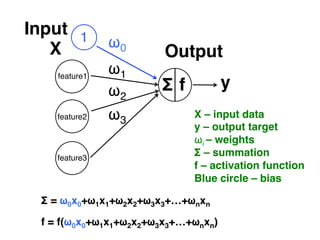

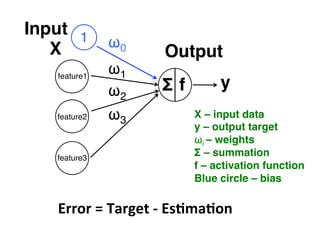

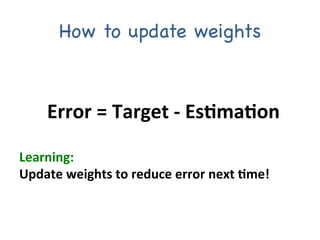

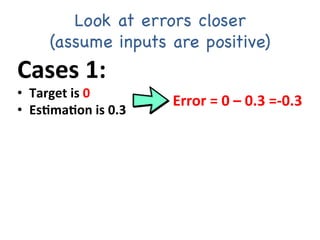

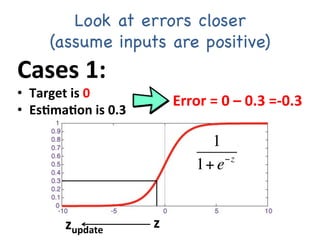

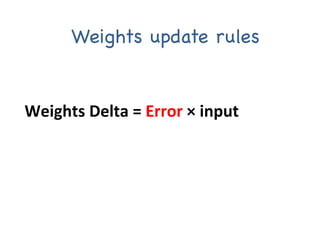

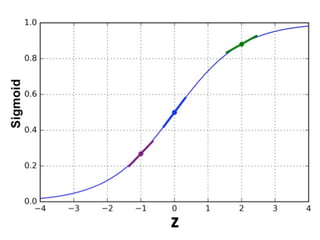

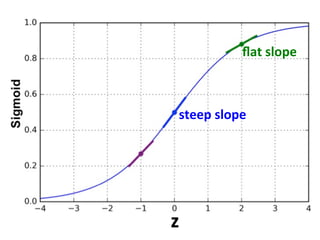

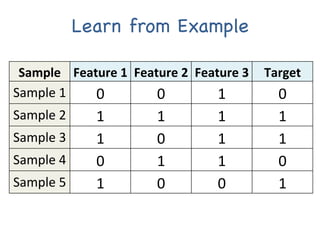

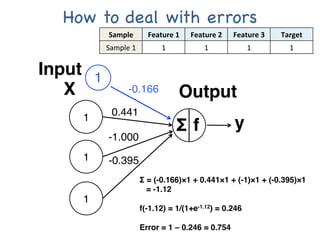

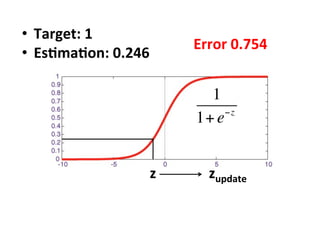

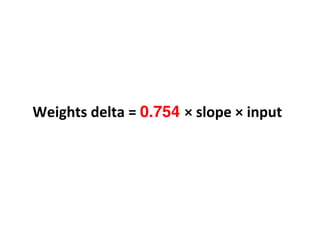

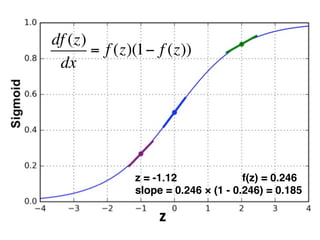

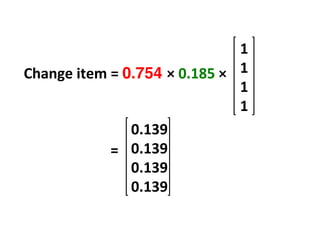

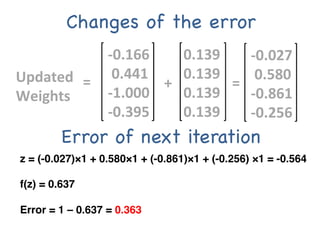

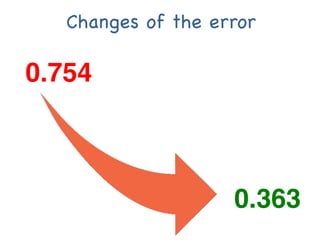

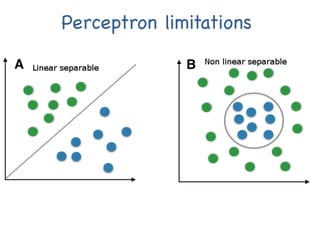

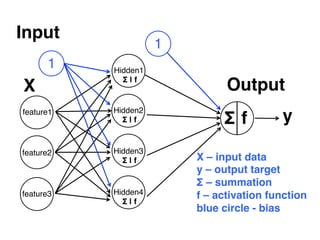

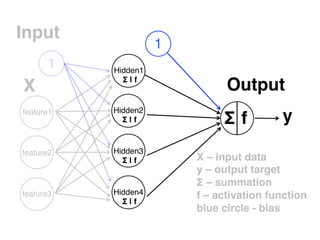

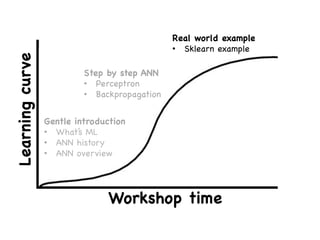

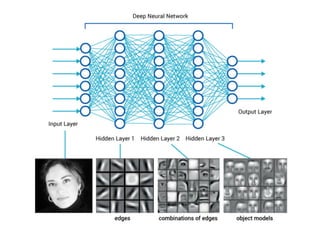

This document gives an introductory overview of artificial neural networks (ANNs) and machine learning, covering their history, key concepts, and applications. It explains the structure of an ANN, how it learns, how prediction error is reduced, and the different cases that arise when handling errors in predictions. It also points to resources and examples for further learning about ANNs and machine learning practices.