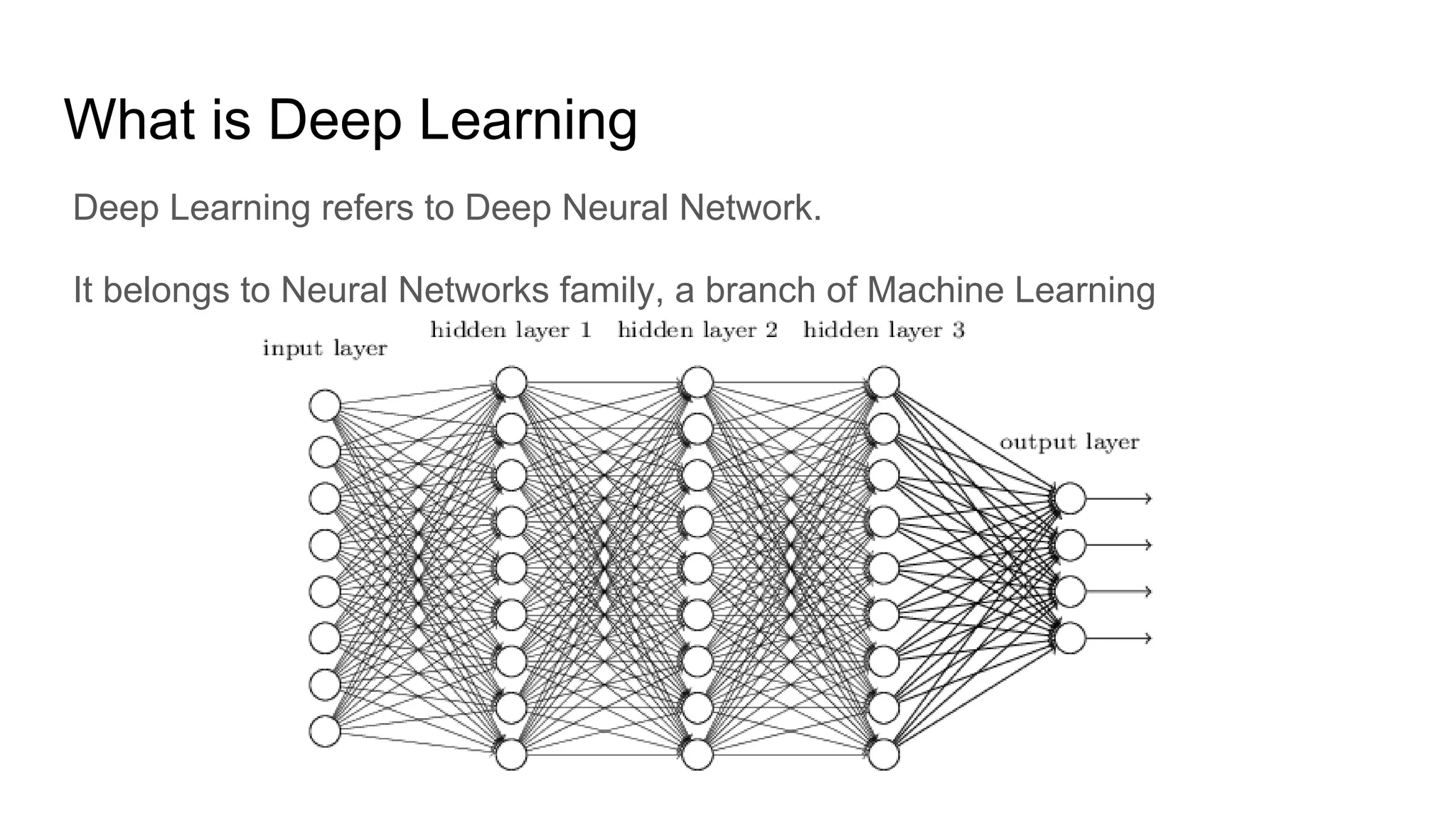

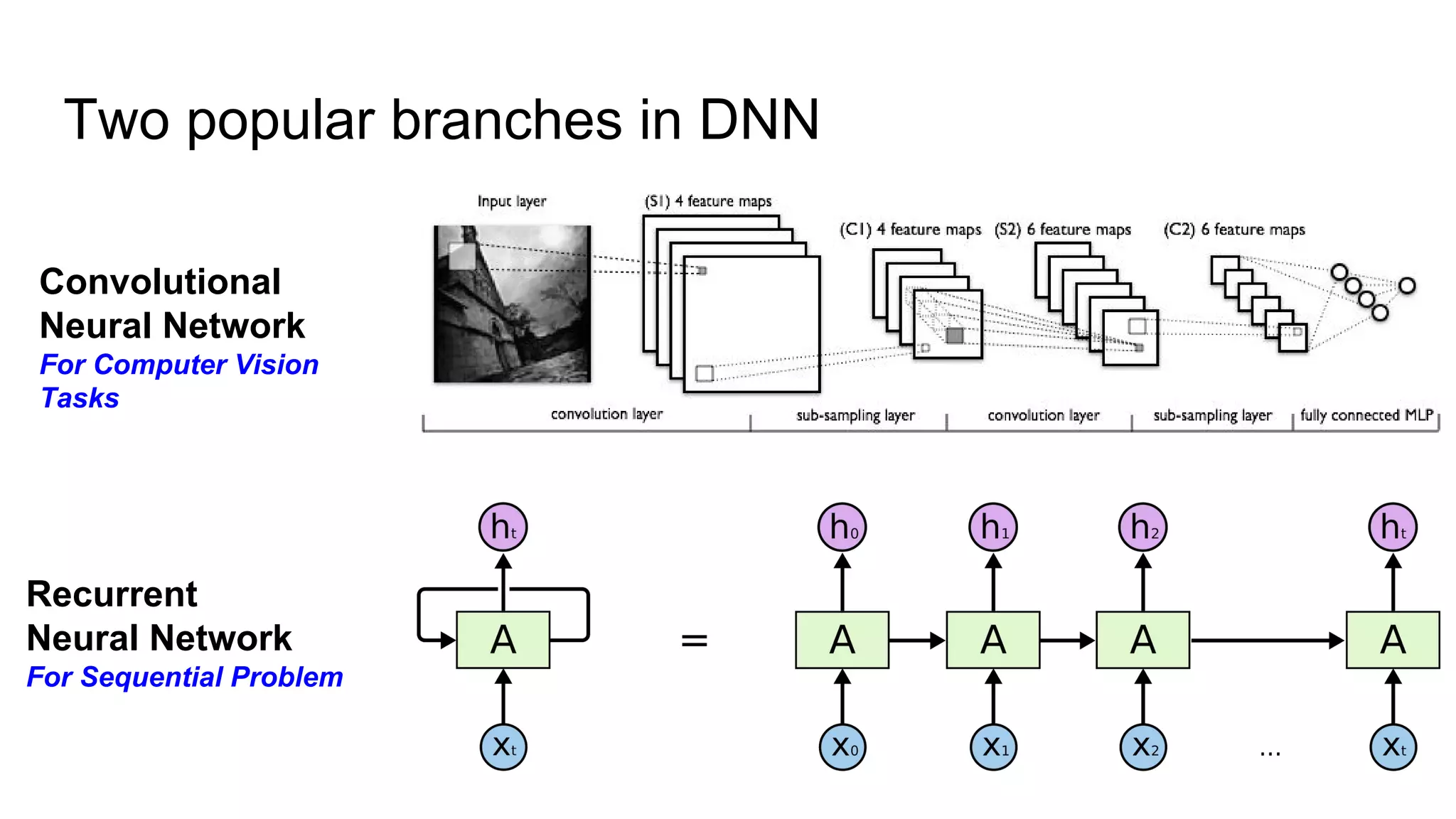

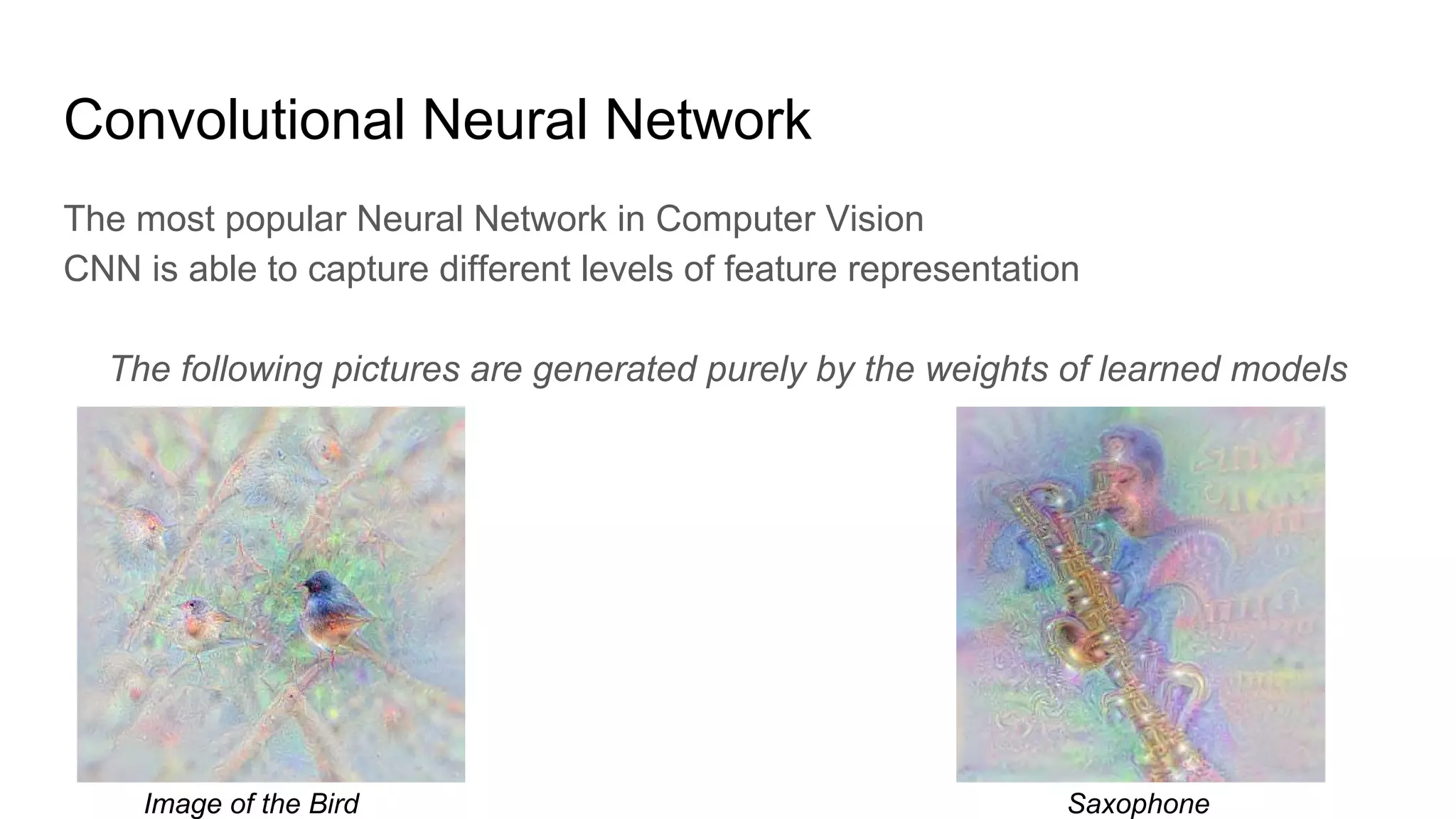

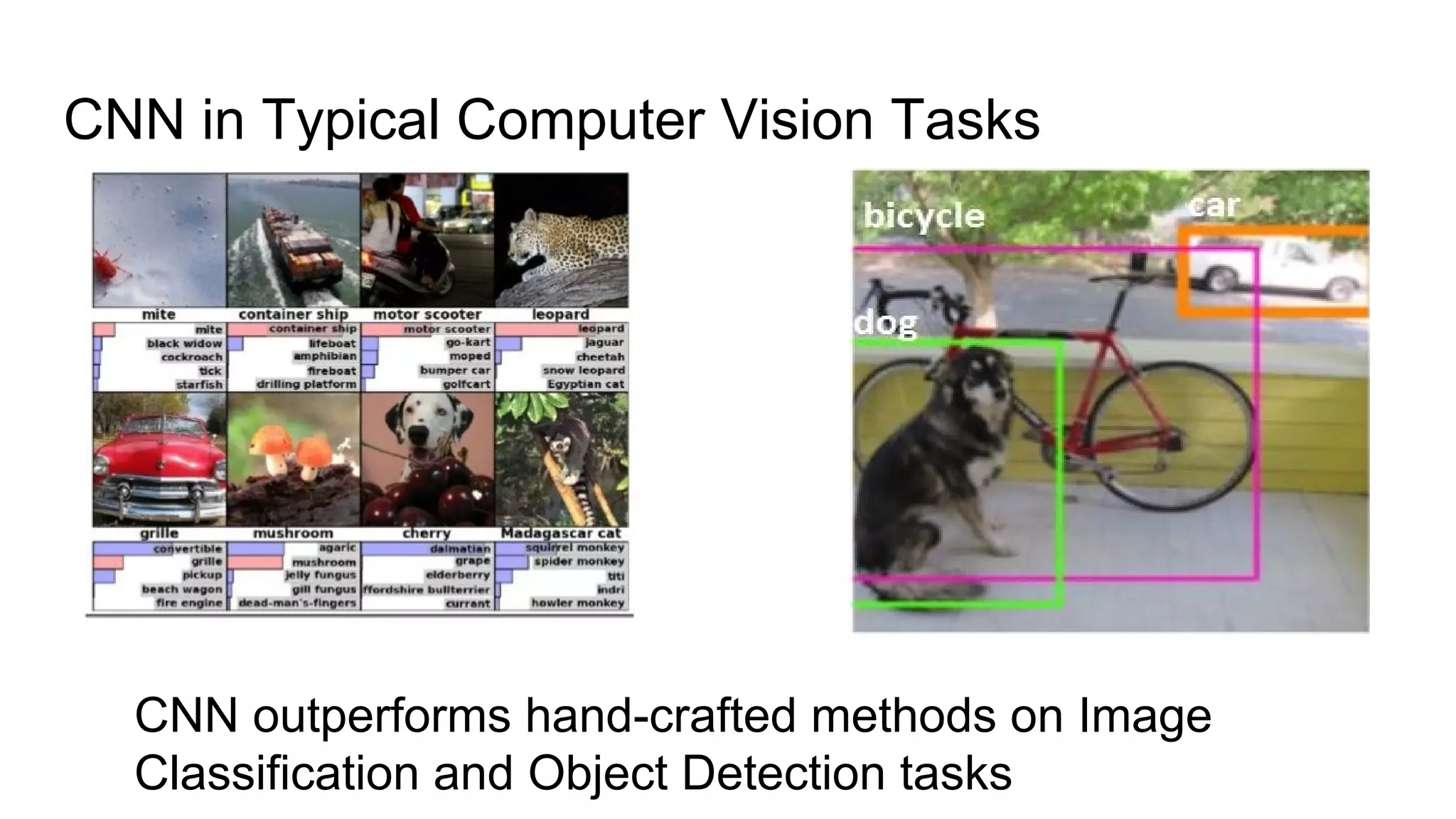

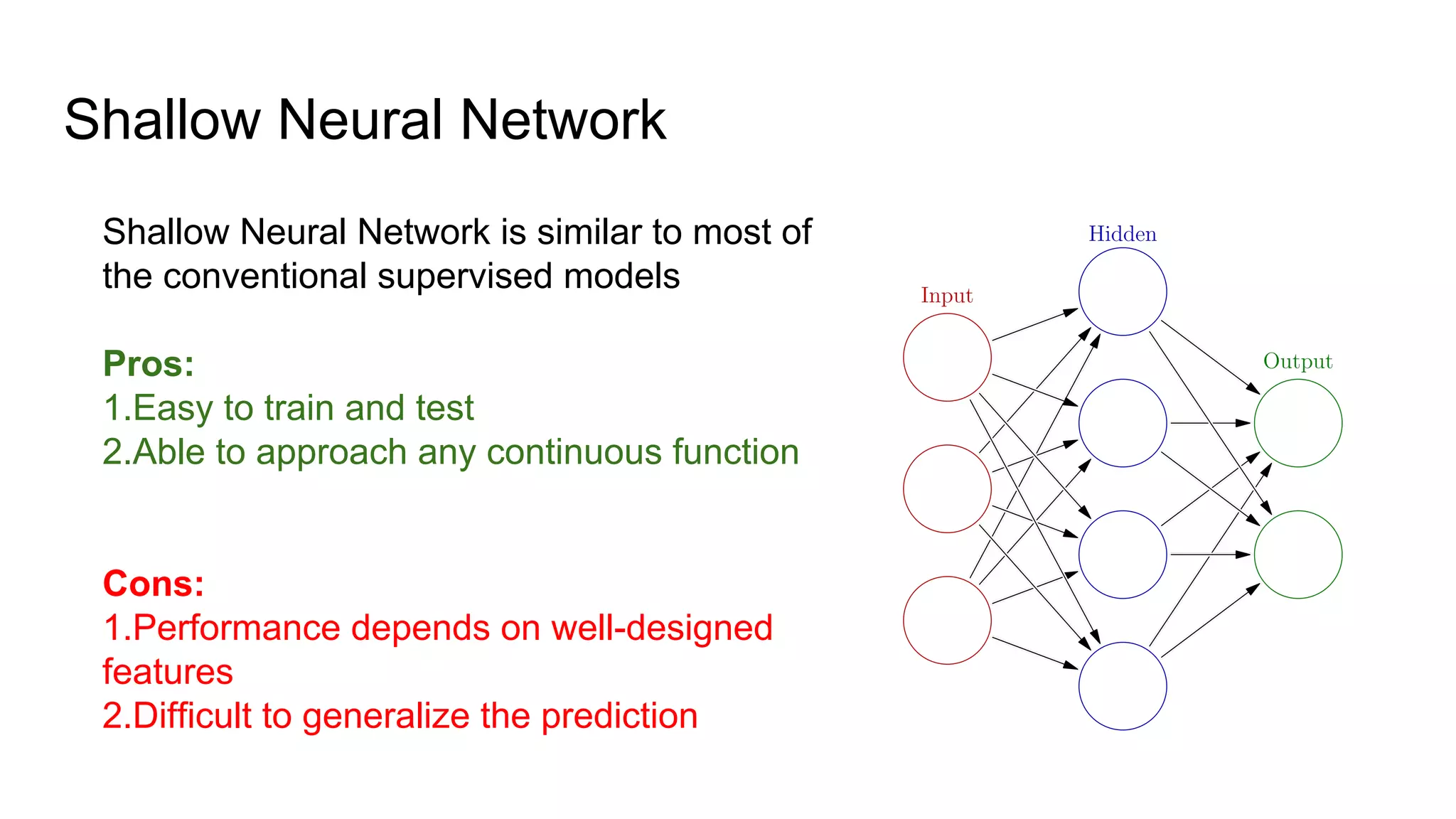

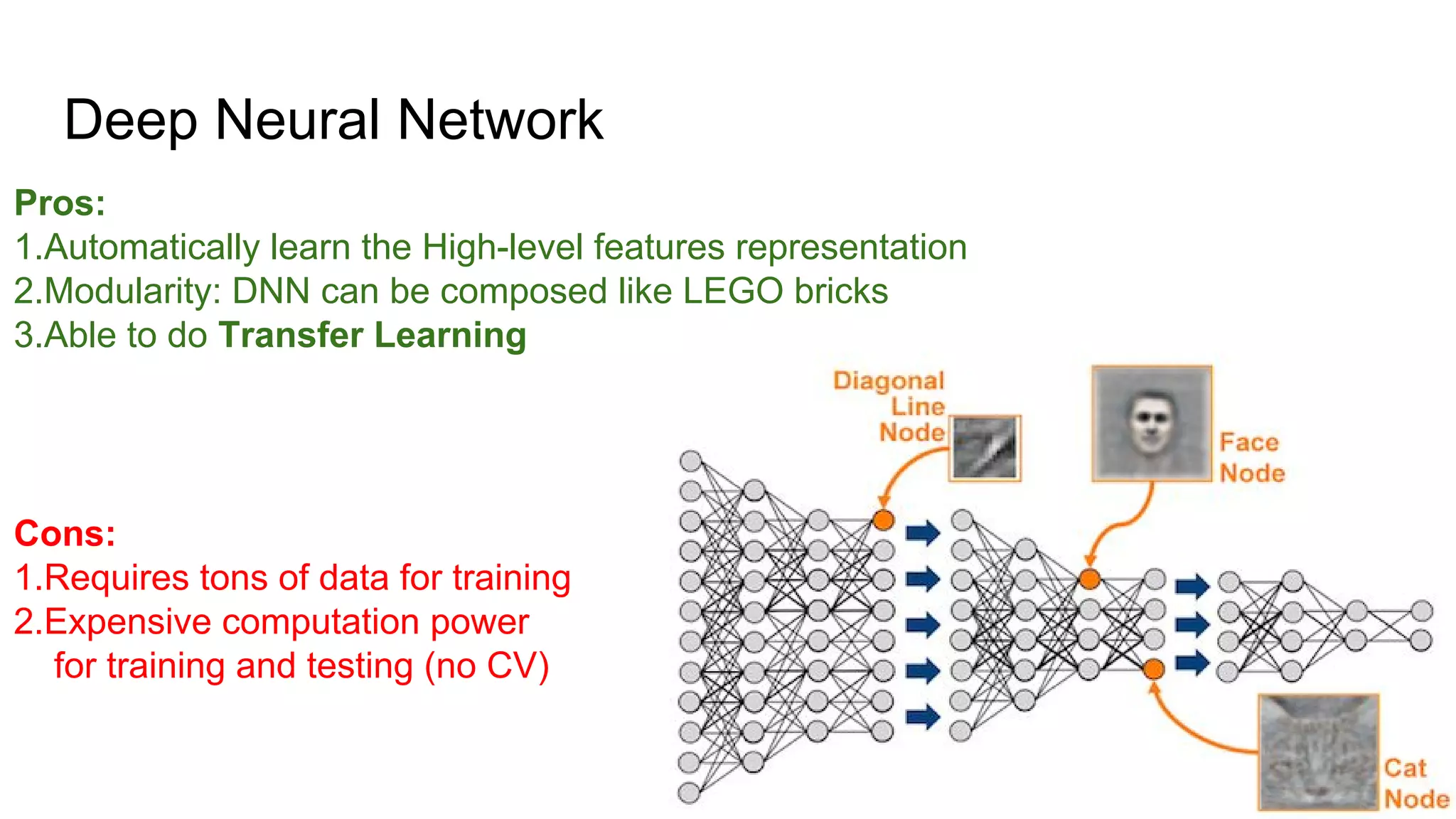

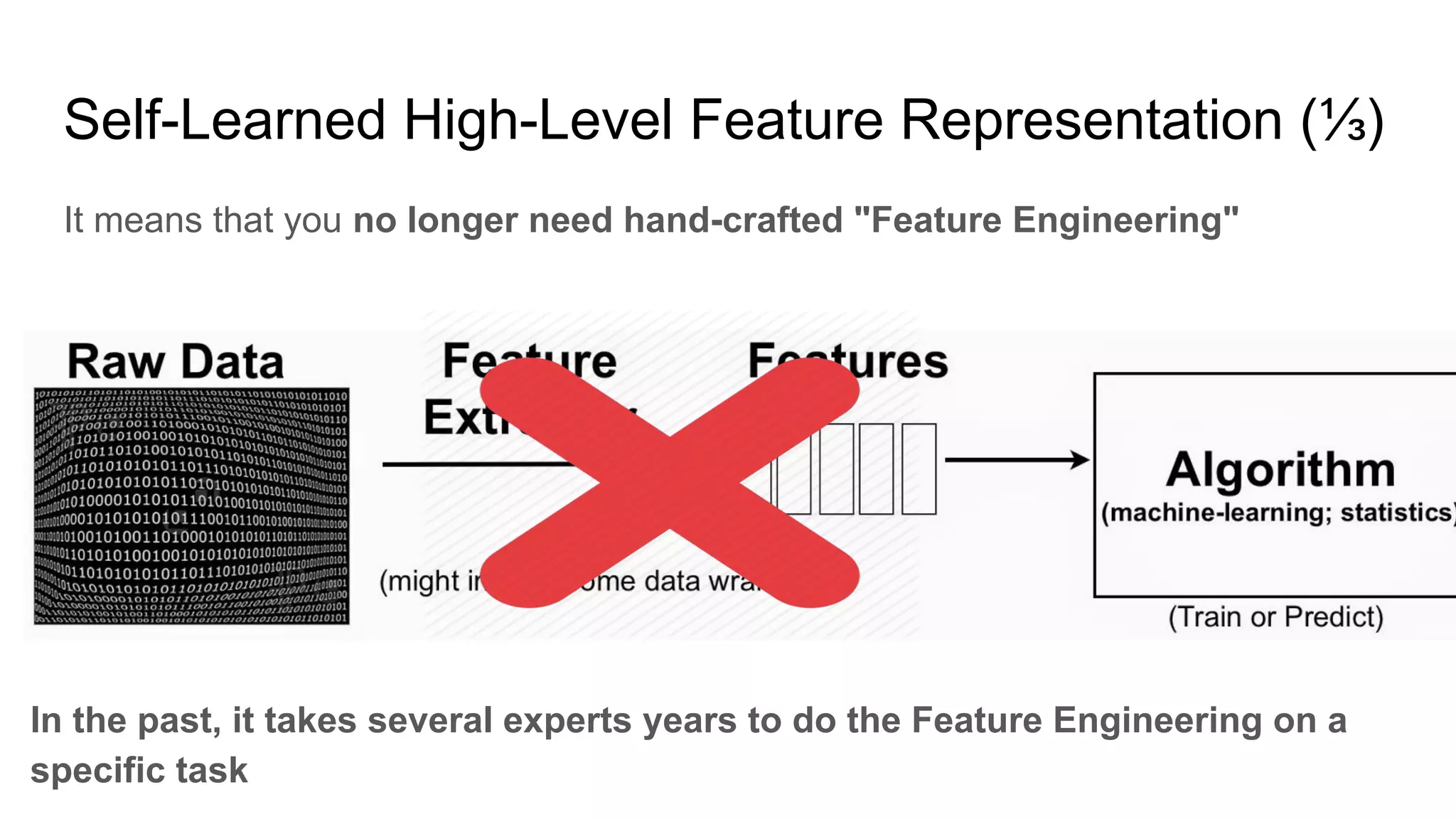

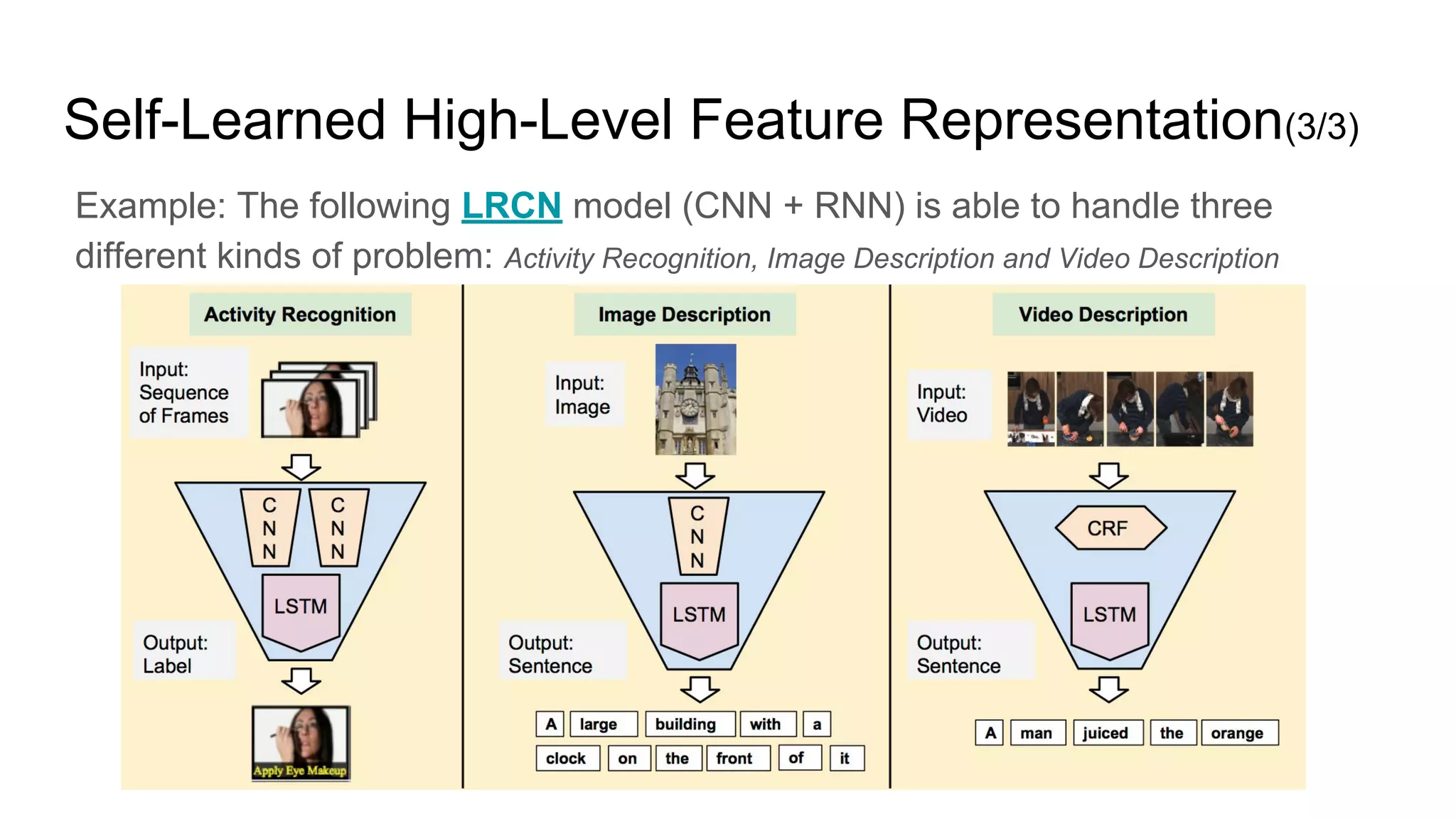

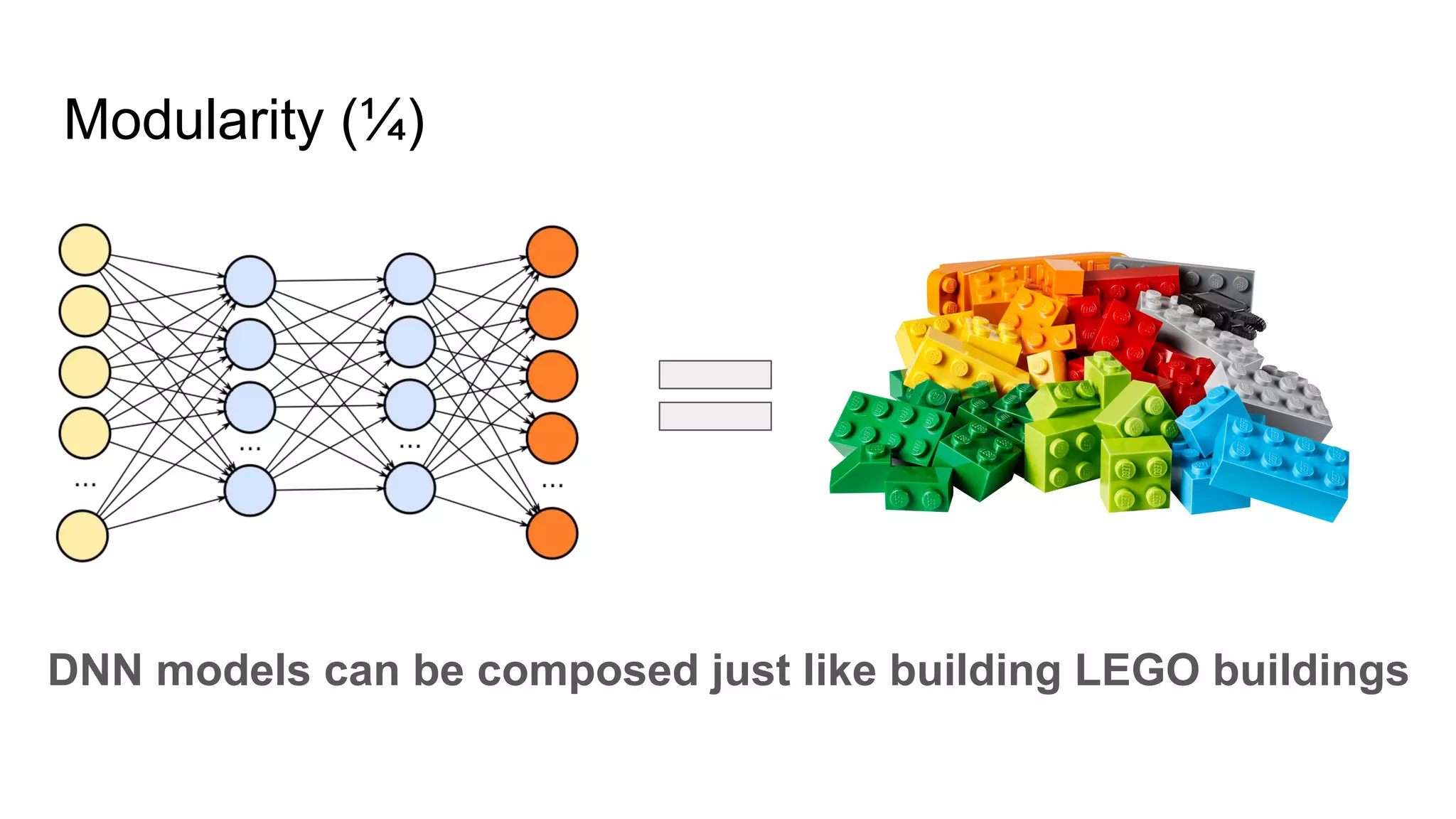

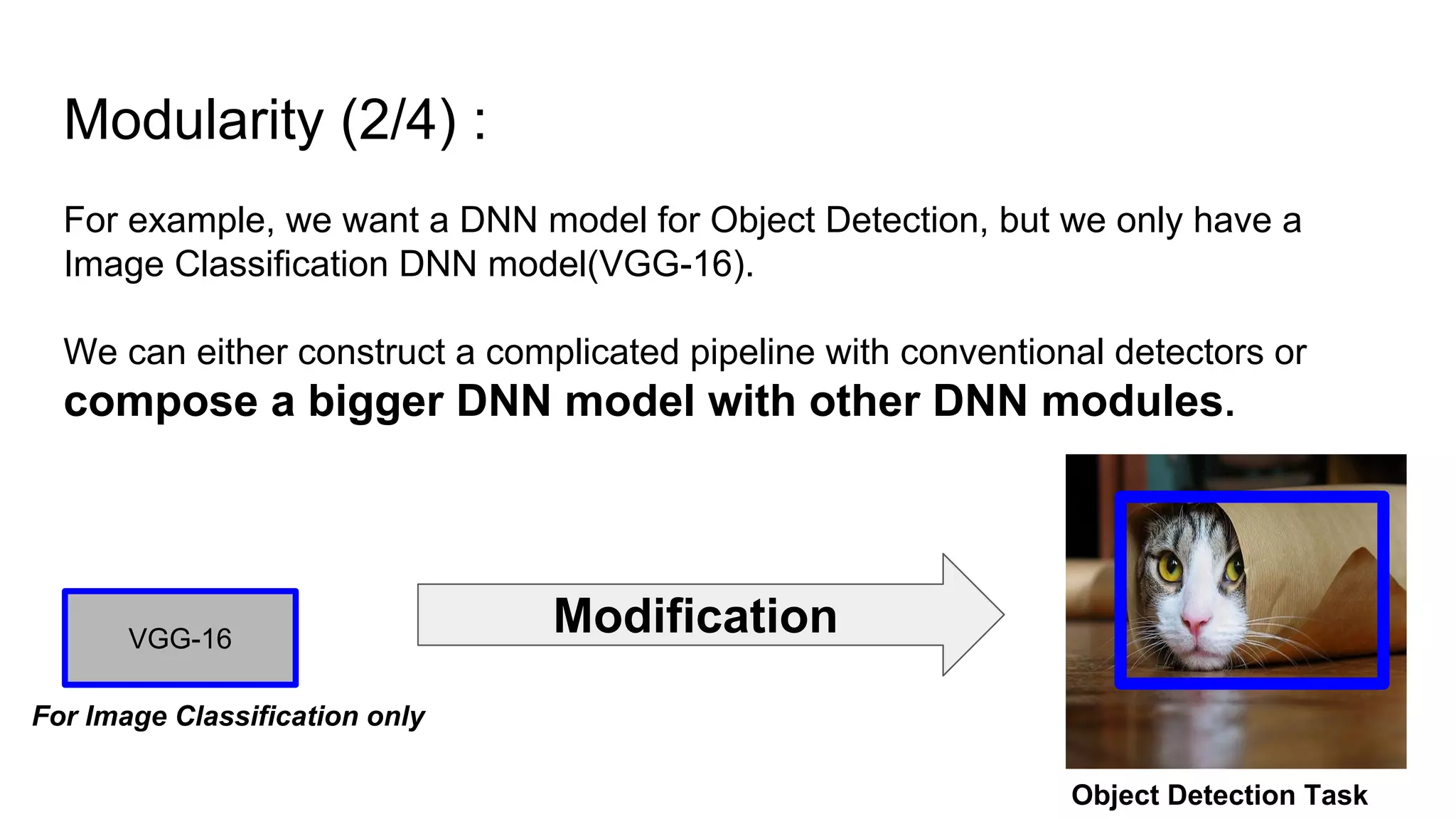

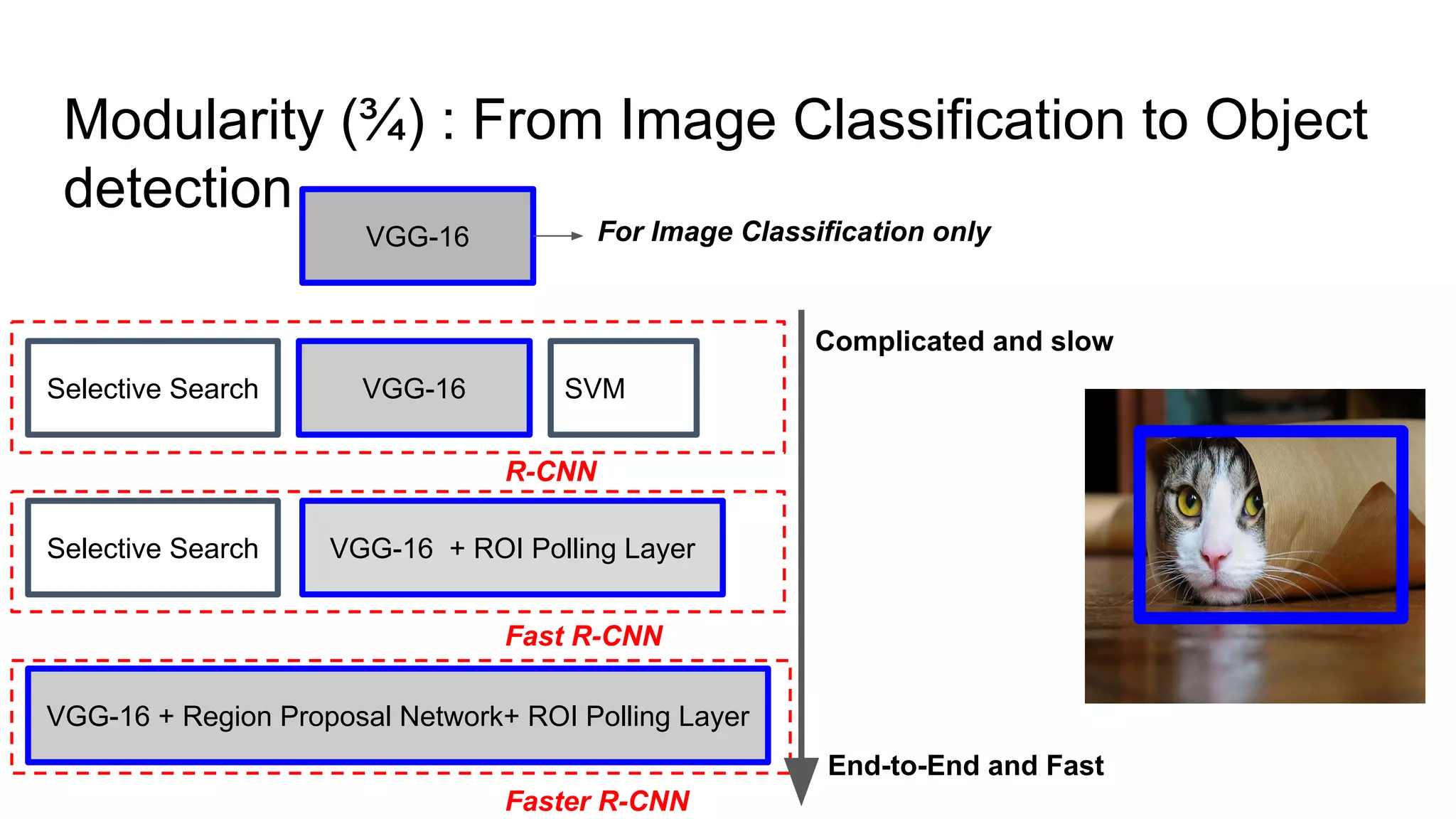

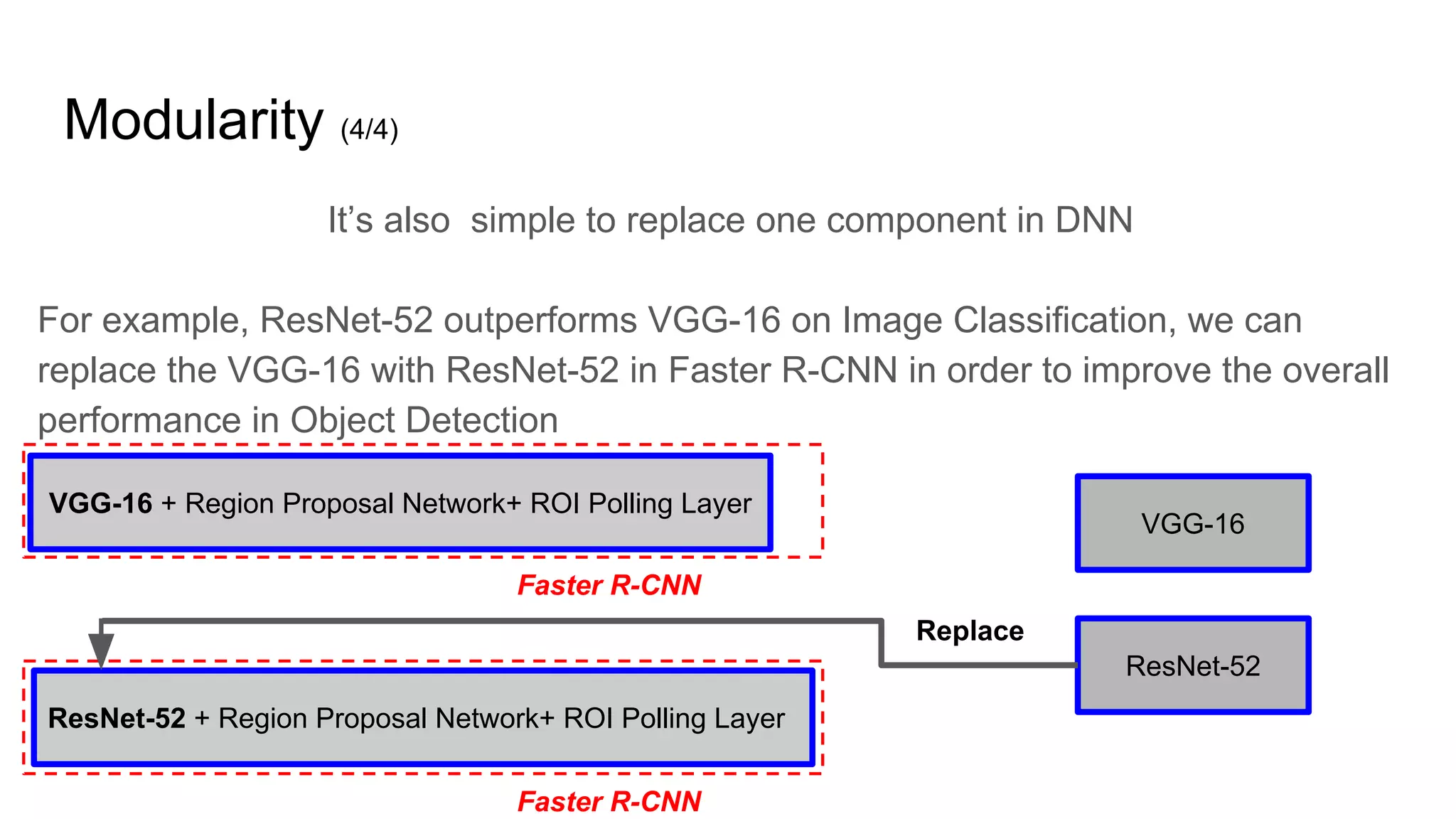

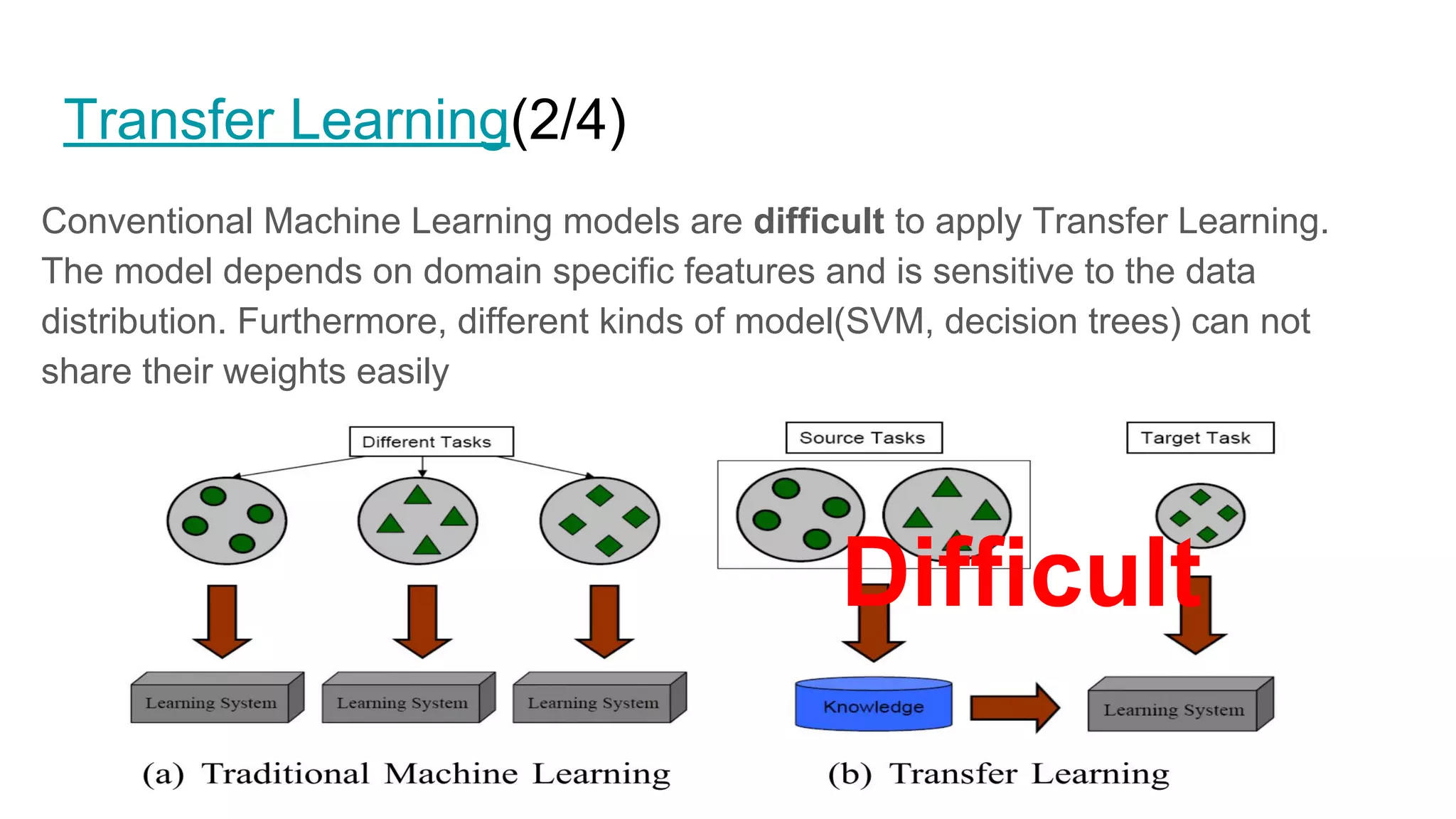

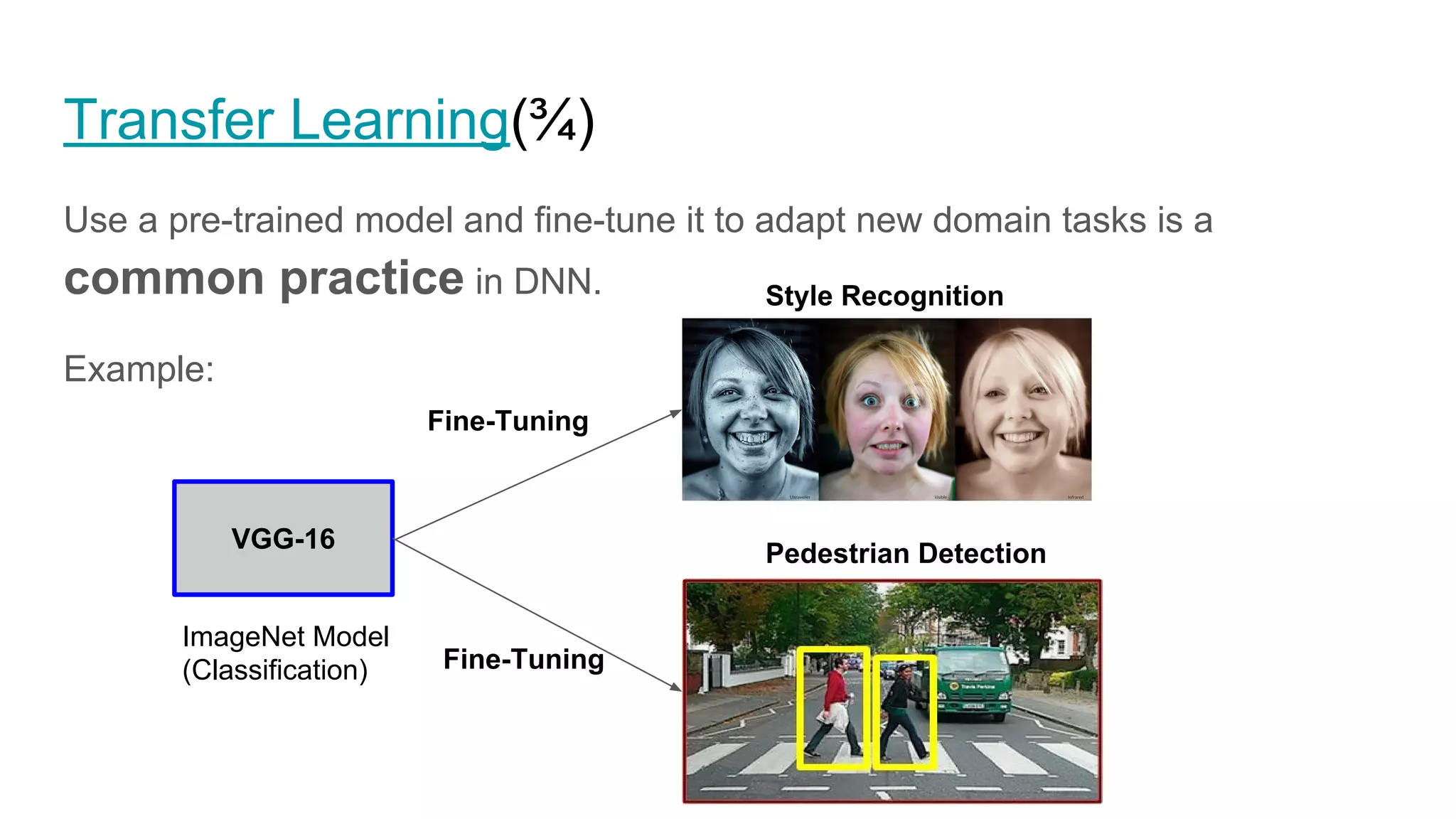

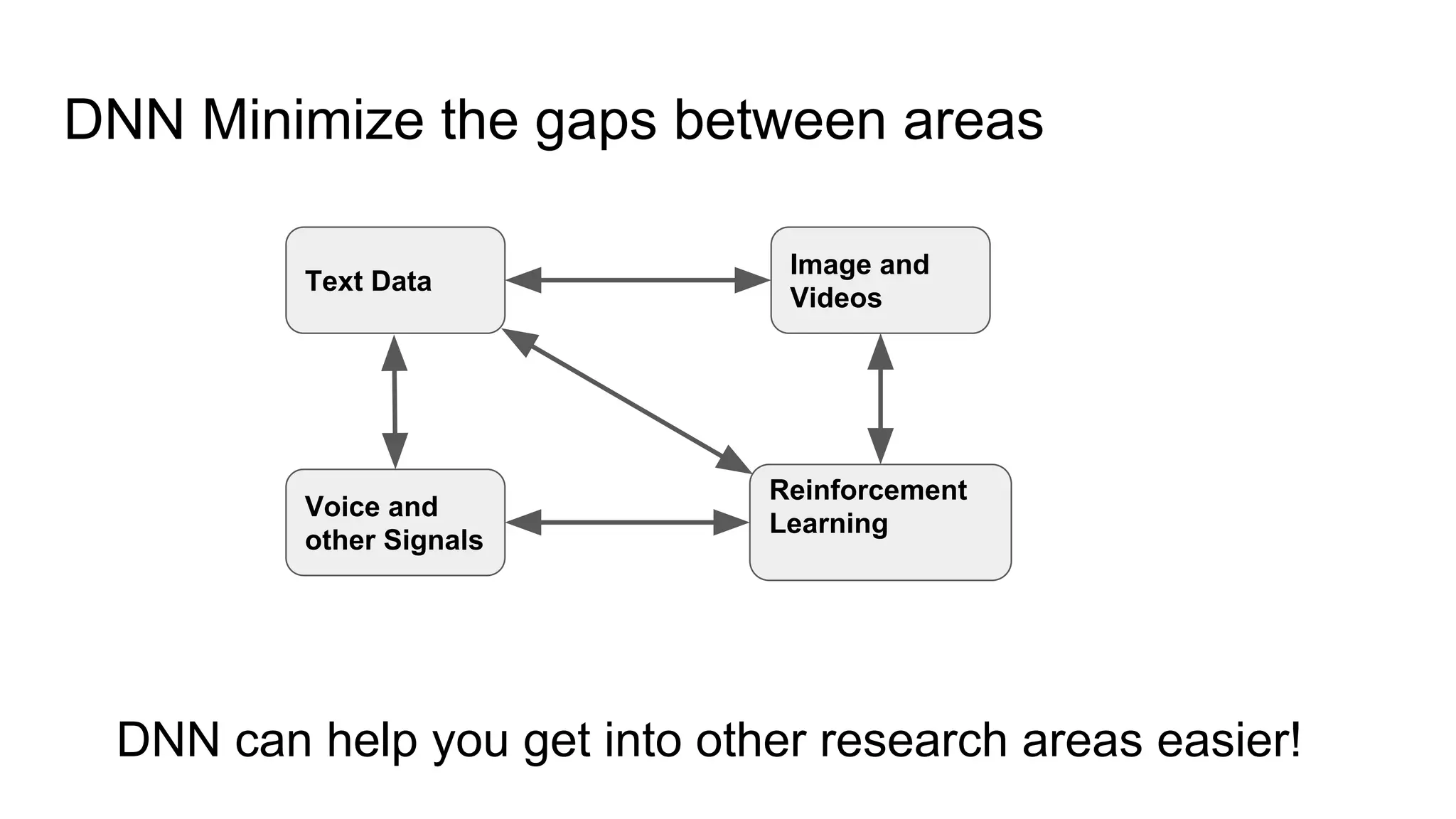

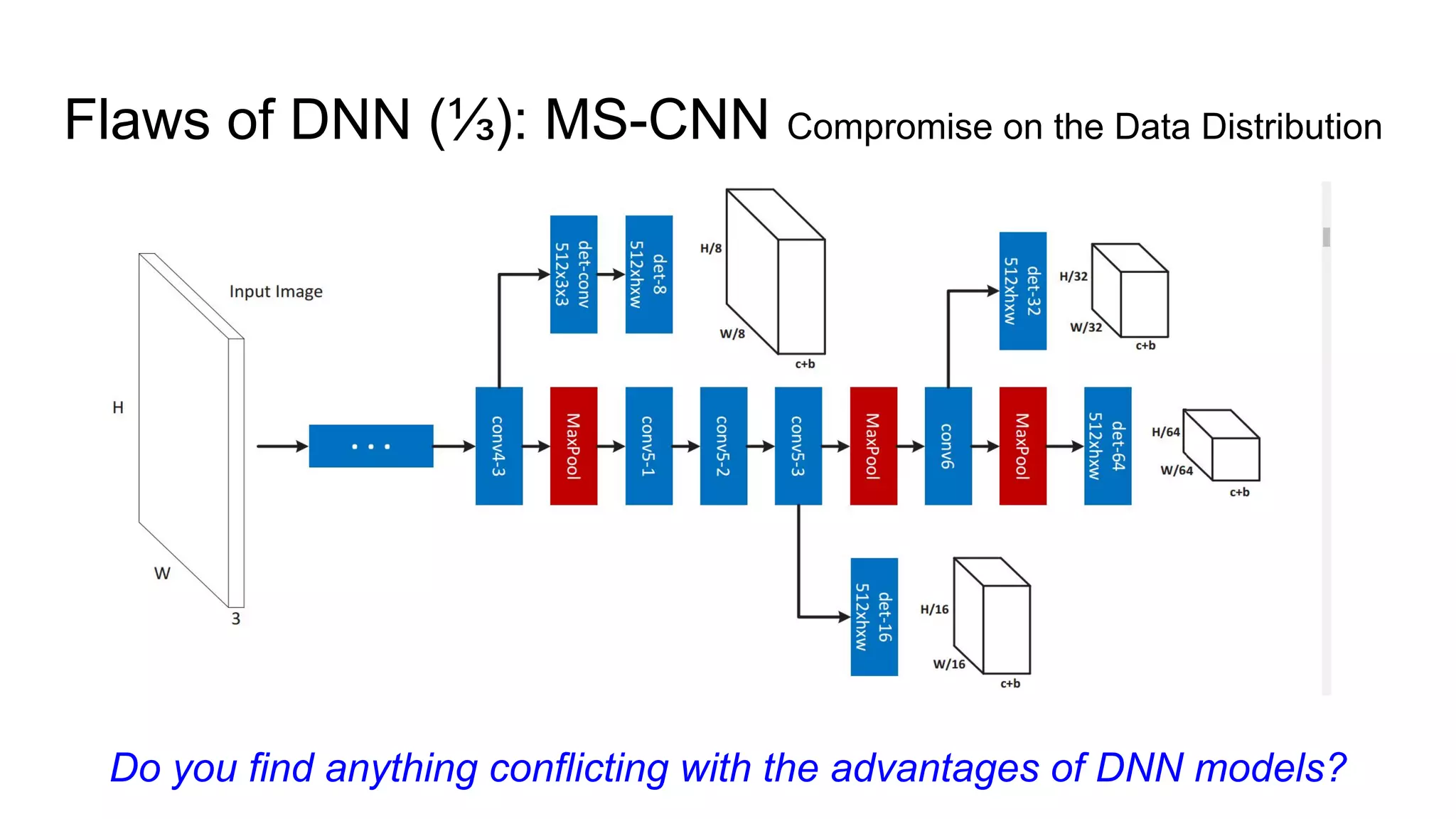

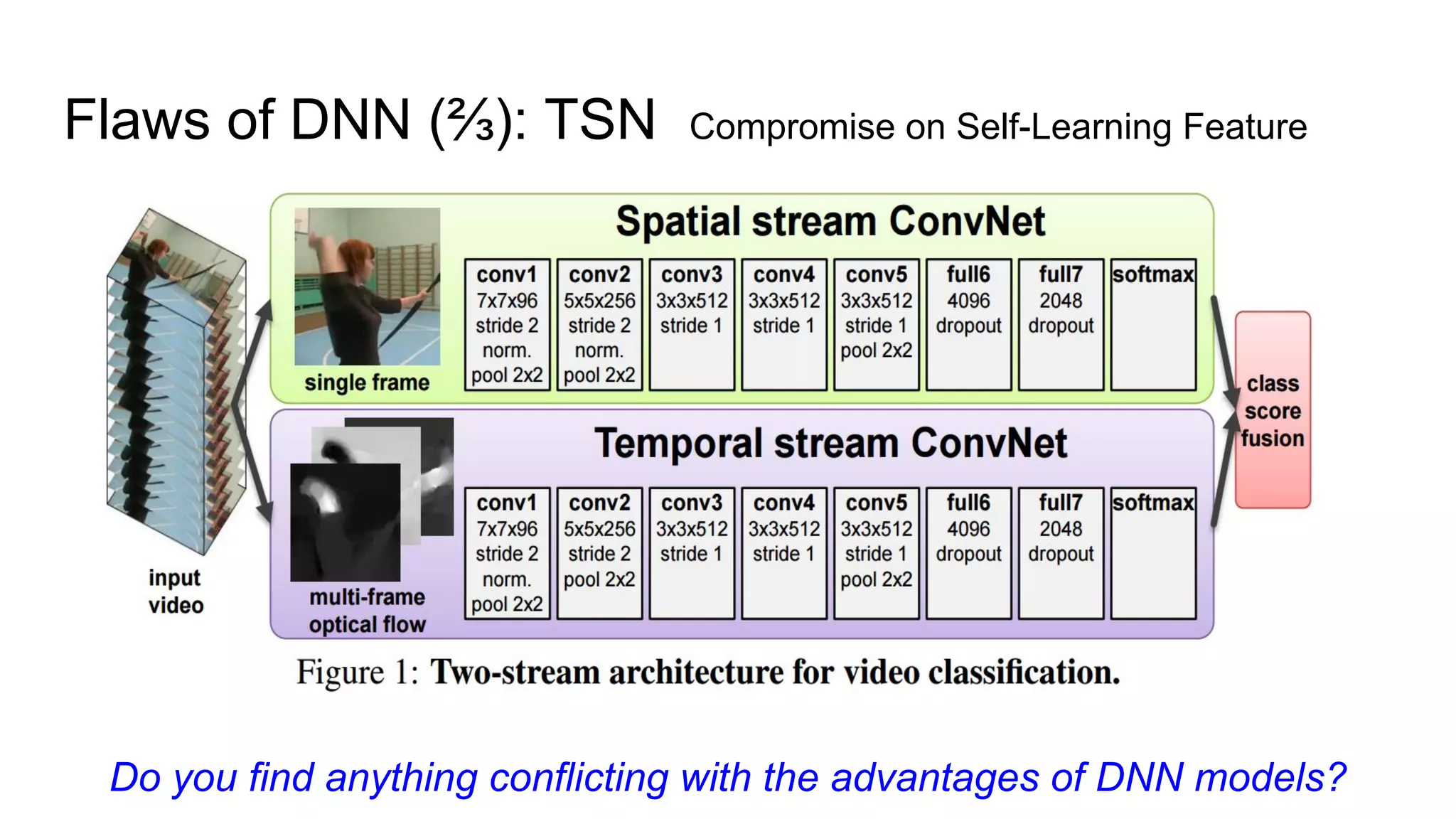

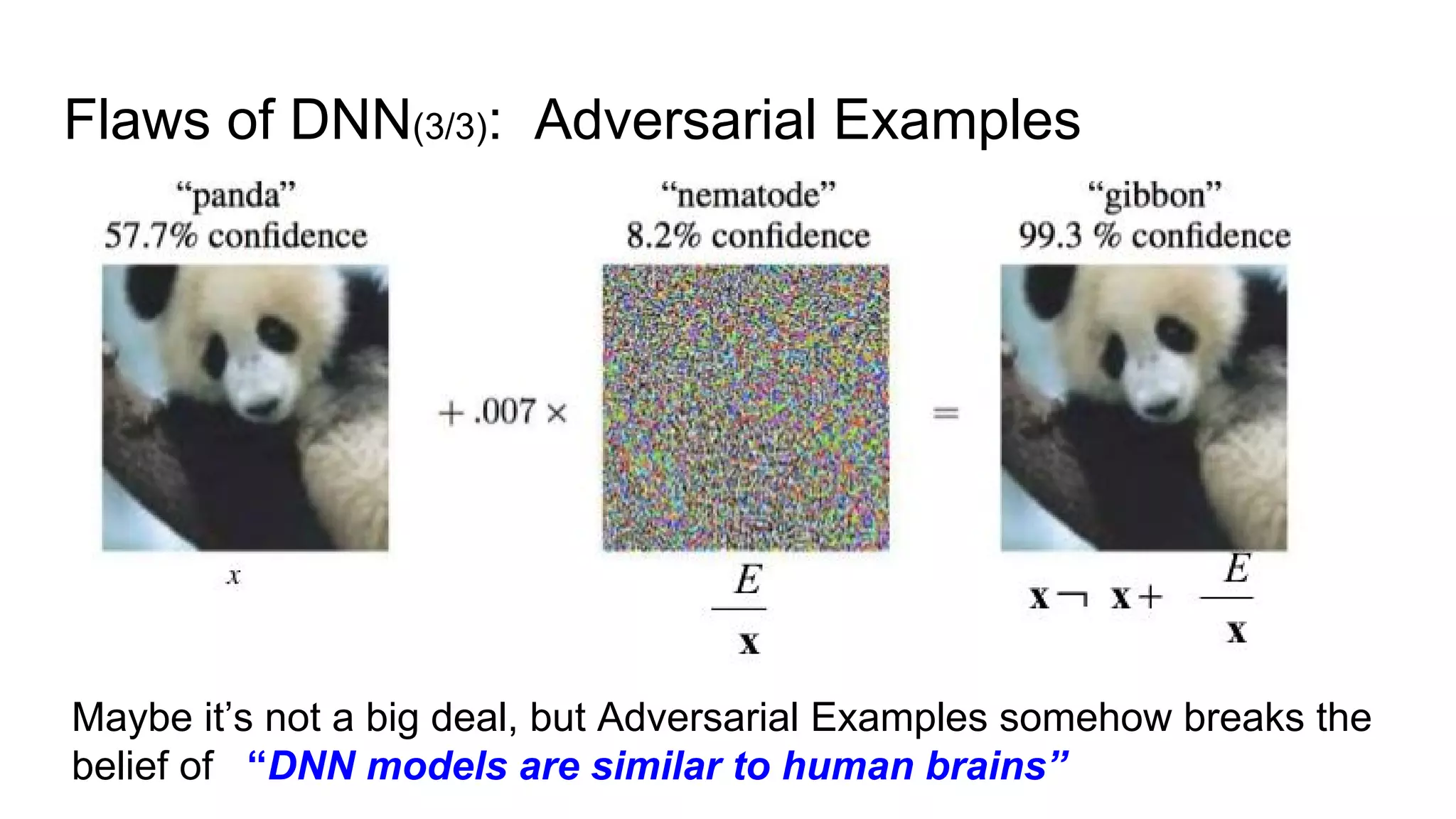

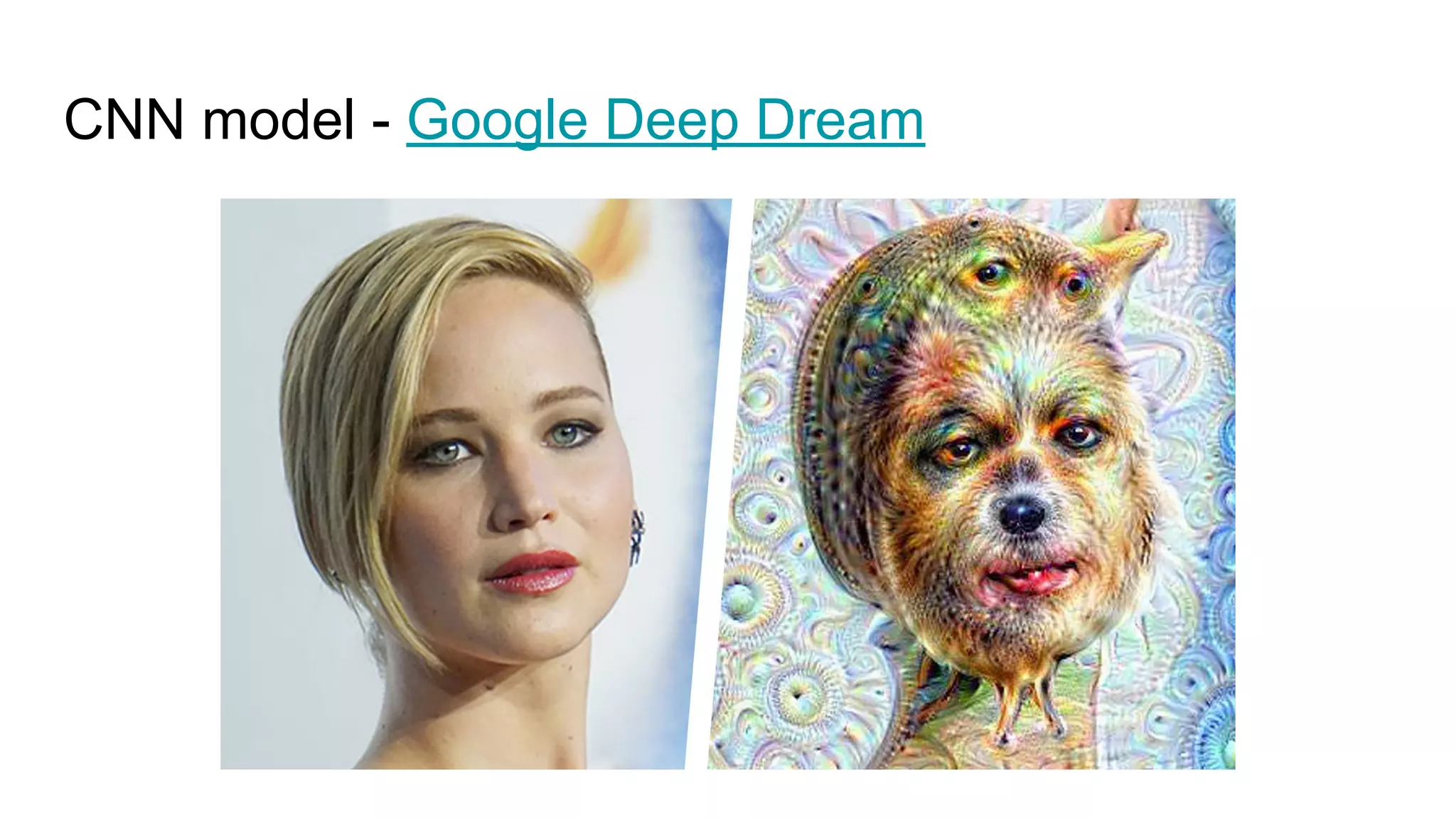

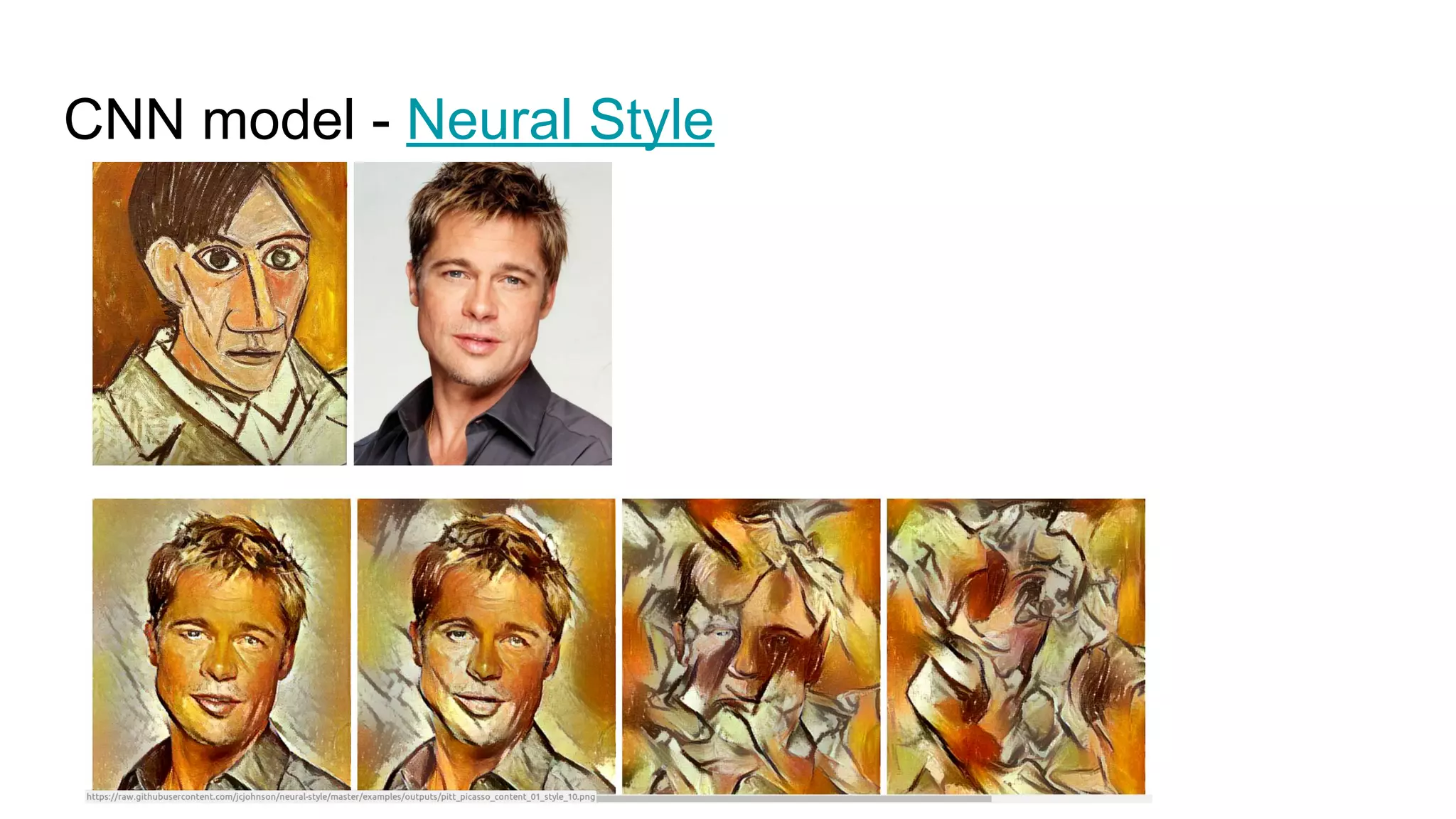

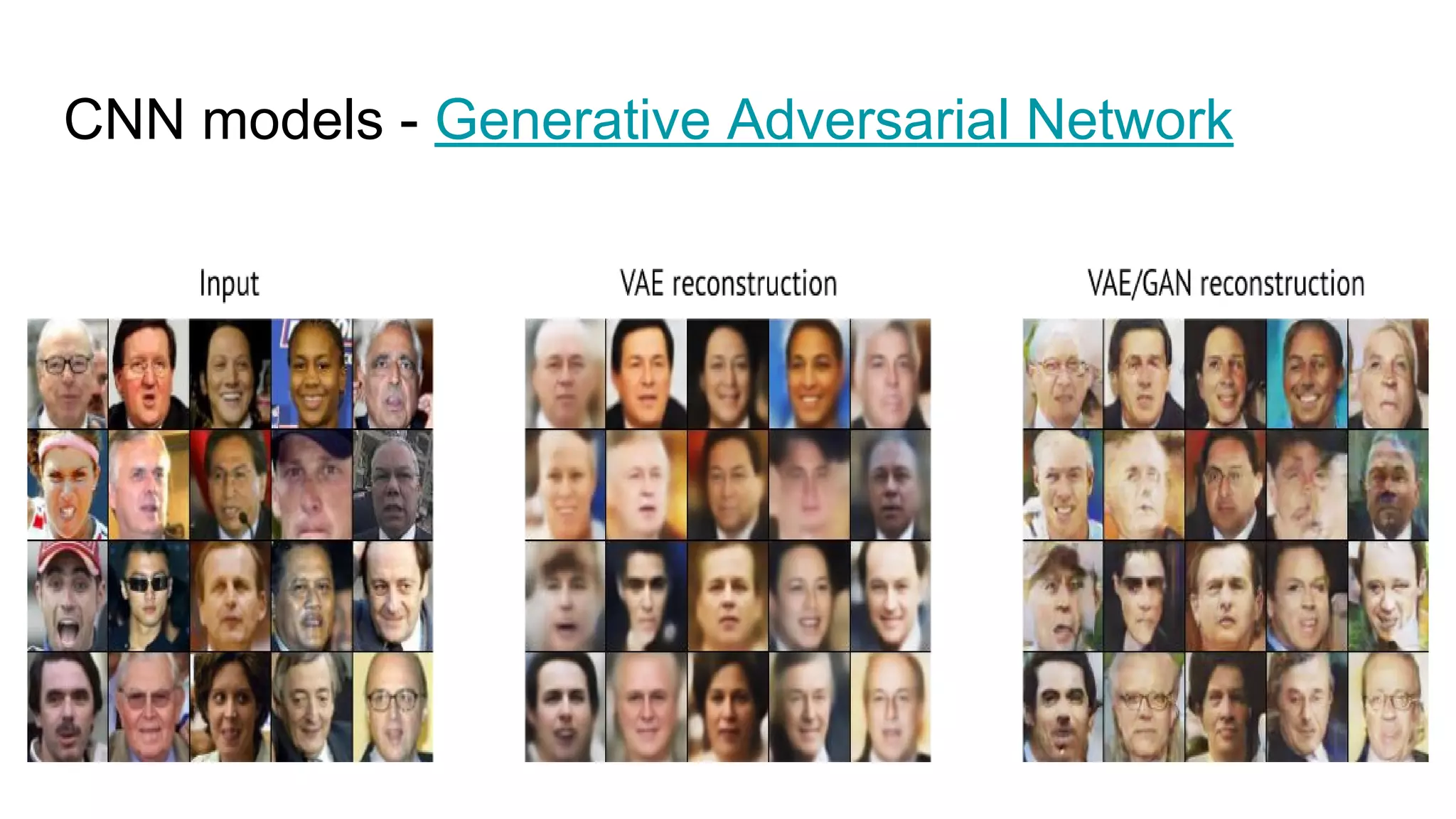

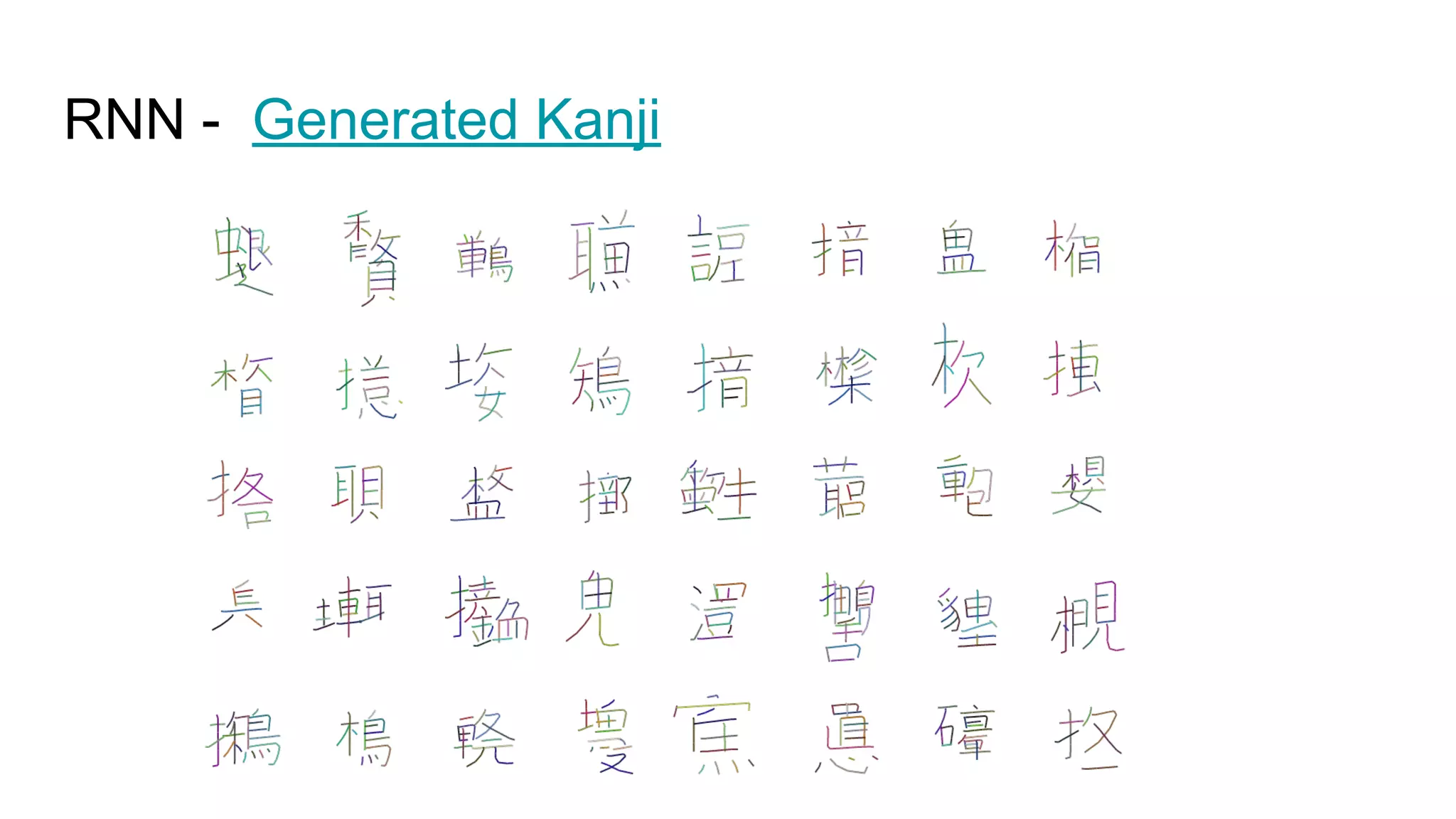

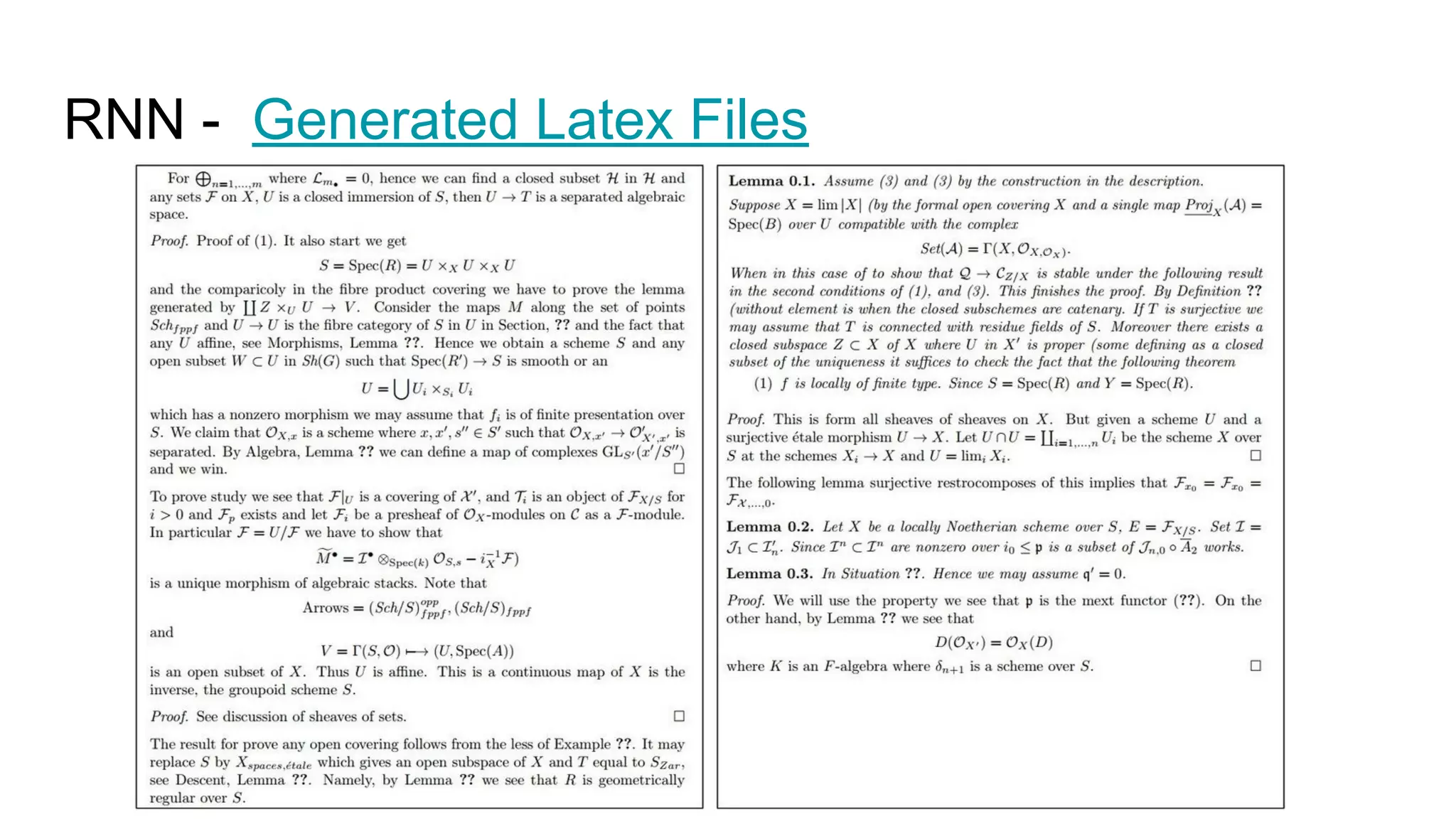

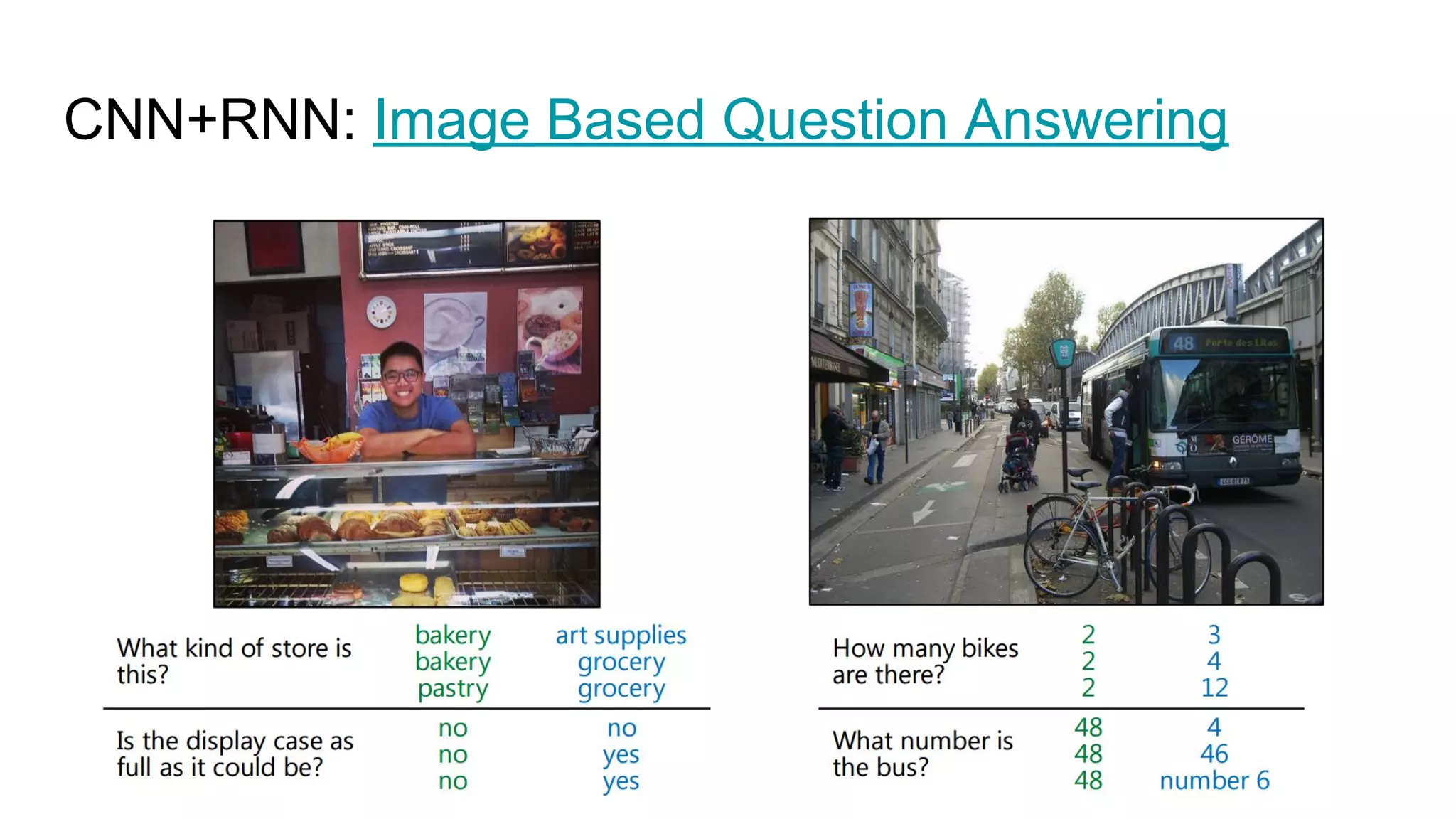

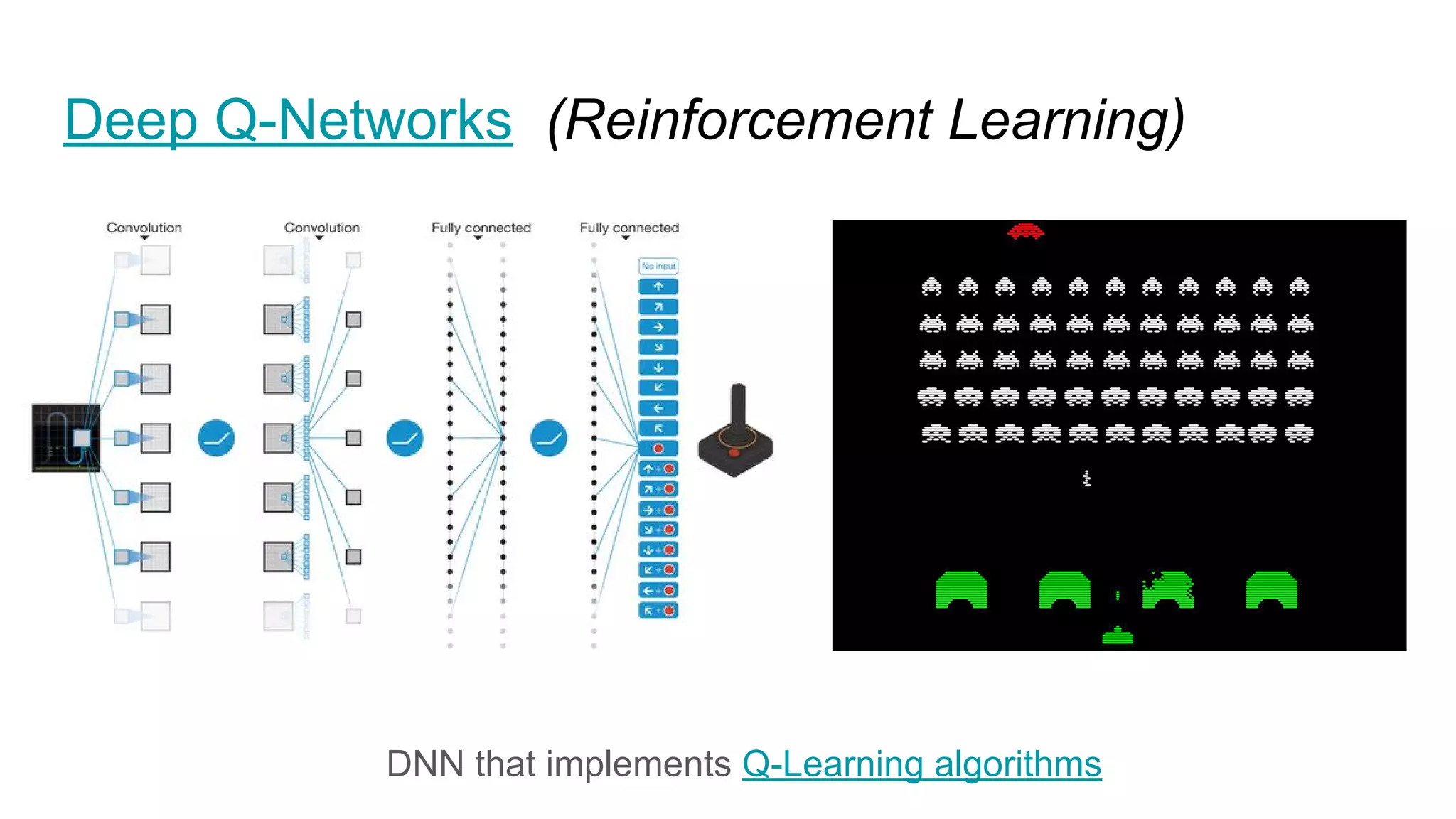

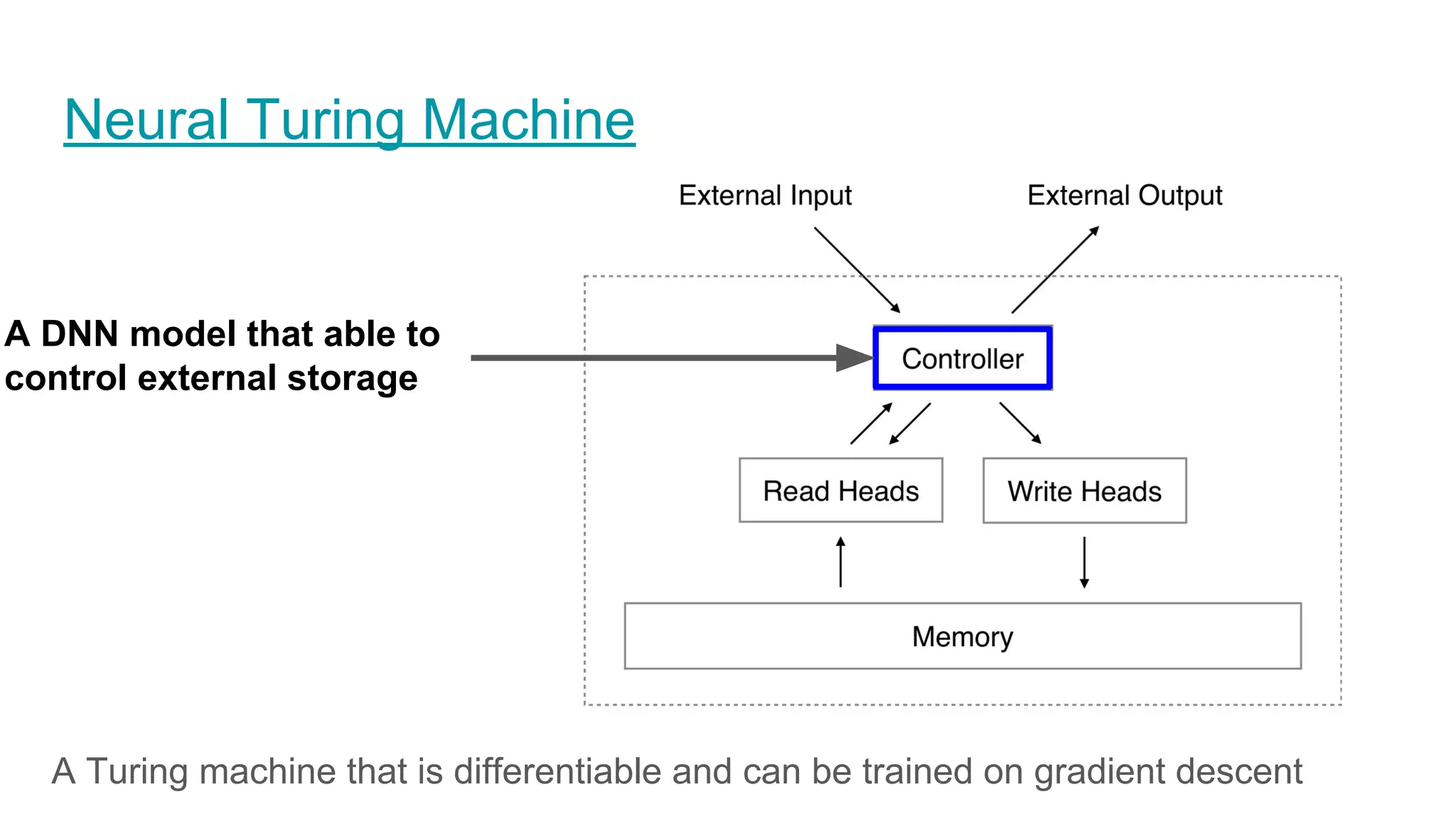

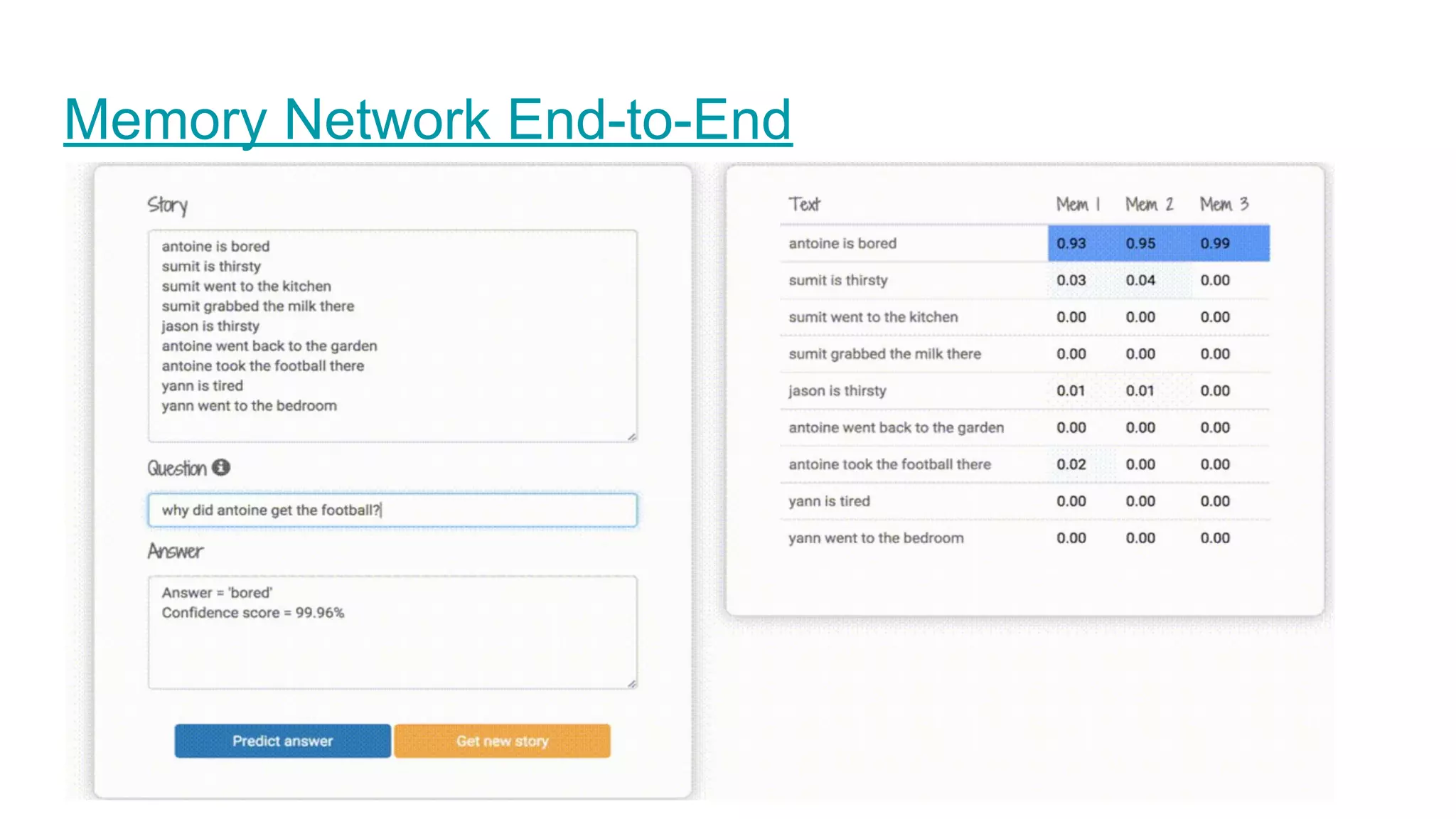

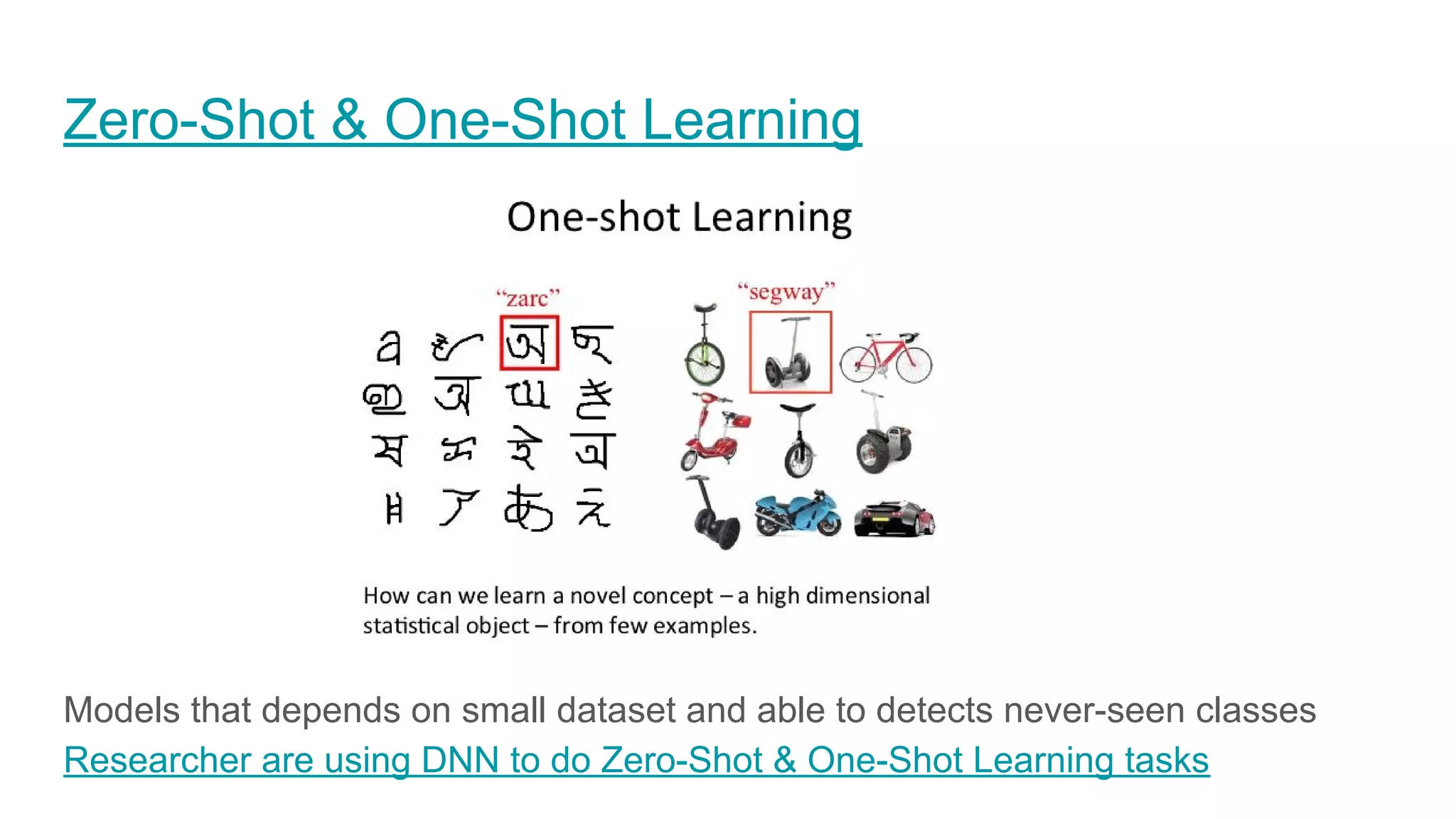

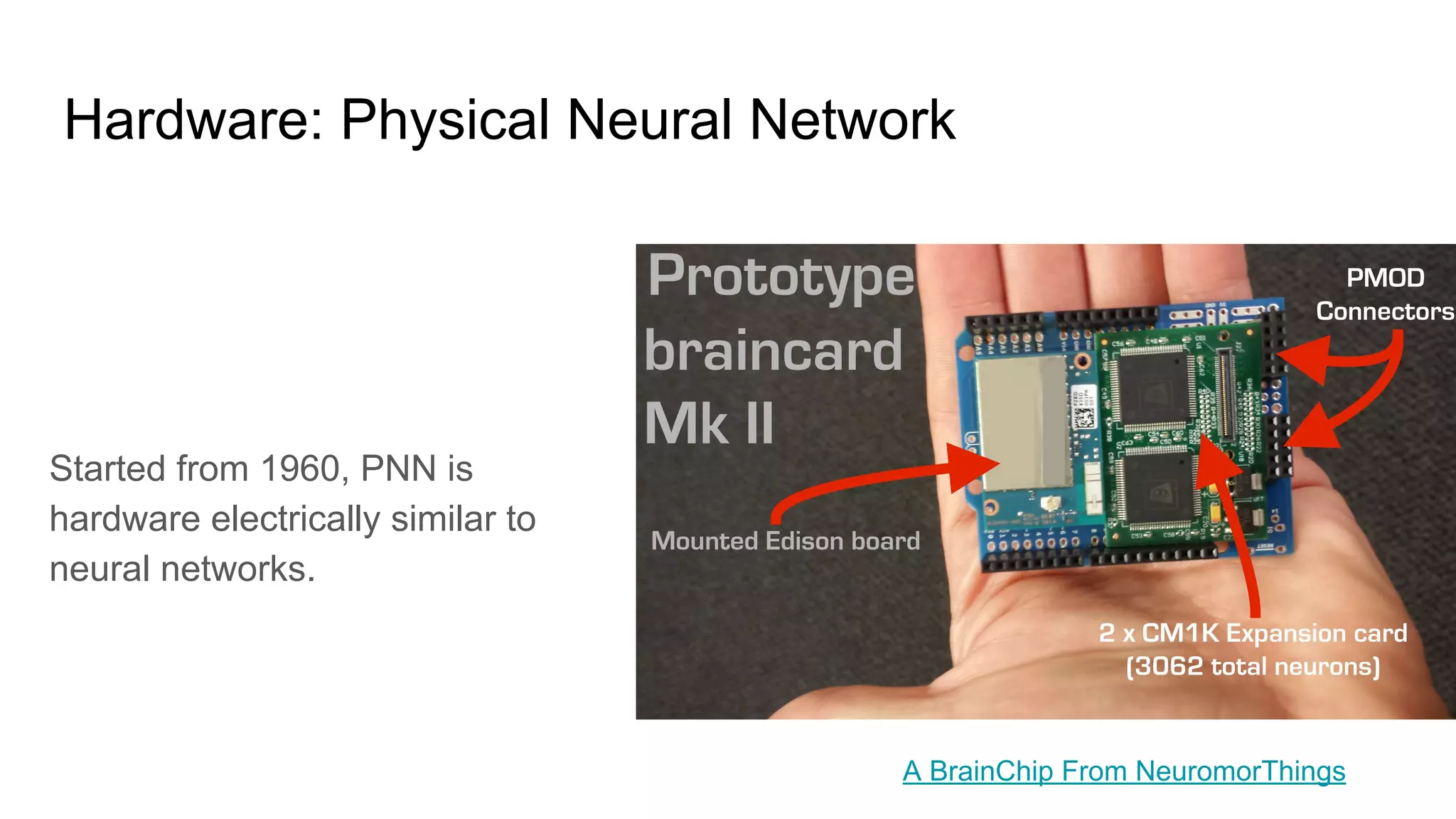

The document outlines the author's early experiences in machine learning, focusing on deep learning techniques such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs). It discusses the strengths and flaws of deep neural networks (DNNs), including their ability to learn high-level features and their vulnerability to adversarial examples. It also covers the modularity of DNNs and their applications beyond traditional machine learning tasks, and gives a brief overview of the hardware used for DNN computation.