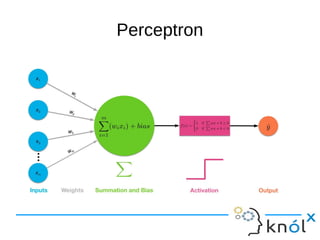

This document gives an overview of machine learning and artificial neural networks, covering perceptrons, activation functions, training and error, and backpropagation. It highlights the role these components play in building effective neural networks and discusses several machine learning techniques. It also addresses the limitations and potential biases of machine learning applications.