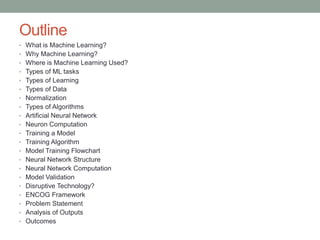

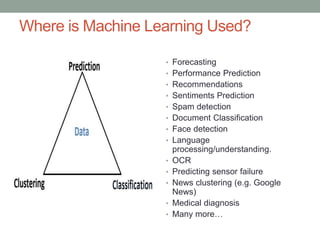

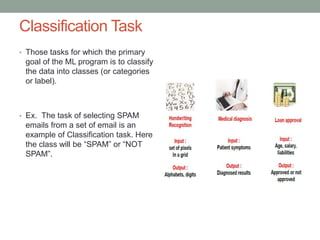

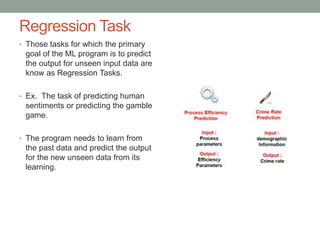

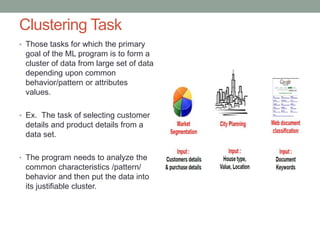

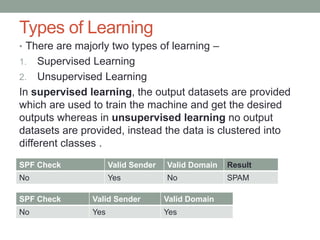

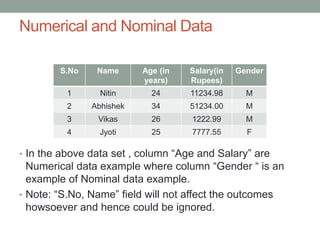

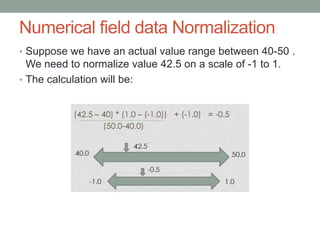

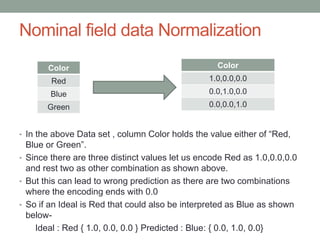

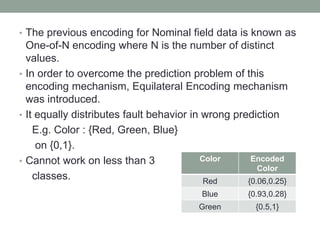

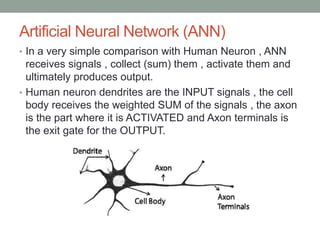

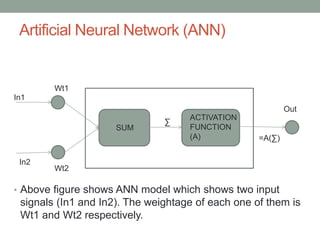

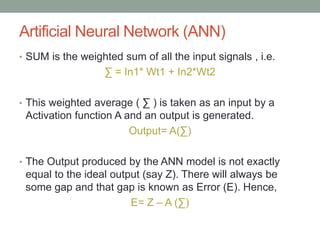

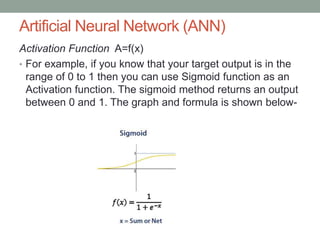

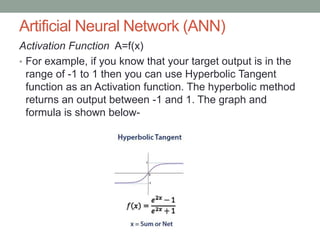

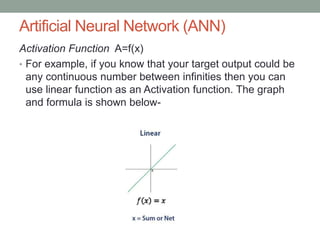

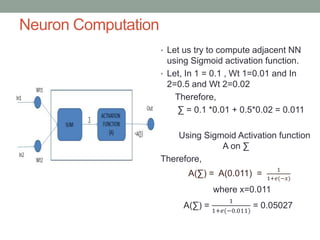

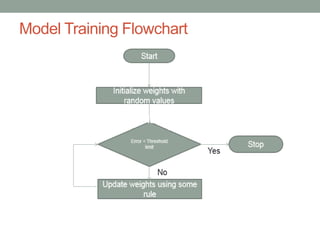

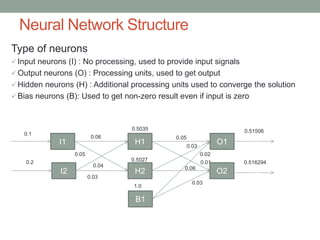

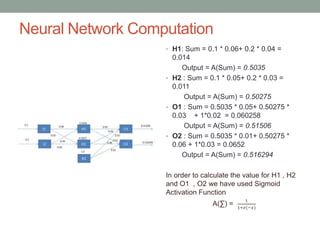

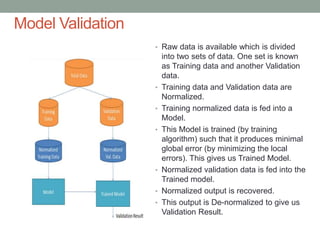

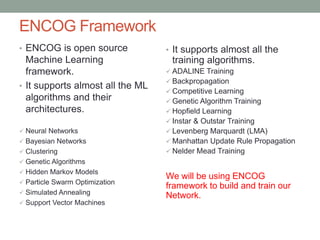

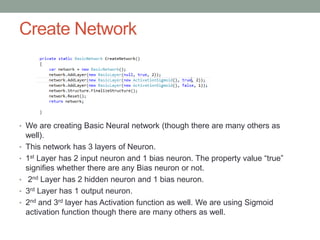

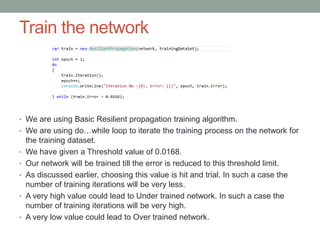

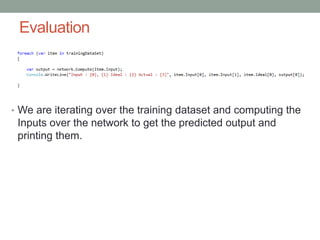

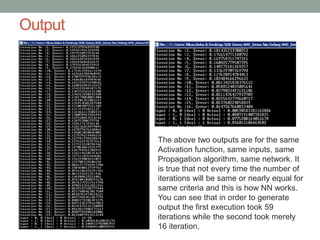

This document provides an overview of machine learning, including definitions of key concepts such as artificial neural networks and the different types of machine learning tasks and algorithms. It discusses the motivation for machine learning, common applications, data types and normalization, and neural network structure and computation. Training models and validating their outputs are also covered. Finally, the ENCOG machine learning framework is introduced as a tool that supports a variety of algorithms.
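Two of the topics above, data normalization and the computation performed by a single neuron, can be illustrated with a short generic sketch. It assumes min-max normalization to the range [0, 1] and a sigmoid activation. The function names, weights and bias are hypothetical and chosen only for illustration; this is not ENCOG's API.

```python
import math


def min_max_normalize(values, low=0.0, high=1.0):
    """Linearly rescale values into [low, high] (min-max normalization)."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # All values are identical: map each one to the midpoint of the target range.
        return [(low + high) / 2.0 for _ in values]
    scale = (high - low) / (v_max - v_min)
    return [low + (v - v_min) * scale for v in values]


def sigmoid(x):
    """Logistic activation function, squashing any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid activation."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)


# Hypothetical raw feature values, normalized before being fed to the neuron.
raw = [2.0, 4.0, 6.0, 10.0]
norm = min_max_normalize(raw)
print(norm)  # [0.0, 0.25, 0.5, 1.0]

# Hypothetical weights and bias for a single neuron with four inputs.
out = neuron_output(norm, [0.5, -0.25, 0.75, 0.1], bias=-0.2)
print(out)
```

Normalizing first keeps every input on the same scale, so that no single feature dominates the weighted sum simply because its raw values are larger.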