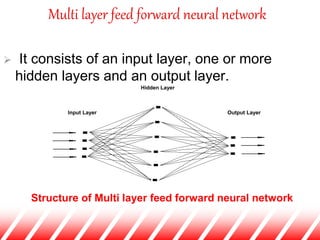

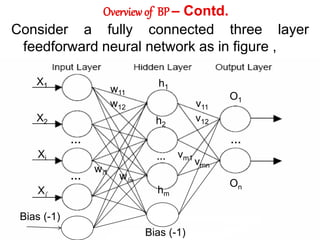

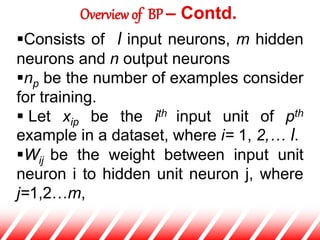

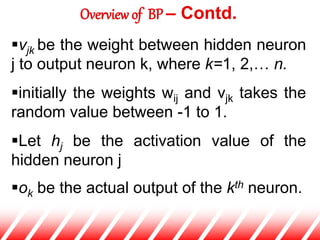

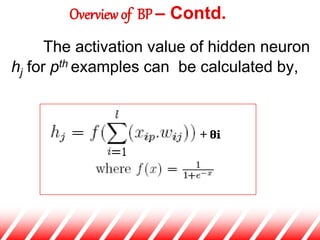

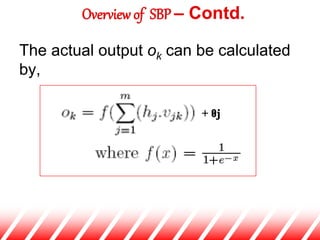

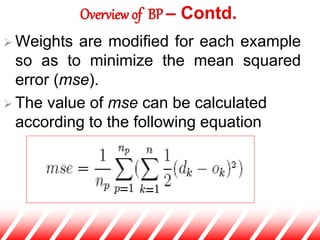

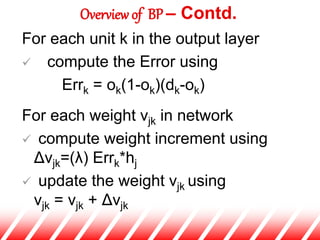

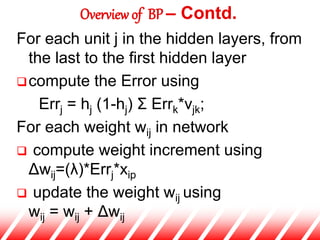

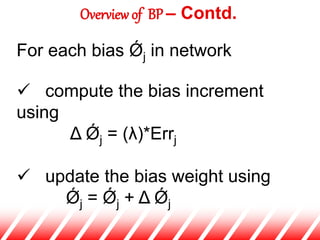

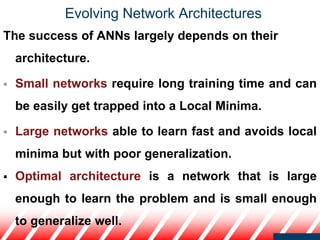

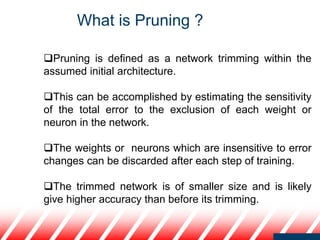

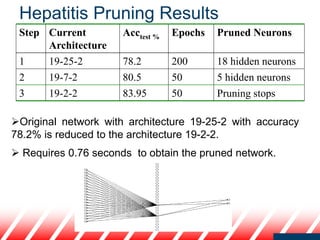

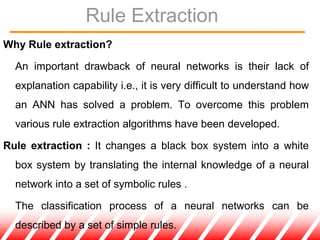

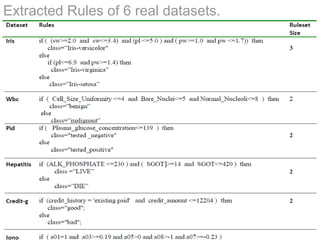

The document gives an overview of neural networks for data mining, focusing on their use for classification tasks. It describes the structure of a multi-layer feedforward neural network and the backpropagation algorithm used to train it. It also covers neural network pruning and rule extraction, two techniques that can improve a network's performance and interpretability.
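As a rough illustration of the feedforward structure and backpropagation training mentioned in the summary, the following is a minimal sketch rather than the method shown in the slides. It assumes a single hidden layer, sigmoid activations, a squared-error loss, and per-example gradient updates. The XOR toy dataset, the function names (`forward`, `train`, `mean_squared_error`), and the hyperparameters are all hypothetical choices made for illustration.

```python
import math
import random

# Toy classification data (XOR); chosen only for illustration.
XOR_DATA = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 0.0),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, w_out):
    """Feedforward pass: input -> hidden layer -> single output unit.

    Every weight row carries its bias as the last entry, so a constant
    1.0 is appended to the inputs of each layer.
    """
    hidden = [sigmoid(sum(w * v for w, v in zip(row, x + [1.0])))
              for row in w_hidden]
    output = sigmoid(sum(w * v for w, v in zip(w_out, hidden + [1.0])))
    return hidden, output


def mean_squared_error(data, w_hidden, w_out):
    return sum((t - forward(x, w_hidden, w_out)[1]) ** 2
               for x, t in data) / len(data)


def train(data, n_hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train with backpropagation, updating weights after each example."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)]
                for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]

    for _ in range(epochs):
        for x, t in data:
            hidden, y = forward(x, w_hidden, w_out)

            # Error signal at the output unit for loss 0.5 * (y - t)^2.
            delta_out = (y - t) * y * (1 - y)

            # Propagate the error signal back to each hidden unit,
            # using the output weights before they are updated.
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                            for j in range(n_hidden)]

            # Gradient-descent updates for both layers.
            for j, h in enumerate(hidden + [1.0]):
                w_out[j] -= lr * delta_out * h
            for j in range(n_hidden):
                for i, v in enumerate(x + [1.0]):
                    w_hidden[j][i] -= lr * delta_hidden[j] * v

    return w_hidden, w_out


if __name__ == "__main__":
    w_hidden, w_out = train(XOR_DATA)
    for x, t in XOR_DATA:
        print(x, t, round(forward(x, w_hidden, w_out)[1], 3))
```

Calling `train(XOR_DATA, epochs=0)` returns the untrained random weights for the same seed, which gives a baseline for comparing the error before and after training. Pruning and rule extraction would operate on the trained `w_hidden` and `w_out`; they are not sketched here because the summary does not say which variants the slides use.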