This document discusses different methods for testing and evaluating user interfaces, including expert reviews, heuristic evaluations, cognitive walkthroughs, usability testing, and surveys. It describes the following key points:

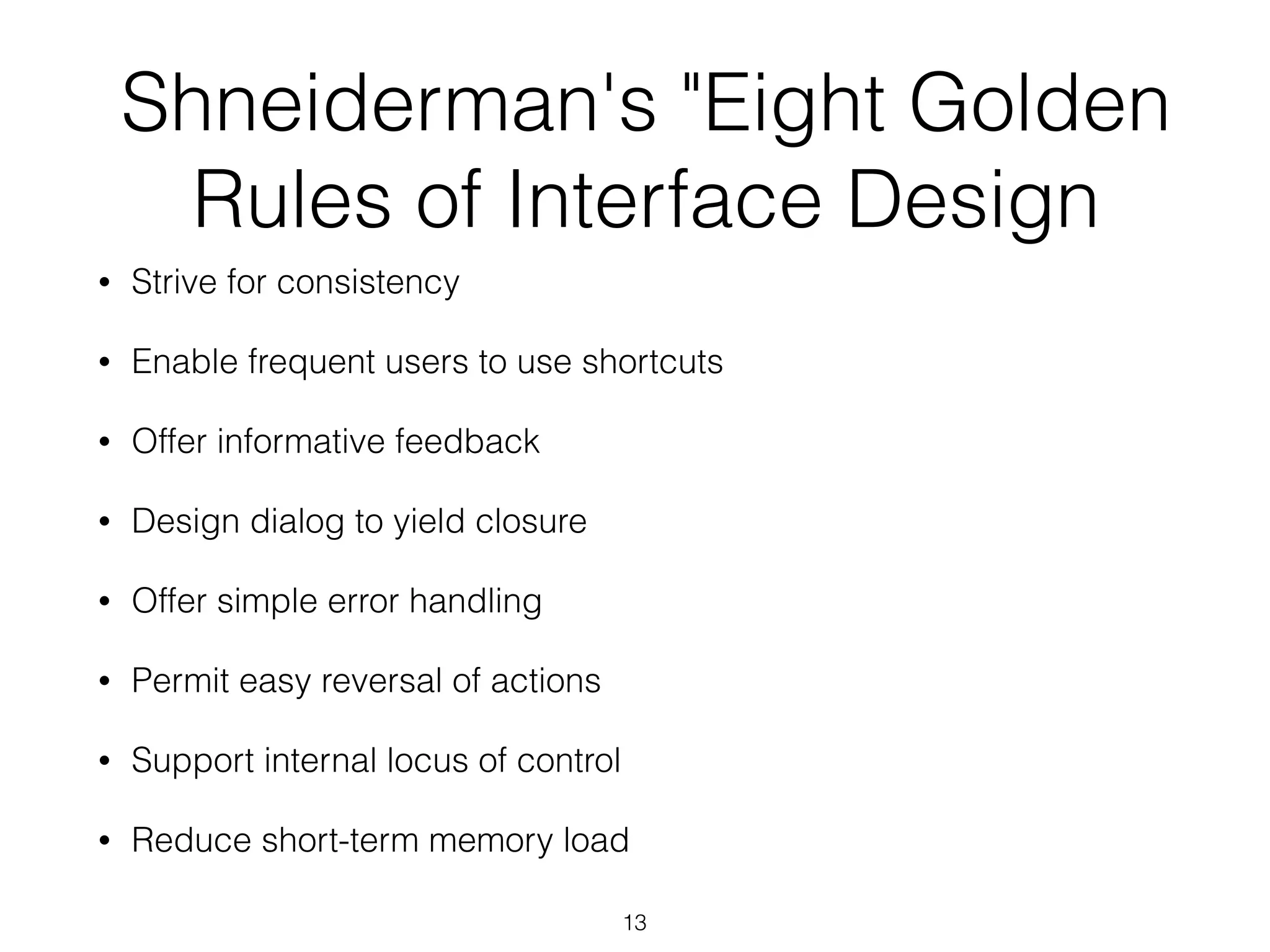

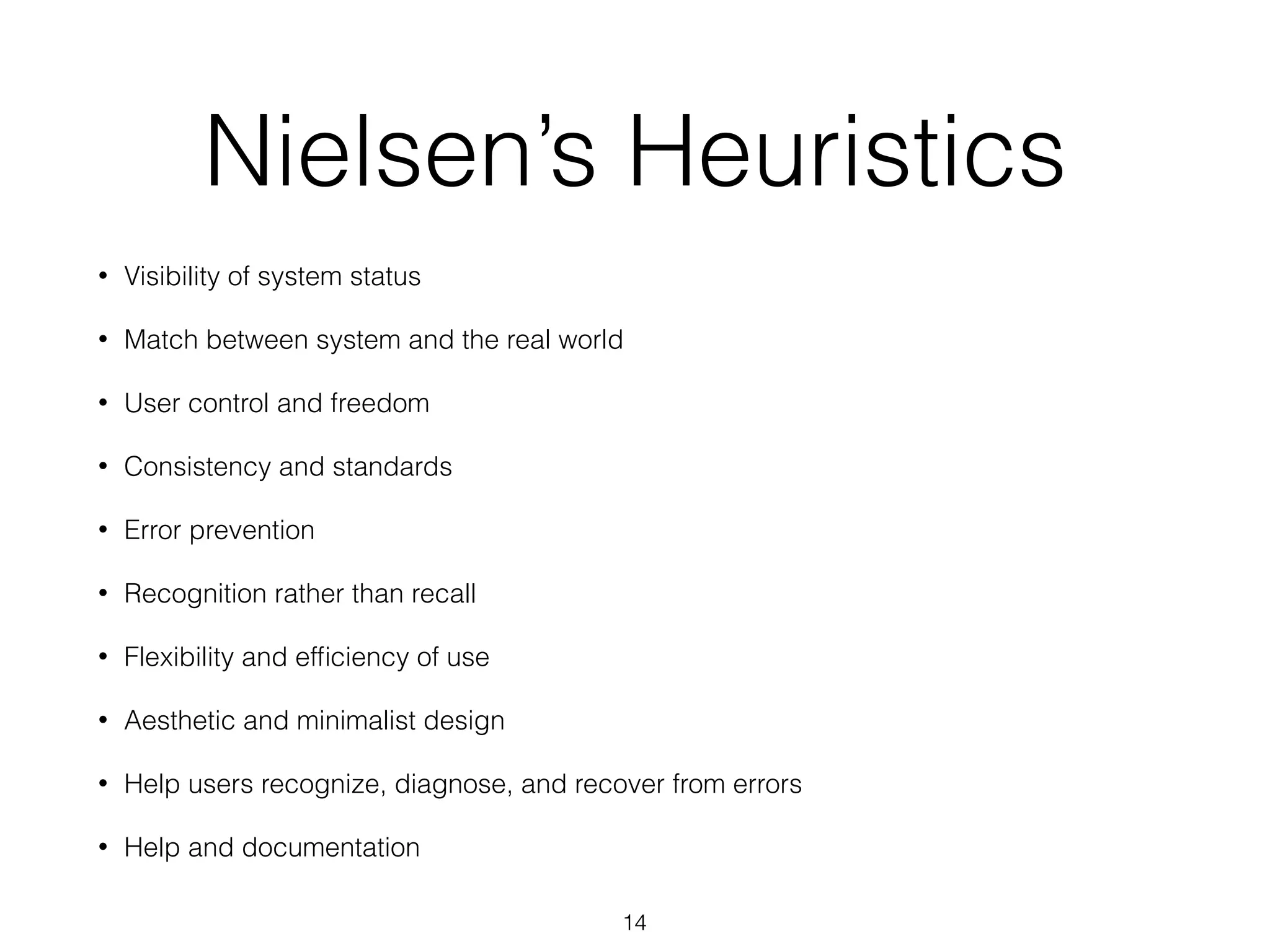

- Expert reviews involve having experienced evaluators inspect an interface and report problems. A heuristic evaluation is one form of expert review: the evaluators check the interface against a set of established usability principles, or heuristics.

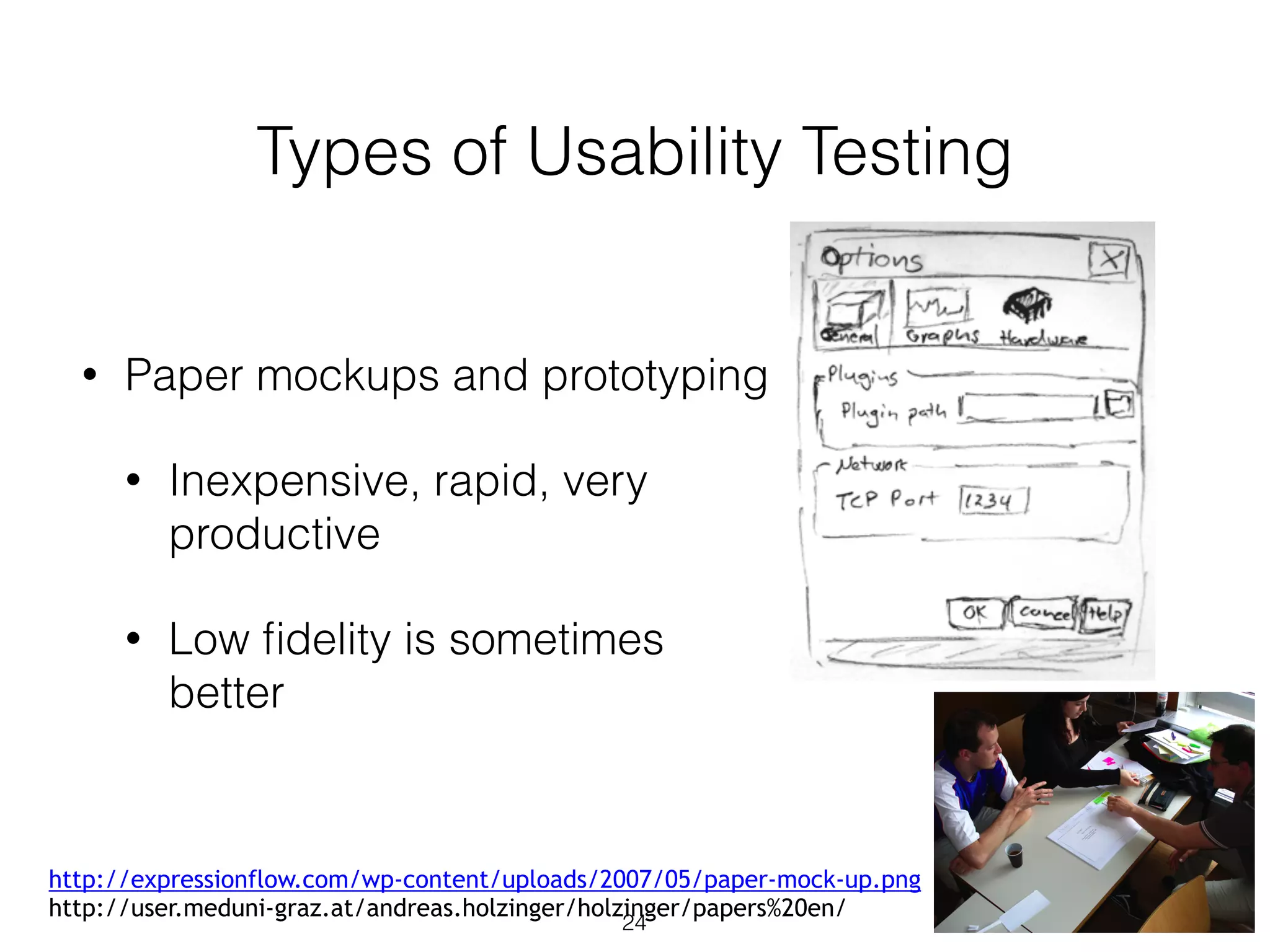

- Usability testing involves observing real users interacting with an interface to identify usability issues. Different types of usability testing are discussed, including discount usability testing and competitive usability testing.
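Discount usability testing is often justified with Nielsen and Landauer's problem-discovery model. That model is not described in this document, so it is brought in here as an outside assumption. It assumes that each test user independently reveals any given problem with probability `detect_rate`. Under that assumption, `n` users are expected to uncover a share of `1 - (1 - detect_rate) ** n` of all problems. The sketch below uses 0.31 as the default rate, which is the average Nielsen reported. The function name `proportion_found` is hypothetical.

```python
def proportion_found(n_users: int, detect_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n_users participants.

    Assumes (hypothetically, per Nielsen & Landauer's model) that each
    participant independently reveals any given problem with probability
    detect_rate; 0.31 is the average rate Nielsen reported.
    """
    if not 0.0 <= detect_rate <= 1.0:
        raise ValueError("detect_rate must be between 0 and 1")
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    # Probability a problem is missed by all n_users is (1 - rate) ** n_users.
    return 1.0 - (1.0 - detect_rate) ** n_users


if __name__ == "__main__":
    # Show diminishing returns as more users are added.
    for n in (1, 3, 5, 10, 15):
        print(f"{n:2d} users -> {proportion_found(n):.0%} of problems")
```

Under these assumptions, five users already find roughly 84% of the problems, and each additional user adds less. This diminishing return is the usual argument for running several small test rounds instead of one large one.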

- Surveys can be used to collect feedback from users on their experiences, preferences, and satisfaction. Common survey methods include questionnaires with Likert scales and bipolar