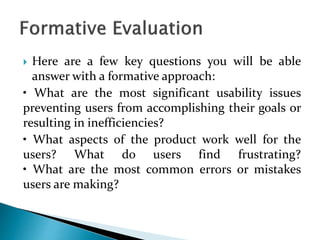

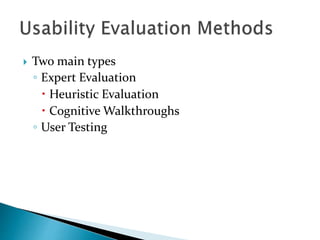

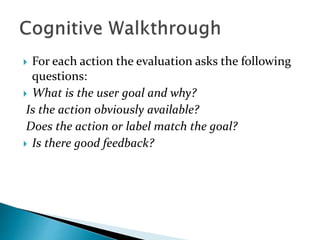

The document discusses methods for evaluating user interface designs, including expert evaluation techniques such as heuristic evaluation and cognitive walkthroughs. It also covers user testing, which is considered more reliable than expert evaluation alone. Formative evaluation tests prototypes during development to identify issues, while summative evaluation assesses the final product. Both qualitative and quantitative methods are important for identifying usability problems from the user's perspective.