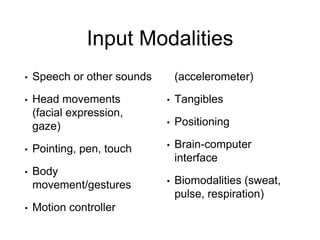

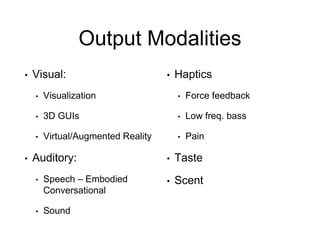

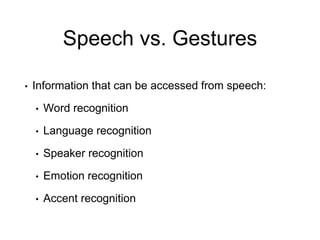

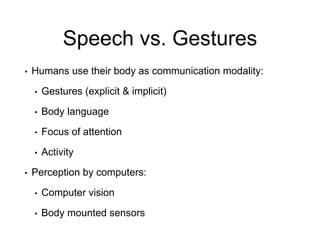

The document provides an overview of a course on intelligent interfaces and multimodal interaction design. It discusses key topics like paper prototyping tasks, characteristics of multimodal interfaces, input and output modalities, challenges in multimodal design, and guidelines for effective multimodal interface design. The goals are to describe multimodal interfaces, compare modalities depending on context, list best practices, and understand multimodal interactions. Examples of speech, gesture, and haptic interactions are provided.

![Multimodal Interactions](https://image.slidesharecdn.com/ics3211lecture07-161104111827/85/ICS3211-lecture-07-7-320.jpg)

• Modality is the mode or path of communication according to human senses, using different types of information and different interface devices.

• Some definitions:

A multimodal HCI system is simply one that responds to inputs in more than one modality or communication channel (e.g. speech, gesture, writing and others). [Jaimes/Sebe]

Multimodal interfaces process two or more combined user input modes (such as speech, pen, touch, manual gesture, gaze, and head and body movements) in a coordinated manner with multimedia system output. [Oviatt]
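To make "two or more combined user input modes in a coordinated manner" concrete, here is a minimal sketch of one possible coordination strategy: pairing each spoken command with the pointing gesture closest to it in time. The `InputEvent` type, the `fuse` function, the 1.5-second window, and the sample events are all assumptions made for illustration; they do not come from the lecture or from any particular toolkit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InputEvent:
    modality: str     # "speech" or "gesture" (hypothetical labels)
    payload: str      # recognized utterance, or id of the pointed-at target
    timestamp: float  # seconds since the session started


def fuse(events, window=1.5):
    """Pair each speech event with the nearest gesture within `window` seconds.

    Returns a list of (utterance, target) tuples; target is None when no
    gesture falls inside the window.
    """
    speech = [e for e in events if e.modality == "speech"]
    gestures = [e for e in events if e.modality == "gesture"]
    commands = []
    for s in speech:
        nearby = [g for g in gestures if abs(g.timestamp - s.timestamp) <= window]
        if nearby:
            closest = min(nearby, key=lambda g: abs(g.timestamp - s.timestamp))
            commands.append((s.payload, closest.payload))
        else:
            commands.append((s.payload, None))
    return commands


# Illustrative input: one command accompanied by pointing, one without.
events = [
    InputEvent("speech", "delete that", 10.2),
    InputEvent("gesture", "file_icon_3", 10.6),
    InputEvent("speech", "open menu", 20.0),
]
result = fuse(events)
print(result)
```

In this sketch the spoken "delete that" is resolved against the gesture made 0.4 seconds later, while "open menu" stands alone as a speech-only command. A real system would also need to handle recognition uncertainty and conflicting inputs, which this example leaves out.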

![Multimodal Interactions](https://image.slidesharecdn.com/ics3211lecture07-161104111827/85/ICS3211-lecture-07-8-320.jpg)

• Use this padlet [https://padlet.com/vanessa_camille/multimodal_ICS3211] to list how the two modalities, speech and gesture, differ.