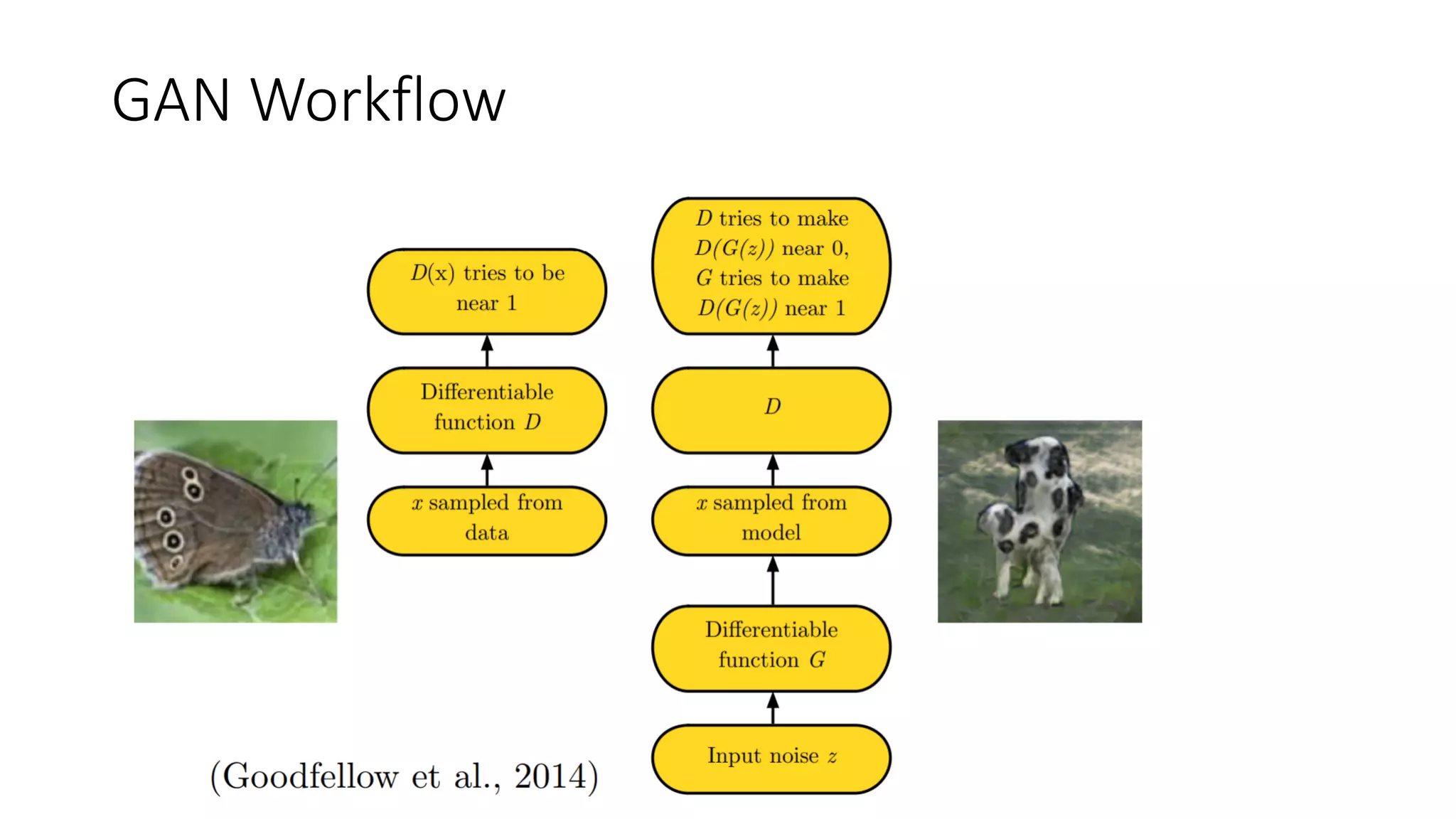

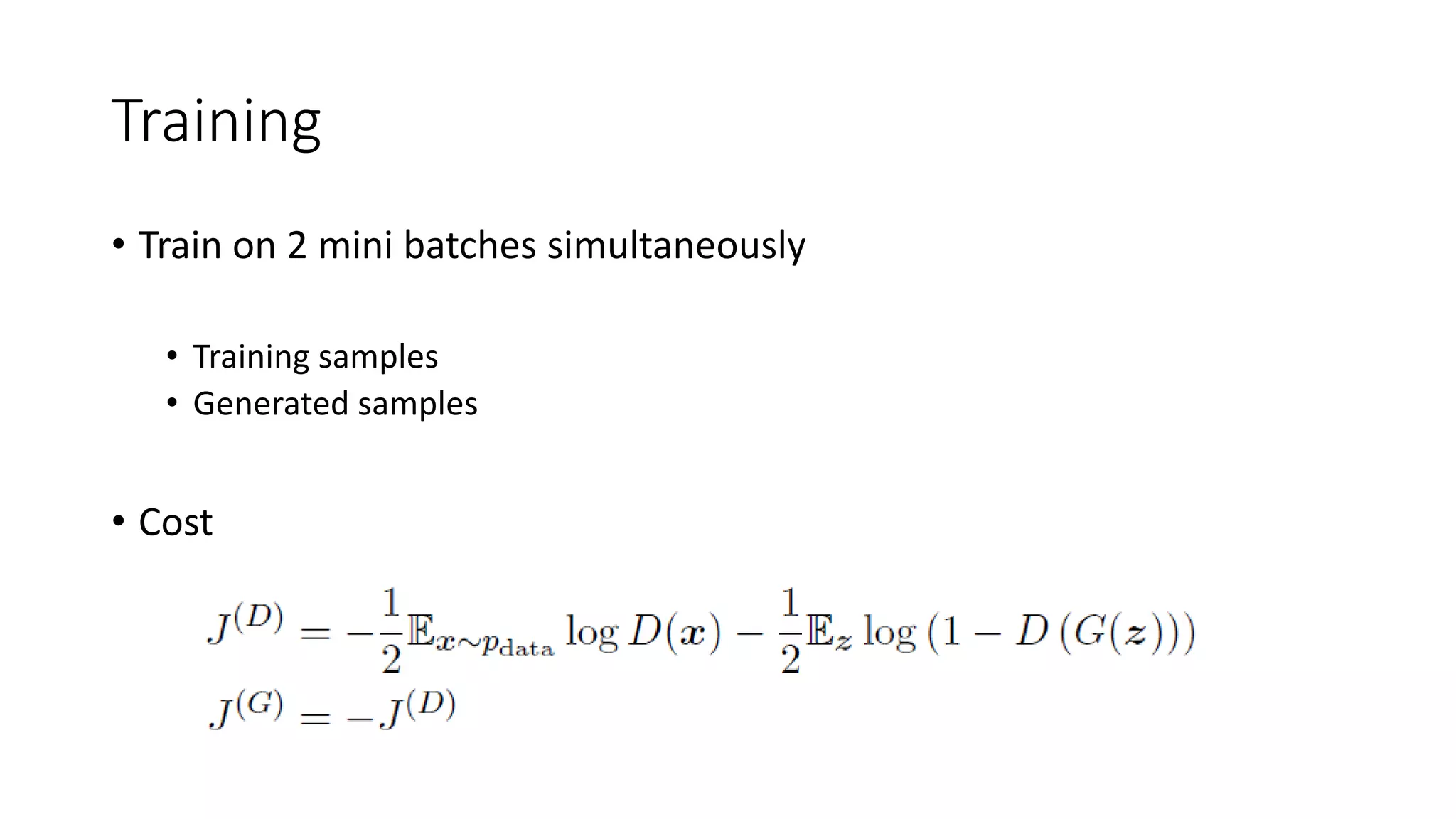

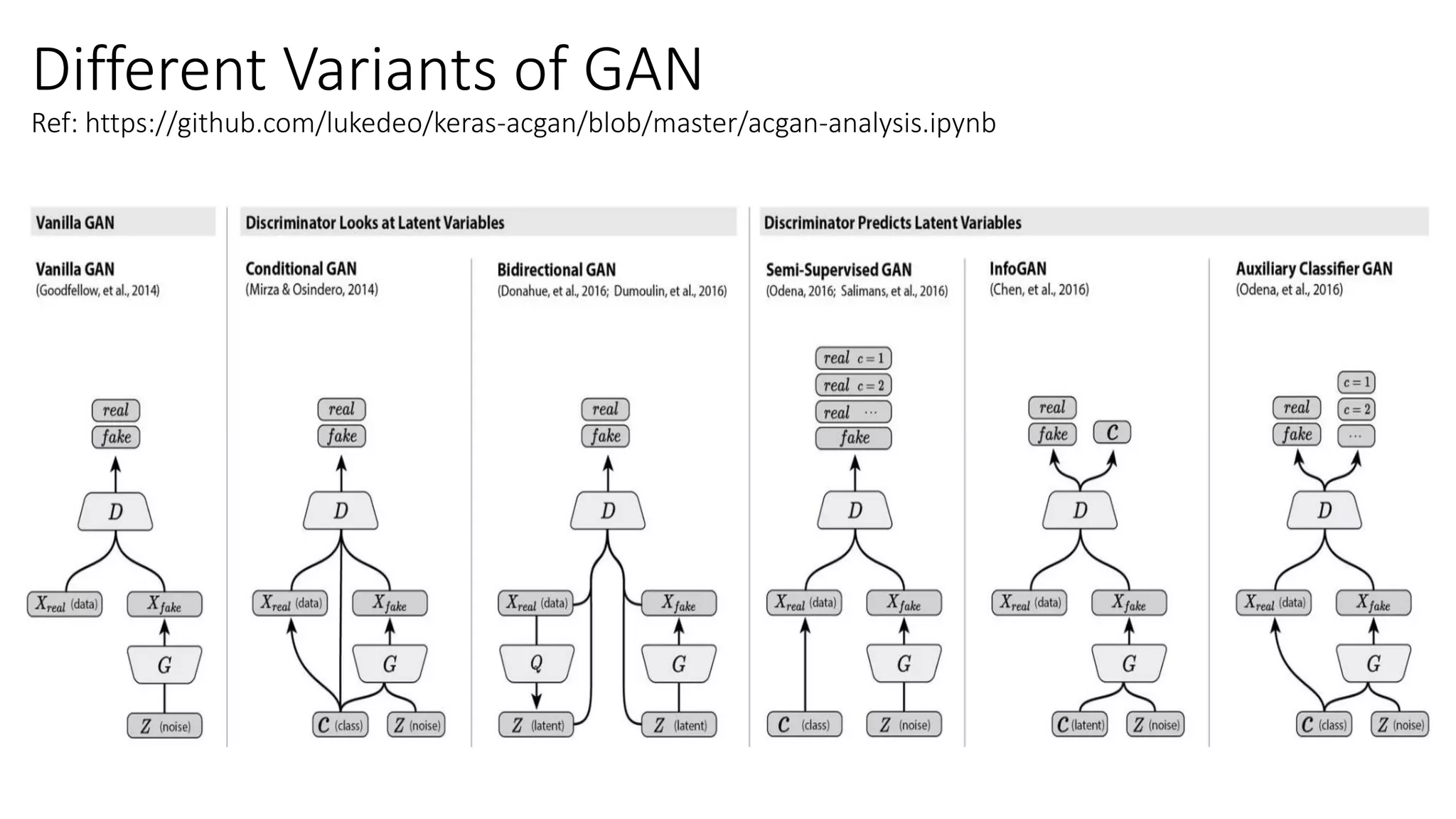

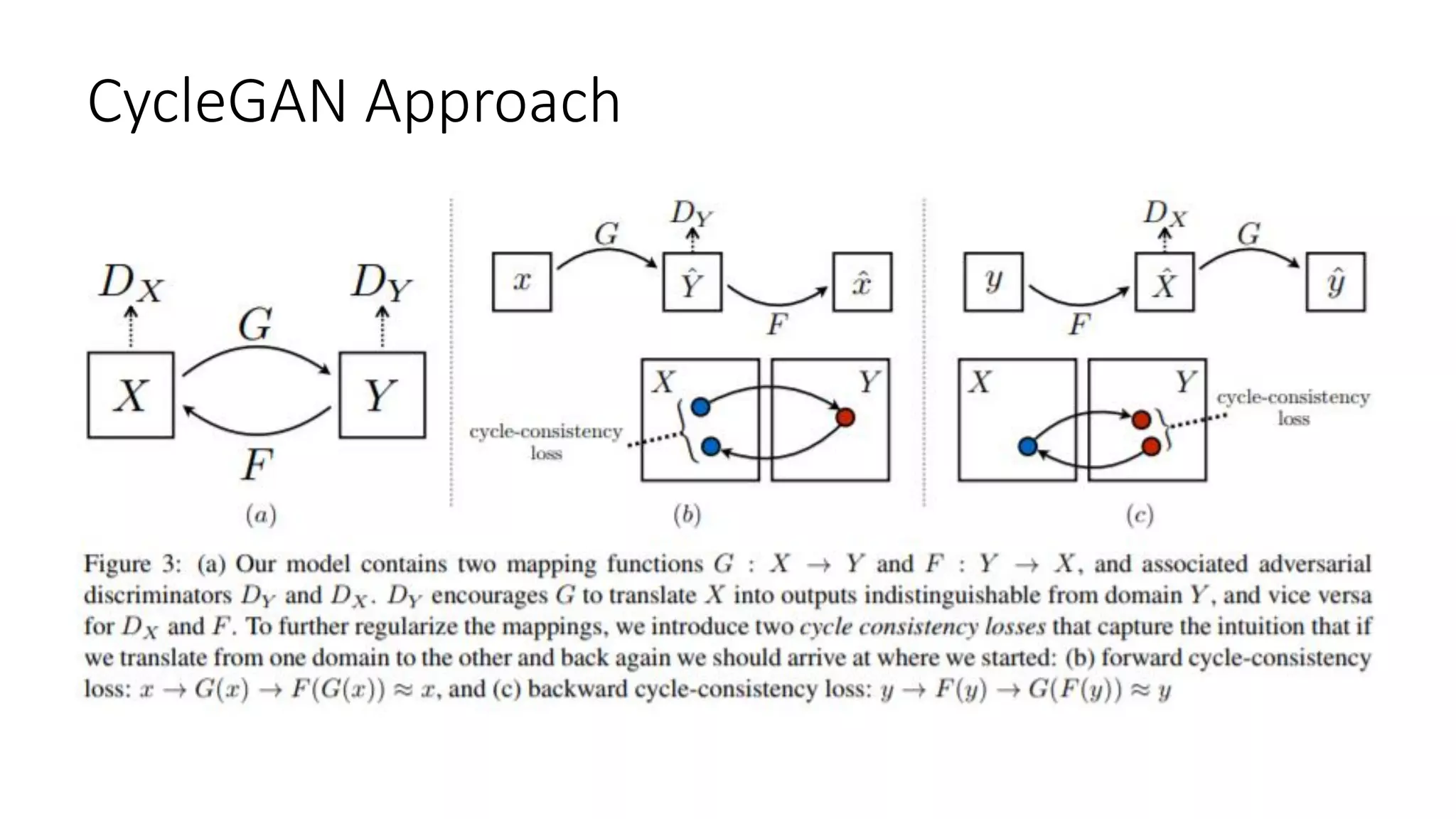

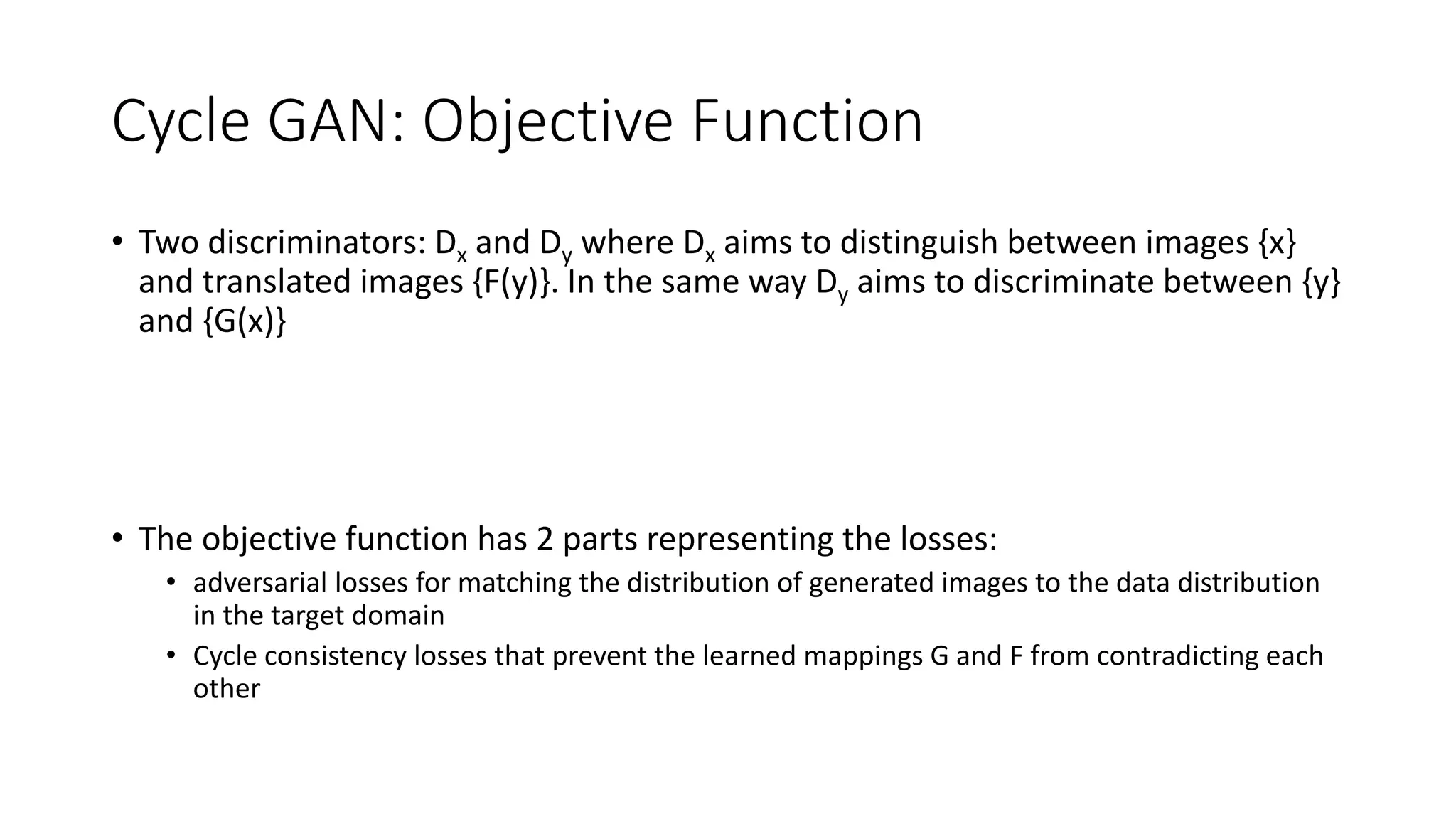

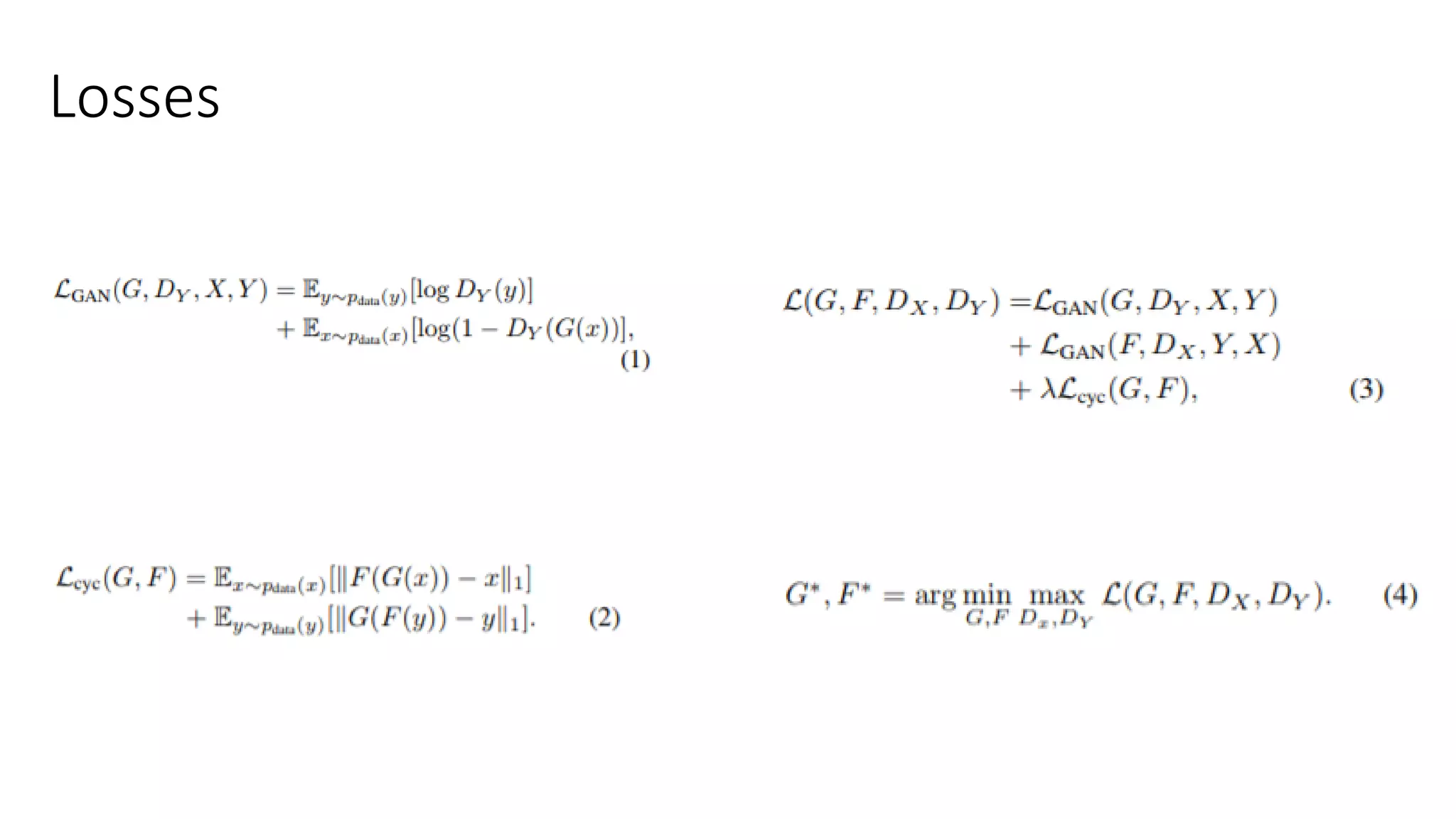

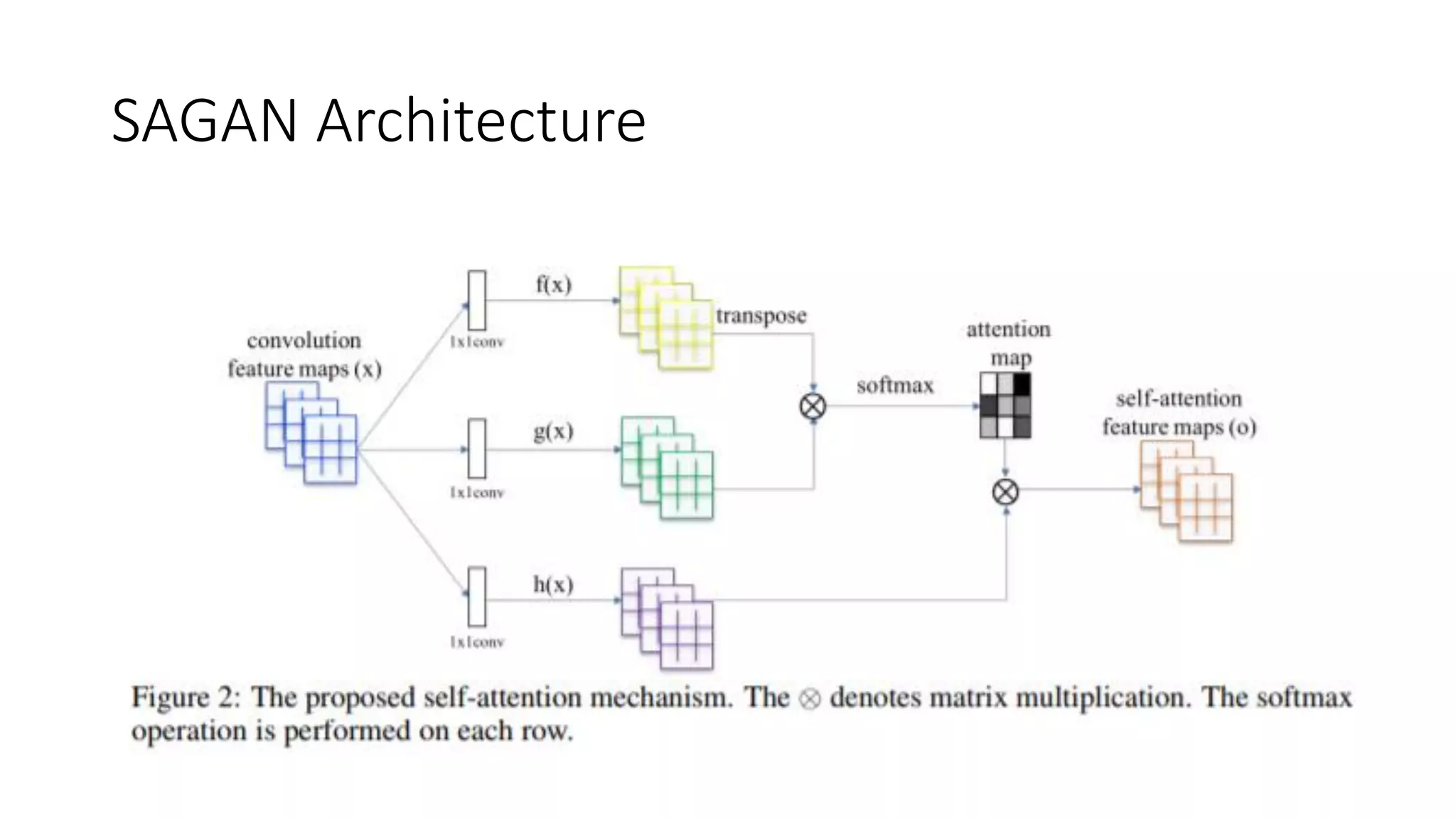

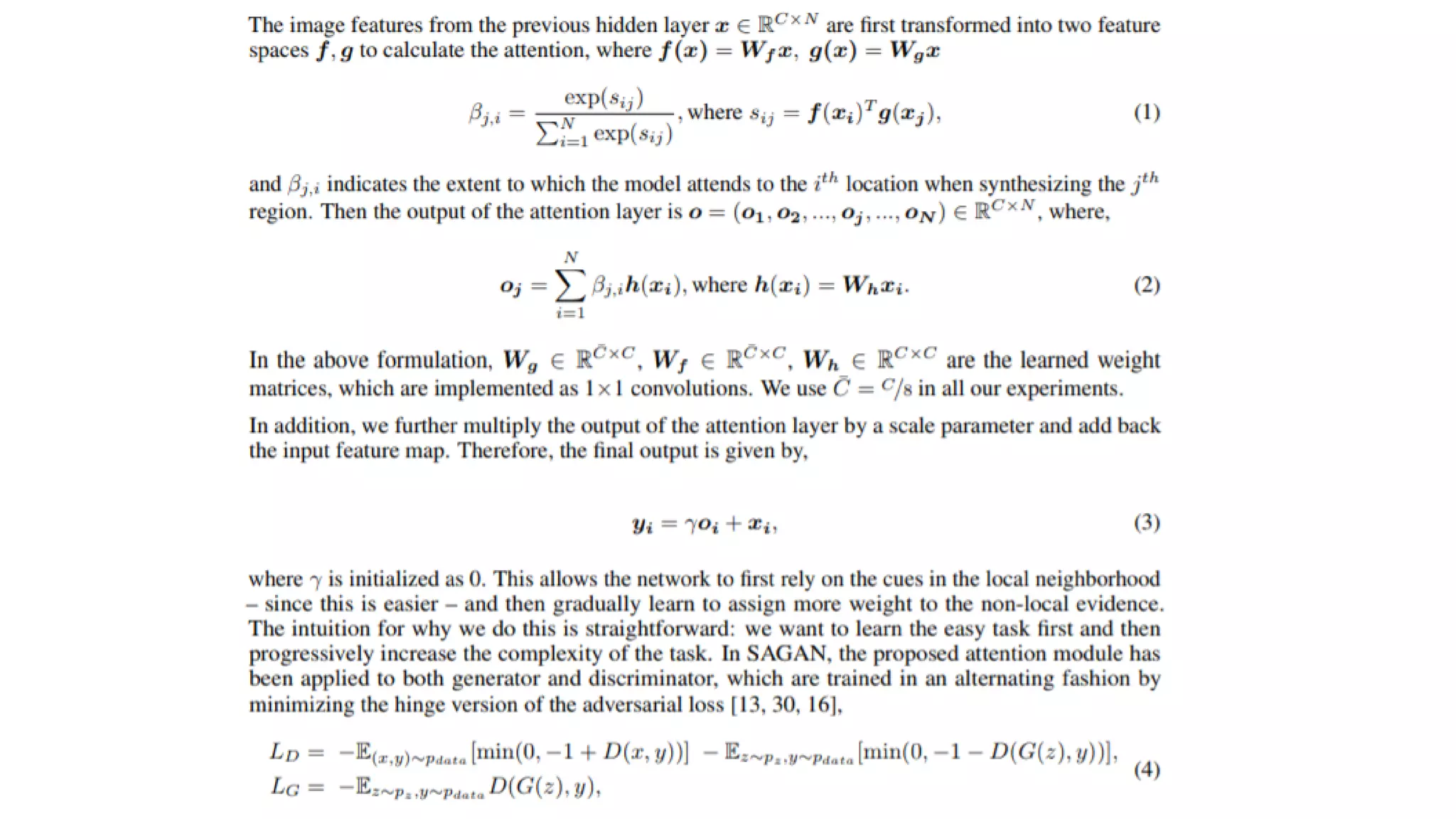

The document discusses Generative Adversarial Networks (GANs) and their ability to learn and generate realistic data, such as images and audio. It covers GAN architectures including CycleGAN, which enables unpaired image-to-image translation, and Self-Attention GANs (SAGAN), which are designed to capture both short- and long-range dependencies. It also covers applications of GANs, the challenges they pose, the metrics used to evaluate them, and directions for future work.
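
As a rough illustration of how unpaired translation can be trained, CycleGAN is commonly described as adding a cycle-consistency term: translating a sample to the other domain and back should approximately recover the original. The sketch below is a toy, pure-Python version of that term only. The names `l1_distance` and `cycle_consistency_loss`, and the stand-in mappings `G` and `F`, are assumptions made for illustration and do not come from the document; real generators would be neural networks trained together with adversarial losses.

```python
from typing import Callable, List

Vector = List[float]
Mapping = Callable[[Vector], Vector]


def l1_distance(a: Vector, b: Vector) -> float:
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(x: Vector, y: Vector, G: Mapping, F: Mapping) -> float:
    """Toy cycle-consistency term.

    G maps domain X to domain Y; F maps domain Y back to domain X.
    The loss is small when F(G(x)) is close to x and G(F(y)) is close to y.
    """
    forward_cycle = l1_distance(F(G(x)), x)   # x -> G(x) -> F(G(x))
    backward_cycle = l1_distance(G(F(y)), y)  # y -> F(y) -> G(F(y))
    return forward_cycle + backward_cycle


# Hypothetical stand-ins for the two generators (not real networks).
def G(v: Vector) -> Vector:
    return [2.0 * t for t in v]


def F_inverse(v: Vector) -> Vector:
    return [0.5 * t for t in v]   # exactly undoes G


def F_identity(v: Vector) -> Vector:
    return list(v)                # does not undo G


x = [1.0, 2.0, 3.0]  # toy sample from domain X
y = [2.0, 4.0, 6.0]  # toy sample from domain Y

loss_consistent = cycle_consistency_loss(x, y, G, F_inverse)
loss_inconsistent = cycle_consistency_loss(x, y, G, F_identity)
```

With these toy mappings, the pair that inverts each other yields a zero cycle loss, while the mismatched pair is penalized. In practice this term is added to the adversarial losses of both generators with a weighting factor.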