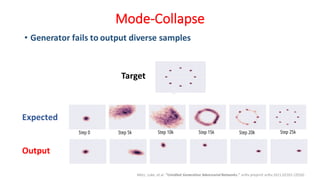

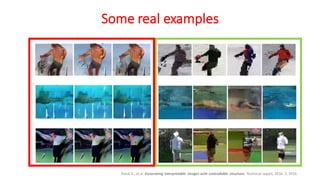

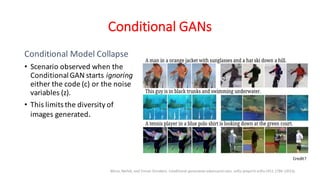

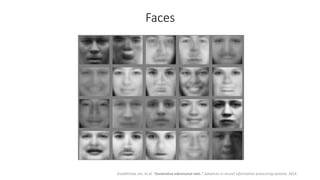

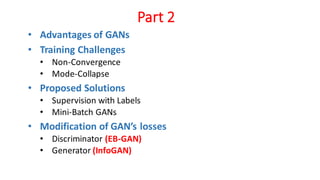

Generative Adversarial Networks (GANs) are a class of deep learning models trained through an adversarial process. A GAN consists of two neural networks, a generator and a discriminator, that compete against each other. The generator maps points sampled from a latent space to synthetic samples intended to fool the discriminator, while the discriminator tries to distinguish real samples from generated ones. GANs can learn complex high-dimensional distributions and have been applied to image generation, video generation, and other domains. However, training GANs is challenging because of issues such as non-convergence and mode collapse. Techniques such as minibatch discrimination, conditional GANs, and unrolled GANs have been explored to address these training issues.
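The adversarial game described above is usually written as a minimax objective. For reference, this is the standard formulation from the original GAN paper rather than something stated in the summary itself. The symbols are notation introduced here: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the data distribution, and $p_z$ the latent prior.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator maximizes $V$ by assigning high probability to real samples and low probability to generated ones, while the generator minimizes it by making $D(G(z))$ large.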

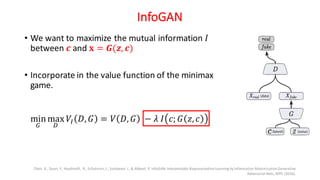

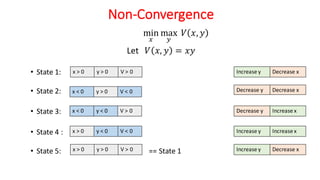

![Non-Convergence](https://image.slidesharecdn.com/gan-230619073553-180c684c/85/gan-pdf-28-320.jpg)

$$\min_x \max_y \; xy$$

- $\dfrac{\partial x}{\partial t} = -y, \qquad \dfrac{\partial y}{\partial t} = x$
- $\dfrac{\partial^2 y}{\partial t^2} = \dfrac{\partial x}{\partial t} = -y$
- The differential equation's solution has sinusoidal terms
- Even with a small learning rate, it will not converge

Goodfellow, Ian. "NIPS 2016 Tutorial: Generative Adversarial Networks." arXiv preprint arXiv:1701.00160 (2016).
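The non-convergence claim on this slide can be checked numerically. The following is a minimal sketch, not taken from the tutorial, that applies simultaneous gradient descent on $x$ and gradient ascent on $y$ to $V(x, y) = xy$. The function name `simulate`, the starting point, the step count, and the learning rate are all illustrative assumptions. The slide describes the continuous-time dynamics, whose solutions orbit the equilibrium at the origin; the discrete updates used here do slightly worse and drift slowly outward.

```python
import math


def simulate(lr, steps, x0=1.0, y0=1.0):
    """Simultaneous gradient descent (x) / ascent (y) on V(x, y) = x * y.

    Returns the distance from the equilibrium (0, 0) after every step.
    All defaults are illustrative assumptions, not values from the slide.
    """
    x, y = x0, y0
    radii = [math.hypot(x, y)]
    for _ in range(steps):
        grad_x = y  # dV/dx
        grad_y = x  # dV/dy
        # Both players update from the same old (x, y):
        # x descends on V, y ascends on V.
        x, y = x - lr * grad_x, y + lr * grad_y
        radii.append(math.hypot(x, y))
    return radii


radii = simulate(lr=0.01, steps=1000)
print(f"distance from equilibrium at start: {radii[0]:.4f}")
print(f"distance from equilibrium at end:   {radii[-1]:.4f}")
```

Each update multiplies the squared distance from the origin by exactly $1 + \text{lr}^2$, so shrinking the learning rate slows the outward drift but never turns it into convergence.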