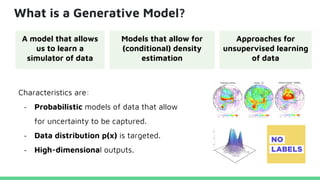

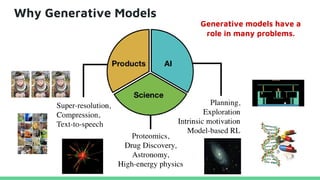

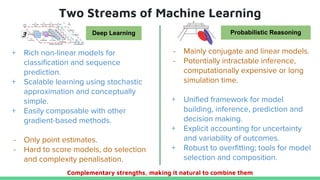

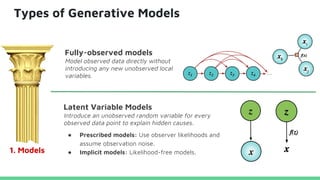

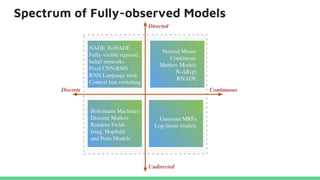

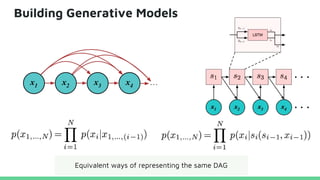

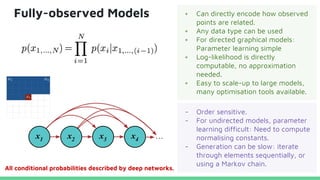

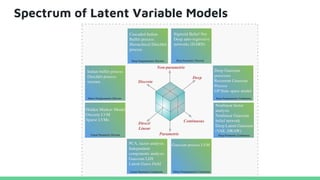

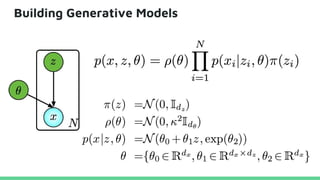

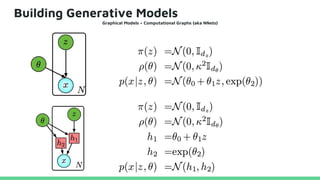

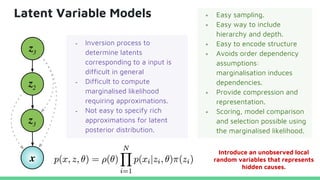

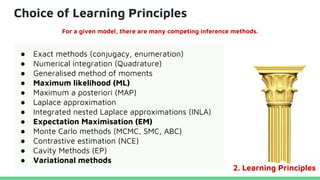

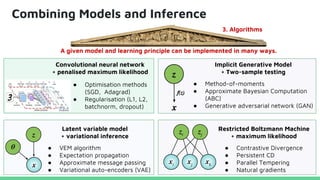

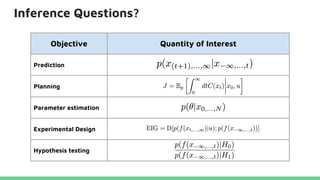

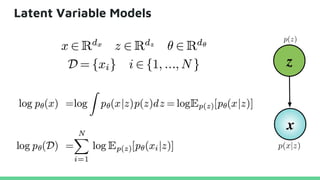

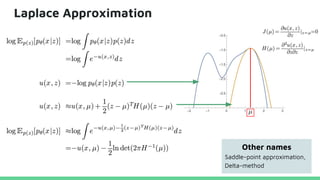

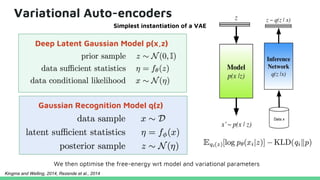

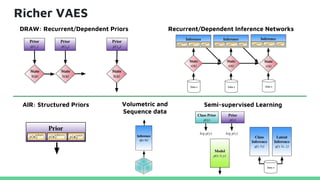

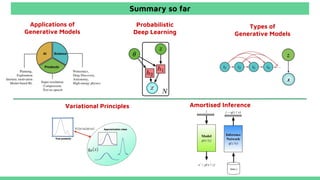

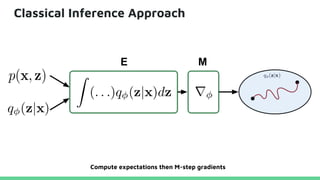

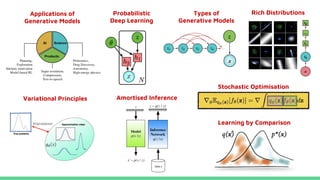

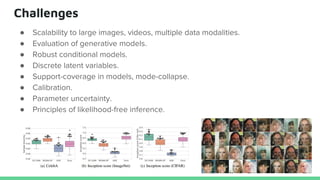

This tutorial gives an overview of recent advances in deep generative models. It covers three families of generative models: Markov models, latent variable models, and implicit models. The aim is to give attendees a solid understanding of recent developments in generative modeling and of how these models can be applied to high-dimensional data. Several challenges and open questions in the field are also discussed. The tutorial is intended for the 2017 conference of the International Society for Bayesian Analysis.