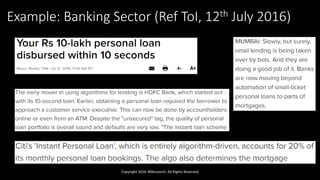

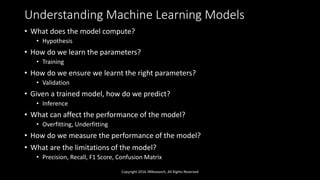

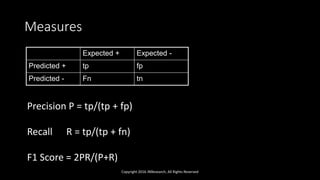

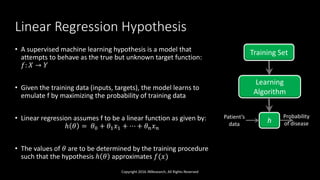

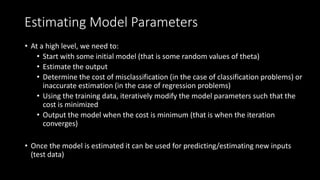

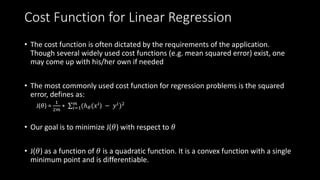

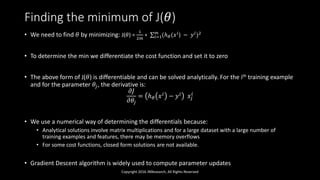

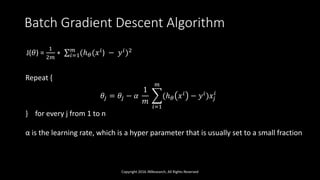

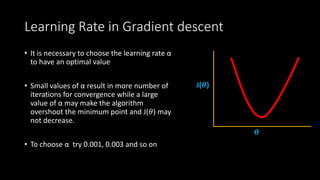

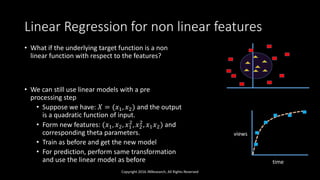

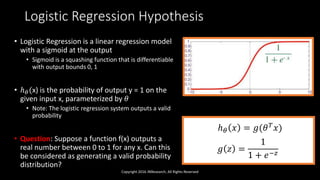

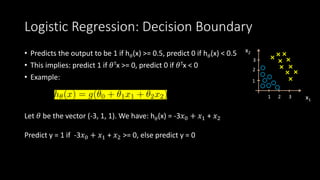

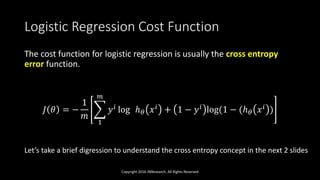

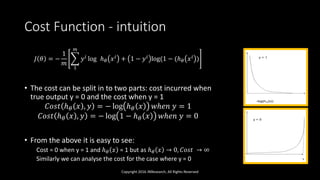

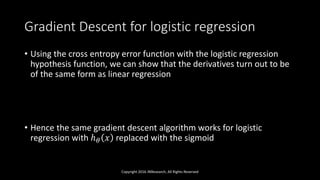

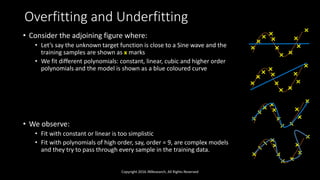

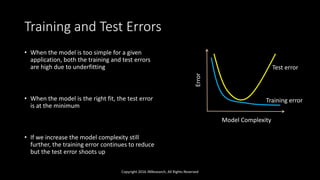

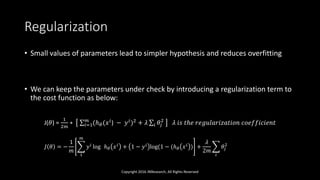

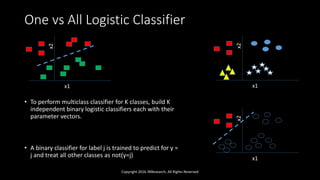

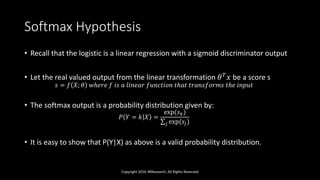

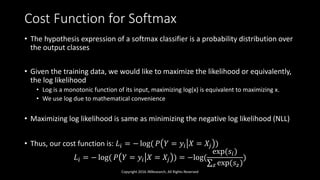

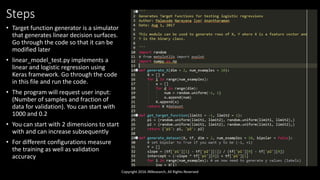

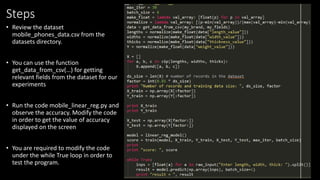

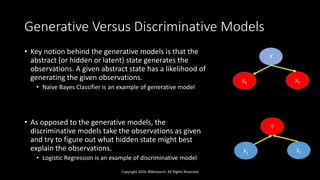

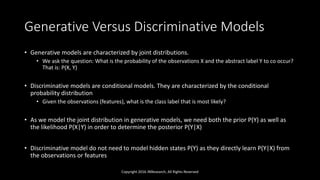

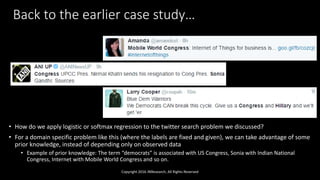

This document covers linear models in machine learning, focusing on linear and logistic regression, their applications, and frameworks for training and evaluating models. It explains overfitting, underfitting, and regularization, along with optimization methods such as gradient descent, and it addresses classification problems. It also introduces multiclass classification methods and the softmax classifier, describing how they work and where they are applied in practice.
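
As a rough illustration of the softmax classifier mentioned in the summary, the following is a minimal sketch in plain Python, not the implementation used in the slides. The function names `softmax` and `predict`, and the one-weight-vector-per-class layout, are assumptions made for this example. It shows how a linear model's class scores could be turned into probabilities and a predicted label.

```python
import math


def softmax(scores):
    """Map a list of real-valued class scores to probabilities that sum to 1."""
    # Subtracting the largest score keeps exp() from overflowing;
    # it does not change the resulting probabilities.
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, biases, x):
    """Return (predicted class index, class probabilities) for feature vector x.

    weights: one weight vector per class (hypothetical layout for this sketch).
    biases:  one bias term per class.
    """
    # Linear score for each class: dot(w, x) + b.
    scores = [
        sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
        for w, b in zip(weights, biases)
    ]
    probs = softmax(scores)
    return probs.index(max(probs)), probs
```

With two classes, this reduces to the same decision rule as logistic regression: the class with the larger linear score receives the larger probability.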