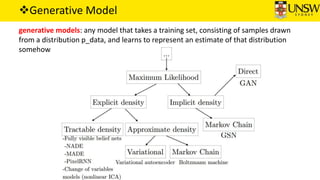

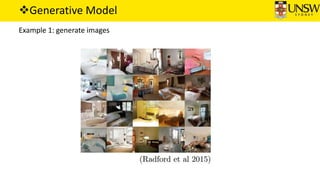

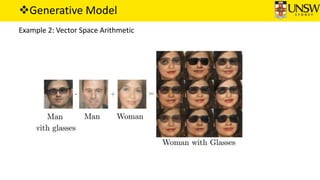

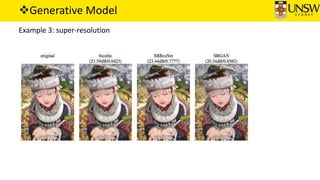

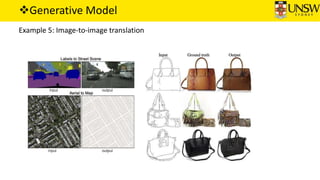

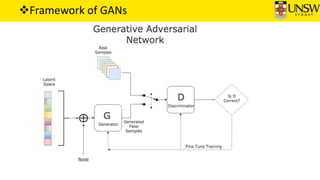

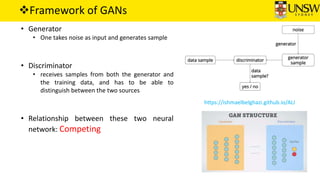

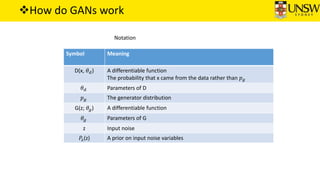

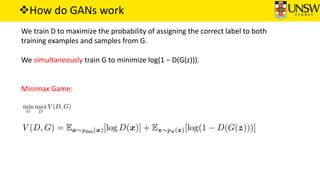

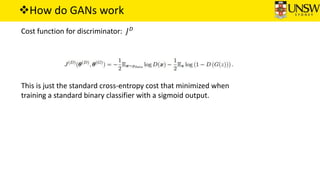

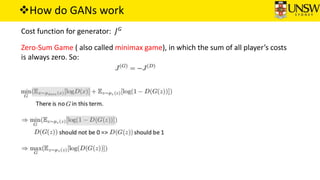

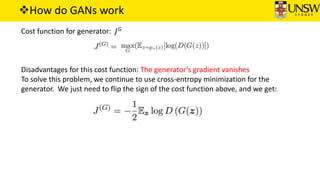

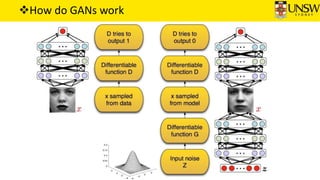

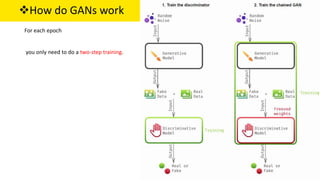

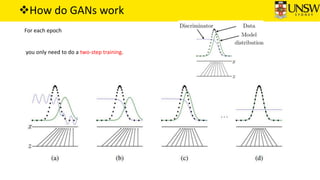

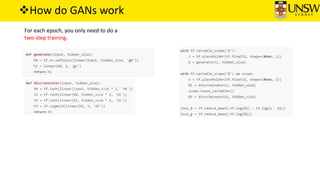

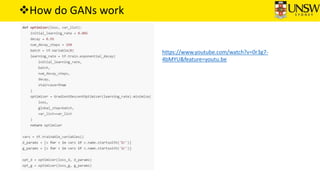

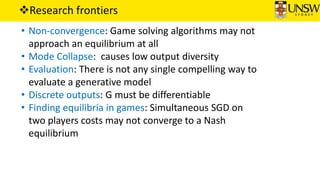

Generative Adversarial Networks (GANs) are a class of generative model built from two neural networks, a generator and a discriminator, that compete against each other. The generator takes random noise as input and produces synthetic samples, while the discriminator classifies each sample as real or generated. The two networks are trained together, ideally until the discriminator can no longer reliably tell generated samples from real ones. GANs can generate realistic images, perform image-to-image translation, and have applications in reinforcement learning. However, training GANs is challenging because of issues such as non-convergence and mode collapse, in which the generator produces only a narrow range of outputs.
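
The alternating training described above can be illustrated with a deliberately tiny sketch in plain Python. It is not taken from the slides. It assumes one-dimensional data, a linear generator `a * z + b`, and a logistic-regression discriminator in place of neural networks, with hand-derived gradients. The generator uses the common "non-saturating" loss (maximize log D(G(z))), which is one popular choice and not necessarily the variant the slides cover. All function and parameter names (`generator`, `discriminator`, `train_step`, `theta`, `phi`) are hypothetical.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def generator(z, theta):
    # Toy generator (assumption): maps a noise value z to a sample a*z + b.
    a, b = theta
    return a * z + b

def discriminator(x, phi):
    # Toy discriminator (assumption): estimated probability that x is real.
    w, c = phi
    return sigmoid(w * x + c)

def d_loss(real, fake, phi, eps=1e-12):
    # Discriminator loss: -[mean log D(x) + mean log(1 - D(G(z)))].
    lr_term = sum(math.log(discriminator(x, phi) + eps) for x in real) / len(real)
    lf_term = sum(math.log(1.0 - discriminator(x, phi) + eps) for x in fake) / len(fake)
    return -(lr_term + lf_term)

def g_loss(fake, phi, eps=1e-12):
    # Non-saturating generator loss: -mean log D(G(z)).
    return -sum(math.log(discriminator(x, phi) + eps) for x in fake) / len(fake)

def train_step(theta, phi, real, noise, lr=0.05):
    """One discriminator update followed by one generator update."""
    a, b = theta
    w, c = phi
    fake = [generator(z, theta) for z in noise]

    # Discriminator gradient step. With s = w*x + c:
    #   d/ds of -log D     is -(1 - D)   (real samples)
    #   d/ds of -log(1-D)  is  D         (generated samples)
    gw = gc = 0.0
    for x in real:
        d = discriminator(x, (w, c))
        gw += -(1.0 - d) * x / len(real)
        gc += -(1.0 - d) / len(real)
    for x in fake:
        d = discriminator(x, (w, c))
        gw += d * x / len(fake)
        gc += d / len(fake)
    w -= lr * gw
    c -= lr * gc

    # Generator gradient step against the updated discriminator.
    # With s = w*(a*z + b) + c: ds/da = w*z and ds/db = w.
    ga = gb = 0.0
    for z in noise:
        d = discriminator(generator(z, (a, b)), (w, c))
        ga += -(1.0 - d) * w * z / len(noise)
        gb += -(1.0 - d) * w / len(noise)
    a -= lr * ga
    b -= lr * gb
    return (a, b), (w, c)

if __name__ == "__main__":
    random.seed(0)
    theta, phi = (1.0, 0.0), (0.0, 0.0)
    for _ in range(200):
        real = [random.gauss(4.0, 0.5) for _ in range(32)]   # "real" data
        noise = [random.gauss(0.0, 1.0) for _ in range(32)]  # generator input
        theta, phi = train_step(theta, phi, real, noise)
    print("generator parameters (a, b):", theta)
```

Even in this toy setting the result depends on the learning rate and the number of steps, and the parameters may oscillate instead of settling. That is a small-scale view of the non-convergence issue mentioned above.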