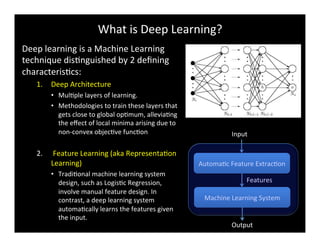

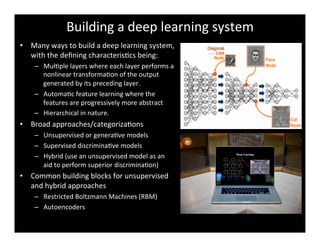

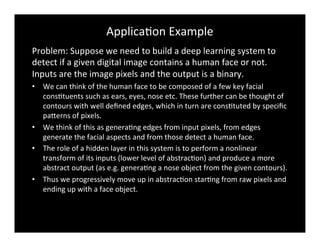

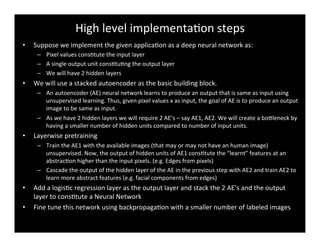

Deep learning is a machine learning approach characterized by deep (multi-layer) architectures and automatic feature learning. It has attracted significant interest following breakthroughs in speech recognition, image recognition, and natural language processing. This document outlines how to build a deep learning system using stacked autoencoders and greedy layer-wise pretraining, so that each successive layer learns more abstract features than the one below it.
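To make the idea of stacked autoencoders with layer-wise pretraining concrete, here is a minimal NumPy sketch. It is not the document's own implementation. It assumes sigmoid units, untied encoder/decoder weights, a mean-squared reconstruction loss, full-batch gradient descent, and inputs scaled to [0, 1]. The function names (`train_autoencoder`, `pretrain_stack`, `encode`) and the hyperparameters are hypothetical choices for illustration. Each autoencoder is trained on the hidden codes of the previous one, and only the encoder halves are kept to form the deep feature extractor.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_autoencoder(X, n_hidden, lr=0.5, epochs=500, seed=0):
    """Train one sigmoid autoencoder on X (assumed scaled to [0, 1]) by
    full-batch gradient descent. Returns encoder params (W, b) and final MSE."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = rng.normal(0.0, 0.1, size=(d, n_hidden))  # encoder weights
    b = np.zeros(n_hidden)                        # encoder bias
    V = rng.normal(0.0, 0.1, size=(n_hidden, d))  # decoder weights (untied, an assumption)
    c = np.zeros(d)                               # decoder bias
    for _ in range(epochs):
        H = sigmoid(X @ W + b)                    # encode
        R = sigmoid(H @ V + c)                    # decode / reconstruct
        # Gradients of the loss 0.5 * mean over samples of ||R - X||^2
        dZ_out = (R - X) * R * (1 - R) / n
        dV, dc = H.T @ dZ_out, dZ_out.sum(axis=0)
        dZ_hid = (dZ_out @ V.T) * H * (1 - H)
        dW, db = X.T @ dZ_hid, dZ_hid.sum(axis=0)
        W -= lr * dW
        b -= lr * db
        V -= lr * dV
        c -= lr * dc
    H = sigmoid(X @ W + b)
    mse = float(np.mean((sigmoid(H @ V + c) - X) ** 2))
    return (W, b), mse

def pretrain_stack(X, layer_sizes, **kwargs):
    """Greedy layer-wise pretraining: autoencoder k is trained on the codes
    produced by the already-trained encoders 1..k-1."""
    layers, errors = [], []
    inp = X
    for size in layer_sizes:
        (W, b), mse = train_autoencoder(inp, size, **kwargs)
        layers.append((W, b))
        errors.append(mse)
        inp = sigmoid(inp @ W + b)  # codes become the next layer's training data
    return layers, errors

def encode(X, layers):
    """Pass inputs through the pretrained encoder stack to get top-level features."""
    H = X
    for W, b in layers:
        H = sigmoid(H @ W + b)
    return H

# Toy usage: random data stands in for real inputs (hypothetical example).
rng = np.random.default_rng(42)
X = rng.random((64, 16))
layers, errors = pretrain_stack(X, [8, 4])
features = encode(X, layers)
print(features.shape)  # (64, 4)
```

In a full system the pretrained encoder stack would typically serve as the initialization for a supervised network that is then fine-tuned end to end; that fine-tuning step is not shown here.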