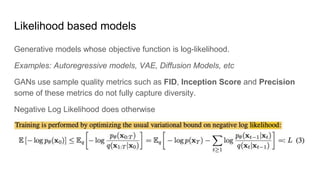

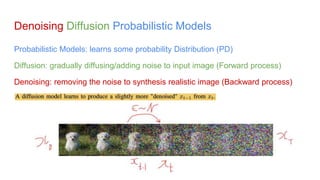

Diffusion models have recently been shown to produce higher-quality images than GANs while also offering better sample diversity and being easier to scale and train. Specifically, a 2021 OpenAI paper ("Diffusion Models Beat GANs on Image Synthesis", Dhariwal and Nichol) reported a Fréchet Inception Distance (FID, where lower is better) of 2.97 on 128×128 ImageNet, beating the previous state of the art held by BigGAN. Diffusion models work by gradually adding Gaussian noise to an image over many steps (the forward process) and then training a neural network to undo this corruption one step at a time (the reverse, or denoising, process). To generate a new image, the model starts from pure noise and denoises it step by step, which lets it produce diverse, high-fidelity samples.
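To make the forward process concrete, here is a minimal NumPy sketch of the closed-form noising step used by DDPM-style models, `x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε`, where `ᾱ_t` is the running product of `1 − β` over the first `t` steps and `ε` is standard Gaussian noise. The linear β schedule (1e-4 to 0.02 over 1,000 steps) is the common choice from the original DDPM paper (Ho et al., 2020) and is an assumption here, not a detail of the work described above. The function names are hypothetical, and no neural network is included: `make_training_example` only produces the `(x_t, t, ε)` triple that a denoising network would be trained on, learning to predict `ε` from `x_t` and `t`.

```python
import numpy as np


def linear_beta_schedule(T: int = 1000, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> np.ndarray:
    """Per-step noise variances beta_1..beta_T (assumed linear schedule)."""
    return np.linspace(beta_start, beta_end, T)


def q_sample(x0: np.ndarray, t: int, alpha_bars: np.ndarray,
             noise: np.ndarray) -> np.ndarray:
    """Jump straight to step t of the forward process:
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise.
    `t` is a 0-based index into the schedule."""
    a = alpha_bars[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise


def make_training_example(x0: np.ndarray, alpha_bars: np.ndarray,
                          rng: np.random.Generator):
    """Pick a random timestep and noise the image. A denoising network
    would be trained to recover `noise` given (x_t, t)."""
    t = int(rng.integers(len(alpha_bars)))       # uniform over 0..T-1
    noise = rng.standard_normal(x0.shape)        # epsilon ~ N(0, I)
    x_t = q_sample(x0, t, alpha_bars, noise)
    return x_t, t, noise


# Precompute the schedule once; alpha_bars shrinks toward 0 as t grows.
betas = linear_beta_schedule()
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(3, 8, 8))  # toy "image" scaled to [-1, 1]
x_t, t, noise = make_training_example(x0, alpha_bars, rng)
print(f"t={t}, fraction of signal kept: {np.sqrt(alpha_bars[t]):.4f}")
```

Printing `np.sqrt(alpha_bars[t])` for small and large `t` shows how much of the original image survives: close to 1 at the start of the schedule and below 0.01 at the final step, where `x_t` is almost pure noise. Sampling any `t` directly, rather than looping through every earlier step, is what makes training on random timesteps cheap.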