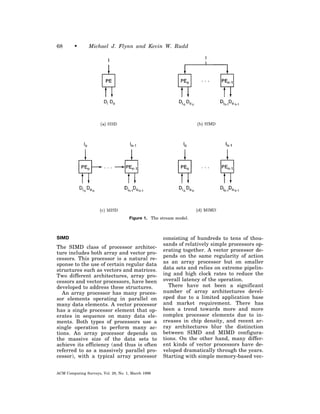

The document discusses different types of parallel architectures including SISD, SIMD, MISD, and MIMD. SISD refers to a single instruction single data stream and includes traditional uniprocessors. SIMD uses a single instruction on multiple data streams, like in vector processors. MISD has multiple instructions on a single data stream, like systolic arrays. MIMD uses multiple instructions and data streams, including traditional multiprocessors and networks of workstations. The document explores techniques for exploiting parallelism in different architectures and trends towards superscalar designs and instruction-level parallelism. It argues future systems will require even more parallelism to continue improving performance.

![Parallel Architectures

MICHAEL J. FLYNN AND KEVIN W. RUDD

Stanford University ͗flynn@Umunhum.Stanford.edu͘; ͗kevin@Umunhum.Stanford.edu͘

PARALLEL ARCHITECTURES currently performing different phases of

processing an instruction. This does not

Parallel or concurrent operation has

achieve concurrency of execution (with

many different forms within a computer

system. Using a model based on the multiple actions being taken on objects)

different streams used in the computa- but does achieve a concurrency of pro-

tion process, we represent some of the cessing—an improvement in efficiency

different kinds of parallelism available. upon which almost all processors de-

A stream is a sequence of objects such pend today.

as data, or of actions such as instruc- Techniques that exploit concurrency

tions. Each stream is independent of all of execution, often called instruction-

other streams, and each element of a level parallelism (ILP), are also com-

stream can consist of one or more ob- mon. Two architectures that exploit ILP

jects or actions. We thus have four com- are superscalar and VLIW (very long

binations that describe most familiar

parallel architectures: instruction word). These techniques

schedule different operations to execute

(1) SISD: single instruction, single data concurrently based on analyzing the de-

stream. This is the traditional uni- pendencies between the operations

processor [Figure 1(a)]. within the instruction stream—dynami-

(2) SIMD: single instruction, multiple cally at run time in a superscalar pro-

data stream. This includes vector cessor and statically at compile time in

processors as well as massively par- a VLIW processor. Both ILP approaches

allel processors [Figure 1(b)]. trade off adaptability against complex-

(3) MISD: multiple instruction, single ity—the superscalar processor is adapt-

data stream. These are typically able but complex whereas the VLIW

systolic arrays [Figure 1(c)].

processor is not adaptable but simple.

(4) MIMD: multiple instruction, multi- Both superscalar and VLIW use the

ple data stream. This includes tradi-

same compiler techniques to achieve

tional multiprocessors as well as the

newer networks of workstations high performance.

[Figure 1(d)]. The current trend for SISD processors

is towards superscalar designs in order

Each of these combinations character- to exploit available ILP as well as exist-

izes a class of architectures and a corre- ing object code. In the marketplace

sponding type of parallelism.

there are few VLIW designs, due to code

compatibility issues, although advances

SISD in compiler technology may cause this

to change. However, research in all as-

The SISD class of processor architecture

pects of ILP is fundamental to the de-

is the most familiar class and has the

least obvious concurrency of any of the velopment of improved architectures in

models, yet a good deal of concurrency all classes because of the frequent use of

can be present. Pipelining is a straight- SISD architectures as the processor ele-

forward approach that is based on con- ments in most implementations.

Copyright © 1996, CRC Press.

ACM Computing Surveys, Vol. 28, No. 1, March 1996](https://image.slidesharecdn.com/flynntaxonomies-130320113518-phpapp02/75/Flynn-taxonomies-1-2048.jpg)