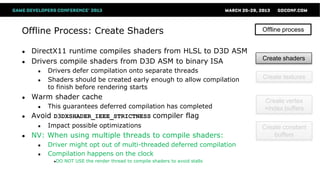

This document provides recommendations for optimizing DirectX 11 performance. It divides the work into an offline stage and a runtime stage. For the offline stage, it recommends creating resources such as buffers, textures, and shaders on multiple threads. For the runtime stage, it suggests culling unused objects, minimizing state changes, and pushing commands to the driver quickly. It also gives tips for updating dynamic resources efficiently and for grouping related constants together. The goal is to keep both the CPU and the GPU pipelines fully utilized.
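As an illustration of the "minimizing state changes" recommendation, the following is a minimal CPU-side sketch, not code from the presentation. It assumes a hypothetical `DrawItem` record whose `shaderId` and `textureId` stand in for whatever state handles an engine binds, and it assumes that sorting by shader first and texture second is an acceptable ordering. No Direct3D calls are made; the sketch only counts how many bindings a given draw order would require.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <tuple>
#include <vector>

// Hypothetical draw record: the IDs are placeholders for engine state handles.
struct DrawItem {
    std::uint32_t shaderId;
    std::uint32_t textureId;
    std::uint32_t meshId;
};

// Orders draws by shader first, then by texture (an assumed priority).
inline bool stateLess(const DrawItem& a, const DrawItem& b) {
    return std::tie(a.shaderId, a.textureId) < std::tie(b.shaderId, b.textureId);
}

// Counts shader and texture bindings needed to submit the draws in order.
// The first draw always binds both, so a non-empty list costs at least 2.
inline std::size_t countStateChanges(const std::vector<DrawItem>& draws) {
    std::size_t changes = 0;
    for (std::size_t i = 0; i < draws.size(); ++i) {
        if (i == 0 || draws[i].shaderId != draws[i - 1].shaderId) ++changes;
        if (i == 0 || draws[i].textureId != draws[i - 1].textureId) ++changes;
    }
    return changes;
}

// Groups draws that share state; stable so equal-state draws keep their order.
inline void sortByState(std::vector<DrawItem>& draws) {
    std::stable_sort(draws.begin(), draws.end(), stateLess);
    assert(std::is_sorted(draws.begin(), draws.end(), stateLess));
}
```

Calling `countStateChanges` before and after `sortByState` on a frame's draw list gives a rough measure of how much redundant binding the sort removes. A real renderer would also have to respect ordering constraints such as transparency, which this sketch ignores.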

![Depth Test – Early Z vs Late Z rules](https://image.slidesharecdn.com/dx11performancereloaded-150328115341-conversion-gate01/85/Dx11-performancereloaded-33-320.jpg)

The slide shows the same pipeline three times (Rasterizer, Hi-Z / ZCull, “Early” Depth/Stencil Test, Pixel Shader, “Late” Depth/Stencil Test, Output Merger), once for each of these cases:

● Opaque primitives, [earlydepthstencil], or Clip()/Discard(), Alpha to Mask Output, Coverage Mask Output with Depth Writes OFF

● Clip()/Discard(), Alpha to Mask Output, Coverage Mask Output with Depth Writes ON

● oDepth output, UAV output
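The three cases on this slide can be written down as a small classifier. This is only a sketch under stated assumptions: `DrawTraits`, `DepthCase`, and `classifyDepthCase` are hypothetical names, the order in which the conditions are checked is an assumption, and the function reports only which of the slide's three diagrams a draw falls under, because the transcript does not preserve which depth-test stages each diagram highlights.

```cpp
#include <cassert>  // assert() for the usage checks

// Which of the slide's three pipeline diagrams a draw falls under.
enum class DepthCase {
    OpaqueOrDepthWritesOff,    // diagram 1
    DiscardWithDepthWritesOn,  // diagram 2
    DepthOrUavOutput           // diagram 3
};

// Hypothetical summary of the draw properties the slide mentions.
struct DrawTraits {
    bool hasEarlyDepthStencilAttr;  // pixel shader is tagged [earlydepthstencil]
    bool usesDiscardOrCoverage;     // clip()/discard, alpha to mask, or coverage mask output
    bool outputsDepthOrUav;         // oDepth output or UAV output
    bool depthWritesEnabled;        // depth writes are ON in the depth-stencil state
};

inline DepthCase classifyDepthCase(const DrawTraits& d) {
    // Assumption: [earlydepthstencil] wins over everything else,
    // since the slide lists it alongside opaque primitives.
    if (d.hasEarlyDepthStencilAttr) return DepthCase::OpaqueOrDepthWritesOff;
    // Assumption: depth or UAV output is checked before discard.
    if (d.outputsDepthOrUav) return DepthCase::DepthOrUavOutput;
    // Discard-style shaders only leave diagram 1 when depth writes are ON.
    if (d.usesDiscardOrCoverage && d.depthWritesEnabled) {
        return DepthCase::DiscardWithDepthWritesOn;
    }
    return DepthCase::OpaqueOrDepthWritesOff;
}
```

A tool built on something like this could flag draws that leave the first case, for example an alpha-tested pass that still has depth writes enabled.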

![Compute Shader 3/3](https://image.slidesharecdn.com/dx11performancereloaded-150328115341-conversion-gate01/85/Dx11-performancereloaded-42-320.jpg)

Performance Recommendations continued

Thread Group Shared Memory (TGSM)

● Store the result of thread computations into TGSM for work sharing

● E.g. resource fetches

● Only synchronize threads when needed

● GroupMemoryBarrier[WithGroupSync]

● TGSM declaration size affects machine occupancy

Bank Conflicts

● Reads/writes to the same memory bank (bank=address%32) from parallel threads cause serialization

● Exception: all threads reading from the same address is OK

Learn more in the “DirectCompute for Gaming: Supercharge your engine with Compute Shaders” presentation from Stephan and Layla at 1.30pm
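The bank-conflict rule can be checked on the CPU with a small model. The sketch below is not from the presentation: it takes the slide's formula `bank = address % 32` at face value, assumes addresses are given in bank-sized units, and assumes that threads hitting the same address in a bank cost a single access (the slide only states this for the case where all threads read one address). `bankConflictDegree` and `stridedAddresses` are hypothetical helper names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>
#include <vector>

constexpr std::uint32_t kNumBanks = 32;  // from the slide: bank = address % 32

// Returns the worst-case number of serialized accesses for one batch of
// parallel accesses: the largest count of distinct addresses in any one bank.
// 1 means conflict-free, 0 means there were no accesses at all.
inline std::size_t bankConflictDegree(const std::vector<std::uint32_t>& addresses) {
    std::array<std::set<std::uint32_t>, kNumBanks> perBank;
    for (std::uint32_t addr : addresses) {
        perBank[addr % kNumBanks].insert(addr);  // same address counts once
    }
    std::size_t worst = 0;
    for (const auto& bank : perBank) {
        worst = std::max(worst, bank.size());
    }
    assert(worst <= addresses.size());
    return worst;
}

// Addresses touched when thread t accesses element t * stride.
inline std::vector<std::uint32_t> stridedAddresses(std::uint32_t threads,
                                                   std::uint32_t stride) {
    std::vector<std::uint32_t> out;
    out.reserve(threads);
    for (std::uint32_t t = 0; t < threads; ++t) {
        out.push_back(t * stride);
    }
    return out;
}
```

Under this model a stride of 32 puts every thread in bank 0, while a stride of 33 spreads 32 threads across all 32 banks. That is why padding a shared array row by one element is a common way to avoid conflicts.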