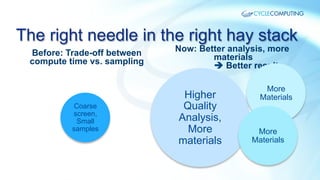

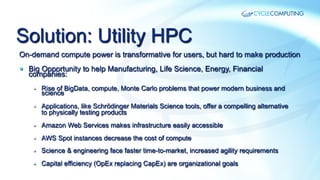

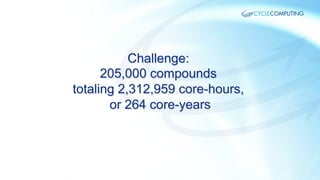

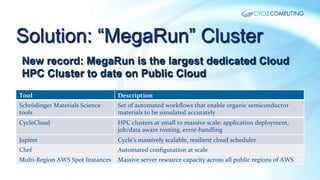

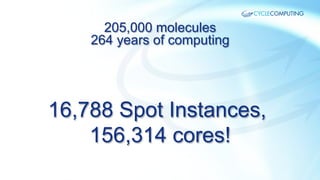

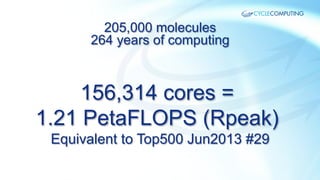

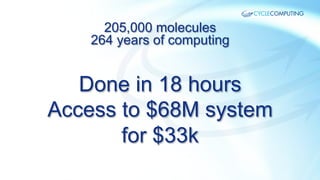

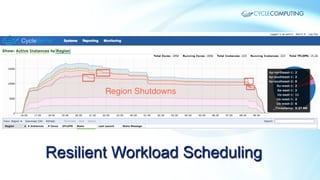

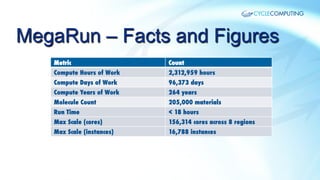

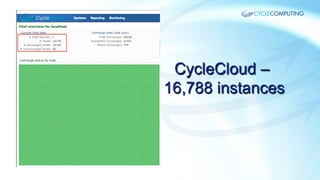

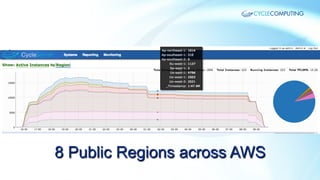

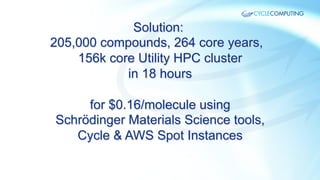

Cycle Computing's recent 'megarun' used a 156,314-core cluster, reaching 1.21 petaflops, to process 205,000 candidate organic photovoltaic compounds in just 18 hours, at a fraction of the cost of traditional in-house computing. This utility high-performance computing (HPC) approach, in which compute capacity is rented on demand rather than owned, speeds up materials discovery for energy applications and shows its potential to transform science and engineering. Because the run was built on Amazon Web Services (AWS) Spot Instances, it also illustrates a more efficient and cost-effective model for materials science research.
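To put the headline figures in perspective, here is a minimal back-of-envelope sketch that derives throughput numbers from the values quoted above. It assumes every core was busy for the full 18 hours, so the core-hour totals are upper bounds, and it treats 1.21 petaflops as a peak figure divided evenly across cores. The function name `run_stats` is hypothetical and is used only for illustration; cost is not modeled because no dollar figure is given here.

```python
# Back-of-envelope figures derived only from the numbers quoted in the text.
# Assumption: all cores were busy for the entire run, so core-hours is an
# upper bound on the compute actually consumed.

CORES = 156_314
PEAK_FLOPS = 1.21e15  # 1.21 petaflops, as reported
COMPOUNDS = 205_000
HOURS = 18


def run_stats(cores: int, peak_flops: float, compounds: int, hours: float) -> dict:
    """Hypothetical helper: simple throughput ratios for a batch run."""
    core_hours = cores * hours
    return {
        "core_hours": core_hours,
        "compounds_per_hour": compounds / hours,
        "core_hours_per_compound": core_hours / compounds,
        # Peak flops split evenly across cores (a simplification).
        "gflops_per_core": peak_flops / cores / 1e9,
    }


if __name__ == "__main__":
    for name, value in run_stats(CORES, PEAK_FLOPS, COMPOUNDS, HOURS).items():
        print(f"{name}: {value:,.2f}")
```

Under these assumptions the run works out to roughly 2.8 million core-hours, about 11,400 compounds per hour, and about 13.7 core-hours per compound, or around 7.7 gigaflops of peak capacity per core.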