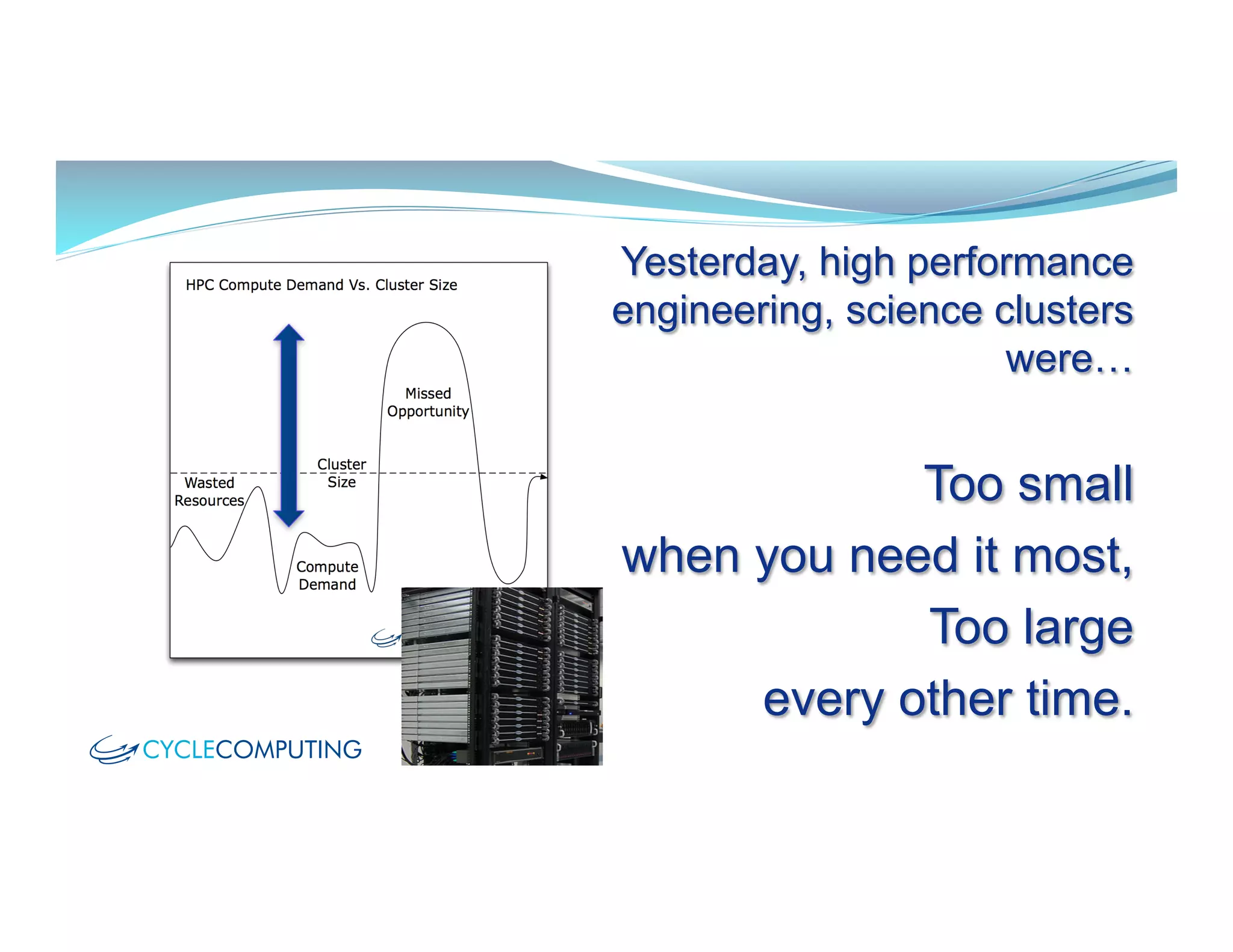

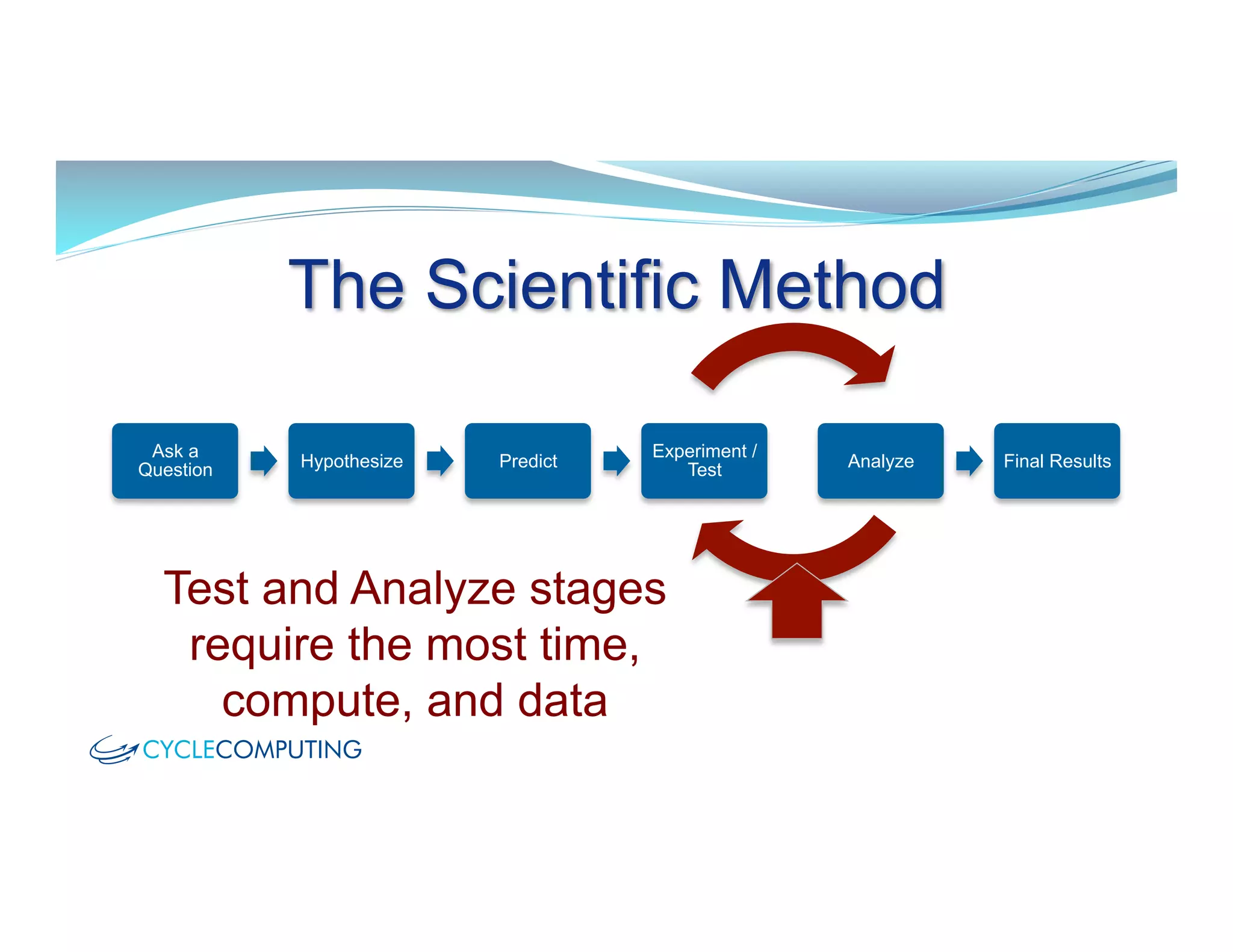

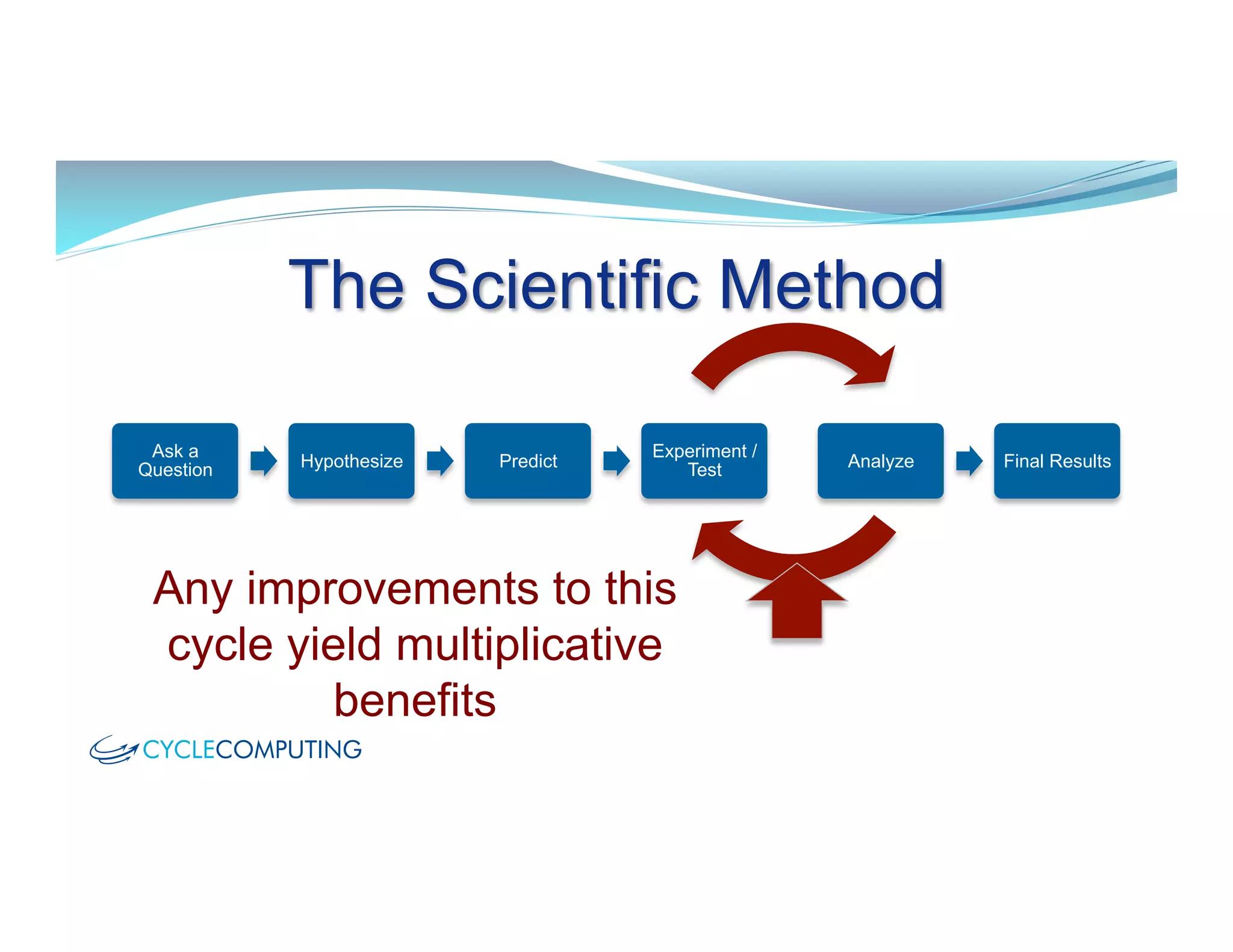

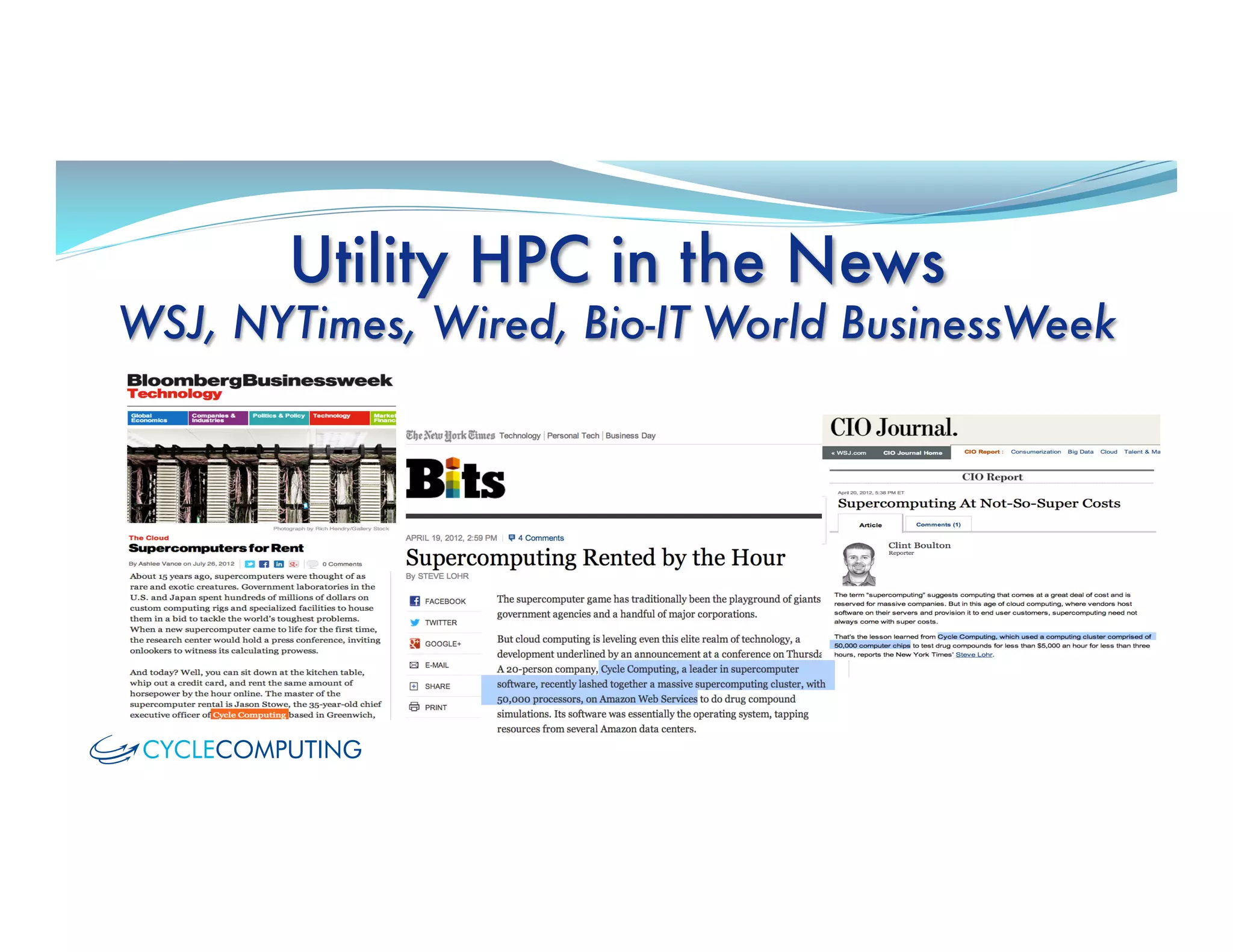

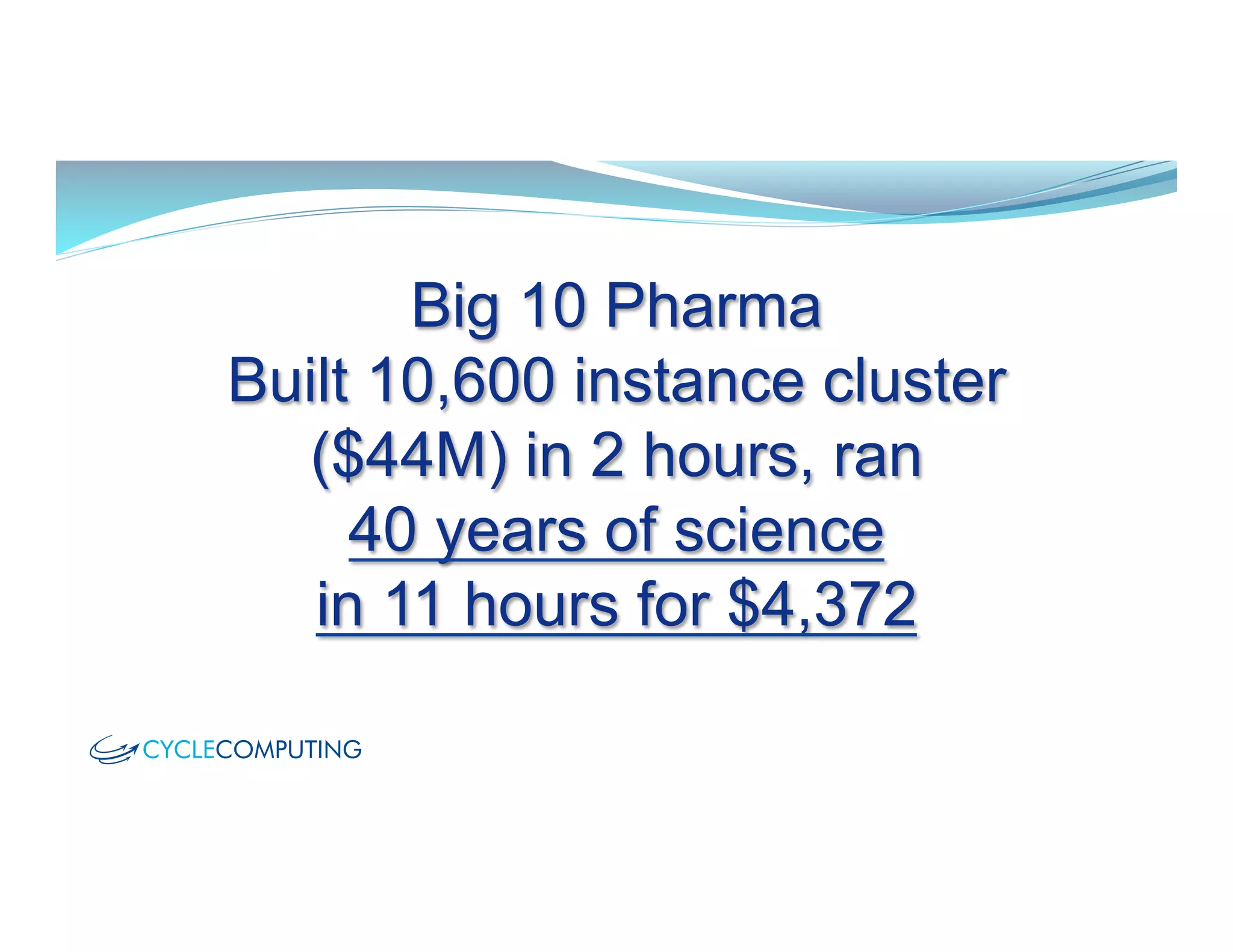

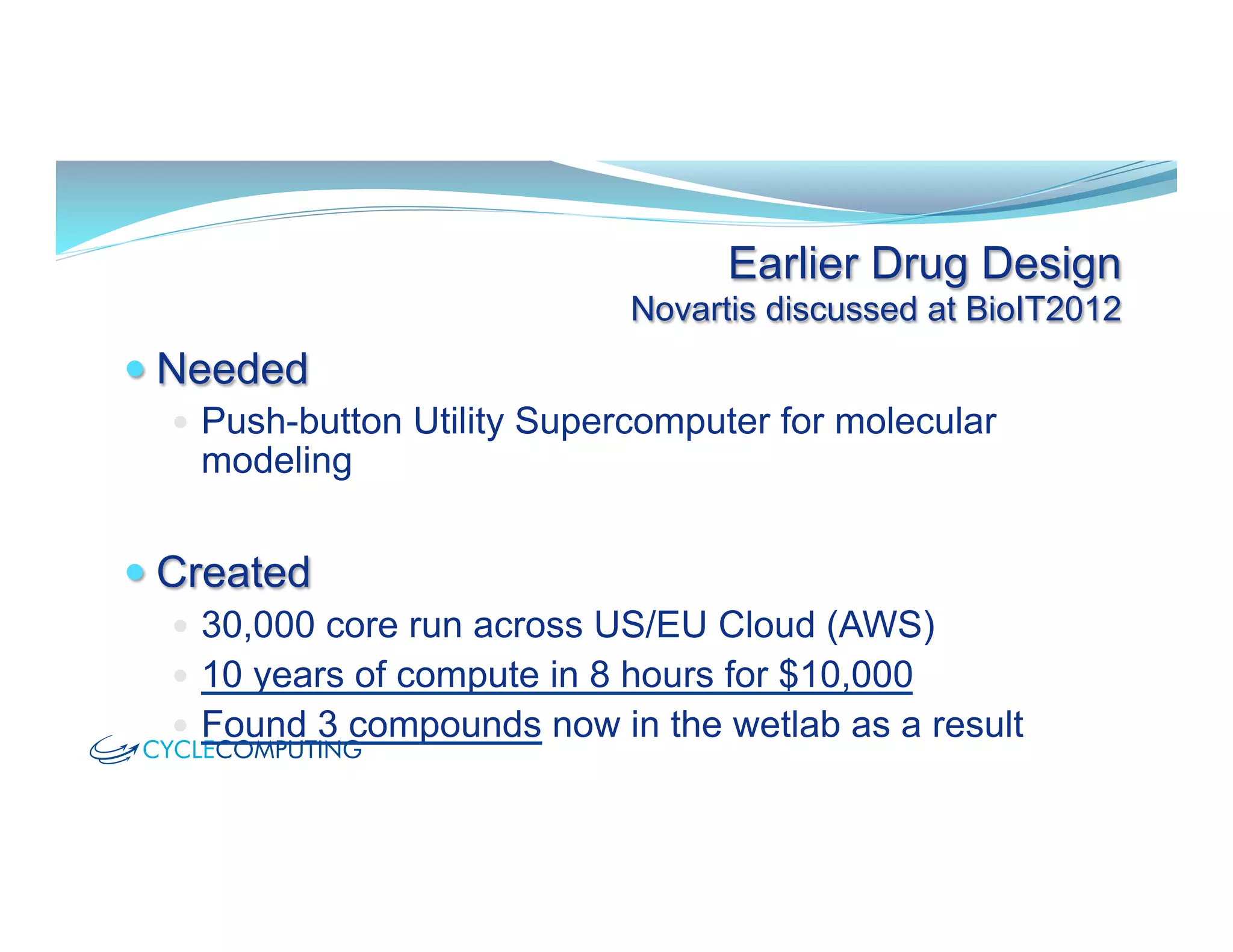

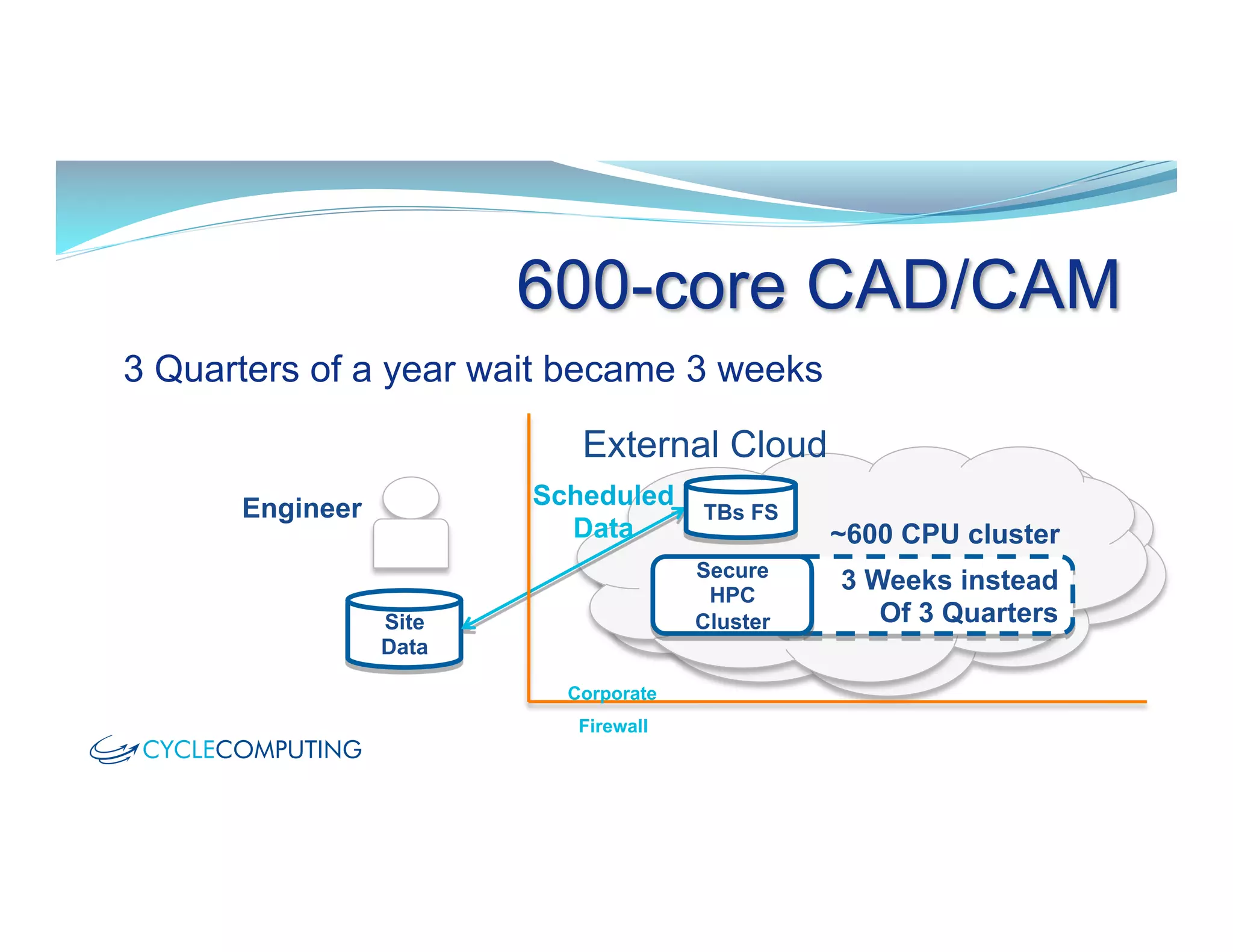

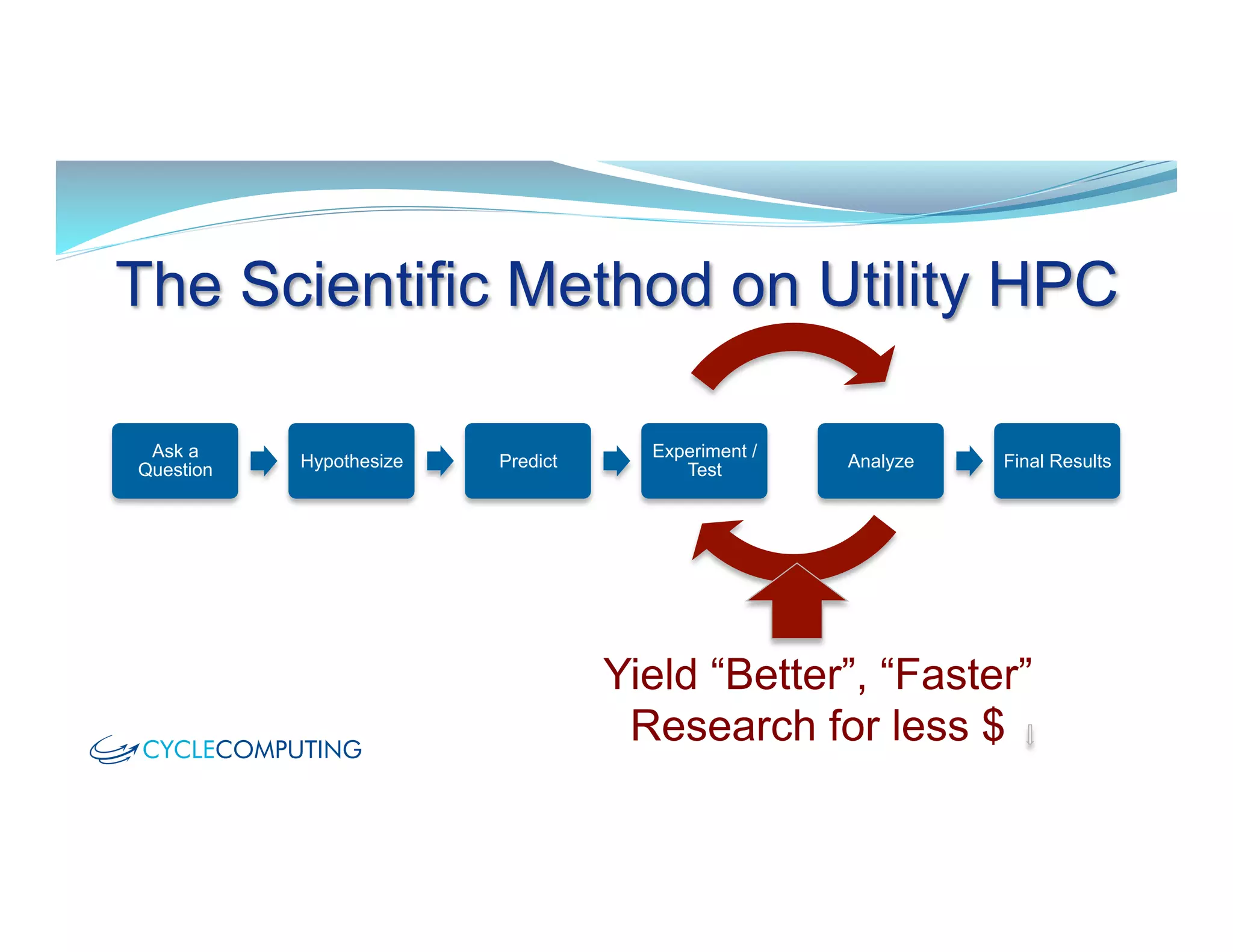

1) Utility access to computing power through dynamic, on-demand cloud infrastructure lets scientists ask bigger questions and accelerate research: experiments that previously took months or years can now be completed in hours or days.

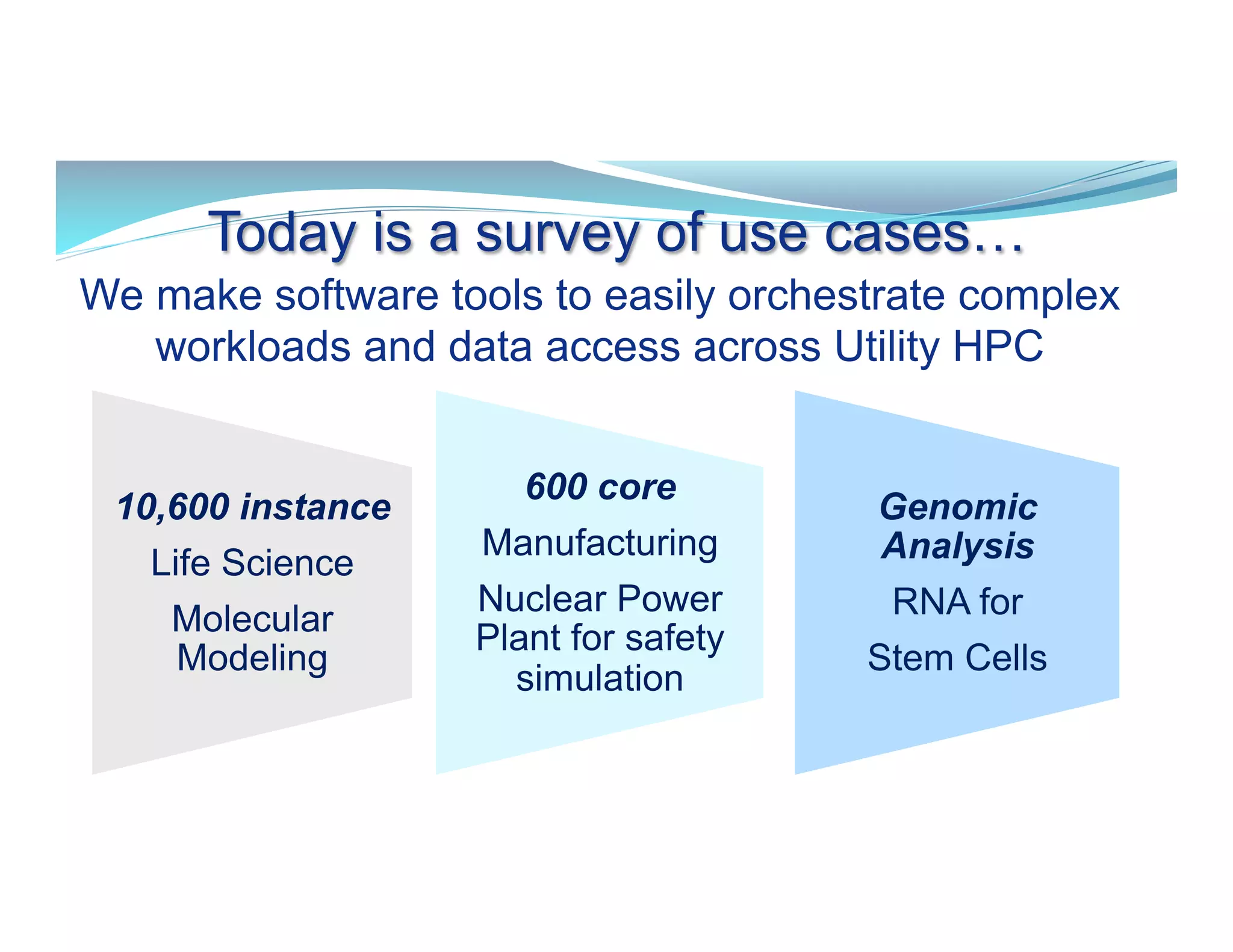

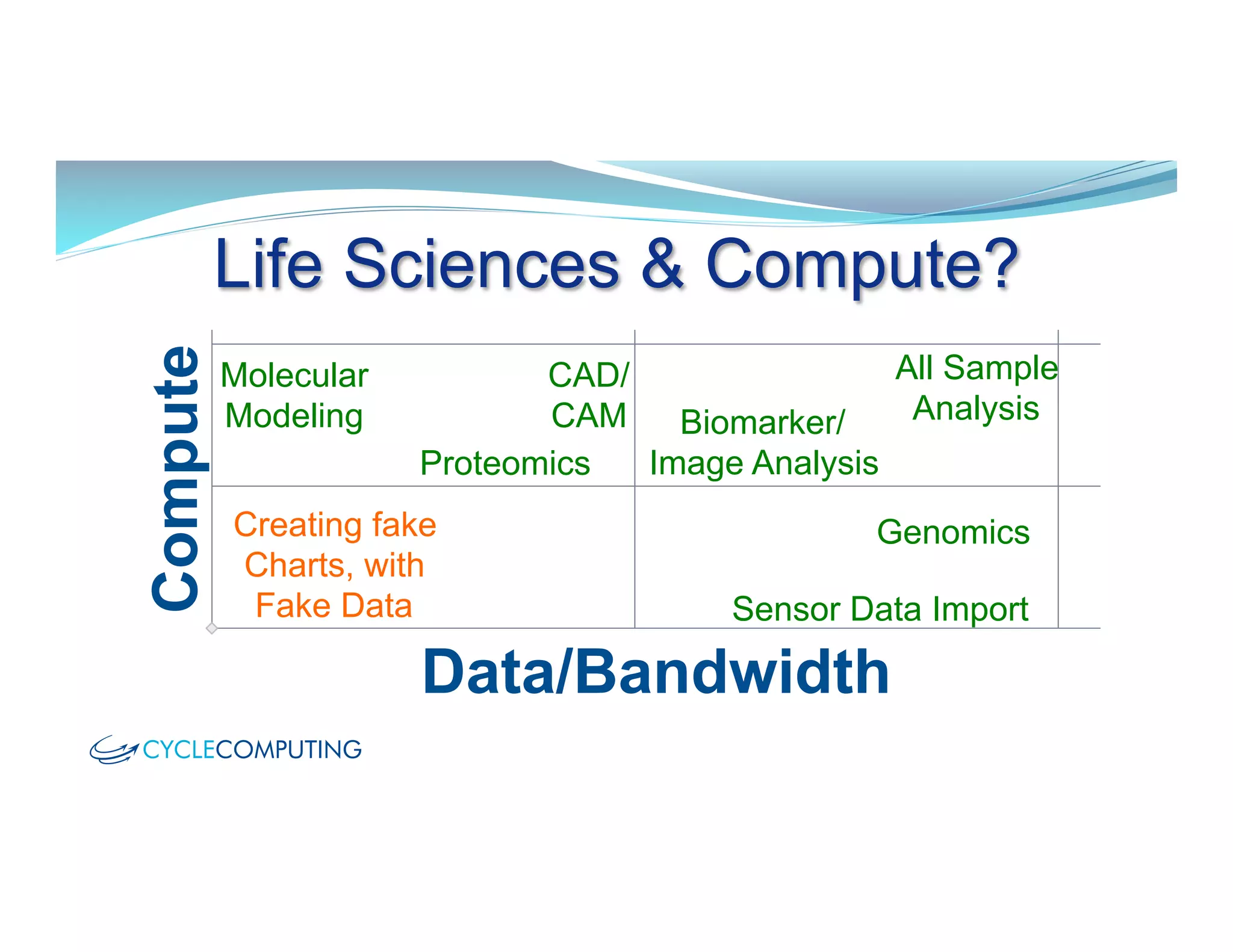

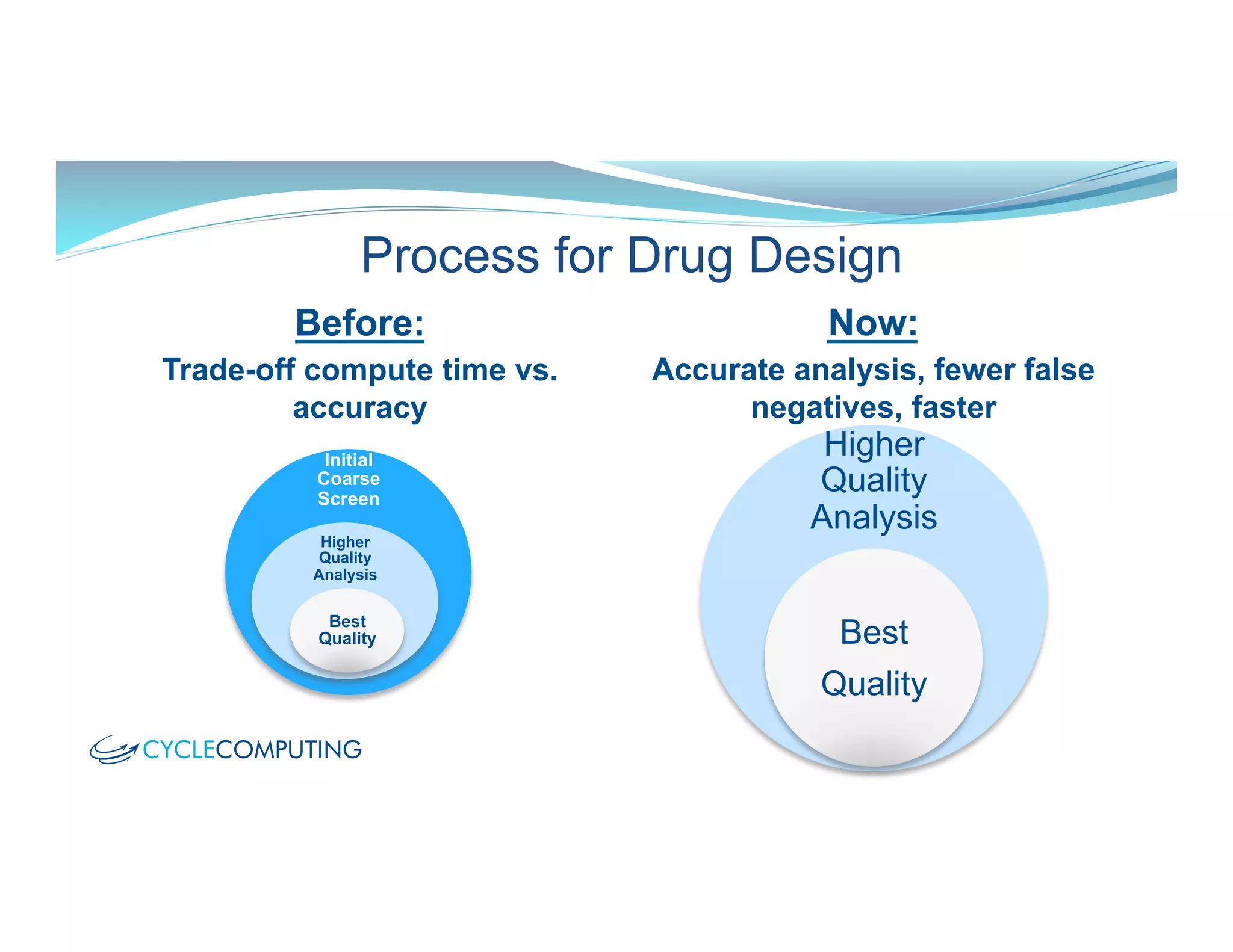

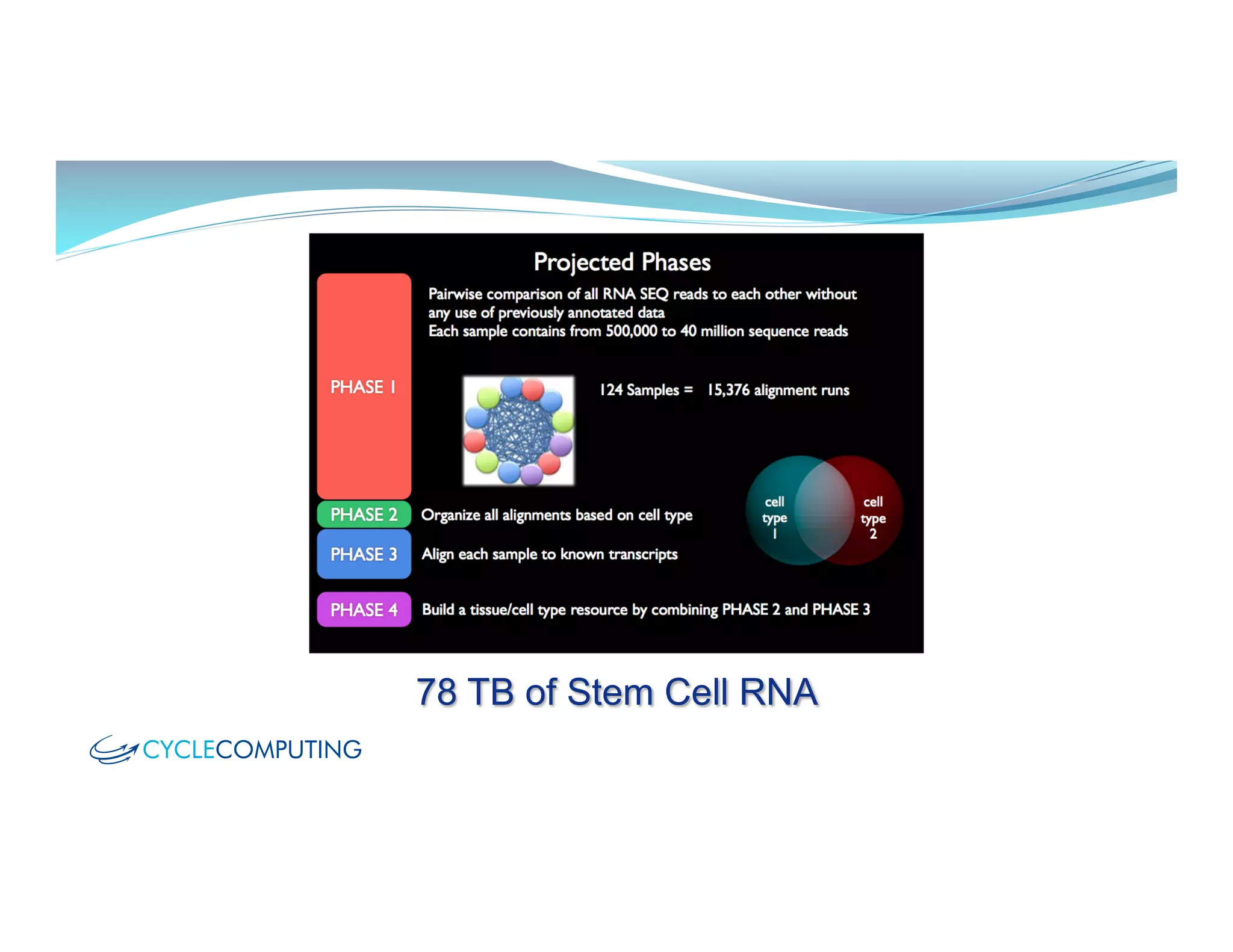

2) Use cases that benefit from utility HPC include drug design through molecular modeling, CAD/CAM engineering simulations, genomic analysis of stem cell RNA, and nuclear power plant safety simulations.

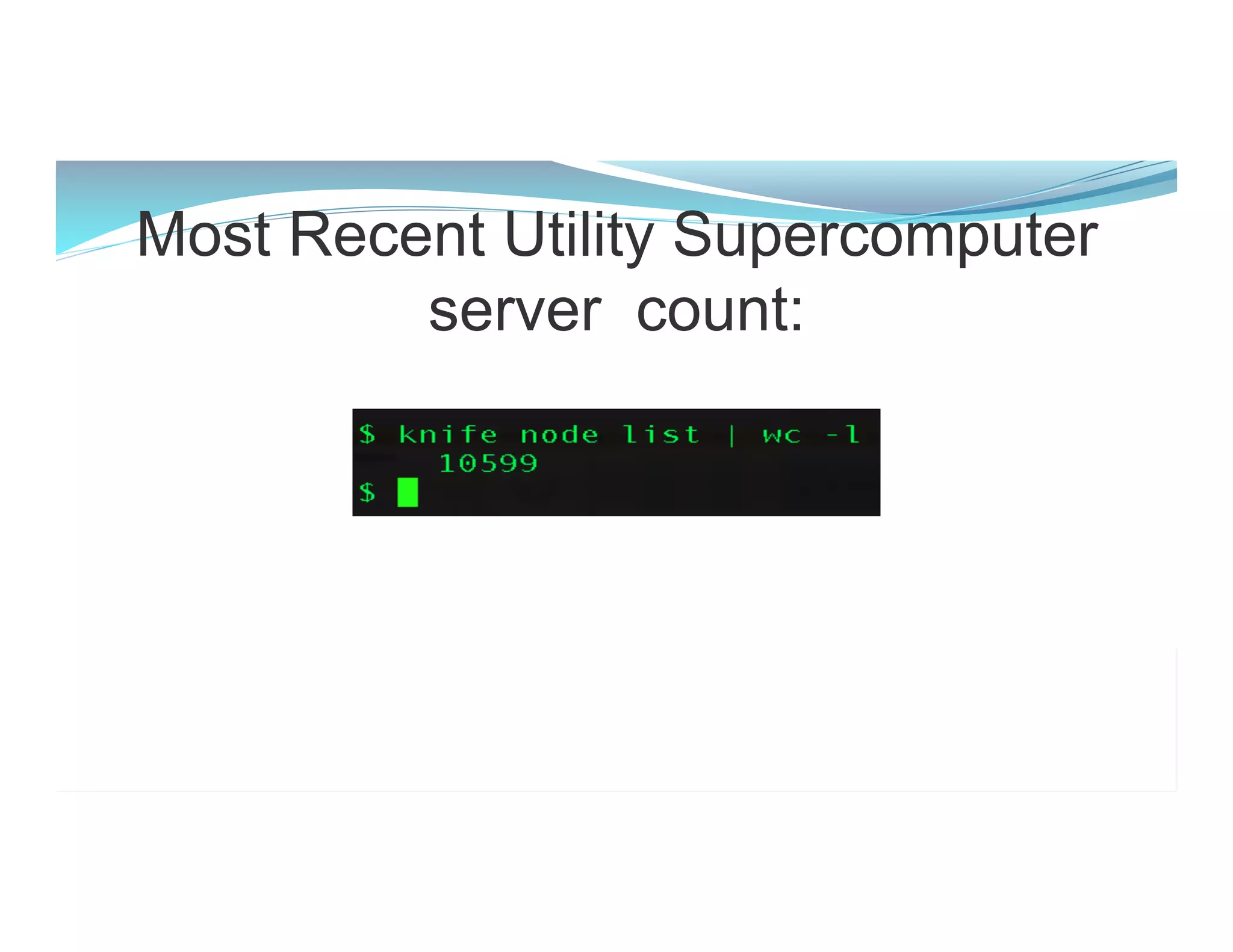

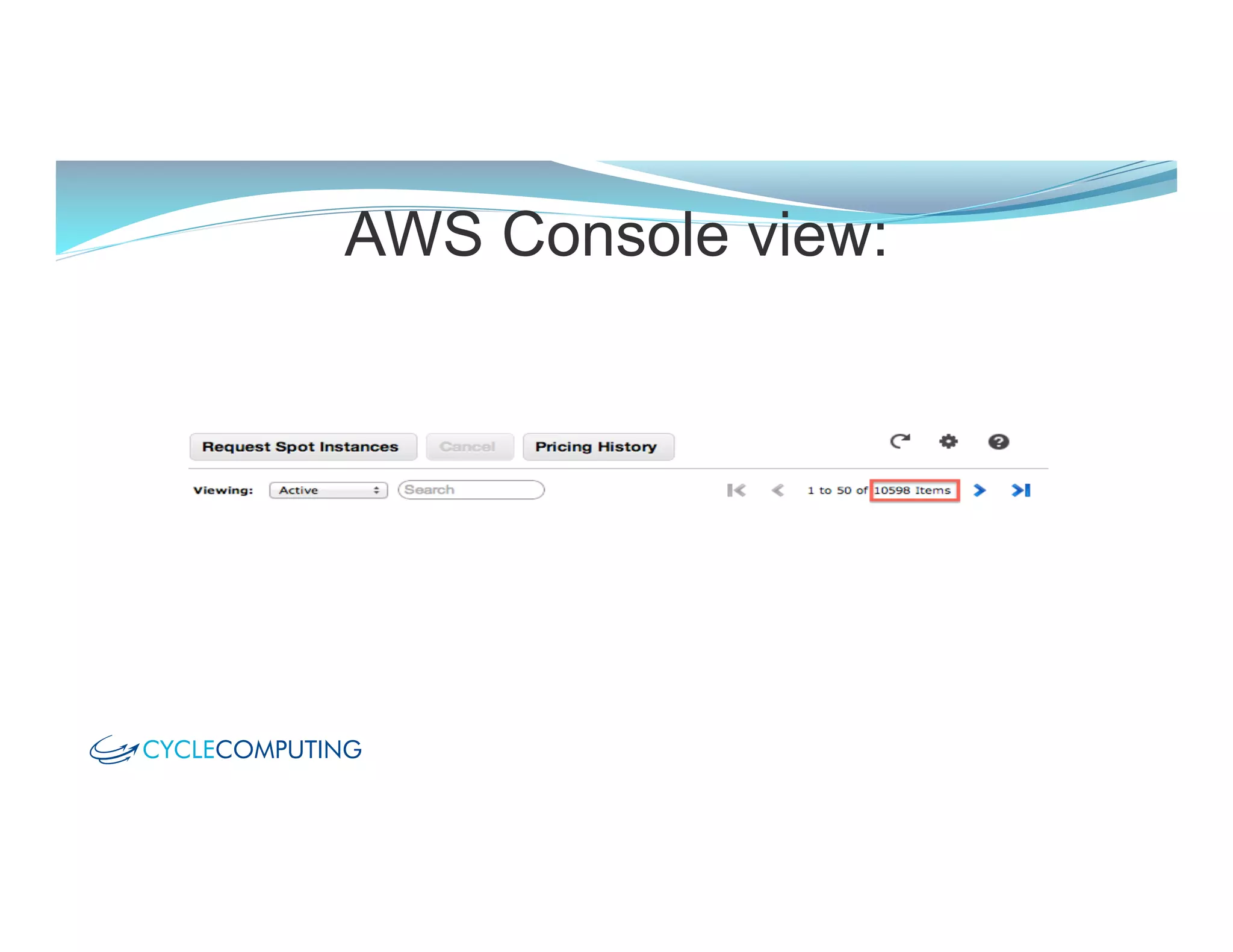

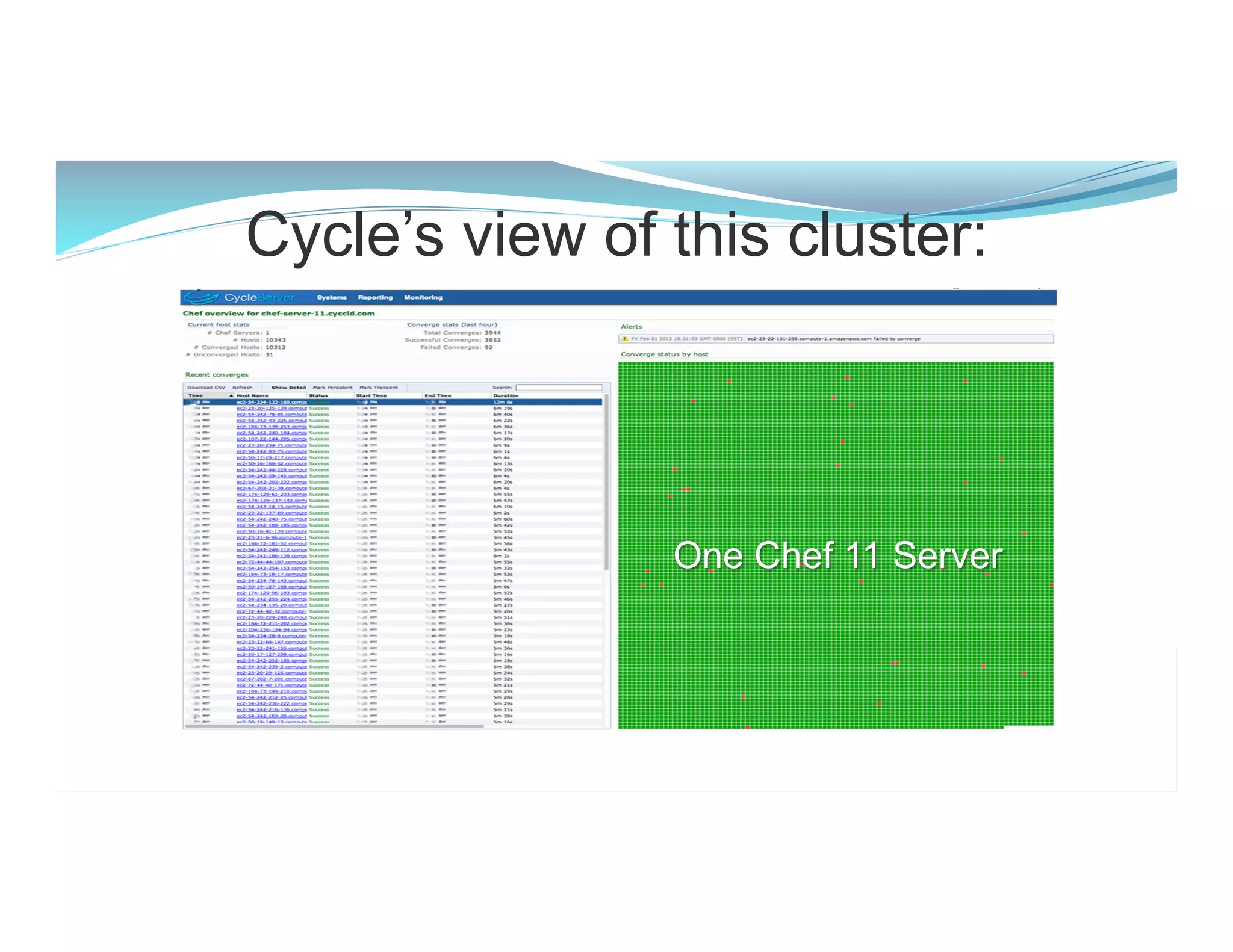

3) Automating cloud infrastructure provisioning and workload management with tools like Chef lets researchers create large supercomputer clusters of tens of thousands of cores on demand, at a fraction of the cost of traditional HPC systems.
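
The "months to hours" claim comes down to simple arithmetic for workloads that parallelize well. The Python sketch below is a rough illustration only: it assumes a perfectly parallel job with no scheduling or data-transfer overhead, and the workload size, core counts, and price per core-hour are hypothetical values chosen for the example, not figures from the slides.

```python
import math


def wall_clock_hours(core_hours: float, cores: int) -> float:
    """Ideal run time when the work splits evenly across all cores."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return core_hours / cores


def cores_for_deadline(core_hours: float, deadline_hours: float) -> int:
    """Smallest core count that finishes the work within the deadline."""
    if deadline_hours <= 0:
        raise ValueError("deadline_hours must be positive")
    return math.ceil(core_hours / deadline_hours)


def on_demand_cost(core_hours: float, price_per_core_hour: float) -> float:
    """Pay-per-use cost: depends on total work, not on cluster size."""
    return core_hours * price_per_core_hour


# Hypothetical workload: 100,000 core-hours of simulation.
WORKLOAD = 100_000

small = wall_clock_hours(WORKLOAD, 16)       # a small in-house server
large = wall_clock_hours(WORKLOAD, 10_000)   # an on-demand cloud cluster

print(f"16 cores:     {small:.0f} hours (~{small / 24:.0f} days)")
print(f"10,000 cores: {large:.0f} hours")
print(f"Cores needed for an 8-hour deadline: {cores_for_deadline(WORKLOAD, 8)}")

# Hypothetical price of $0.05 per core-hour; real prices vary by provider.
print(f"On-demand cost: ${on_demand_cost(WORKLOAD, 0.05):,.2f}")
```

Under these assumptions the bill is the same whether the job runs on 16 cores or 10,000, because cost tracks total core-hours; only the time to a result changes. Real workloads rarely scale this cleanly, so treat the numbers as an upper bound on the speedup.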