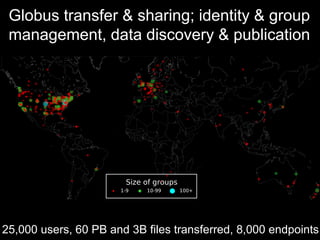

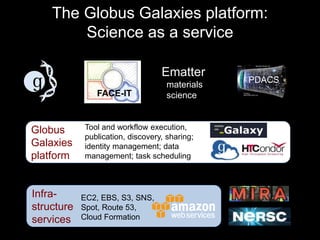

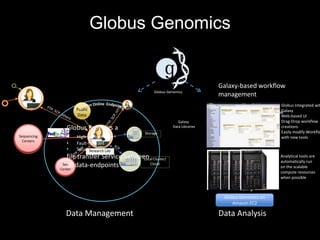

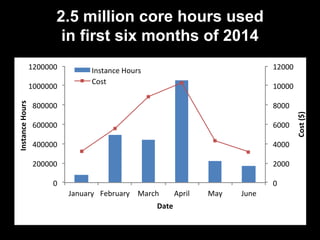

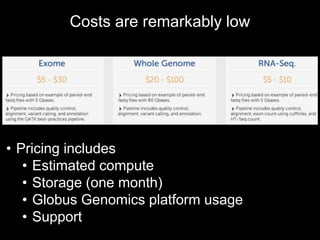

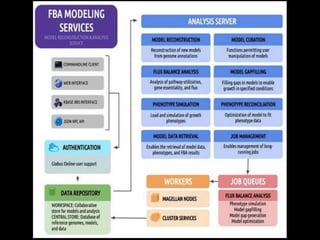

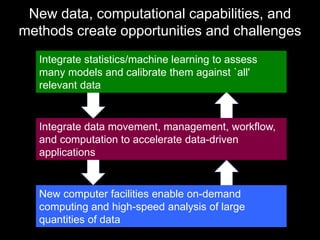

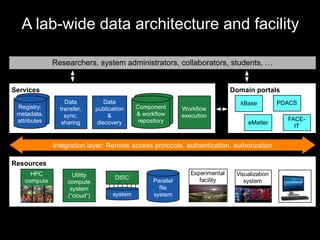

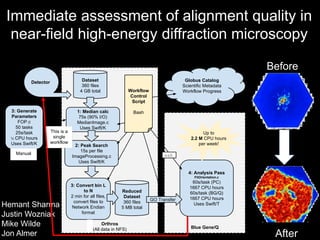

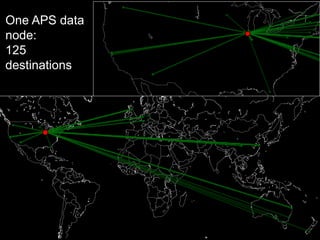

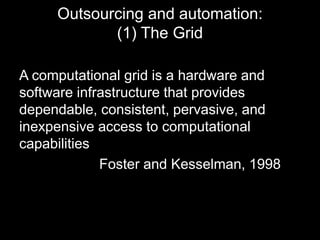

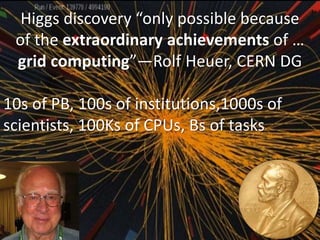

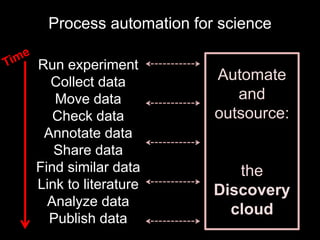

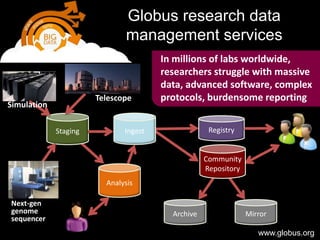

The document discusses the concept of the Discovery Cloud, arguing that automating and outsourcing research data management and computation can accelerate scientific discovery. It explores tools such as computational grids and cloud computing, with examples from several scientific fields of how they support data sharing, analysis, and workflow automation. It also highlights initiatives such as the Globus platform, which helps researchers manage and transfer large datasets and collaborate with one another.

![“I need to easily, quickly, and reliably mirror [portions of] my data to other places.”](https://image.slidesharecdn.com/lanlfostersept2014-140919132738-phpapp02/85/The-Discovery-Cloud-Accelerating-Science-via-Outsourcing-and-Automation-17-320.jpg)

Places the data may be mirrored between:

- Research Computing HPC Cluster
- Campus Home Filesystem
- Lab Server
- Desktop Workstation
- Personal Laptop
- XSEDE Resource
- Public Cloud
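To make the "easily, quickly, and reliably mirror" requirement concrete, here is a minimal local sketch of the underlying idea. It assumes a one-way copy between two directories on the same machine: a file is copied only if it is missing at the destination or its checksum differs, and each copy is verified afterward. The helpers `mirror` and `sha256_of` are hypothetical illustrations, not part of Globus or any other tool named in the deck. Managed services such as Globus perform transfers like this between remote endpoints, so treat this code as an explanation of the concept rather than a substitute for them.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def mirror(src: Path, dst: Path, pattern: str = "*") -> list[Path]:
    """Hypothetical one-way mirror of the files under src that match pattern.

    Copies only files that are missing from dst or whose checksum differs,
    verifies each copy, and returns the relative paths that were copied.
    """
    copied = []
    for src_file in sorted(src.rglob(pattern)):
        if not src_file.is_file():
            continue
        rel = src_file.relative_to(src)
        dst_file = dst / rel
        src_sum = sha256_of(src_file)
        if dst_file.exists() and sha256_of(dst_file) == src_sum:
            continue  # already up to date, so skip the transfer
        dst_file.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src_file, dst_file)  # copy contents and metadata
        if sha256_of(dst_file) != src_sum:
            raise OSError(f"checksum mismatch after copying {rel}")
        copied.append(rel)
    return copied


if __name__ == "__main__":
    # Demo: mirror only the CSV files ("portions of" the data).
    with tempfile.TemporaryDirectory() as a, tempfile.TemporaryDirectory() as b:
        src, dst = Path(a), Path(b)
        (src / "run1").mkdir()
        (src / "run1" / "data.csv").write_text("x,y\n1,2\n")
        (src / "notes.txt").write_text("not mirrored")
        print(mirror(src, dst, "*.csv"))  # copies run1/data.csv
        print(mirror(src, dst, "*.csv"))  # returns [] because nothing changed
```

Hashing both sides costs extra reads, but it lets repeated runs skip unchanged files and catches corrupted copies, which is the "reliably" part of the requirement. A cheaper variant could compare sizes and modification times first and fall back to checksums only when those differ.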