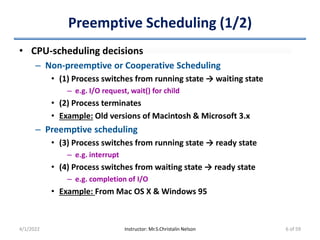

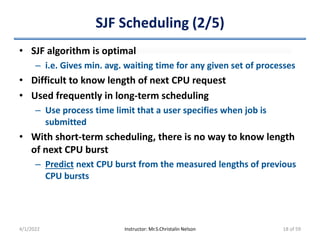

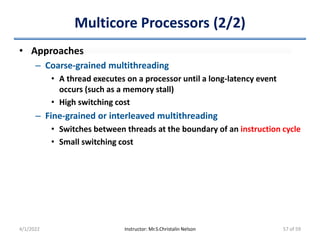

This document discusses CPU scheduling and the CPU-I/O burst cycle. It begins by explaining that a process alternates between CPU bursts and I/O bursts as it executes. It then covers several CPU scheduling algorithms: first-come, first-served (FCFS), shortest-job-first (SJF), priority scheduling, and round-robin (RR) scheduling. For each one, it gives examples and analyzes how the algorithm works and how it performs. It also contrasts preemptive with non-preemptive scheduling and discusses how the time quantum is chosen in round-robin scheduling.
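The sketch below compares the algorithms by computing per-process waiting times. It makes several simplifying assumptions. Every process arrives at time 0 and has a single CPU burst. SJF and priority scheduling are the non-preemptive variants. A lower priority number means higher priority, although conventions vary between systems. Context-switch overhead is ignored. The `Process` class, the function names, and the three-process workload are hypothetical illustrations and do not come from the original slides.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Process:
    name: str
    burst: int         # CPU burst length in time units
    priority: int = 0  # assumption: lower number = higher priority


def run_in_order(order):
    """Non-preemptive: run each process to completion in the given order.
    Assumes all processes arrive at time 0, so waiting time = start time."""
    waits, clock = {}, 0
    for p in order:
        waits[p.name] = clock
        clock += p.burst
    return waits


def fcfs(procs):
    # First-come, first-served: run in arrival (list) order.
    return run_in_order(procs)


def sjf(procs):
    # Non-preemptive SJF; sorted() is stable, so ties keep FCFS order.
    return run_in_order(sorted(procs, key=lambda p: p.burst))


def priority_nonpreemptive(procs):
    # Non-preemptive priority scheduling (lowest number runs first).
    return run_in_order(sorted(procs, key=lambda p: p.priority))


def round_robin(procs, quantum):
    # Each process runs for at most `quantum` units, then rejoins the tail.
    remaining = {p.name: p.burst for p in procs}
    finish, clock = {}, 0
    queue = deque(procs)
    while queue:
        p = queue.popleft()
        run = min(quantum, remaining[p.name])
        clock += run
        remaining[p.name] -= run
        if remaining[p.name] > 0:
            queue.append(p)
        else:
            finish[p.name] = clock
    # Waiting time = completion time - burst time (arrival is at time 0).
    return {p.name: finish[p.name] - p.burst for p in procs}


def average(waits):
    return sum(waits.values()) / len(waits)


# Hypothetical workload: one long CPU burst followed by two short ones.
procs = [
    Process("P1", 24, priority=2),
    Process("P2", 3, priority=3),
    Process("P3", 3, priority=1),
]

for label, waits in [
    ("FCFS", fcfs(procs)),
    ("SJF", sjf(procs)),
    ("Priority", priority_nonpreemptive(procs)),
    ("RR (q=4)", round_robin(procs, 4)),
]:
    print(f"{label:9} {waits} average={average(waits):.2f}")
```

On this workload the average waiting time is 17 under FCFS, 3 under SJF, 10 under priority scheduling, and about 5.67 under round-robin with a quantum of 4. The FCFS figure shows the convoy effect: the two short jobs wait behind the long one. If the quantum is larger than every burst, round-robin behaves exactly like FCFS. This is one reason the choice of quantum matters.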