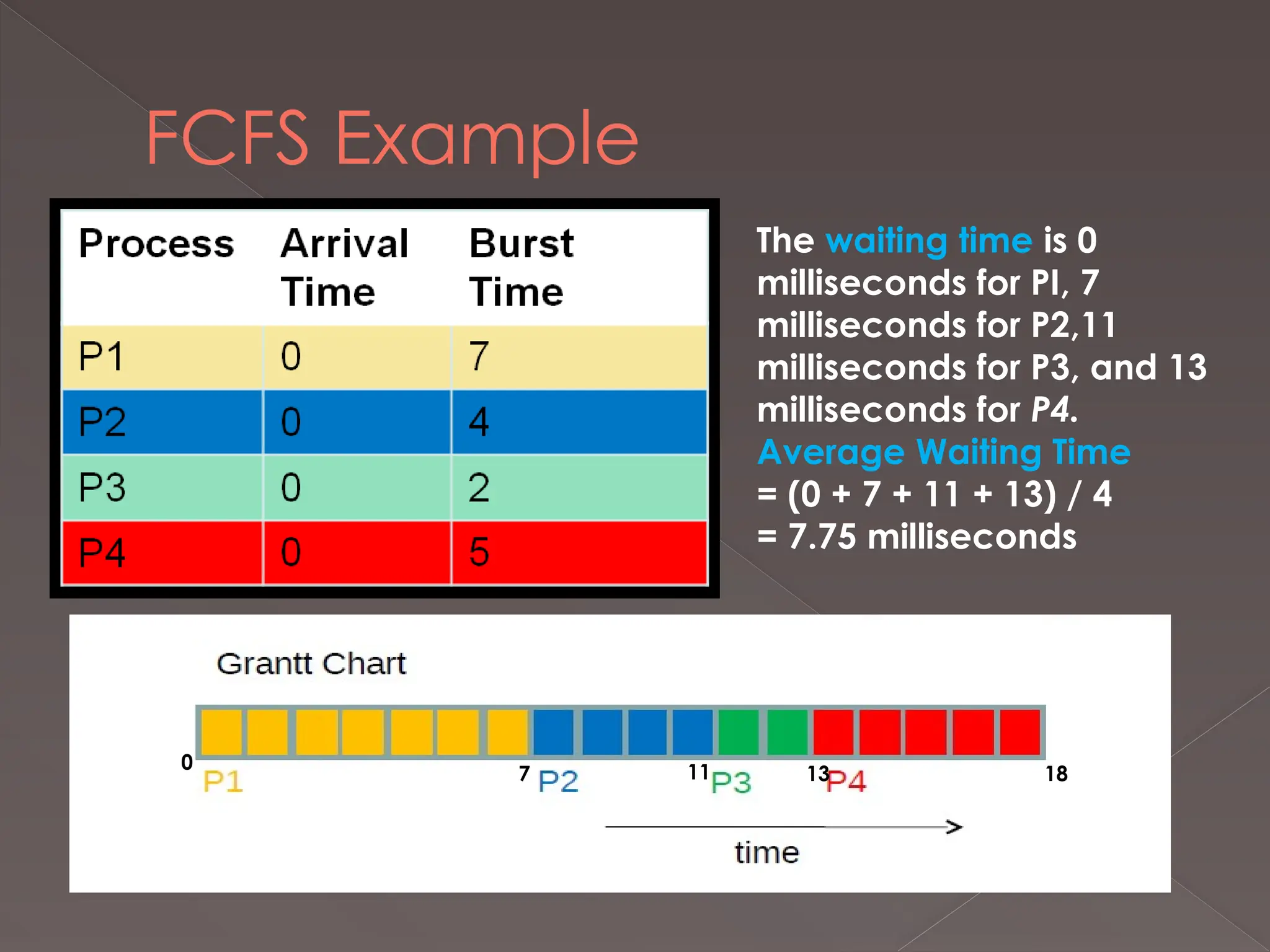

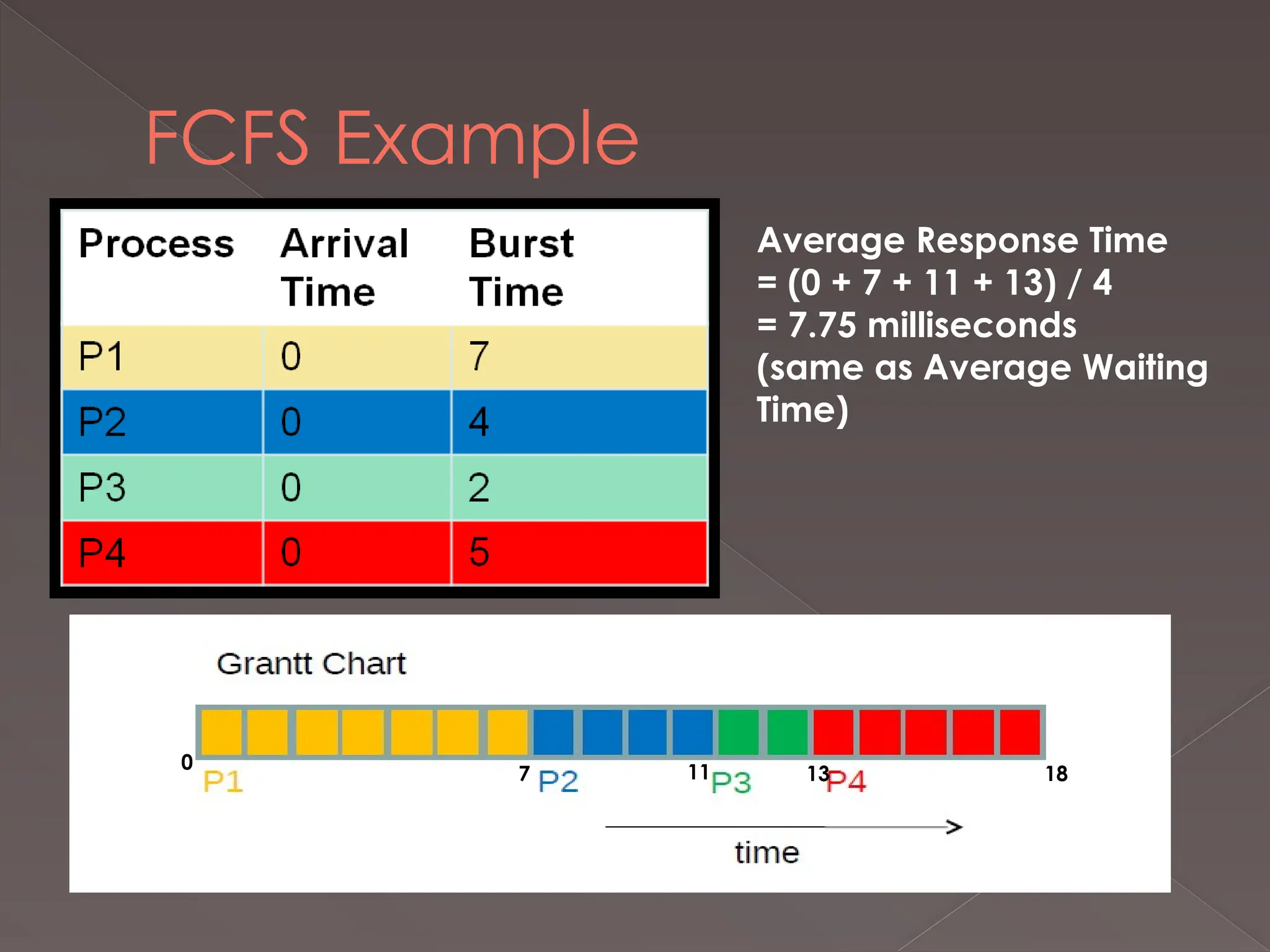

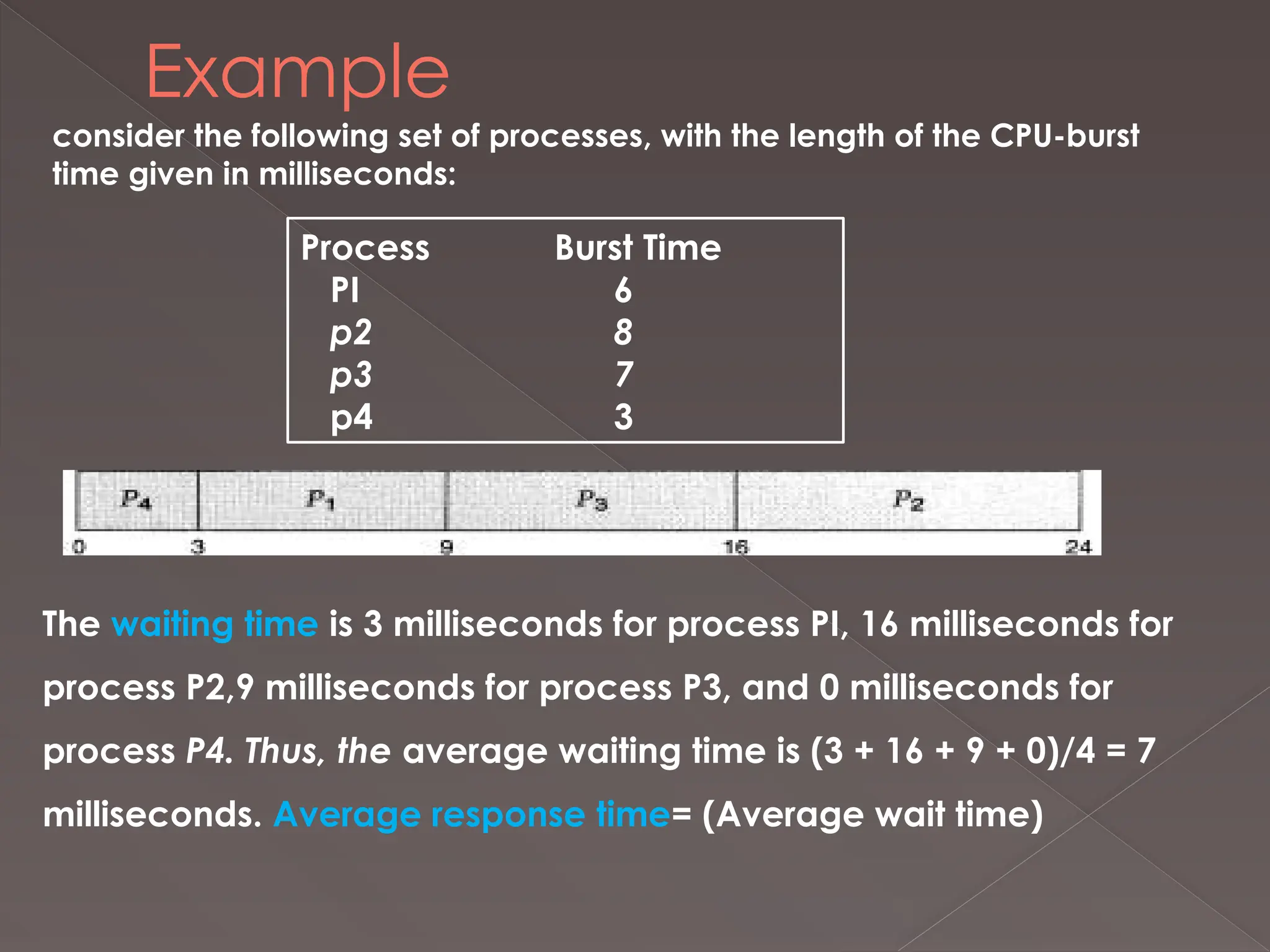

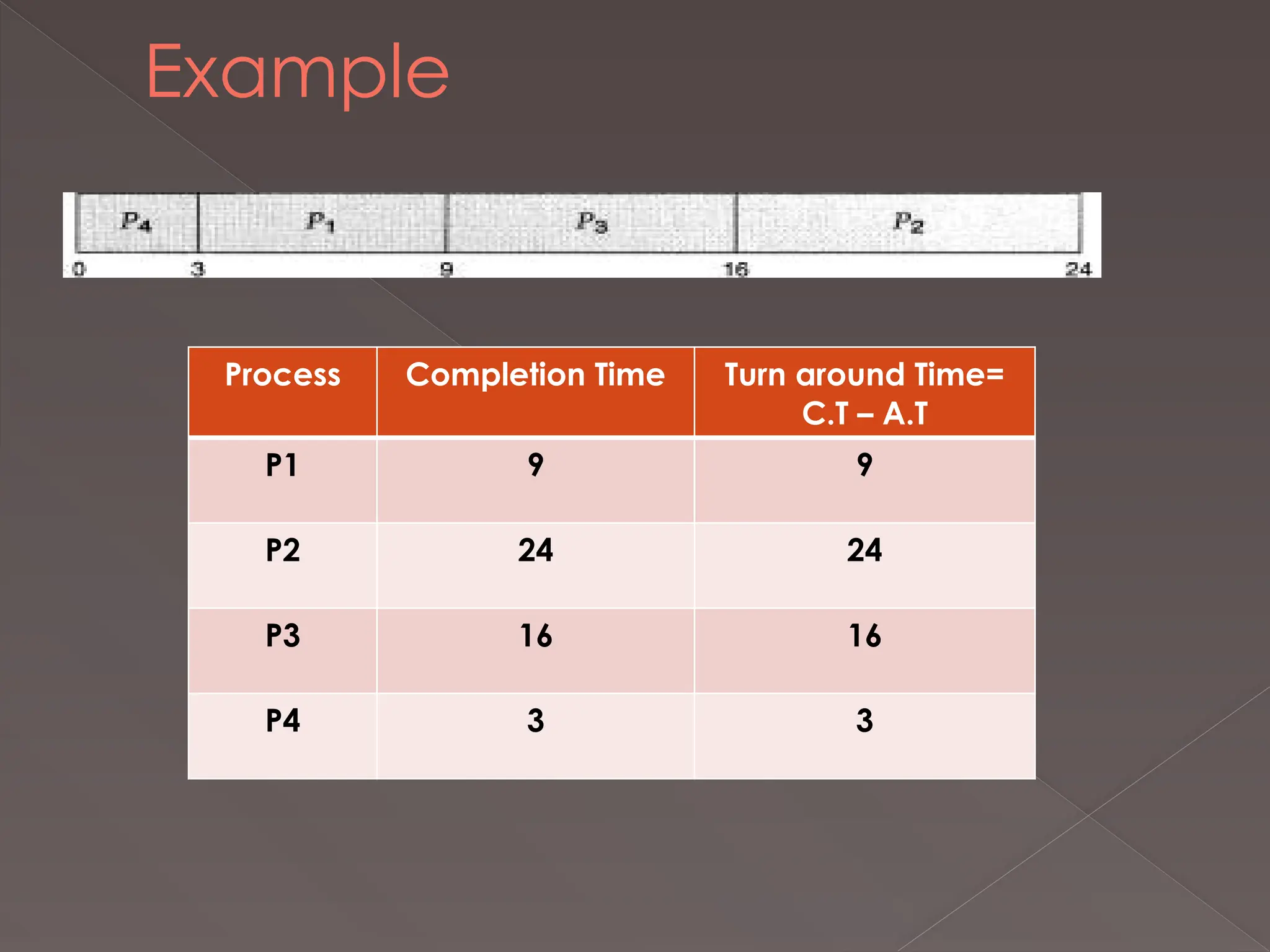

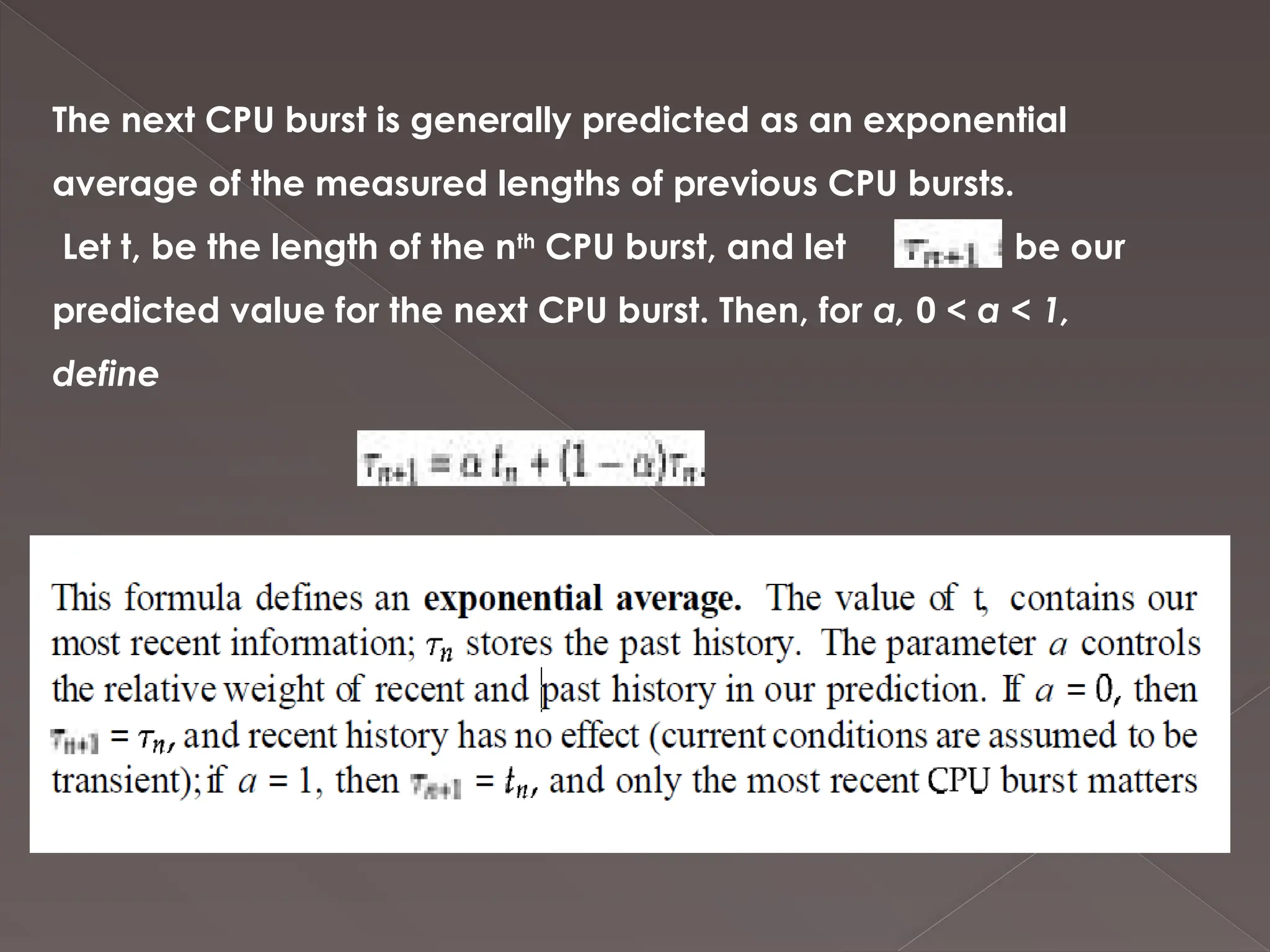

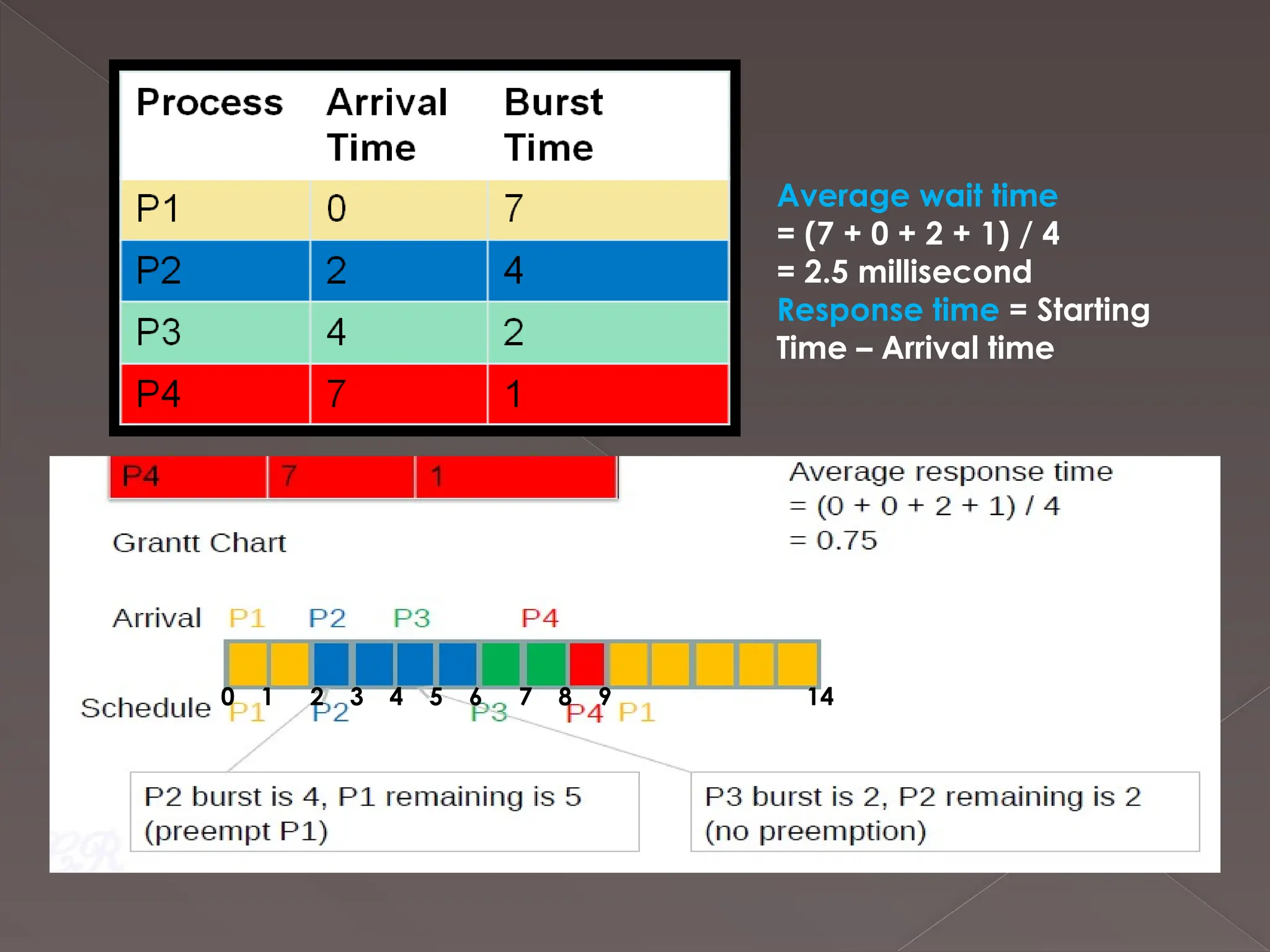

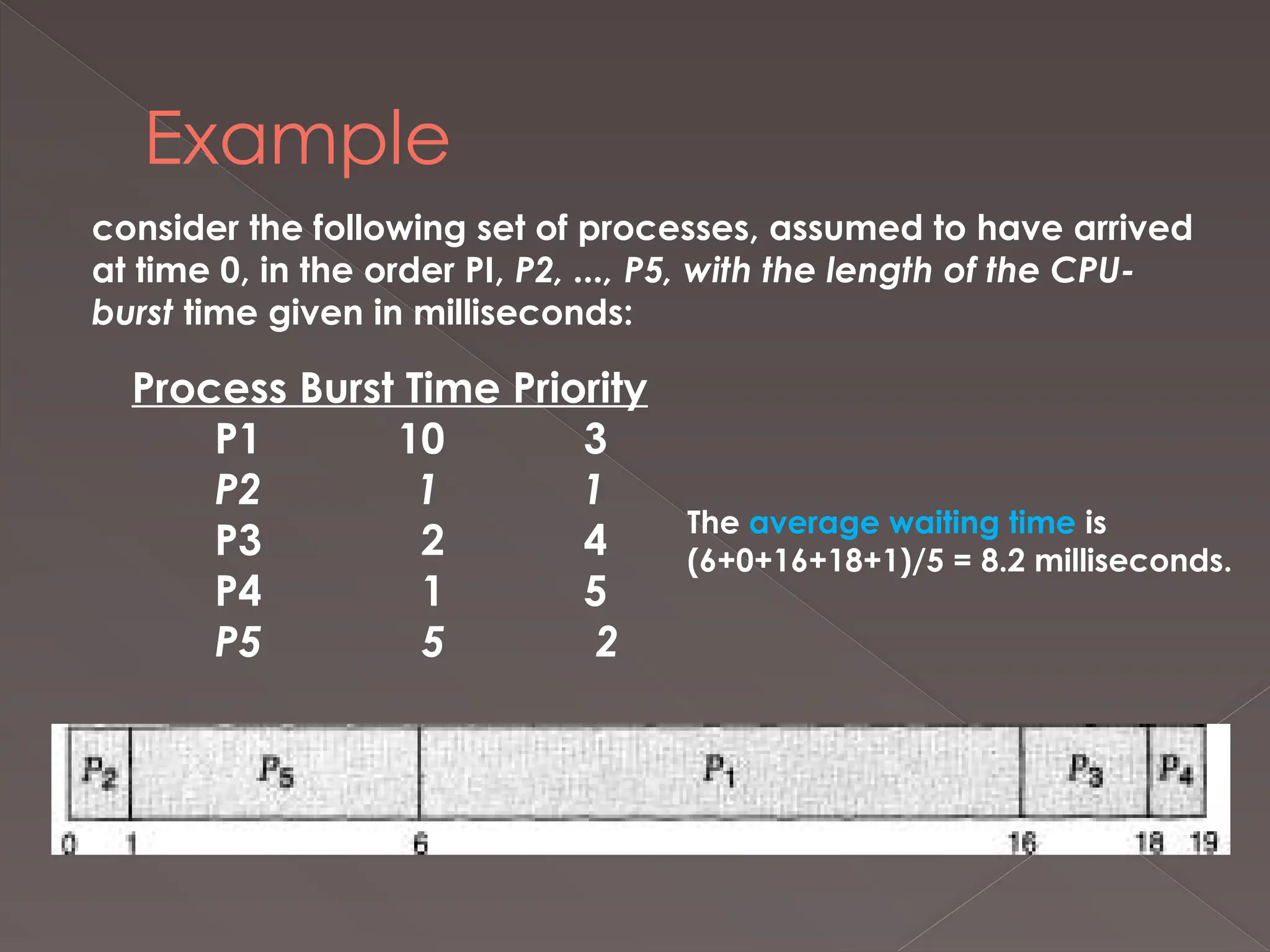

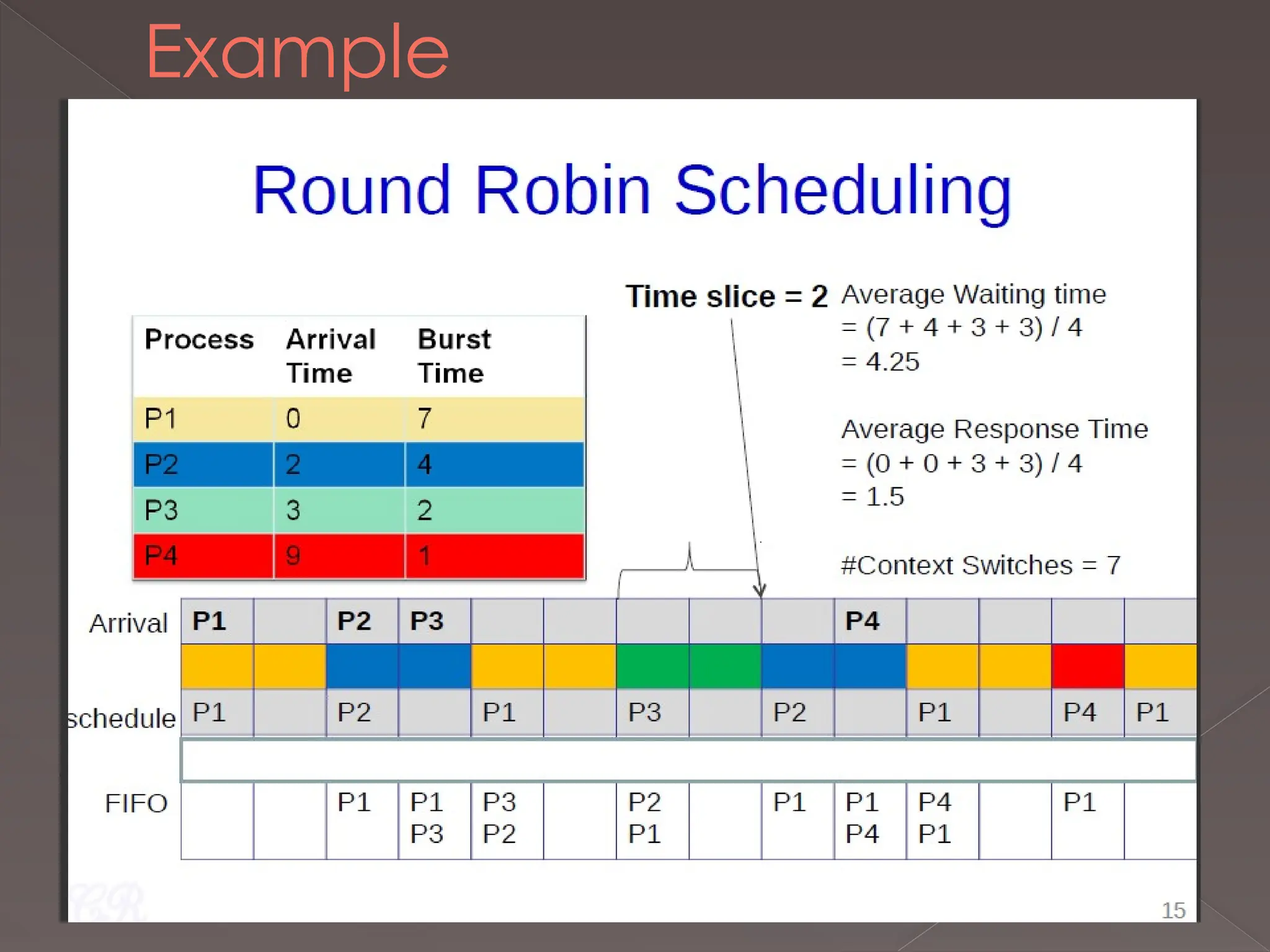

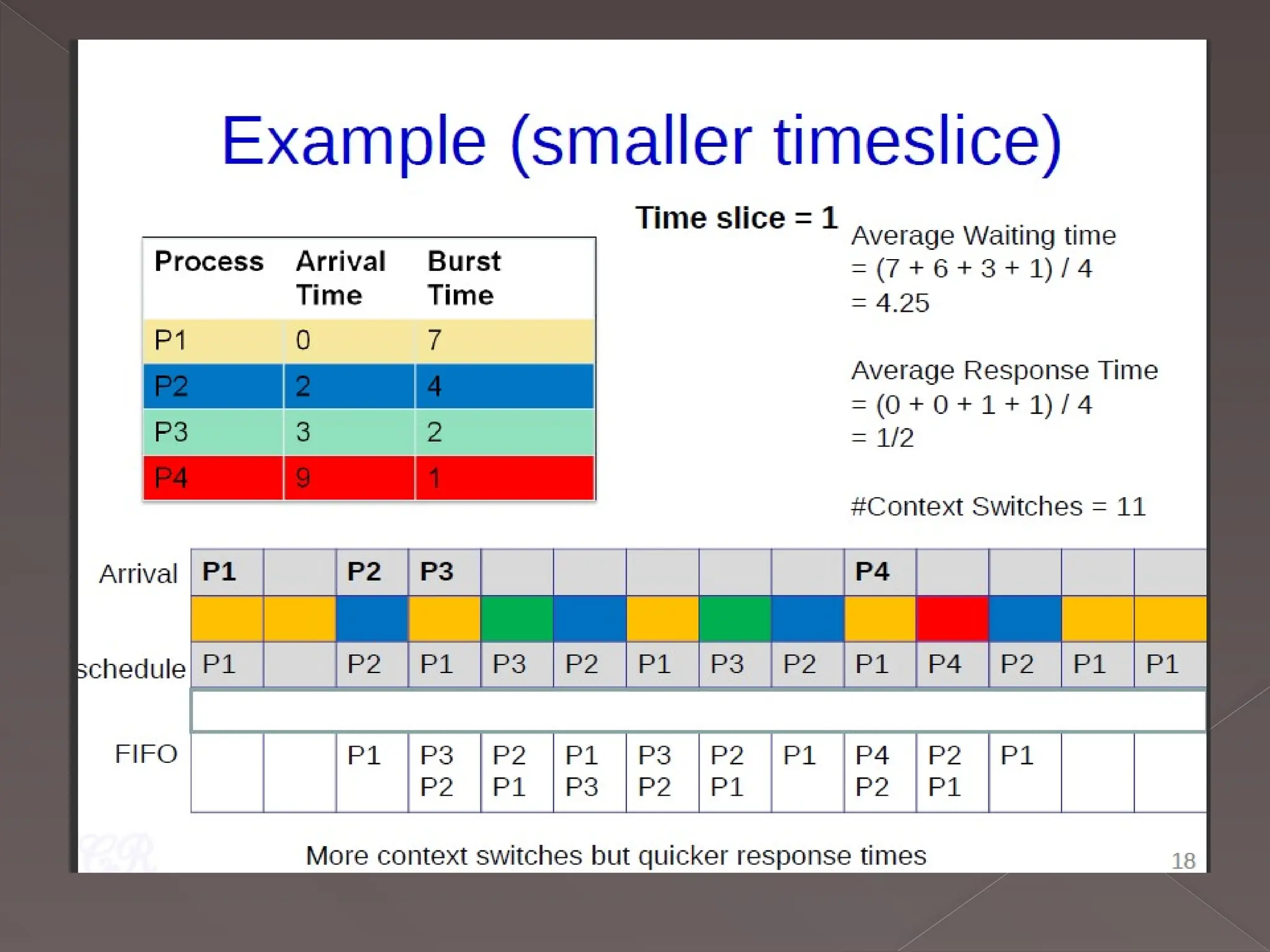

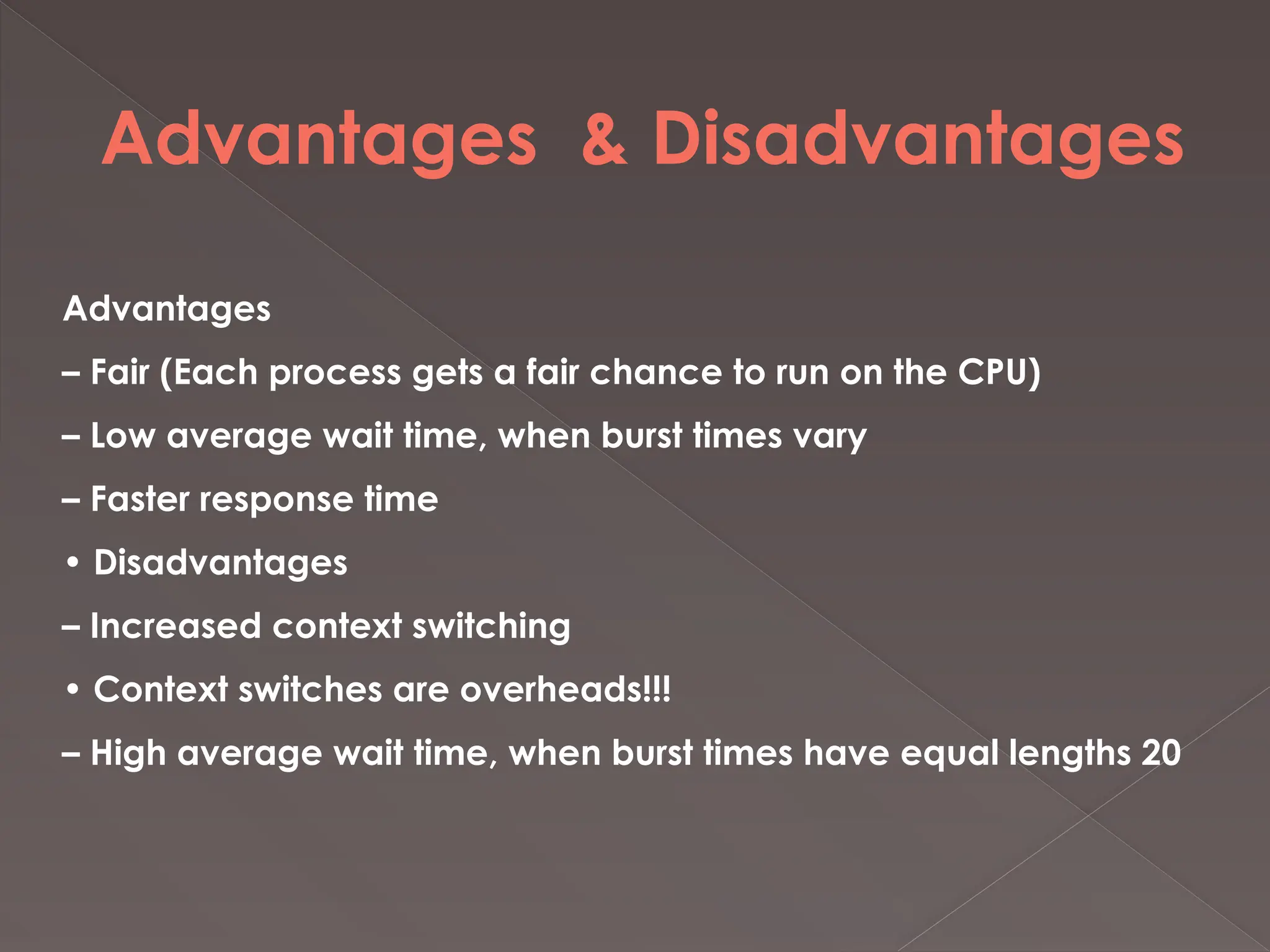

The document discusses CPU scheduling in operating systems, covering the CPU-I/O burst cycle and the role of the CPU scheduler in process management. It compares several scheduling algorithms, including First-Come, First-Served (FCFS), Shortest Job First (SJF), and Round Robin (RR), along with the advantages and disadvantages of each. It also covers preemptive versus non-preemptive scheduling and the problem of starvation in priority scheduling, with aging as one possible solution.
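
To make the comparison between FCFS, SJF, and RR concrete, here is a minimal Python sketch that computes per-process waiting times under each policy. It is an illustration under stated assumptions, not code from the slides: the function names (`fcfs_waiting`, `sjf_waiting`, `rr_waiting`), the burst values, and the quantum are all hypothetical, every process is assumed to arrive at time 0, SJF is the non-preemptive variant, and context-switch overhead is ignored.

```python
from collections import deque


def fcfs_waiting(bursts):
    """FCFS: run processes in list order; each waits for all earlier bursts."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)
        clock += burst
    return waits


def sjf_waiting(bursts):
    """Non-preemptive SJF: run the shortest burst first (ties keep list order)."""
    order = sorted(range(len(bursts)), key=lambda i: bursts[i])
    waits, clock = [0] * len(bursts), 0
    for i in order:
        waits[i] = clock
        clock += bursts[i]
    return waits


def rr_waiting(bursts, quantum):
    """Round Robin: each process runs for at most `quantum` units per turn."""
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    remaining = list(bursts)
    finish = [0] * len(bursts)
    queue = deque(range(len(bursts)))
    clock = 0
    while queue:
        i = queue.popleft()
        run = min(quantum, remaining[i])
        clock += run
        remaining[i] -= run
        if remaining[i] > 0:
            queue.append(i)      # not done yet: back to the tail of the ready queue
        else:
            finish[i] = clock    # record completion time
    # With arrival at time 0, waiting time = completion time - burst time.
    return [finish[i] - bursts[i] for i in range(len(bursts))]


def average(values):
    return sum(values) / len(values)


if __name__ == "__main__":
    bursts = [24, 3, 3]  # illustrative CPU burst lengths, assumed values
    print("FCFS:", fcfs_waiting(bursts), average(fcfs_waiting(bursts)))
    print("SJF: ", sjf_waiting(bursts), average(sjf_waiting(bursts)))
    print("RR:  ", rr_waiting(bursts, 4), average(rr_waiting(bursts, 4)))
```

With these assumed bursts, FCFS yields waiting times of 0, 24, and 27 (average 17), because the long job at the head of the queue delays the two short ones. SJF yields 6, 0, and 3 (average 3), and RR with a quantum of 4 yields 6, 4, and 7 (average about 5.67). The sketch does not model priorities, so it does not show starvation or aging.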